This was originally a Devember 2021 entry, but it will likely still be in progress for Devember 2022, so I’ll just call it a long term project…

Follow the Github repo for up-to-date progress/documentation.

So, first off, sorry for the late submission. December is usually too busy for me to participate in Devember, but this year I have found a good amount of time to work on my Ansible/OpenBSD project, so here it is!

Over the past couple years, I’ve used Edgemax, Unifi, pfSense and OPNsense router/firewall/gateways. They each have their pros and cons, but I never felt great about any of them. To date, I like Edgerouters the most because VyOS is pretty awesome, but unfortunately the options there are Ubiquiti Edgerouters (which seem doomed), paying big dollars for stable VyOS, or running bleeding edge VyOS and hoping for the best. Additionally, as cool as the networking features of VyOS are, at the end of the day, you’re running a kind of gimped Debian which isn’t flexible in the ways that you’d want a Linux to be.

So at some point, I finally summoned the courage to try plain old OpenBSD. Why not? It’s the best, right? Just kind of intimidating? It turns out I really like it. I miss the familiar configure commit save paradigm of VyOS (and many other network platforms), but I think the safety of that (preventing lockout) can be mitigated to a large extent with CARP and OOBM interfaces. For instance, if I were to make some major configuration changes to VyOS remotely, I would:

If I committed the changes and locked myself out, the scheduled reboot would occur within the service window and restore the old configuration. This is the tried and true way to configure routers and switches. Unfortunately, it is not built into general purpose operating systems. However, if you have redundant gateways which have access to each other’s IPMI ports, then you have a very different, but equally effective solution to the problem of lockout. If you fudge the config on one gateway, log into the other one, console into the failed gateway and fix the problem. A little awkward, but effective, and hopefully you aren’t locking yourself out more than once or twice a year.

As far as switches go, I kind of hate all of them. Used Arista might have been an option except it’s impossible to get software updates and even if you could, by the time they’re affordable, you’ve got maybe a year of updates left. This might change once SONiC switches show up in the used market, but for now, I’m going with Mikrotik because they are affordable, support PXE booting and there is a community Ansible module for RouterOS.

The goal is to use Vagrant, PXE and Ansible to onboard network config onto blank hardware. I want to make this process as unattended as possible. Realistically, I will need to swap an ethernet cable a couple times because only certain ports support PXE booting on most hardware, but once the systems are imaged initially, the entire process of configuring them should be unattended.

The network will be configured in a few simple Ansible inventory files written in yaml. There will be 4 types of inventory files.

group_vars/all.yml

This file defines default values for all networks. This includes some miscellany, but most notably, service definitions. The service definitions are essentially extensions of /etc/services. If you need to define a bundle of protocols and ports under one name, you can do it there. It is not necessary to redefine what is already in /etc/services, although you can if you want to. In many cases, I’ve noticed that the IANA assigns both UDP and TCP to a service that really only needs one or the other. If you really want to tighten up discrepancies like that, you could redefine the service here.

---
# vim: ts=2:sw=2:sts=2:et:ft=yaml
#
# Global Defaults
#
########################################################################

ansible_user: root
default_comment_prefix: "# vim: ts=8:sw=8:sts=8:noet\n#"
default_comment_postfix: >
  "#\n########################################################################"
default_comment_header: >
  "This file is managed by Ansible, changes may be overwritten."

# Define services here. If no definition is available, /etc/services is
# used.
srv:
  echo:
    icmp:
      type: echoreq
  ssh:
    tcp:
      - 22
  www:
    tcp:
      - 80
      - 443
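The lookup order described above (custom definitions first, then whatever /etc/services has) can be sketched in a few lines of Python. This is only an illustration of the idea, not how the role does it: `resolve_service` and the `SRV` dictionary are made-up names, and the ICMP `echo` entry is left out because the system services database only covers port-based services.

```python
import socket

# Custom definitions in the same shape as the srv dictionary above
# (hypothetical subset, for illustration only).
SRV = {
    "ssh": {"tcp": [22]},
    "www": {"tcp": [80, 443]},
}


def resolve_service(name, srv=SRV, lookup=socket.getservbyname):
    """Return {protocol: [ports]} for a service name.

    Custom definitions win. Otherwise each protocol is looked up in the
    system services database (normally /etc/services).
    """
    if name in srv:
        return srv[name]
    ports = {}
    for proto in ("tcp", "udp"):
        try:
            ports[proto] = [lookup(name, proto)]
        except OSError:
            # No entry for this service/protocol pair.
            continue
    return ports


if __name__ == "__main__":
    print(resolve_service("www"))     # comes from SRV
    print(resolve_service("domain"))  # falls back to the system database
```

The `lookup` parameter is only there so the fallback can be swapped out on a machine without a usable services database.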

inventory/fqdn/site.yml

Ex: inventory/campus1_example_com/site.yml

Technically, the filename here doesn’t matter.

This file defines the structure of the network and the relationship between hosts and subdomains. If you’ve written an Ansible inventory, it should look familiar. I write mine in yaml for consistency, but it could be written in toml or even json if you wanted.

---
# vim: ts=2:sw=2:sts=2:et:ft=yaml.ansible
#
# Build the overall structure of the site
#
########################################################################

all:
  children:
    office_example_com:
      children:
        gw:
          hosts:
            gw1:  #gw1.office.example.com
            gw2:  #gw2.office.example.com
        net:
          vars:
            subdom: net  #use subdomain net for this group
          children:
            sw:
              hosts:
                sw1:  #sw1.net.office.example.com
                sw2:  #sw2.net.office.example.com
                sw3:  #sw3.net.office.example.com
                sw4:  #sw4.net.office.example.com
                ap1:  #ap1.net.office.example.com
                ap2:  #ap2.net.office.example.com
        srv:
          vars:
            subdom: srv  #use subdomain srv for this group
          children:
            srv:
              hosts:
                www1:  #www1.srv.office.example.com
                ftp1:  #ftp1.srv.office.example.com
                nas1:  #nas1.srv.office.example.com
                nas2:  #nas2.srv.office.example.com

inventory/fqdn/group_vars/fqdn.yml

Ex: inventory/campus1_example_com/group_vars/campus1_example_com.yml

This file is kind of special because it only contains 2 dictionaries which can be rewritten by a handler. Variables here are scoped for the entire network including all subnets and addressing.

# This inventory file contains 2 important variables: site and net. It
# should be written in yaml and named after the fully qualified domain
# name of the site, replacing periods with underscores.
#
# Ex: site_example_com.yml
#
# Configuration here is shared across all hosts in a domain.
# The net role will rewrite this file, so variables declared outside
# of site and net may be overwritten and should be set elsewhere.
#
# Currently only IPv4 is supported, but the design should allow the
# addition of IPv6 later without a radical restructuring.
#
# site:
#
#   The site variable is a dictionary of identifiers and metadata that
#   define the physical or virtual location of the network. The site could
#   be an office, colocation or cloud that share an FQDN, LANs and
#   gateway(s).
#
#   description: Human-readable name of the site/location/campus
#
#     This should be self-explanatory. An example would be 'Headquarters',
#     'Northeast Campus' or similar. The only restriction is that it
#     should be contained to a single line. If the description contains
#     any quotes or special characters, ensure they are quoted or escaped
#     properly.
#
#   etld: example.com
#
#     Storing the "effective" top-level domain is generally more useful
#     than storing both tld and sld/2ld separately since in a practical
#     sense, the eTLD is atomic for devop/sysadmin operations.
#
#   id: 0-255
#
#     The site ID must be unique to the site and in /24 LAN addressing
#     corresponds to the 2nd octet. This not only allows us to easily
#     distinguish addresses across sites, but also allows for tunneling
#     without risk of address conflicts. The LAN addressing schema is
#     10.site.subnet.host.
#
#   name: URL-compatible abbreviation
#
#     The site name is used to complete the fully qualified domain name
#     (FQDN) for the site.
#
# net:
#
#   The net variable is a dictionary of semi-complex dictionaries that
#   define the networks in the site. This includes addressing and routes,
#   but also MTU which is generally an interface configuration but should
#   be uniform across any given subnet.
#
#   net_name:
#
#     This dictionary key should be a URL-compliant abbreviation of the
#     subnet or public network. 'wan', 'admin', and 'sales' are some
#     examples.
#
#     addr:
#       - 192.0.2.2
#       - 192.0.2.3
#       - 192.0.2.4
#
#       Optionally, include a list of usable addresses. This is useful
#       when WAN allocations may not correspond to the entire usable
#       address space in a subnet or if we want to reserve some addresses.
#       Note that when the net role selects an IP in a public range, it
#       will eliminate any candidates that respond to echo requests (ping)
#       to avoid address conflicts. If the addr list is not provided, the
#       usable IP range is determined based on randomized private /24
#       subnet, or if the network has routes defined, the network is
#       determined based on the hop address. For public-facing networks,
#       a subnet mask must also be provided (see below).
#
#     client:
#       - wan
#       - echo
#       - ssh
#
#       The client list enumerates services that will be made available to
#       this subnet. Client networks are granted access to services
#       provided by hosts as defined by the service list. Servers are
#       defined in their host_vars file (per interface) and service
#       definitions are in the srv list that can be defined in any user-
#       editable inventory file, but are recommended to be set globally
#       via group_vars/all.yml in the root playbook directory.
#
#     description: Human-readable name of the subnet
#
#       Similar to the site description, give the network a short
#       description, limited to one line.
#
#     id: 0-255
#
#       The subnet ID determines the 3rd octet of private subnet
#       addresses, the VLAN VID and the CARP VHID. Each subnet's ID must
#       be unique within a site. Subnet IDs are randomly generated (2-255)
#       if they are not provided. In general, the only reason to provide
#       one is to be compatible with existing VLAN infrastructure (for
#       instance if the public network is delivered via VLAN) or to
#       indicate that a network should not be configured as a VLAN
#       (id: 1). ID 0 is viable but reserved for the rescue network.
#
#     mask: 24
#
#       Set the subnet mask for public-facing networks. If the subnet is
#       not provided in cidr format, the net role will convert it to cidr.
#       The default is 24 and this should not be changed for LANs.
#
#     mtu:
#
#       Set this to change the default MTU which is determined by the OS,
#       but is generally 1500. This will be applied to all physical
#       interfaces participating in the subnet across all hosts and
#       switches. If an interface participates in multiple subnets, the
#       highest configured MTU is used. MTU is usually only defined to
#       configure jumbo frames (mtu: 9000).
#
#     promiscuous: false
#
#       This determines if layer2 forwarding will be allowed within the
#       subnet across switch, software bridge and IaaS. This is often
#       referred to as "isolation" on switch platforms and can have other
#       names. If layer 3+ filtering should not be applied to intra-subnet
#       traffic, set this to true. It is false by default.
#
#     route:
#       - dest: 0.0.0.0/0
#         hop:
#           - 192.0.2.1
#
#       Set one or more routes with one or more next-hops. The dest
#       field of a default gateway may be set as 0.0.0.0/0, 0/0 or
#       default. Note that services defined in the client list are granted
#       to all routable networks within a given subnet.
#
#     rx:
#       kbps:
#       mbps:
#     tx:
#       kbps:
#       mbps:
#
#       Optionally, set bandwidth restrictions on an interface. Either
#       kilobits-per-second or megabits-per-second may be provided.
#       Currently, only tx is used for FQ-CoDel, but rx can be set for
#       future use. These are expected to only be set for public networks,
#       but can be set for any network (FQ-CoDel will be configured
#       whenever a tx value is defined).
#
# Note: rescue is a special network for use as a dedicated management
# port on the gateway hardware. The ID is always 0 and it is given
# administrative access to the gateway. A physical interface associated
# with the rescue network should be physically secure and unplugged when
# not in use.
#
########################################################################
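To make the 10.site.subnet.host schema and the random subnet IDs a bit more concrete, here is a small Python sketch. It is not taken from the net role; `lan_network` and `pick_subnet_id` are hypothetical helpers that only assume what the comments above state: the site ID is the 2nd octet, the subnet ID is the 3rd octet, LANs are /24, and generated subnet IDs fall in 2-255.

```python
import ipaddress
import random


def lan_network(site_id, subnet_id):
    """Return the /24 for a subnet under the 10.site.subnet.host schema."""
    for value in (site_id, subnet_id):
        if not 0 <= value <= 255:
            raise ValueError(f"ID out of range: {value}")
    return ipaddress.ip_network(f"10.{site_id}.{subnet_id}.0/24")


def pick_subnet_id(used, rng=random):
    """Pick a random unused subnet ID from 2-255.

    0 (rescue network) and 1 (no VLAN) have special meanings, so they are
    never generated.
    """
    free = [i for i in range(2, 256) if i not in used]
    if not free:
        raise ValueError("no free subnet IDs left")
    return rng.choice(free)


if __name__ == "__main__":
    net = lan_network(12, 202)   # site 12, subnet 202 (example values)
    print(net)                   # 10.12.202.0/24
    print(net[1])                # 10.12.202.1, the first host address
    print(pick_subnet_id({202}))
```

Because the site ID is baked into the second octet, two sites can never hand out the same LAN address, which is what makes tunneling between them safe.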

inventory/fqdn/host_vars/short-hostname.yml

Ex: inventory/campus1_example_com/host_vars/gw1.yml

This file is also rewritten by a handler. It defines some host-specific Ansible variables as well as a list of network interfaces. I am still working out how I’d like to implement aggregated LACP and failover connections, but I’m feeling confident in the rest of the config.

# This inventory file contains Ansible's user, Python interpreter and
# privilege escalation (become) variables as well as interface
# definitions for the host. It should be written in yaml format and
# named after the short hostname of the host.
#
# Ex: host.yml
#
# Variables declared outside of ansible_user,
# ansible_python_interpreter, ansible_become_method and iface may be
# overwritten and should be set elsewhere.
#
# ansible_user:
#
#   The user ansible uses to login to the host. Set a default in
#   group_vars/all.yml. The recommended default is root, unless using an
#   environment like vagrant which shares a common administrator account.
#   If the user is root, the ansible_user role will generate a randomized
#   administrator account, migrate the ssh key and record the name here.
#
# ansible_python_interpreter:
#
#   If this is not set, the ansible_dependencies role will write it here
#   after testing it. Python 3 is preferred to Python 2. Note that while
#   Python 3 is available on CentOS/RHEL 7, it is missing libraries
#   needed by Ansible's SELinux module and possibly others so in those
#   cases, Python 2 should be used.
#
# ansible_become_method:
#
#   If not set and doas is available, it is preferred. Otherwise sudo is
#   used. The value is written here by the ansible_user role.
#
# iface:
#
#   The iface variable is a list of dictionaries that define interface
#   configuration on a host. Only variables that correspond to the host
#   are set here. Most network-related configuration is set in the
#   fqdn.yml file in the inventory/fqdn/group_vars directory.
#
#   - bridge: admin
#
#       Optionally, configure the interface in a software bridge on a
#       given network. The value should correspond to a key in the net
#       dictionary. All interfaces with the same name will be bridged
#       together with the specified network's VLAN. The bridge interface
#       number is determined by the id field in the specified network
#       (same as the VLAN VID). So in the example of admin above, if
#       network admin has an ID of 202, the bridge will be bridge202 and
#       will at least include this interface and vlan202.
#
#     dev: em0
#
#       The name of the device in the OS. Examples include: em10 ix3 re0.
#       Either dev or mac_addr must be defined for any given interface. If
#       mac_addr is defined, the net role will determine the device based
#       on dmesg and write it here.
#
#     description: Human-readable name or brief description
#
#       This can be set in the net dictionary but we can override it for
#       a specific physical interface here.
#
#     mac_addr: 00:00:00:00:00:00
#
#       The hardware address of the interface. This is used to identify
#       the interface via dmesg. It is not applied as a configuration.
#       Either mac_addr or dev must be set.
#
#     net: sales
#
#       Optionally, set the net variable to assign a network directly to
#       the physical interface. This value references the keys in the net
#       dictionary defined in fqdn.yml. Addressing, routes, mtu and other
#       network configuration are pulled from there. When the host is a
#       switch, this indicates the primary (untagged) VLAN.
#
#     random_mac: true
#
#       Set this to false to advertise the hardware MAC address on the
#       network instead of a randomized one. Some NICs have issues with
#       changing their MAC address. The default is true.
#
#     vlan:
#       - admin
#       - sales
#
#       Set a list of vlans to configure on this interface. Names should
#       correspond to keys in the net dictionary.
#
# Note: CARP interfaces are created automatically for each net and vlan
# configured on an interface. CARP device names and VHIDs correspond to
# network IDs. If only one address is available (in the case of a public
# network), it is given to the CARP interface. CARP and pfsync are
# automatically negotiated when multiple gateways are defined in
# inventory/fqdn/site.yml.
#
########################################################################
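The naming rules in the comments above (vlan, bridge and CARP devices all numbered after the network ID) are easy to lose track of, so here is a rough Python sketch of how one iface entry could expand into device names. This is a guess at the mapping based purely on those comments, not the net role's actual logic; `derived_devices` is a hypothetical helper, and the `sales` ID of 30 is invented for the example.

```python
def derived_devices(iface, net):
    """Return the virtual device names implied by one iface entry.

    iface is one item from a host's iface list. net is the site's net
    dictionary, keyed by network name, where each value has an 'id'.
    """
    devices = []
    # The untagged network, if any, only needs a CARP device.
    if "net" in iface:
        devices.append(f"carp{net[iface['net']]['id']}")
    # Each tagged VLAN gets a vlan device and a matching CARP device.
    for name in iface.get("vlan", []):
        net_id = net[name]["id"]
        devices.extend([f"vlan{net_id}", f"carp{net_id}"])
    # A bridge is numbered after the network it joins.
    if "bridge" in iface:
        devices.append(f"bridge{net[iface['bridge']]['id']}")
    return devices


if __name__ == "__main__":
    net = {"admin": {"id": 202}, "sales": {"id": 30}}
    iface = {"dev": "em0", "bridge": "admin", "vlan": ["admin", "sales"]}
    print(derived_devices(iface, net))
```

With the admin network at ID 202, this yields vlan202, carp202 and bridge202, matching the bridge202/vlan202 example in the comments.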

Deploy ssh key to switch and perform minimal initial configuration.

Deploy ssh key to gateway and perform minimal initial configuration.

Each of these represents an Ansible role.

(… dev based on mac_addr for instance).

(… raw and script modules only).

(… restart ssh handler is a dependency of the next role).

So far I have at least some work on 1–15. 5 was definitely the most complicated, although I expect 16 might be worse if I embrace dnssec.

Currently, I have all of this in one place, but I think I should split each role into its own git repo and aim towards contributing them to Ansible Galaxy. Once I have actual code (if you can call it that) up, I’ll begin posting it here.

Also for reference, here is a diagram of a deployment I’ll use this on. Eventually I’d want to use Ansible to provision all of the servers as well:

So, I was sick the past few days. I figured it was covid, but after 2 negative tests, it seems I succumbed to a plain cold. Anyway, I am feeling better, so back to work!

Initial work on this has been centered on restructuring the roles I have already written into an Ansible collection with each role as its own git repo. While doing this, I have also changed the inventory structure a bit.

With the previous inventory, there was a looming problem. Eventually, I wanted to be able to configure tunnels between sites using Ansible. However, the way the inventory was structured (each site in a folder named after its fqdn), doing this would require loading multiple inventories. Additionally, if I wanted to get a bird’s eye view of all sites, I’d need to load every inventory. This isn’t ideal, so instead of having each inventory in a dedicated fqdn directory, I am putting them all in inventory/ as fqdn.yml.

I hope to begin pushing WIP commits to github soon so I can post something tangible here. I will also edit OP to reflect the changes as they’re implemented.

So, keeping with the late theme, I have some initial commits up. I haven’t added anything to Ansible Galaxy yet because it’s all still very early alpha status, but progress has been steady.

A lot of things changed since my OP here, but then again I think most of it is the same. Anyway, here’s the repo:

Currently only 3 roles are up: inventory, host and network. Network in particular was a lot of work creating equivalent configurations across networkd, Network Manager and OpenBSD. The next one will be firewall which is currently planned to work across pf, firewalld and ufw. I anticipate it to also be pretty labor-intensive. After that, I think routing will be next which will be simpler thankfully.

Realistically, this will likely still be in active development for Devember 2022, so hopefully I’m so late I’ll come around to being on time again.

I want to use the service definitions provided in /etc/services for the firewall role, but it isn’t formatted well for easy conversion to json or yaml (I didn’t see a jc flag for it). But nbd, we can go straight to the source.

The xml version did not convert well with jc, so I am using the csv version. Also, there appears to be an issue with the url plugin for lookup in Ansible. If I try to pull directly from the IANA site that way, I get a Python crash.

lookup('url', 'https://www.iana.org/assignments/service-names-port-numbers/service-names-port-numbers.csv')

results in

objc[86608]: +[__NSCFConstantString initialize] may have been in progress in another thread when fork() was called.

objc[86608]: +[__NSCFConstantString initialize] may have been in progress in another thread when fork() was called. We cannot safely call it or ignore it in the fork() child process. Crashing instead. Set a breakpoint on objc_initializeAfterForkError to debug.

ERROR! A worker was found in a dead state

In my Ansible inventory, I have a srv_def variable for custom service definitions, but I want to fall back to commonly accepted ones:

# Service Definitions
#
# Define services here. If no definition is available, /etc/services is
# used. The IANA service definitions tend to assign both UDP and TCP to
# services that really only need one or the other. Redefining them here
# can be helpful in correcting those generalizations. An example might
# be to distinguish HTTP (TCP port 80) from QUIC (UDP port 80), or to
# simply avoid needlessly opening TCP port 53 when DNS queries are only
# being served on UDP port 53.
#
# Example:
#
# srv_def:
#   dhcp:
#     udp:
#       - 67
#   ping:
#     icmp:
#       - 8
#   www:
#     tcp:
#       - 80
#       - 443

So, I’m currently converting the IANA csv to my own simplified yaml format. Initially, the idea was that I would have this in the role, but it is way too slow for that. Going to generate it once and use the static result, but nonetheless, I did implement it in a single, very long, very inefficient set_fact task.

- set_fact:
    srv_def_localhost: >
      {{ srv_def_localhost
         | default({})
         | combine( { srv_name_item:
                      { 'tcp':
                          iana_srv
                          | selectattr( 'Service Name',
                                        '==',
                                        srv_name_item )
                          | selectattr( 'Transport Protocol',
                                        '==',
                                        'tcp' )
                          | map(attribute='Port Number'),
                        'udp':
                          iana_srv
                          | selectattr( 'Service Name',
                                        '==',
                                        srv_name_item )
                          | selectattr( 'Transport Protocol',
                                        '==',
                                        'udp' )
                          | map(attribute='Port Number')
                      }
                    } ) }}
  loop: "{{ iana_srv
            | map(attribute='Service Name')
            | unique }}"
  loop_control:
    loop_var: srv_name_item
  vars:
    iana_srv: "{{ lookup('file', '~/Desktop/service-names-port-numbers.csv')
                  | community.general.jc('csv')
                  | selectattr('Service Name')
                  | selectattr('Port Number') }}"

It’s still running so I don’t actually know if it’s successful yet, but tests with just a single service worked fine, so I am optimistic.

Also, sadly:

![]()

lol, I’m swapping hard

In the sober light of day, I have reduced the previous set_fact to this, which is exponentially faster but pretty unreadable.

- set_fact:
    srv_def_localhost: >
      {{ srv_def_localhost
         | default({})
         | combine( srv_def_localhost[srv_item['Service Name']]
                    | default({})
                    | combine( { srv_item['Transport Protocol']:
                                 srv_def_localhost[srv_item['Service Name']][srv_item['Transport Protocol']]
                                 | default([])
                                 | union([srv_item['Port Number']])
                               } ) ) }}
  loop: "{{ lookup('file', '~/Desktop/service-names-port-numbers.csv')
            | community.general.jc('csv')
            | selectattr('Service Name')
            | selectattr('Transport Protocol')
            | selectattr('Port Number') }}"
  loop_control:
    loop_var: srv_item

It will still take maybe hours to run, but the other one was going to take a day or days. This avoids searching the entire list twice for each protocol and handles everything in one pass. It just requires embedded combines and a union along with a lot of keys in keys which I think is the best way to do it, but is difficult to parse visually.

RIP

Still work to do I guess.

fatal: [localhost]: FAILED! => {"msg": "failed to combine variables, expected dicts but got a 'builtin_function_or_method' and a 'dict': \n<built-in method copy of dict object at 0x1113338c0>\n{\"tcp\": [\"8445\"]}"}

Final form?

- hosts:
    - localhost
  gather_facts: no
  tasks:

    - uri:
        url: "https://www.iana.org\
              /assignments\
              /service-names-port-numbers\
              /service-names-port-numbers.csv"
        return_content: yes
      register: iana_port_reg

    - set_fact:
        srv_def_localhost: >
          {{ srv_def_localhost
             | default({})
             | combine( { srv_item['Service Name']:
                          srv_def_localhost[srv_item['Service Name']]
                          | default({})
                          | combine( { srv_item['Transport Protocol']:
                                       srv_def_localhost[
                                         srv_item['Service Name']
                                       ][
                                         srv_item['Transport Protocol']
                                       ]
                                       | default([])
                                       | union([srv_item['Port Number']])
                                     } )
                        } ) }}
      loop: "{{ iana_port_reg['content']
                | community.general.jc('csv')
                | selectattr('Service Name', '!=', '')
                | rejectattr('Service Name', 'callable')
                | rejectattr('Service Name', 'in', 'copy')
                | selectattr('Transport Protocol', 'in', 'tcp,udp')
                | selectattr('Port Number', 'gt', '0') }}"
      loop_control:
        loop_var: srv_item

    - debug:
        var: srv_def_localhost

Sidenote: the issue with url lookup in Ansible is a macOS thing, so I’ll use the uri module instead.
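For comparison, the same one-pass grouping is only a few lines in plain Python, which could live in a throwaway script or a custom filter plugin instead of a set_fact loop. Treat this as a sketch: `build_srv_def` is a made-up name, the sample rows are hand-written rather than copied from the real file (the descriptions are placeholders), and ports are kept as strings because the registry also contains ranges.

```python
import csv
import io

# Hand-written sample using the column names from the IANA csv.
SAMPLE = """Service Name,Port Number,Transport Protocol,Description
ssh,22,tcp,placeholder
domain,53,tcp,placeholder
domain,53,udp,placeholder
,4,tcp,Unassigned
copy,8445,tcp,placeholder
"""


def build_srv_def(csv_text):
    """Group rows into {service: {protocol: [ports]}} in a single pass."""
    srv_def = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = row["Service Name"]
        proto = row["Transport Protocol"]
        port = row["Port Number"]
        # Skip unnamed rows and anything that is not tcp/udp.
        if not name or proto not in ("tcp", "udp") or not port:
            continue
        # setdefault plays the role of the nested default()/combine() calls.
        ports = srv_def.setdefault(name, {}).setdefault(proto, [])
        if port not in ports:
            ports.append(port)
    return srv_def


if __name__ == "__main__":
    for name, protos in build_srv_def(SAMPLE).items():
        print(name, protos)
```

A side benefit is that a service named copy is just another dictionary key here. The combine error earlier looks like Jinja2 falling back to attribute lookup when the key was missing, so `srv_def_localhost['copy']` resolved to the dict's own copy method instead of being undefined.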

It’s done:

Didn’t expect that to take all weekend… going to get back to the firewall role now.

Who thought this would be so complicated?

# No timezone configured for the site. Attempt to use timezone of
# localhost
- name: Get list of valid timezones from localhost
  ansible.builtin.find:
    paths: /usr/share/zoneinfo
    file_type: file
    excludes: posix*
    recurse: true
    depth: 2
    get_checksum: true
  register: valid_tz_reg
  delegate_to: 127.0.0.1

- name: Examine /etc/localtime on localhost
  ansible.builtin.stat:
    path: /etc/localtime
  register: localtime_reg
  delegate_to: 127.0.0.1

# /etc/localtime may either be a link or a file. If it is a link, we
# use the link source to determine the timezone, if it isn't a link
# (FreeBSD), then use the first checksum match in /usr/share/zoneinfo.
# Additionally, not all systems support UTC/UCT as a timezone since it is
# technically a standard and the associated timezone is GMT, so we
# convert any instance of UTC/UCT to GMT.
#
# The variable names below match the registers above (valid_tz_reg,
# localtime_reg), and the stat module's results are read from its
# 'stat' key.
- name: >
    Set the timezone for {{site['name']}}.{{site['etld']}} to {{tz_var}}
  ansible.builtin.set_fact:
    site: "{{ site | combine({'tz': tz_var}) }}"
  vars:
    tz_var: "{{ localtime_reg['stat']['lnk_source']
                | default( valid_tz_reg['files']
                           | selectattr( 'checksum',
                                         '==',
                                         localtime_reg['stat']['checksum']
                                         | default('0') )
                           | map(attribute='path')
                           | list
                           | default([''], true)
                           | first )
                | regex_replace('^.*zoneinfo/', '')
                | regex_replace('UTC|UCT', 'GMT')
                | default('Etc/GMT', true) }}"

Unfortunately, Ansible facts seem to get the timezone from the date command which will return something like EST instead of US/Eastern which is what is needed to use the timezone module.
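The link-target half of that logic is simple enough to sketch in Python for anyone who wants to sanity check what their own machine would produce. This mirrors the two regex_replace steps and the Etc/GMT fallback from the task above, but skips the checksum fallback for systems where /etc/localtime is a regular file. `zone_from_localtime` is a hypothetical helper name.

```python
import os
import re


def zone_from_localtime(link_target, fallback="Etc/GMT"):
    """Derive a tz database name from the target of /etc/localtime."""
    if not link_target:
        return fallback
    # Strip everything up to and including the zoneinfo directory.
    zone = re.sub(r"^.*zoneinfo/", "", link_target)
    # Same substitution as the task: treat UTC/UCT as GMT.
    zone = re.sub(r"UTC|UCT", "GMT", zone)
    return zone or fallback


if __name__ == "__main__":
    path = "/etc/localtime"
    target = os.readlink(path) if os.path.islink(path) else ""
    print(zone_from_localtime(target))
```

On a machine where /etc/localtime points at .../zoneinfo/US/Eastern this prints US/Eastern, which is the form the timezone module wants, rather than the EST abbreviation from the date command.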

Pretty big push today. Still in the early stages, so the commits are big. As the puzzle pieces settle, I hope to make more incremental, atomic changes, but it’ll be chaotic for a while.

Today’s big commit was focused primarily on the inventory role, including much better documentation which you can read through here:

Perfect to ste–borrow from!

Really should pick up the OpenBSD router project, maybe being able to borrow stuff will kick me into gear as my type of procrastination is definitely less productive

I have a first draft of the host role finished. It performs general host configuration, including installing Python if it isn’t present, and it will generate an admin user if you don’t want to use root.

It sounds simple, but there are actually quite a few components.

Network role is looking good. Basic functionality tested here:

and on 2nd run:

I added interface discovery, so if the host has no interface definitions in its host variables, it will identify the host’s physical interfaces, capture the addresses and determine if the interface is configured manually or via DHCP. Here’s an example of the resulting ifaces list.

# BEGIN ANSIBLE MANAGED BLOCK: Network Interfaces
ifaces:
  - dev: eth0
    mac_addr: 08:00:27:fe:b5:aa
    net4:
      addr:
        - dhcp
  - dev: eth1
    mac_addr: 08:00:27:4e:fb:3d
    net4:
      addr:
        - 192.168.60.12
      mask: '24'
  - dev: eth2
    mac_addr: 08:00:27:0d:a6:90
# END ANSIBLE MANAGED BLOCK: Network Interfaces

This is helpful if you’re provisioning servers via DHCP/PXE: you can run this against the dhcpd leases and build an initial inventory that way.
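As a rough idea of what building an inventory from the leases could look like, here is a Python sketch that pulls the address, MAC and client hostname out of a dhcpd leases file. It assumes the usual ISC-style `lease <ip> { ... }` blocks, and the sample lease is invented for the example (it reuses the eth1 address and MAC from above with a made-up hostname). `hosts_from_leases` is a hypothetical helper, not part of any role.

```python
import re

LEASE_RE = re.compile(r"lease\s+(\S+)\s*\{(.*?)\}", re.DOTALL)
MAC_RE = re.compile(r"hardware ethernet\s+([0-9a-fA-F:]+);")
NAME_RE = re.compile(r'client-hostname\s+"([^"]+)";')

# Invented sample lease for illustration.
SAMPLE = """
lease 192.168.60.12 {
  hardware ethernet 08:00:27:4e:fb:3d;
  client-hostname "www1";
}
"""


def hosts_from_leases(text):
    """Return {hostname or IP: {'ansible_host': ip, 'mac_addr': mac}}."""
    hosts = {}
    for ip, body in LEASE_RE.findall(text):
        mac = MAC_RE.search(body)
        name = NAME_RE.search(body)
        # Fall back to the IP when the client did not send a hostname.
        key = name.group(1) if name else ip
        entry = {"ansible_host": ip}
        if mac:
            entry["mac_addr"] = mac.group(1).lower()
        # Later leases for the same key replace earlier ones.
        hosts[key] = entry
    return hosts


if __name__ == "__main__":
    print(hosts_from_leases(SAMPLE))
```

The resulting dictionary maps straight onto a `hosts:` section, and the recorded mac_addr gives interface discovery something to match against.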

For the time being, I have dropped FreeBSD from my target platforms simply because I don’t use it for anything at the moment outside of FreeNAS which isn’t really configurable outside of its GUI. Supporting OpenBSD, Network Manager and networkd was a lot of work already and I’d like to move on.

Doesn’t getent support searching that file?

It does! I forgot about that. I thought it was Linux-only, but OpenBSD has it too. macOS does not, but meh. However, it is only returning tcp results?

-bash-5.1$ getent services domain
domain 53/tcp
-bash-5.1$ grep domain /etc/services
domain 53/tcp # Domain Name Server
domain 53/udp

i think its going with first match… and then stops…

have you tried searching 53/udp instead of domain?

That does work, but yeah it’s only returning the first result.

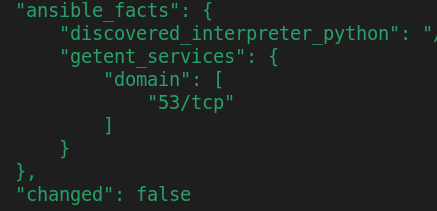

> ansible -i inventory openbsd7 -m getent -a "database=services key=domain"

openbsd7.hq.example.com | SUCCESS => {
    "ansible_facts": {
        "getent_services": {
            "domain": [
                "53/tcp"
            ]
        }
    },
    "changed": false
}
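Since getent stops at the first match, one workaround is to read /etc/services directly and collect every protocol for a name. A minimal Python sketch, assuming the standard `name port/proto [aliases...] # comment` layout; `parse_services` is a made-up helper, and the sample is the two domain lines from the grep output above.

```python
SAMPLE = """\
domain 53/tcp # Domain Name Server
domain 53/udp
"""


def parse_services(text):
    """Map each service name to {protocol: [ports]} from services text."""
    services = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        fields = line.split()
        if len(fields) < 2 or "/" not in fields[1]:
            continue
        port, proto = fields[1].split("/", 1)
        # The first field is the name; any remaining fields are aliases.
        for name in [fields[0]] + fields[2:]:
            ports = services.setdefault(name, {}).setdefault(proto, [])
            if int(port) not in ports:
                ports.append(int(port))
    return services


if __name__ == "__main__":
    print(parse_services(SAMPLE)["domain"])
```

Against a real system you would pass in the contents of /etc/services instead of SAMPLE, and both the tcp and udp entries for domain come back.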