Greetings everyone,

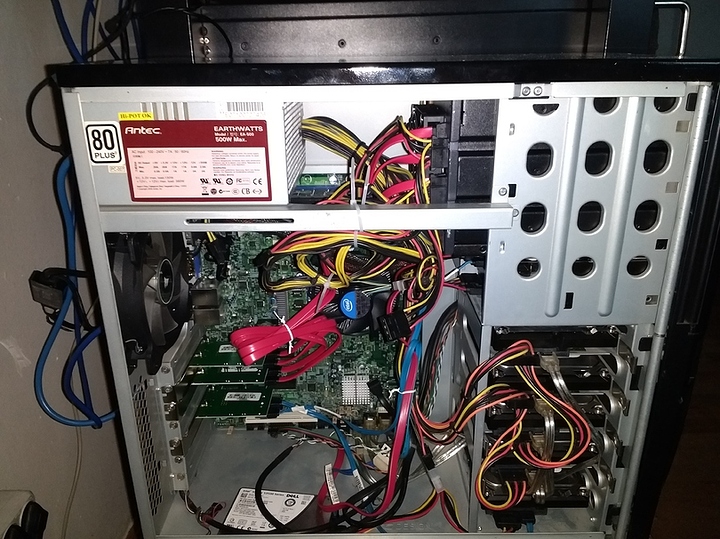

I’m in the process of building a Proxmox server for my home lab using some old hardware that I retired from work a long time ago and that has been collecting dust since: a Xeon X3440 with 32 GB of ECC RAM. I’m planning to run 5 to 7 VMs on it, the most important being a mail server, a Matrix Synapse + Jitsi server and maybe a PeerTube instance. I will also have a separate VM as an off-site backup for security camera recordings from a location ~75 miles away (I’ll allocate 2 or 3 TB to it).

So, here comes the question about the storage setup. I have 10x 2 TB drives in two batches: 5x ST2000NC001 made in 08/2014 and 5x ST2000VN000 made in 10/2015. I don’t really trust those drives, and I won’t have a backup for most of the data I’ll be putting on them (except the mail server: I’ll have a separate box for mail backup with a 1 TB mirror, where I also rsync my /home folder).

Given that the drives split neatly into two models from different manufacturing dates, the most logical thing to do is ZFS striped mirrors. Another issue is that the box will basically be “unmaintained” (i.e., I will only access it remotely) for a long time, so I’m thinking of going with 4 mirror vdevs (RAID10-style) and 2 hot spares. Sarge is a big influence on me here on the Level1 Forum, and I know he doesn’t like hot spares… and frankly, neither do I; I’d rather change the disk when I see an alarm. But in this situation I have no choice but to keep at least 1 of the 10 disks as a hot spare. On the other hand, I’m starting to get greedy for storage (especially if I host a PeerTube instance). If I could replace disks remotely somehow, I would go full RAID-z2 on 10 drives for that sweet storage.
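
A minimal sketch of what the striped-mirror option could look like as a `zpool create` command. It assumes each mirror pairs one ST2000NC001 with one ST2000VN000, so no vdev holds two drives from the same batch, and that the fifth drive of each batch becomes a hot spare. The pool name `tank` and the `/dev/disk/by-id/` paths are hypothetical placeholders; the helper only builds the command string and never touches a disk.

```python
import shlex


def striped_mirror_cmd(pool, batch_a, batch_b, spares_per_batch=1):
    """Build (but do not run) a `zpool create` command for striped mirrors.

    Mirror i pairs batch_a[i] with batch_b[i], so every vdev mixes the two
    batches; the last `spares_per_batch` drives of each batch become hot spares.
    """
    if len(batch_a) != len(batch_b):
        raise ValueError("both batches must have the same number of drives")
    n_pairs = len(batch_a) - spares_per_batch
    args = ["zpool", "create", pool]
    for a, b in zip(batch_a[:n_pairs], batch_b[:n_pairs]):
        args += ["mirror", a, b]
    spares = batch_a[n_pairs:] + batch_b[n_pairs:]
    if spares:
        args += ["spare", *spares]
    return shlex.join(args)


# Hypothetical device paths; substitute the real /dev/disk/by-id/ names.
nc001 = [f"/dev/disk/by-id/ata-ST2000NC001_disk{i}" for i in range(1, 6)]
vn000 = [f"/dev/disk/by-id/ata-ST2000VN000_disk{i}" for i in range(1, 6)]
print(striped_mirror_cmd("tank", nc001, vn000))
```

Printing the command and pasting it by hand after checking the device names is a deliberate choice here: nothing in the sketch executes `zpool`.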

IOPS may not be that important here, since the VMs won’t be very demanding (and will have modest resources), so I was thinking that RAID-z2 on 8 drives or RAID-z3 on 9 drives would gain me a few TB over mirrors, at the cost of IOPS and potentially other performance, according to:

So, can someone explain to me the ups and downs of using old spinning rust in each setup?

- Striped mirrors, 4 vdevs + 2 hot spares for ~7.3 TiB of usable storage

- RAID-z2 on 8 disks + 2 hot spares for ~10.9 TiB of usable storage

- RAID-z2 on 9 disks + 1 hot spare for ~12.7 TiB of usable storage

- RAID-z3 on 9 disks + 1 hot spare for ~10.9 TiB of usable storage

- RAID-z2 on 10 disks, screw hot-sparing, we’re going all or nothing, for ~14.6 TiB of usable storage and no performance degradation from an oddly sized array
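
The capacity figures above can be reproduced with a few lines of arithmetic. This sketch assumes each drive holds 2×10^12 bytes (the marketed “2 TB”) and reports tebibytes (2^40 bytes), which is why 8 data disks come out as ~14.6 rather than 16. It ignores ZFS metadata, slop space and RAID-Z padding, so real `zfs list` numbers will be somewhat lower. It also prints the vdev count, since a common rule of thumb is that random IOPS scale with the number of vdevs (a RAID-Z vdev behaves roughly like a single disk for random I/O).

```python
DISK_BYTES = 2 * 10**12  # one "2 TB" drive, in decimal bytes as marketed
TIB = 2**40              # one tebibyte


def usable_tib(vdevs):
    """Raw usable TiB for a list of (kind, width) vdevs.

    kind is "mirror" or "raidz1"/"raidz2"/"raidz3"; hot spares are not
    listed because they hold no data, and ZFS overhead is ignored.
    """
    data_disks = 0
    for kind, width in vdevs:
        if kind == "mirror":
            data_disks += 1  # an N-way mirror stores one disk's worth of data
        elif kind.startswith("raidz"):
            parity = int(kind[len("raidz"):] or 1)  # plain "raidz" means raidz1
            data_disks += width - parity
        else:
            raise ValueError(f"unknown vdev kind: {kind}")
    return data_disks * DISK_BYTES / TIB


LAYOUTS = {
    "4x 2-way mirrors + 2 spares": [("mirror", 2)] * 4,
    "RAID-z2 x8 + 2 spares": [("raidz2", 8)],
    "RAID-z2 x9 + 1 spare": [("raidz2", 9)],
    "RAID-z3 x9 + 1 spare": [("raidz3", 9)],
    "RAID-z2 x10, no spares": [("raidz2", 10)],
}

for name, vdevs in LAYOUTS.items():
    print(f"{name:<28} ~{usable_tib(vdevs):4.1f} TiB  vdevs: {len(vdevs)}")
```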

My gut feeling tells me to go RAID10 (as I would in my production environments), lose some capacity, skip the bs and not have to worry about resilvering times. I always liked this article: https://jrs-s.net/2015/02/06/zfs-you-should-use-mirror-vdevs-not-raidz/

But while searching for the ideal 8-drive config, I stumbled upon this Reddit thread (I probably shouldn’t trust plebbit tho’): https://www.reddit.com/r/zfs/comments/erizhw/at_what_size_would_you_stop_using_a_striped/

The argument for RAID-z* on more than 4 drives is that the parity calculation finishes faster than the drives can write the data, so it doesn’t really matter whether you go with mirrors or parity: the drives are the bottleneck either way. BUT, again, given that I’m using old, used drives, I’m afraid the extra stress parity reconstruction puts on them is just asking for trouble. Then again, with only 2 TB drives, a resilver technically shouldn’t keep them under heavy load for very long.
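
To make the parity argument concrete, here is a simplified single-parity sketch (RAID-Z1/RAID-5 style) using XOR. It is not how ZFS actually lays out RAID-z2/z3 stripes (those add further parity beyond plain XOR and use variable-width stripes); it only illustrates that the parity math itself is cheap, while rebuilding a lost member means reading the surviving disks of the stripe, whereas a mirror rebuild reads only the partner. The read estimate is a rough upper bound, and the 100 MB/s sustained write rate is an assumption for old 2 TB drives, not a measured figure.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks (single-parity math)."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_lost(surviving):
    """Recover a stripe's missing block by XOR-ing all surviving blocks."""
    return xor_blocks(surviving)


def resilver_read_bytes(layout, width, used_bytes_per_disk):
    """Rough upper estimate of bytes read to rebuild one failed disk."""
    if layout == "mirror":
        return used_bytes_per_disk  # only the mirror partner is read
    return (width - 1) * used_bytes_per_disk  # assumes every survivor is read


def min_resilver_hours(used_bytes, write_mb_per_s=100):
    """Lower bound: the replacement must at least absorb `used_bytes` of writes.

    100 MB/s is an assumed sustained rate; real resilvers are often slower.
    """
    return used_bytes / (write_mb_per_s * 10**6) / 3600


data = [b"mail", b"chat", b"cams"]  # three data blocks of one stripe
parity = xor_blocks(data)           # stored on the parity disk
# Disk holding b"chat" dies: rebuild it from the other two blocks plus parity.
print(rebuild_lost([data[0], data[2], parity]))                # b'chat'
print(resilver_read_bytes("mirror", 2, 2 * 10**12) / 10**12)   # 2.0 (TB)
print(resilver_read_bytes("raidz2", 10, 2 * 10**12) / 10**12)  # 18.0 (TB)
print(round(min_resilver_hours(2 * 10**12), 1))                # 5.6 (hours)
```

Under these assumptions, a full 2 TB disk takes at least ~5.6 hours to resilver either way, but a 10-wide parity vdev may read up to ~9x more data from the old drives than a mirror does.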

The last thing I could do is split the disks into two zpools:

- RAID-z2 on 6 disks + 1 hot spare for ~7.3 TiB of usable storage

- A 2-disk mirror + 1 hot spare for ~1.8 TiB of usable storage
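
The split option could be sketched the same way, as two separate `zpool create` commands. The pool names `bulk` and `fast` and the device paths are hypothetical placeholders; each pool gets its own hot spare, matching the disk count of this option (6 + 1 + 2 + 1 = 10), and the helper only builds the command strings.

```python
import shlex


def pool_cmd(pool, vdev_type, disks, spares=()):
    """Build (but do not run) a single-vdev `zpool create` command."""
    args = ["zpool", "create", pool, vdev_type, *disks]
    if spares:
        args += ["spare", *spares]
    return shlex.join(args)


# Hypothetical device paths standing in for the ten 2 TB drives.
disks = [f"/dev/disk/by-id/disk{i}" for i in range(10)]
bulk = pool_cmd("bulk", "raidz2", disks[0:6], spares=[disks[6]])  # ~7.3 TiB
fast = pool_cmd("fast", "mirror", disks[7:9], spares=[disks[9]])  # ~1.8 TiB
print(bulk)
print(fast)
```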

I’m not sure the hassle of splitting into 2 zpools is worth the potential performance gain for the RAID-z2 pool. I’d rather just have 7.3 TiB with RAID10. Am I being overly cautious about this? Should I just risk it and go all or nothing with RAID-z2 on 10 disks? Plz halp!
