I have been following the YouTube channel since before the LevelOneTechs days, though it is my first time posting. I have always been impressed with the level of detail and depth of knowledge displayed. Maybe I can lean on it for some pointers in the right direction.

We are looking to run Monte Carlo simulations using the EGSnrc and GEANT4 codes (CPU-based, Fortran/C++). So this workstation would need as many cores as possible, with strong cache and RAM to move data back and forth. The workstation would be running 24/7 for 2-4 weeks per simulation. At this time, we are only looking to convert the trajectory output data into 2D heatmaps. We do not anticipate super intense graphics needs.

Hardware is not my greatest expertise. From the videos, it looks like Epyc (Genoa/Bergamo) may be the way to go. Mainly, we are looking for some workstation systems that are sold by vendors in the US that we could use to just get going. We have a soft budget of 10 k$.

If you don’t need more than 64 physical cores or more than 512GB of memory, then any Threadripper PRO workstation fits your needs and budget.

If you need more than that, i.e. more memory or a dual-socket config, then start looking into EPYC builds.

Lenovo P620 should be easily available

Puget Systems is US-based and builds professional workstations to order as well.

If you are in academia, you might be able to get a discount if you go through your vendor rep.

Threadripper has 8 DIMM slots. So 512GB is fairly easy and cheap to get. If you need more, EPYC boards have up to 24 DIMMs. Just so you know what’s possible. Intel has comparable offers.

EPYC has the option for dual-socket with 2x CPU and double the RAM.

And the CPUs with “X” have extra cache but cost a lot more. So do your calculations and check whether your use case makes them worth the cost.

CPUs are really expensive…high core count means $10k+ just for the CPU. So you have to make compromises.

So yeah…things can move from 10k to 20k or even 30k very quickly if your main performance factor is CPU and memory. Just because 150+ cores are possible today, doesn’t mean it’s cheap.

$10k when buying prebuilt by OEM? Will probably be a 32-core machine and 256-512GB memory.

Building it yourself can save quite a bit of money, that’s what we do here in the forums all the time. But standards and certifications certainly differ from home use to professional use.

Older generation server CPUs are fairly cheap. 3rd gen EPYC 64 cores (7763 SKU) at 2.5k each. Good amount of horsepower especially in a dual socket configuration. But 3rd gen EPYC uses DDR4, so that’s probably a downside judging from the use case.

So you can get 128 cores within a 10k budget when DIYing the thing.

If you’ve got a sample problem that’s representative of performance I could run it for you on a Xeon W system.

I’ve been able to get my average MHD simulation (+100DoF FGMRES) times down from ~340 hours to ~180 hours by moving from a 64 core Threadripper pro to a 16 core Xeon W.

Genoa might be even faster than Xeon W depending on which side of Amdahl’s law the problem falls on.
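For reference, Amdahl's law puts a ceiling on what extra cores can buy once part of the run is serial. A minimal Python sketch follows; the parallel fractions in it are made-up illustration values, not measurements of EGSnrc, GEANT4, or the MHD solver.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when `parallel_fraction` of the
    single-core run time parallelizes perfectly (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical parallel fractions, chosen only for illustration.
    for p in (0.90, 0.99, 0.999):
        for n in (16, 64, 128):
            print(f"p={p:<5} cores={n:<3} speedup={amdahl_speedup(p, n):6.1f}x")
```

The model only covers the serial/parallel split. It says nothing about memory bandwidth, so a memory-bound job can scale worse than this formula suggests.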

Threadripper has 64 cores now, wow. Maybe a machine like that could approach the power of a 64-core cluster that my colleague could use before he graduated. 2GB RAM + 256MB cache per core should be enough for each to load the geometry file, compute a trajectory, and save the results; the more cores, the more trajectories can be computed.
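A rough sizing check for that estimate, as a Python sketch. It assumes one independent worker process per core, each holding its own copy of the geometry; the 2GB figure is the estimate above, and the OS headroom value is a placeholder, not a measurement.

```python
def total_ram_gb(cores: int, gb_per_worker: float, os_headroom_gb: float = 0.0) -> float:
    """RAM needed if every core runs one worker with its own working set."""
    if cores < 1 or gb_per_worker < 0 or os_headroom_gb < 0:
        raise ValueError("cores must be >= 1 and sizes must be non-negative")
    return cores * gb_per_worker + os_headroom_gb


if __name__ == "__main__":
    GB_PER_WORKER = 2.0    # estimate from the post above
    OS_HEADROOM_GB = 16.0  # hypothetical allowance for OS and file cache
    for cores in (32, 64, 128):
        need = total_ram_gb(cores, GB_PER_WORKER, OS_HEADROOM_GB)
        print(f"{cores:>3} cores -> about {need:.0f} GB")
```

If the real per-worker footprint turns out larger than 2GB, the same function shows how quickly the total grows with core count.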

Huh, even Lenovo sells workstations like these. Wow, 7500$ difference between the 5975wx and 5995wx. Do you know what the ~45% discount is about or when it expires? That discount is really helping me afford one of those builds. Both Lenovo and Puget list a 64-core Threadripper PRO system for about 11-12k$ which is pretty close to the high end of my budget.

I assumed Intel must have something to compete. Is that what the Xeon W is for? However, the Threadripper and Epyc systems had the most discussion, by far.

An “X” suffix means that it has more cache. And 3rd gen (meaning Milan or Genoa?) can be more economical per core. Thank you, I will keep those tips in mind. Though, I am not sure that I ever saw a MilanX mentioned anywhere. However, the Epyc systems seem to come in these thick pizza box shapes rather than regular towers. Can we just set those on a desk too?

128 cores sounds amazing. We were hoping to avoid the extra variables of building something ourselves, since we are not that experienced with what all works together well hardware-wise.

twin_savage, It was cool to learn what MHD and FGMRES are. That is some drastic time reduction! That reduction was just from changing the CPU? How kind of you to offer running a sample! Though, our simulation would not be classified as FGMRES. I can send you some installation/compilation instructions for Windows or Linux.

Can you give me some stuff I can do on the hardware I have? Happy to run the numbers, as it were, especially if it’s easy for me to do.

While the Xeon cores are slightly faster than the Threadripper Pro cores, they certainly aren’t 7.5̅ times faster as the run times indicate. The main reason it is so much faster is that the Xeons have much more memory bandwidth than the Threadrippers, despite the core count deficit.
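The per-core figure comes straight from the run times and core counts quoted earlier (~340 hours on 64 cores versus ~180 hours on 16 cores). A small Python sketch of that arithmetic, treating the approximate hours as exact:

```python
def wall_clock_speedup(old_hours: float, new_hours: float) -> float:
    """How many times faster the new system finishes the same job."""
    return old_hours / new_hours


def per_core_speedup(old_hours: float, old_cores: int,
                     new_hours: float, new_cores: int) -> float:
    """Wall-clock speedup scaled by how many fewer cores did the work."""
    return wall_clock_speedup(old_hours, new_hours) * (old_cores / new_cores)


if __name__ == "__main__":
    # ~340 h on a 64-core Threadripper Pro, ~180 h on a 16-core Xeon W.
    print(f"wall clock: {wall_clock_speedup(340, 180):.2f}x")
    print(f"per core:   {per_core_speedup(340, 64, 180, 16):.2f}x")
```

The per-core ratio is an apparent number only. It folds memory bandwidth and everything else about the platform into one figure, which is the point being made above.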

My understanding is that at least some Monte Carlo simulations are also memory-bound workloads, which is why I bring up the example.

Could you send instructions for installation/compilation on both Windows and Linux? I’ve been observing fairly big performance deltas between Windows and Linux with my specific simulation on the current Xeon and I’m curious to see if it manifests with other workloads as well.

Linux seems to be performing about 30% faster than Windows and I suspect the thread scheduler on Windows isn’t working as well as it is on Linux for the Xeons.

@wendell

not trying to hijack but… Want to try my simulation workload too? I’ve got it in separate self contained runtimes that’ll execute on Win/Linux x86 and ARM.

The only issue with it is that its memory footprint will scale with the number of cores you throw at it, so >256GB of memory is required unless only a small number of cores are being used.

Sure? What’s my least headache step by step to run something and get numbers?

Do you have a mini-problem set example that people can run on their own hardware? We can get you some data points from different systems so you can choose the best system for your budget.

Serve The Home I believe also offers a similar service of running confidential simulations on several hardware configurations to guide you to the optimum system for your budget.

I have a genoa 9124.

If you get multi-cpu systems, you can usually run those at a higher clock speed.

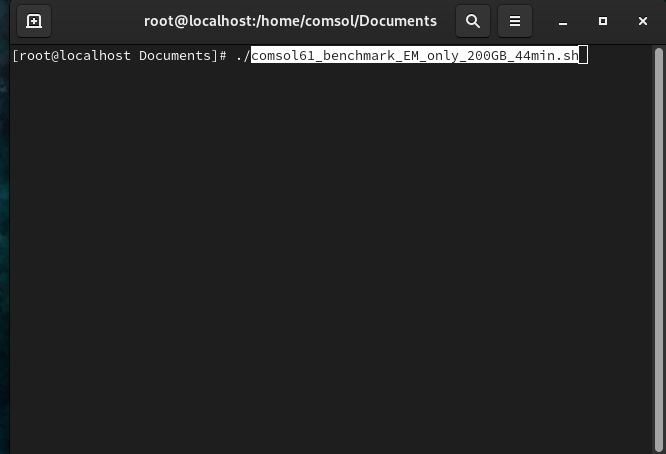

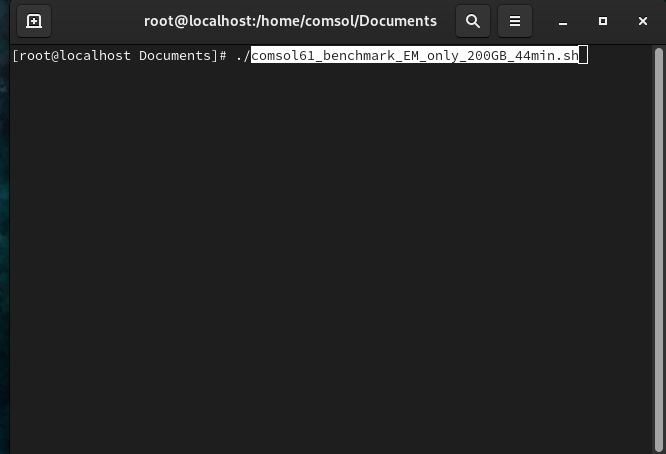

for linux grab comsol61_benchmark_EM_only_200GB_linux.sh out of:

https://drive.google.com/drive/folders/1YX0rqS85H-Z1rzjLTw_k6776FSBppKEB?usp=sharing

make sure it’s executable and run the script via terminal (does not need to be a root account, I’m just too lazy atm to properly setup xrdp)

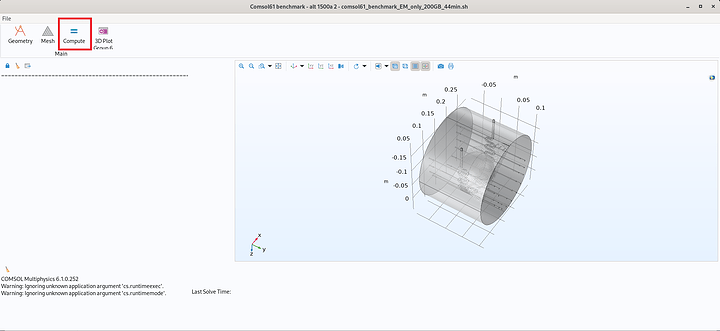

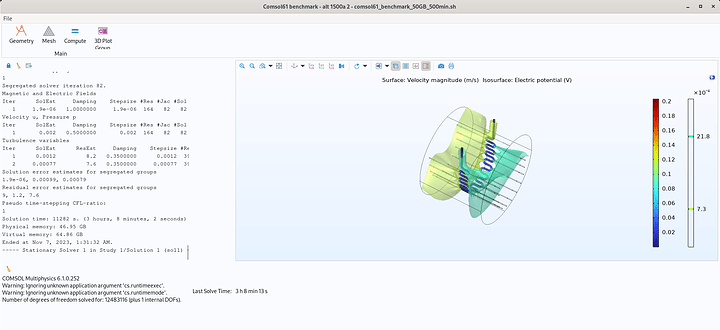

you’ll get the following window, all you need to do is click the “Compute” button and wait ~20 minutes for it to complete

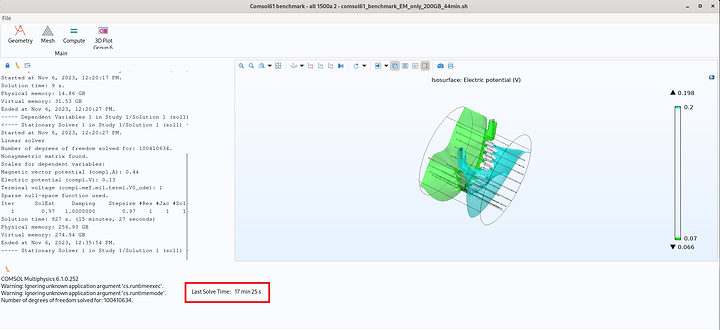

the solve time will be displayed here:

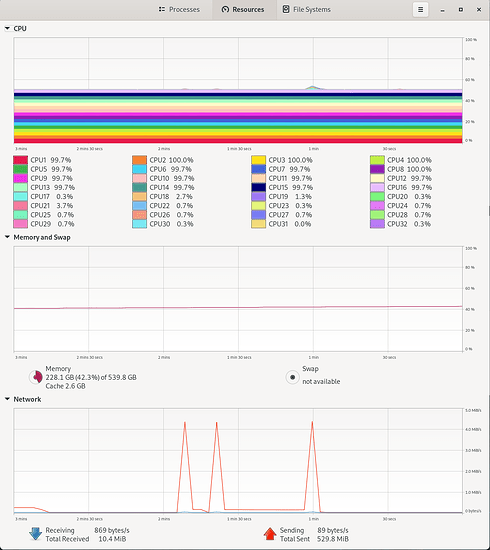

This is what typical resource consumption will look like, it’s purposely not using hyperthreading to achieve best performance:

For Windows you’ll need to grab comsol61_benchmark_EM_only_200GB_windows.exe file from that same google folder and execute it. On Windows it will ask you to install a self contained comsol runtime before the benchmark can actually be run, but other than that it is the same as linux.

*there’s a super slight difference in degrees of freedom solved for between Windows and Linux because the mesher is a tiny bit different between the two, but this shouldn’t be enough to drastically influence the results.

**also the benchmark isn’t perfectly deterministic, it’ll vary a bit run to run, but not by the numbers I’m seeing between the two OSes.

================================================================================

Number of vertex elements: 390

Number of edge elements: 21726

Number of boundary elements: 627473

Number of elements: 13034930

Minimum element quality: 0.159

<---- Compile Equations: Stationary in Study 1/Solution 1 (sol1) ---------------

Started at Nov 6, 2023, 9:53:44 PM.

Geometry shape function: Quadratic Lagrange

Running on AMD64 Family 25 Model 17 Stepping 1, AuthenticAMD.

Using 1 socket with 16 cores in total on w11pnative.

Available memory: 98.07 GB.

Time: 165 s. (2 minutes, 45 seconds)

Physical memory: 13.12 GB

Virtual memory: 13.67 GB

Ended at Nov 6, 2023, 9:56:28 PM.

----- Compile Equations: Stationary in Study 1/Solution 1 (sol1) -------------->

<---- Dependent Variables 1 in Study 1/Solution 1 (sol1) -----------------------

Started at Nov 6, 2023, 9:56:28 PM.

Solution time: 13 s.

Physical memory: 15.34 GB

Virtual memory: 16.16 GB

Ended at Nov 6, 2023, 9:56:42 PM.

----- Dependent Variables 1 in Study 1/Solution 1 (sol1) ---------------------->

<---- Stationary Solver 1 in Study 1/Solution 1 (sol1) -------------------------

Started at Nov 6, 2023, 9:56:42 PM.

Linear solver

Number of degrees of freedom solved for: 100365915.

Solution time: 271 s. (4 minutes, 31 seconds)

Physical memory: 88.6 GB

Virtual memory: 98.94 GB

Ended at Nov 6, 2023, 10:01:13 PM.

----- Stationary Solver 1 in Study 1/Solution 1 (sol1) ------------------------>

Then out of memory error. I only have 96GB on this box.

I am going to attempt to set 1TB of virtual memory, then run it again. Though the results will probably not be meaningful.

Thanks for trying anyways!!

I’ve actually got another benchmark that only has a 50GB memory footprint, but it is a electromagnetics problem coupled to a CFD problem which is super compute intensive. I put it in that same share drive as comsol61_benchmark_50GB if you want to run it.

Sure, I will run that tonight (maybe overnight).

It consumed 224GB of RAM and took 5 hours 10 minutes, with the virtual memory being supplied by the Intel d7-5600 6.4TB.

That is actually a lot better than I thought it would be.

The “50GB” benchmark took a w5-3435x 3 hours and 8 minutes to solve:

Do you know how much ram your simulation requires? That needs to be in your budget.

Do you know if your Fortran code is compatible with the NVIDIA compiler to run on GPUs?

For a given CPU architecture, e.g. Zen 4, you can compare systems directly to determine their value:

price per (MHz × cores).
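One reading of that metric is price divided by (cores × clock). A Python sketch of the comparison follows; the two entries are hypothetical placeholders, not real SKUs or quotes, and the comparison only makes sense within one architecture as noted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuOption:
    name: str
    price_usd: float
    cores: int
    base_ghz: float

    @property
    def usd_per_core_ghz(self) -> float:
        """Dollars paid per core-GHz; lower is better value."""
        return self.price_usd / (self.cores * self.base_ghz)


def rank_by_value(options: list[CpuOption]) -> list[CpuOption]:
    """Cheapest per core-GHz first."""
    return sorted(options, key=lambda o: o.usd_per_core_ghz)


if __name__ == "__main__":
    # Hypothetical parts with made-up prices and clocks, for illustration only.
    candidates = [
        CpuOption("hypothetical 16-core", 1200.0, 16, 3.0),
        CpuOption("hypothetical 96-core", 6000.0, 96, 2.4),
    ]
    for cpu in rank_by_value(candidates):
        print(f"{cpu.name:<22} ${cpu.usd_per_core_ghz:6.2f} per core-GHz")
```

This ignores cache size and memory bandwidth, so treat it as a first filter rather than a final answer.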

You also mentioned that cache helps. AMD has an X line with a larger cache. They also have an F line that is frequency optimized, i.e. higher clocks.

Beyond cache, there is memory bandwidth. The AMD EPYC 9004 line has 12 RAM channels and roughly 460GB/sec of theoretical bandwidth to main memory (DDR5-4800). The AMD EPYC 7xx3 line has 8 channels and roughly 205GB/sec (DDR4-3200).

You should also check with other people who are running your package. Are there optimizations for intel or AMD?

The optional Intel AI accelerators on some of the Xeon CPUs can speed up some AI tasks by 6 times. Though in that example they were running Stable Diffusion, with the final result that a $13,000 Intel CPU would be slower than a $600 NVIDIA GPU.

It is worth it to check with your particular software vendor to determine if some optional accelerators would be beneficial.

These are the AMD

50gb took 6h 12 min.

I will check later today that I have the performance profile set and that it is not configured to go to sleep. This one completed about 1/2 an hour after I woke up.

More memory channels would definitely bring that number down.

I just finished testing the “50GB” benchmark on an M630 with dual E5-2650L v4s and eight total channels of DDR4-2400, and it got 8h 21min running on CentOS Stream and 8h 48min on Windows 10 21H2; that 5% variance is within normal range.
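To put channel counts into numbers, theoretical peak bandwidth is channels × transfer rate × bytes per transfer. A Python sketch, assuming the usual 64-bit (8-byte) data path per DDR4/DDR5 channel; the 12-channel DDR5-4800 row is an assumed Genoa-class configuration for comparison, not a system benchmarked here.

```python
def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: int,
                        bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumes a 64-bit data path per channel; sustained bandwidth measured
    in real workloads is lower than this figure.
    """
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000.0


if __name__ == "__main__":
    configs = {
        "8 channels of DDR4-2400 (the M630 above)": (8, 2400),
        "12 channels of DDR5-4800 (assumed)": (12, 4800),
    }
    for label, (channels, rate) in configs.items():
        print(f"{label:<42} {peak_bandwidth_gb_s(channels, rate):6.1f} GB/s")
```

These are ceilings only, but the ratio between them gives a feel for how much room a bandwidth-bound solver has on a newer platform.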

yeah, 6 hours 13 minutes 29 seconds. At least it is consistent. When I get more ram I will rerun it.
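Putting the “50GB” solve times reported so far side by side, as a Python sketch. The times are the ones quoted in this thread; the label for the 6h 13min result assumes it was run on the 16-core AMD box with 96GB shown in the log above.

```python
def to_minutes(hours: int, minutes: int = 0, seconds: int = 0) -> float:
    """Convert an h/m/s solve time to minutes."""
    return hours * 60 + minutes + seconds / 60


def relative_to_fastest(runs: dict[str, float]) -> dict[str, float]:
    """Express every run time as a multiple of the fastest one."""
    fastest = min(runs.values())
    return {name: t / fastest for name, t in runs.items()}


# Solve times for the "50GB" benchmark as reported in this thread.
RUNS = {
    "Xeon w5-3435X": to_minutes(3, 8),
    "16-core AMD box, 96GB (assumed)": to_minutes(6, 13, 29),
    "dual E5-2650L v4, CentOS Stream": to_minutes(8, 21),
    "dual E5-2650L v4, Windows 10": to_minutes(8, 48),
}

if __name__ == "__main__":
    for name, ratio in relative_to_fastest(RUNS).items():
        print(f"{name:<34} {ratio:4.2f}x the fastest time")
```

The systems differ in core count, memory channels, and OS at the same time, so the ratios show the spread rather than isolating any one cause.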

I know the AMD side better than the Intel side. On the AMD side, this is what I would recommend:

BTW the only reason I am not recommending dual epyc is because everywhere I looked, those were only available in prebuilt systems designed for gpu accelerators or with nvme backplanes, and started at 10K with a 1.2k cpu and no ram or storage.

btw the zen4 cores are 30% faster than the zen4c cores.

Motherboard, includes 10g ethernet

https://www.newegg.com/p/N82E16813183820

cooler per the manufacturer good for 500w

https://www.newegg.com/p/13C-000S-000M7

cpu 96 core zen4

https://www.serversupply.com/PROCESSORS/AMD%20EPYC%2096-Core/2.4GHz/AMD/100-000000789_368317.htm

SSD - this is what I am running; I got it a bit lower. That division of Intel was bought by Solidigm, and these drives still existed in their warehouse branded Intel. They are dumping them now for some reason. Mine had zero hours on it. They are rated to be filled three times a day for 5 years.

You may be able to get a better price elsewhere, mine was $359.

for reference this is the price for that drive you will pay if you get it built in as part of a server:

and its cable:

The pcie5 ports on the motherboard are MCIO 8x instead of slimsas.

or you can get a standard m.2 drive for one of the motherboard slots. The above drive is between 3x and 20x the speed of a typical m.2 SSD.

GPU:

You don’t NEED a GPU, but life will be more pleasant with one. The IPMI VGA port has a max resolution of 1024x1280. Probably an NVIDIA GPU, as there are more models you can accelerate with that, though you have the slots and budget to get an AMD GPU too so you have more flexibility, or even have one drive the monitor while the other gets used for pure compute.

I got this one because it is only 2 slots wide and air cooled(I plan to use all of my slots):

https://www.newegg.com/pny-geforce-rtx-4070-vcg407012tfxxpb1/p/N82E16814133854

For power the motherboard needs ATX+4+8+8. If you get a GPU for it, that will also need an 8 pin or better connector. Make sure your power supply can do that.

Estimated power draw, in watts:

25 SSD

400 CPU

150 RAM (a complete guess, I don’t know what the DIMMs need, but they get hot)

200-600 per GPU
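Adding those figures up against a 1200W supply, as a Python sketch. It assumes the numbers above are watts and that one GPU is installed; the RAM figure is the same guess as in the list.

```python
def total_draw_w(loads_w: dict[str, float]) -> float:
    """Sum of the estimated component loads in watts."""
    return sum(loads_w.values())


def psu_headroom_w(psu_w: float, loads_w: dict[str, float]) -> float:
    """Watts left over on the supply; negative means it is undersized."""
    return psu_w - total_draw_w(loads_w)


# Estimates from the list above (watts); the RAM figure is a guess.
BASE_LOADS_W = {"ssd": 25, "cpu": 400, "ram": 150}
PSU_W = 1200

if __name__ == "__main__":
    for gpu_w in (200, 600):  # low and high end of the per-GPU range
        loads = {**BASE_LOADS_W, "gpu": gpu_w}
        print(f"GPU {gpu_w} W: total {total_draw_w(loads)} W, "
              f"headroom {psu_headroom_w(PSU_W, loads)} W")
```

With a single GPU at the high end of that range there is very little margin left, and a second GPU of that class would exceed the supply on these estimates.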

power supply: here is a basic one

https://www.newegg.com/rme-corsair-rm1200e-1200-w/p/N82E16817139315

A basic PC case that is large enough to hold the motherboard:

https://www.newegg.com/p/11-129-274?Item=11-129-274&cm_sp=product-_-from-price-options

Spend the remainder of your budget on ram, try and fill all of the slots. You can see from the other discussions that ram bandwidth makes a difference.

If you know that cache makes a significant increase in your speed, this CPU is available for your socket:

https://www.serversupply.com/PROCESSORS/AMD%20EPYC%2096-Core/2.55GHz/AMD/100-000001254_381212.htm

My build with the $1,200 version of that cpu and a $600 gpu cost about $3500. I built a dell server as a duplicate of my computer and that came out to be $20k.

Purpleflame, please do take the offers to run benchmarks. You could easily get multiples of performance for your money by buying the right hardware.
