Thanks for letting me know. What’s the device limit for the free version? Any drawbacks to it?

I’m wondering if that’s too much of a limitation. I’m totally okay with just naming users and giving them certain levels of ACLs, e.g. Guest, Friends, Family.

3 users, up to 100 devices. Routed devices don’t count, so if you install Tailscale on your router and allow subnet access you can end up with access to many more than 100 devices.

100 devices is kind of a lot imho

Yeah, it’s way over the count I have. I wonder if MikroTik supports it. Man, this would make remote management of family networks spread across two states way easier.

I am pretty sure they do.

Sweeet this is going to work out well

Just added a link to my previous post. MikroTik has native Docker support in RouterOS, so you can run a Tailscale gateway in Docker.

I’m having trouble with permissions when deploying Nextcloud:

    failed to deploy a stack:
    Network nextcloud_default Creating
    Network nextcloud_default Created
    Container nextcloud-db-1 Creating
    Container nextcloud-db-1 Created
    Container nextcloud-app-1 Creating
    Container nextcloud-app-1 Created
    Container nextcloud-db-1 Starting
    Container nextcloud-db-1 Started
    Container nextcloud-app-1 Starting
    Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: chdir to cwd ("/var/www/html") set in config.json failed: permission denied: unknown

I’m using the latest TrueNAS SCALE and Debian 12, if that makes a difference.

nextcloud/data + nextcloud/database are getting created with these owners and groups. I’m really struggling to get it working at this point.

    drwxrwx--- 2 root root 2 Jul 10 23:07 data
    drwxrwx--- 5 999 systemd-journal 13 Jul 10 22:35 database

I’m having the same problem. A previous answer in this thread stated that adding “no_root_squash” to the fstab mount command and then rebooting the VM might fix it, but when I try it the share doesn’t mount at /nfs. If you have any luck, please update the thread.

Okay, here is what you do:

In the TrueNAS interface, click System Settings > Shell.

edit /etc/exports

sudo nano /etc/exports

There you will see a line which shares the NFSDocker dataset. Within the parentheses you will see a comma-separated list of options, e.g.:

(sec=sys,rw,anonuid=1001,anongid=1001,no_subtree_check)

At the end of this list, inside the parentheses, add a comma and no_root_squash:

(sec=sys,rw,anonuid=1001,anongid=1001,no_subtree_check,no_root_squash)

Save and exit the file, then run the command sudo exportfs -ra

Now if you reboot the virtual machine which is running docker you should then be able to create the nextcloud instance in portainer.

hope this helps!
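The same edit can be sketched non-interactively. This is only a sketch, assuming GNU sed and an export line shaped like the example above; the function name `add_no_root_squash` and the sample export line are made up for illustration. Keep in mind that no_root_squash lets root on the client act as root on the share, and that TrueNAS may regenerate /etc/exports from its own share settings (worth verifying on your version), so a hand edit might not survive.

```shell
#!/bin/sh
# Hypothetical helper: append no_root_squash to each export option list
# in the given file, unless the line already has it or is a comment.
add_no_root_squash() {
  exports_file="$1"
  sed -i \
    -e '/^[[:space:]]*#/b' \
    -e '/no_root_squash/b' \
    -e 's/)/,no_root_squash)/g' \
    "$exports_file"
}

# Demonstrate on a scratch file rather than the live /etc/exports.
# The path and subnet below are illustrative only.
sample="$(mktemp)"
printf '%s\n' \
  '/mnt/tank/NFSDocker 192.168.1.0/24(sec=sys,rw,anonuid=1001,anongid=1001,no_subtree_check)' \
  > "$sample"
add_no_root_squash "$sample"
cat "$sample"
```

On the real system this would be run with sudo against /etc/exports, followed by sudo exportfs -ra as described above.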

Ah, nice, glad you’ve worked it out. I did a bit of googling about no_root_squash and, from the looks of it, it doesn’t seem like the best idea (see “Why we should not use the no_root_squash Option” on Server Buddies), so I went on a big tangent which has only really worked out recently.

I managed to somewhat solve the issue I was having by creating a dummy file in each folder I created for the NFS share. That way Docker avoids an initialization step. I don’t have access to my desktop, but I found a good post about it on Stack Overflow. I also gave up and then just used the TrueCharts version.

In general my workflow is:

touch test then run chown nfsdocker test and chgrp nfsdocker test

    version: "2.1"
    services:
      code-server:
        image: lscr.io/linuxserver/code-server:latest
        container_name: code-server
        environment:
          - PUID=1001
          - PGID=3002
          - TZ=Etc/UTC
          - PASSWORD=password #optional
          - HASHED_PASSWORD= #optional
          - SUDO_PASSWORD= #optional
          - SUDO_PASSWORD_HASH= #optional
          - DEFAULT_WORKSPACE=/config/workspace #optional
        volumes:
          - code_server:/config
        ports:
          - 8443:8443
        restart: unless-stopped
    volumes:
      code_server:
        external: true

This setup has worked for me on a few containers, such as code-server, Nginx Proxy Manager and Node. However, not Nextcloud, lol. It worked for the database container, but the Nextcloud app itself wasn’t having it.

I don’t know if you tried Tailscale or not, but it’s very picky about what it can find on my network. Running as a subnet router, it struggles to find certain ports on my machine, not sure why. Specifically Jellyfin running in TrueCharts.

I want to be able to host Nextcloud on the Internet, so I’m using Nginx Proxy Manager, which I’ve managed to get working, more or less. If anyone is interested in that setup, let me know. I used cloudflare-ddns and Nginx Proxy Manager to get it working. Steps are:

DDNS:

    version: '2'
    services:
      cloudflare-ddns:
        image: oznu/cloudflare-ddns:latest
        restart: always
        environment:
          - ZONE=YOURDOMAIN
          - PROXIED=true
          - API_KEY=YOURKEY

And that’s all you should need to do.

The easiest way around the NFS permission issues for me was to just run the containers as the uid:gid of the user on the TrueNAS side, e.g.:

    version: '2'

    volumes:
      nextcloud:
      db:

    services:
      db:
        image: postgres
        user: "3001:3001"
        restart: always
        volumes:
          - /nfs/nextcloud/db:/var/lib/postgresql/data
        environment:
          - POSTGRES_USER=nextcloud
          - POSTGRES_PASSWORD=nextcloud

      app:
        image: nextcloud
        user: "3001:3001"
        restart: always
        ports:
          - 8080:80
        links:
          - db
        volumes:
          - /nfs/nextcloud/data:/var/www/html
        environment:
          - POSTGRES_DB=nextcloud
          - POSTGRES_HOST=db
          - POSTGRES_USER=nextcloud
          - POSTGRES_PASSWORD=nextcloud

You might need to create the necessary directory structure beforehand, as some containers do some hackery internally with su(do), but this fixed Nextcloud using the weird UID of 33.
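Creating the structure beforehand could look roughly like this. It is a sketch only: the function name `prepare_nextcloud_dirs` is made up, and the paths and 3001:3001 owner are simply the ones from the compose file above, so adjust them to your own dataset and user.

```shell
#!/bin/sh
# Hypothetical helper: create the two bind-mount directories and hand
# them to the UID:GID the containers run as.
prepare_nextcloud_dirs() {
  base="$1"    # e.g. /nfs/nextcloud
  owner="$2"   # e.g. 3001:3001, matching the user: lines in the compose file
  mkdir -p "$base/db" "$base/data" || return 1
  chown "$owner" "$base/db" "$base/data"
}

# Example (needs enough privileges on the NFS mount to chown):
#   prepare_nextcloud_dirs /nfs/nextcloud 3001:3001
```

Doing this before the first deploy means the containers never have to create or chown the directories themselves, which is the step that tends to fail on a root-squashed share.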

I’m looking to do something similar to this, but including OPNsense and some 10G NICs, and I’m running into trouble juggling the virtualization.

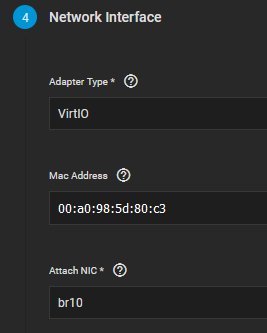

I’m currently running Proxmox on the metal, with 3 VMs: OPNSense, TrueNAS Scale, and an Alpine Linux VM for docker/portainer. I chose Proxmox because I can keep all of the NICs on the host and use OpenVSwitch + VirtIO to handle bridging everything with multiple VLANs and good performance.

This makes the network virtualization easy, but then I run into issues with the disks for TrueNAS. I have 5 spinning disks and 2 NVMe disks for TrueNAS (Proxmox and the VM boot drives are on a ZFS mirror of two additional SATA SSDs). Four of the spinning disks fully populate the onboard SATA controller and that plus the two NVMe can be passed through via their IOMMU groups no problem. The fifth spinning disk has to be on the same SATA controller as the Proxmox SSDs, however. Physically, there is no way to get this last disk into a controller that can be passed through, it’s an ITX build and all PCIe is fully populated (2x M.2 NVMe, M.2 SATA HBA, x16 dual 10G NIC).

I see a few possibilities but I’m not sure which path would be best:

I’ve ended up using regular VLAN interfaces and bridges, instead of open-vswitch. This requires:

If you’re using a LAG, configure bond0 with the appropriate settings and member interfaces:

For each VLAN, create a vlanX interface with bond0 (or the appropriate physical interface, if not a LAG):

For each VLAN, create a brX interface with vlanX as a member interface. If you want the TrueNAS host to have an address on a VLAN, this is where you put it as an alias:

Attach the VMs’ NICs to the appropriate brX interfaces:
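For anyone who thinks in terms of plain Linux networking, the vlanX/brX relationship in the steps above corresponds roughly to the iproute2 commands below. This is only an illustration: on TrueNAS the interfaces are normally created through the web UI so they persist, the helper name `vlan_bridge_cmds` and VLAN ID 10 are made up, and the function only prints the commands rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: print (not execute) the iproute2 commands that
# build one VLAN interface on a parent and bridge it.
vlan_bridge_cmds() {
  parent="$1"   # bond0, or a physical interface if there is no LAG
  vid="$2"      # VLAN ID
  echo "ip link add link $parent name vlan$vid type vlan id $vid"
  echo "ip link add name br$vid type bridge"
  echo "ip link set dev vlan$vid master br$vid"
  echo "ip link set dev vlan$vid up"
  echo "ip link set dev br$vid up"
}

vlan_bridge_cmds bond0 10
```

The VM NICs then attach to brX, which is why the host address for a VLAN, if any, belongs on the bridge rather than on the VLAN interface.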

So in 2023, are there still issues with the host networking? I don’t want to commit to the workarounds if it’s been fixed. Cheers

Sadly, iXsystems has still not solved the issue. So yeah, the workaround is still needed.

You can see the other guide that’s being updated on this here:

Hope this helps someone out: I was having a bunch of problems setting up the NFS mount in fstab.

I tested on a different setup and found that it was having some issues with NFSv4. It seems to work much better with NFSv3, so the fstab entry should look like this:

192.168.1.1:/mnt/tank/NFSdocker/nfsdckr /nfs nfs rw,vers=3,async,noatime,hard 0 0

Worked a lot better for me this way.

Here is some info I found on a NetApp forum about the differences; it seems like it shouldn’t affect performance by any meaningful amount.

I had the NFS mount issue on boot as well. After trying a lot of the things mentioned here and elsewhere without success, I did some investigation of my own. It seems like network-online.target doesn’t necessarily wait for the interface/link that is connected to the host network, so it doesn’t really guarantee that docker.service and the NFS mount will wait for the host network, even though both depend on network-online.target by default.

But the systemd documentation (I can’t post links, but do a web search for ‘systemd NETWORK_ONLINE’) has an example, at the end, for putting extra requirements on network-online.target. So I created and enabled a custom service that will not complete until it can successfully ping the TrueNAS host, and made it a dependency of network-online.target (WantedBy=). This is the only thing that has actually worked for me!

    # /etc/systemd/system/wait-for-truenas.service
    [Unit]
    DefaultDependencies=no
    After=nss-lookup.target
    Before=network-online.target

    [Service]
    ExecStart=sh -c 'while ! ping -c 1 <truenas-host-ip>; do sleep 1; done'
    Type=oneshot
    RemainAfterExit=yes

    [Install]
    WantedBy=network-online.target
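One thing to be aware of: the ping loop in that unit retries forever, so if the TrueNAS host is down the loop never finishes. A bounded variant of the same idea could be sketched like this; `wait_for` and the limit of 120 attempts are made up for illustration and are not part of the original unit.

```shell
#!/bin/sh
# Hypothetical helper: retry a command once per second, giving up after
# a maximum number of attempts instead of looping forever.
wait_for() {
  max_attempts="$1"; shift
  attempt=1
  until "$@"; do
    [ "$attempt" -ge "$max_attempts" ] && return 1
    attempt=$((attempt + 1))
    sleep 1
  done
}

# Example: give the TrueNAS host up to 120 attempts to answer a ping.
#   wait_for 120 ping -c 1 <truenas-host-ip>
```

Whether giving up is what you want depends on the setup: with a limit, boot carries on and the NFS mount may simply fail, whereas the original loop holds things back until the host answers.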

Sweet baby jeebus - I’m glad I skipped to the end after 2 hours of fighting this thing.

The “wait-for-truenas.service” resolved my issue - after countless failures using other methods listed above.

All I had to do was create the file as you described (with updated IP).

Then:

    sudo systemctl daemon-reload
    sudo systemctl enable wait-for-truenas.service
    reboot

Confirm it’s working - cry single tear of joy.

I will also note that permissions issues cropped up next, and following the ACL advice listed here by @Famzilla resolved it for me.

I can’t link, so search for: “I found that by setting the ACL (recursively) for my vm-persistent-storage to these settings actually gets everything to work.”

You can also register for a free limited (3-node) Portainer “business” license, which is the direction I went.