Hello everyone, I decided to write this guide, which combines all the solutions found in this post by Wendell:

Post

Disclaimer:

This guide is based on my personal experience with two TrueNAS systems; follow it at your own discretion. I will try to help where I can, and I'll update this guide if I missed something.

Thanks:

I want to thank the following people:

@wendell for the original guide, and for his help with everything.

A user by the name of Jip-Hop on GitHub, who wrote the guide on connecting the bridge so that VMs don't lose internet access, and who created the original script.

A user by the name of tprelog on GitHub, for adapting the script.

@talung, for showing me that the built-in Kubernetes implementation is flawed and inefficient, and for changes to the script (see comments).

Main issues:

The first issue this guide aims to solve is that TrueNAS SCALE uses k3s (a lightweight Kubernetes) to run its apps, which is neither very performant nor useful for most of us with just one NAS.

The second issue is that VMs on TrueNAS cannot reach the host. Wendell showed a working solution in his video, but it interferes with the solution to the first issue.

I included the VM solution here because, once you use the script to run Docker natively, VMs set up with Wendell's solution lose internet access.

After some back and forth with the creator of the script, I was pointed to Jip-Hop's post, which has a solution that allows both.

Overview:

The solution will look like so:

1. Native Docker and Docker Compose

2. Portainer to easily manage all other containers

3. Optional settings and recommendations

4. Virtual bridge to allow VMs to see TrueNAS host shares

Let’s get started!

Table of Contents

Stage 1—Create the Dataset

Stage 2—Making Sure everything is ready for Docker

Stage 3—Getting Docker to run Natively

Stage 4—Portainer

Stage 4a—Optional things in Portainer

Stage 5—Enabling VM host share access

First off, if you have containers or apps in TrueNAS, note their volumes and docker-compose files (where relevant), as you'll have to recreate them.

Stage 1—Create the Dataset

This is obvious, but it has some pitfalls that users new to TrueNAS, and to Linux in general, might not be aware of.

We’ll need to create a dataset in the pool you use for active workloads, not just cold storage. So if you have an SSD pool or another faster pool dedicated to that, choose that one.

To do that, go to Storage in TrueNAS and find the pool you will be using.

Click the three dots to the right of the first line of that pool and choose Add Dataset.

Now you’ll see the creation screen. Give it a name and change nothing else. It’s critical that you leave the Share Type as Generic instead of changing it to SMB: SMB breaks chmod, which Docker containers rely on.

Now that you have the dataset, we’ll prepare the groundwork for the move to Docker.
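If you want to double-check the dataset from the TrueNAS shell, its ACL type tells you whether it was created with the SMB preset. Below is a minimal sketch, assuming (as far as I know) that the SMB preset sets `acltype` to `nfsv4`, while a Generic dataset reports `posix` or `off`. The `acl_ok` helper and the dataset name `tank/Docker` are hypothetical; use your own pool and dataset names.

```bash
#!/usr/bin/env bash
# acl_ok VALUE: hypothetical helper; succeeds when a ZFS acltype value looks
# safe for Docker. Assumption: SMB-preset datasets report "nfsv4".
acl_ok() {
  case "$1" in
    posix|posixacl|off|noacl) return 0 ;;  # POSIX ACLs or none: chmod works
    *) return 1 ;;                         # nfsv4 (SMB preset) or unexpected
  esac
}

# Live usage on TrueNAS (tank/Docker is an assumed dataset name):
#   value=$(zfs get -H -o value acltype tank/Docker)
#   acl_ok "$value" && echo "OK: $value" || echo "Recreate as Generic: $value"
```

If the check fails, it is usually quicker to delete and recreate the empty dataset as Generic than to try to convert it.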

Stage 2—Making Sure everything is ready for Docker

First things first: we have to make sure the physical interface has an IP address and that you can reach TrueNAS on that IP. We do not want to get locked out of TrueNAS and have to do these things physically at the server.

So go to Network, locate the active physical interface, and click on it.

Now, make sure DHCP is off, and add an alias in the following format:

192.168.0.2/24

where 192.168.0.2 is the IP on which TrueNAS serves its web interface.

Click Test, then Save, and make sure nothing broke. If you came here from Wendell’s guide, continue by deleting the br interface.

Don’t forget to exclude this IP from your router’s DHCP pool, or pick an address outside the pool, so that no other device gets assigned this IP.

Since DHCP is now off, set a DNS server manually: pick your DNS server of choice and enter its IP in TrueNAS > Network > Global Configuration > Nameserver.
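Checking whether an address falls inside the DHCP pool is simple integer arithmetic on the four octets. A minimal sketch; the helper names and the pool range 192.168.0.100 to 192.168.0.200 are hypothetical, so use the range shown in your router's DHCP settings.

```bash
#!/usr/bin/env bash
# ip_to_int: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_dhcp_pool IP START END: succeed if IP lies inside START..END (inclusive).
in_dhcp_pool() {
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "$2")
  end=$(ip_to_int "$3")
  (( ip >= start && ip <= end ))
}

# Example with an assumed pool of 192.168.0.100-192.168.0.200:
if in_dhcp_pool 192.168.0.2 192.168.0.100 192.168.0.200; then
  echo "192.168.0.2 is inside the DHCP pool: pick another IP or reserve it"
else
  echo "192.168.0.2 is outside the DHCP pool"
fi
```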
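Because the Docker script downloads packages, it's worth confirming that name resolution works from the TrueNAS shell before going further. A minimal sketch using `getent hosts`, which resolves names through the system resolver; `check_dns` is a hypothetical helper, and download.docker.com is simply the Docker repository host the script uses.

```bash
#!/usr/bin/env bash
# check_dns NAME...: report whether each name resolves via the system resolver.
# Returns non-zero if any name fails to resolve.
check_dns() {
  local name rc=0
  for name in "$@"; do
    if getent hosts "$name" >/dev/null; then
      echo "OK:   $name"
    else
      echo "FAIL: $name"
      rc=1
    fi
  done
  return "$rc"
}

# On TrueNAS, check the Docker repository host used by the script:
#   check_dns download.docker.com
```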

Next, some cleanup for people who used or set something up in the TrueNAS apps, or who tried Wendell’s solution with the bridge.

If you are starting from a fresh TrueNAS, skip to Stage 3.

Go to TrueNAS > Apps and stop and remove every app you created. Once that’s done, go to:

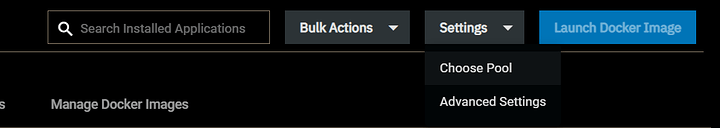

and click Advanced Settings. There, make sure the IP is set to the physical interface, not to a bridge.

Once that’s done, go back to the Settings menu shown in the image and click Unset Pool. Give it time; when it’s done, move on to Stage 3.
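Before moving on, you can confirm that k3s is neither running nor enabled, because the Docker script refuses to continue otherwise. A minimal sketch: `k3s_blocks` is a hypothetical helper applying the same rule as the script to the output of `systemctl is-active` and `systemctl is-enabled`.

```bash
#!/usr/bin/env bash
# k3s_blocks ACTIVE ENABLED: succeed (meaning "k3s is still in the way") when
# k3s is either active or enabled, mirroring the check in the Docker script.
k3s_blocks() {
  [[ "$1" == "active" || "$2" == "enabled" ]]
}

# Live usage on TrueNAS:
#   if k3s_blocks "$(systemctl is-active k3s)" "$(systemctl is-enabled k3s)"; then
#     echo "k3s is still active or enabled: unset the Apps pool first"
#   fi
```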

Stage 3—Getting Docker to run Natively

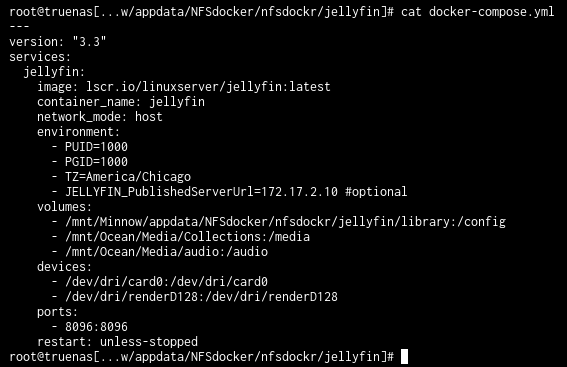

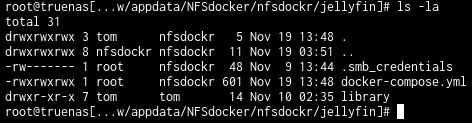

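Create a script file somewhere accessible to you on your pool, either from the TrueNAS shell or over a share, and paste the script below into it. If you create it from Windows, save it with Unix (LF) line endings, or bash will fail to run it. Then edit the variables under "Vars you need to change" to point to your storage.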

```bash
#!/usr/bin/env bash
# Enable docker and docker-compose on TrueNAS SCALE (no Kubernetes)
# Follow the guide in this post before using this script:
# https://forum.level1techs.com/t/truenas-scale-native-docker-vm-access-to-host-guide/190882
# This script is a hack! Use it at your own risk!!
# Edit all the vars under "Vars you need to change" to point to your storage.
#
# Schedule this script to run via System Settings -> Advanced -> Init/Shutdown Scripts
# Click Add -> Type: Script and choose this script -> When: choose to run as Post Init

# Send all output (stdout and stderr) to the log file
exec 1>/tmp/enable-docker.log 2>&1

# Vars you need to change:
# set a path to your docker dataset
docker_dataset="/path/to/Docker"
# set the docker_daemon path on your storage for it to survive upgrades
new_docker_daemon="/path/to/daemon.json"
# apt sources persistence
new_apt_sources="/path/to/aptsources.list"

echo "§§ Starting script! §§"

# Everything below changes the system, so check for root first
if [[ $EUID -ne 0 ]]; then
  echo "§§ Please run this script as root or using sudo §§"
  exit 1
fi

# Native Docker must not run alongside the k3s-based apps
if systemctl list-units --type=service --all | grep -q 'k3s'; then
  if [[ "$(systemctl is-enabled k3s)" == "enabled" ]] || [[ "$(systemctl is-active k3s)" == "active" ]]; then
    echo "§§ You cannot use this script while k3s is enabled or active §§"
    exit 1
  fi
  echo "§§ k3s is present but not active or enabled §§"
else
  echo "§§ k3s is not present on the system §§"
fi

mount -o remount,rw /
install-dev-tools

echo "§§ Checking apt and dpkg §§"
for file in /bin/apt* /bin/dpkg*; do
  if [[ ! -x "$file" ]]; then
    echo "§§ $file not executable, fixing... §§"
    chmod +x "$file"
  else
    echo "§§ $file is already executable §§"
  fi
done

echo "§§ apt update §§"
apt-get update -qq

echo "§§ Linking apt sources to your storage for persistence §§"
aptsources="/etc/apt/sources.list"
timestamp=$(date +"%Y%m%d_%H%M%S")
backup_file="/tmp/sources_backup_$timestamp.list"
trap 'rm -f "$backup_file"' EXIT INT TERM
# A regular file here means a first run or a fresh upgrade: move it to storage
if [[ -f "$aptsources" ]] && [[ ! -L "$aptsources" ]]; then
  cp "$aptsources" "$new_apt_sources"
  mv "$aptsources" "$aptsources.old"
fi
if [[ ! -f "$new_apt_sources" ]]; then
  touch "$new_apt_sources"
fi
# -e is false for a missing or dangling link; -f lets ln replace a dangling one
if [[ ! -e "$aptsources" ]]; then
  ln -sf "$new_apt_sources" "$aptsources"
fi
cp "$new_apt_sources" "$backup_file"

echo "§§ Fix the trust.gpg warnings §§"
# Only needed once: later runs find the legacy keyring already moved aside
if [[ -f /etc/apt/trusted.gpg ]]; then
  mkdir -p /etc/apt/trusted.gpg.d
  for key in $(gpg --no-default-keyring --keyring /etc/apt/trusted.gpg --list-keys --with-colons | awk -F: '/^pub:/ { print $5 }'); do
    echo "Processing key: $key"
    gpg --no-default-keyring --keyring /etc/apt/trusted.gpg --export --armor "$key" >/etc/apt/trusted.gpg.d/"$key".asc
  done
  mv /etc/apt/trusted.gpg /etc/apt/trusted.gpg.backup
fi

echo "§§ Docker Checks §§"
# jq is needed further down to merge daemon.json
apt-get install -y -qq ca-certificates curl gnupg lsb-release jq
if [[ ! -f /etc/apt/keyrings/docker.gpg ]]; then
  echo "§§ Missing Keyrings §§"
  mkdir -p /etc/apt/keyrings
  chmod 755 /etc/apt/keyrings
  curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /etc/apt/keyrings/docker.gpg
  chmod a+r /etc/apt/keyrings/docker.gpg
else
  echo "§§ Keyrings Exist §§"
fi

if ! grep -q "https://download.docker.com/linux/debian" /etc/apt/sources.list; then
  echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian $(lsb_release -cs) stable" | tee -a /etc/apt/sources.list >/dev/null
  apt-get update -qq
else
  echo "§§ Docker List: §§"
  cat /etc/apt/sources.list
fi

DCRCHK=$(apt list --installed 2>/dev/null | grep docker || true)
if [[ -z "$(command -v docker)" ]] || [[ -z "$DCRCHK" ]]; then
  echo "Docker executable not found"
  apt-get install -y -qq docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
fi
# Look Docker up after a possible install so the summary lines are accurate
Docker=$(command -v docker || true)
DockerV=$(docker --version || true)

chmod +x /usr/bin/docker*
install -d -m 755 -- /etc/docker
if [[ ! -f /etc/docker.env ]]; then
  touch /etc/docker.env
fi
. ~/.bashrc
echo "§§ Which Docker: $Docker §§"

echo "§§ Docker storage-driver §§"
# /etc/version looks like "22.12.4.2": keep "22.12", drop the dot -> 2212
version=$(cut -c 1-5 </etc/version | tr -d .)
if ! [[ "$version" =~ ^[0-9]+$ ]]; then
  echo "version is not an integer: $version"
  exit 1
elif [[ "$version" -le 2204 ]]; then
  storage_driver="zfs"
else
  # 22.12 and later
  storage_driver="overlay2"
fi

echo "§§ Docker daemon.json §§"
echo "§§ Storage Driver: $storage_driver §§"
echo "§§ Dataset: $docker_dataset §§"
echo "§§ Attempting to create JSON configuration... §§"
# read -d '' exits non-zero at end of input; that is expected here
read -r -d '' JSON <<END_JSON
{
  "data-root": "$docker_dataset",
  "storage-driver": "$storage_driver",
  "exec-opts": ["native.cgroupdriver=cgroupfs"]
}
END_JSON
# stdout already goes to the log (see exec above); a separate ">>" to the
# same file would be overwritten by later output
echo "§§ Generated JSON content: §§"
echo "$JSON"

docker_daemon="/etc/docker/daemon.json"
echo "§§ Checking $docker_daemon §§"
if ! zfs list "$docker_dataset" &>/dev/null; then
  echo "§§ Dataset not found: $docker_dataset §§"
  exit 1
fi

echo "§§ Checking file: $docker_daemon §§"
config_changed=""
# Replace a regular daemon.json with a link to the persistent copy
if [[ -f "$docker_daemon" ]] && [[ ! -L "$docker_daemon" ]]; then
  rm -f "$docker_daemon"
fi
if [[ ! -f "$new_docker_daemon" ]]; then
  touch "$new_docker_daemon"
fi
if [[ ! -e "$docker_daemon" ]]; then
  ln -sf "$new_docker_daemon" "$docker_daemon"
  # Docker (e.g. freshly reinstalled after an upgrade) was not using this config yet
  config_changed=1
fi

current_json=$(cat "$docker_daemon" 2>/dev/null)
# An empty file makes jq print nothing, so treat it as an empty object
[[ -z "$current_json" ]] && current_json="{}"
merged_json=$(echo "$current_json" | jq --argjson add "$JSON" '. * $add')

if [[ -n "$merged_json" ]] && [[ "$merged_json" != "$current_json" ]]; then
  echo "§§ Updating file: $docker_daemon §§"
  echo "$merged_json" | tee "$docker_daemon" >/dev/null
  config_changed=1
  if lspci | grep -i nvidia >/dev/null 2>&1; then
    echo "§§ NVIDIA graphics card detected. §§"
    if command -v nvidia-ctk >/dev/null; then
      nvidia-ctk runtime configure --runtime=docker
    else
      echo "§§ nvidia-ctk not found, skipping GPU runtime setup §§"
    fi
  else
    echo "§§ No NVIDIA graphics card found. §§"
  fi
fi

# Enable Docker, start it if needed, and restart it only when the config changed
if [[ "$(systemctl is-enabled docker)" != "enabled" ]]; then
  echo "§§ Enabling Docker §§"
  systemctl enable docker
fi
if [[ "$(systemctl is-active docker)" != "active" ]]; then
  echo "§§ Starting Docker §§"
  systemctl start docker
elif [[ -n "$config_changed" ]]; then
  echo "§§ Restarting Docker §§"
  systemctl restart docker
fi

echo "§§ Which Docker: $Docker §§"
echo "§§ Docker Version: $DockerV §§"
echo "§§ Script Finished! §§"
```
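The storage-driver choice in the script depends only on the first five characters of `/etc/version`. Here is the same rule as a standalone function, so you can see what your release maps to before running anything; `storage_driver_for` is a hypothetical name, and the mapping (ZFS driver up to 22.04, overlay2 from 22.12 on) is taken from the script itself.

```bash
#!/usr/bin/env bash
# storage_driver_for VERSION: same rule as the script, e.g.
#   "22.12.4.2" -> first five chars "22.12" -> "2212" -> overlay2
storage_driver_for() {
  local v
  v=$(printf '%s' "$1" | cut -c 1-5 | tr -d .)
  if ! [[ "$v" =~ ^[0-9]+$ ]]; then
    echo "unknown"
    return 1
  elif (( v <= 2204 )); then
    echo "zfs"
  else
    echo "overlay2"
  fi
}

# On TrueNAS: storage_driver_for "$(cat /etc/version)"
```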

You’re now set on the Docker side of things and can use docker and docker compose commands freely. If you’d like to manage everything with Portainer and set up a few extras, keep reading.

Stage 4—Portainer

Now that we have Docker Compose, we can deploy Portainer.

Create a docker-compose.yml file somewhere on your pool and paste the following into it, replacing /path/to/Docker with the path to your Docker dataset:

```yaml
name: portainer
services:
  portainer-ce:
    container_name: portainer
    image: portainer/portainer-ce:latest
    mem_limit: "2147483648"
    networks:
      default: null
    pids_limit: 4096
    ports:
      - mode: ingress
        target: 8000
        published: "8000"
        protocol: tcp
      - mode: ingress
        target: 9443
        published: "29443"
        protocol: tcp
    read_only: true
    restart: unless-stopped
    volumes:
      - type: bind
        source: /var/run/docker.sock
        target: /var/run/docker.sock
        bind:
          create_host_path: true
      - type: bind
        source: /path/to/Docker/Portainer/data
        target: /data
        bind:
          create_host_path: true
      - type: bind
        source: /etc/localtime
        target: /etc/localtime
        read_only: true
networks:
  default:
    name: portainer_default
```

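Save it, then run `docker compose up -d` from the folder that contains the file.

You should now be able to reach Portainer at https://192.168.0.2:29443, using the IP you set for TrueNAS in Stage 2 (host port 29443 maps to Portainer's HTTPS port 9443).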
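Portainer can take a few seconds to come up after `docker compose up -d`. If you script this step, a small wait loop avoids hitting a refused connection. A minimal sketch using Bash's built-in `/dev/tcp` redirection; `wait_for_port` is a hypothetical helper, and 192.168.0.2 is the example TrueNAS address from Stage 2.

```bash
#!/usr/bin/env bash
# wait_for_port HOST PORT [TIMEOUT]: poll once per second until a TCP
# connection to HOST:PORT succeeds; give up after TIMEOUT attempts (default 60).
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60} i
  for (( i = 0; i < timeout; i++ )); do
    # Bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Example: wait for Portainer's published HTTPS port, then print its URL.
#   wait_for_port 192.168.0.2 29443 && echo "Portainer: https://192.168.0.2:29443"
```

This only proves the port accepts connections, not that the web UI has finished starting, but in practice that is usually close enough.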

Stage 4a—Optional things in Portainer

In Portainer, I have a few things that I prefer to set for ease of use and maintenance.

- Go to Environments > local and, in the environment details, enter the server's IP from Stage 2 in the Public IP field, then save. With this, the published-port links in Portainer point at your server, so you can open your containers directly from Portainer.

- You can also stop Portainer from asking for a username and password every 8 hours if your setup is secure. Go to Settings > Authentication and set the session lifetime to what you feel comfortable with.

- I like to set up Watchtower so that images and containers are always up to date without me having to do it manually. In Portainer, go to Stacks > Add stack and paste this inside:

```yaml
name: watchtower
services:
  watchtower:
    container_name: watchtower
    image: containrrr/watchtower
    command:
      - -s
      - "0 30 0 * * *"
      - --cleanup
    volumes:
      - type: bind
        source: /etc/localtime
        target: /etc/localtime
        bind:
          create_host_path: true
        read_only: true
      - type: bind
        source: /var/run/docker.sock
        target: /var/run/docker.sock
        bind:
          create_host_path: true
    restart: unless-stopped
networks:
  default:
    name: watchtower_default
```

By default, Watchtower runs 24 hours after it’s created, and every 24 hours after that. In my compose file I set it to run at half past midnight instead; you can change that in the "0 30 0 * * *" line.

That is a cron expression. Watchtower expects six fields, with seconds first, so "0 30 0 * * *" means 00:30:00 every day. If you don’t know how to build one, you can use https://crontab.cronhub.io/ or a similar site; if it gives you the usual five fields, add a 0 in front for the seconds.
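Converting a five-field expression into Watchtower's six-field form is mechanical, so here is a minimal sketch. `to_watchtower_cron` is a hypothetical helper; it only checks the field count and prepends a zero seconds field, and does not validate the individual values.

```bash
#!/usr/bin/env bash
# to_watchtower_cron "MIN HOUR DOM MON DOW": convert a 5-field cron expression
# into the 6-field form (seconds first) that Watchtower's schedule uses.
to_watchtower_cron() {
  local -a fields
  # read -a splits on whitespace without glob-expanding the '*' fields.
  read -r -a fields <<<"$1"
  if (( ${#fields[@]} != 5 )); then
    echo "expected 5 fields, got ${#fields[@]}" >&2
    return 1
  fi
  printf '0 %s\n' "${fields[*]}"
}

to_watchtower_cron "30 0 * * *"   # 00:30 every day -> prints: 0 30 0 * * *
```

Quote the result when you paste it into the compose file, as in the example above, so YAML treats it as a single string.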

Stage 5—Enabling VM host share access

Finally, if you plan to use VMs that need access to the host (for example, to its shares), we’ll create a bridge in TrueNAS for them.

This is explained in Wendell’s video, and it has not been fixed since. As I understand it, a VM's NIC is attached to the physical network card as a macvtap device, and macvtap does not pass traffic between a guest and its own host, so the VM needs a second NIC on a bridge that the host also has an address on.

Go to Network > Interfaces > Add, set the type to Bridge, and name it br0. Leave the bridge members empty, make sure DHCP is off, and give it an alias with an IP outside your real network’s segment. For example, if your network is 192.168.0.0/24, you can give this bridge 192.168.254.1/24.

Test and save your settings; if all goes well, we can move on to the VMs.

In Virtualization, click the VM you want and go to Devices > Add to add another NIC. For this NIC, it’s important that you attach it to br0; then save.

Start your VM and, inside the guest OS, set the IP of the second NIC manually as follows:
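To be sure the bridge subnet really is separate from your LAN, you can compare the two network ranges. A minimal sketch; `subnets_overlap` and its helpers are hypothetical, and the addresses are the examples from the paragraph above.

```bash
#!/usr/bin/env bash
# ip_to_int: dotted IPv4 address -> 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# net_range CIDR: print the first and last address of the network as integers.
net_range() {
  local ip=${1%/*} prefix=${1#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# subnets_overlap CIDR_A CIDR_B: succeed if the two networks share any address.
subnets_overlap() {
  local a_start a_end b_start b_end
  read -r a_start a_end <<<"$(net_range "$1")"
  read -r b_start b_end <<<"$(net_range "$2")"
  (( a_start <= b_end && b_start <= a_end ))
}

if subnets_overlap 192.168.0.1/24 192.168.254.1/24; then
  echo "Overlap: choose a different bridge subnet"
else
  echo "OK: the bridge subnet is separate from the LAN"
fi
```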

IP: 192.168.254.2

Subnet Mask: 255.255.255.0

Gateway: 192.168.254.1
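TrueNAS shows the bridge as 192.168.254.1/24, while most guest OS network dialogs ask for a dotted subnet mask instead of a prefix length. A minimal sketch of the conversion; `prefix_to_mask` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# prefix_to_mask PREFIX: convert a CIDR prefix length (0-32) to a dotted mask.
prefix_to_mask() {
  local prefix=$1 mask
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( (mask >> 24) & 255 )).$(( (mask >> 16) & 255 )).$(( (mask >> 8) & 255 )).$(( mask & 255 ))"
}

prefix_to_mask 24   # prints 255.255.255.0

# On a Linux guest, a temporary (non-persistent) equivalent of the settings
# above would be, assuming the second NIC is called ens4:
#   sudo ip addr add 192.168.254.2/24 dev ens4
```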

Once you save that, you should be able to reach the TrueNAS shares at 192.168.254.1 (for example, `\\192.168.254.1` from Windows).

If not, add the following line to the VM's hosts file (`/etc/hosts` on Linux, `C:\Windows\System32\drivers\etc\hosts` on Windows):

192.168.254.1 NameOfYourTrueNASServer

Save it, and the server name should resolve to the bridge address from then on.
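On a Linux VM, the hosts-file step can be made safe to repeat, so running it twice doesn't duplicate the line. A minimal sketch; `add_hosts_entry` is hypothetical, and it takes the file path as an argument so you can try it on a scratch file before pointing it at /etc/hosts from a root shell.

```bash
#!/usr/bin/env bash
# add_hosts_entry FILE IP NAME: append "IP<TAB>NAME" to FILE unless a line
# already maps IP to NAME (NAME may appear among several aliases).
add_hosts_entry() {
  local file=$1 ip=$2 name=$3
  if awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    echo "Already present: $ip $name"
  else
    printf '%s\t%s\n' "$ip" "$name" >>"$file"
    echo "Added: $ip $name"
  fi
}

# As root on the VM:
#   add_hosts_entry /etc/hosts 192.168.254.1 NameOfYourTrueNASServer
```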

That’s it! I hope this helps you and saves you the time I had to put into these issues.