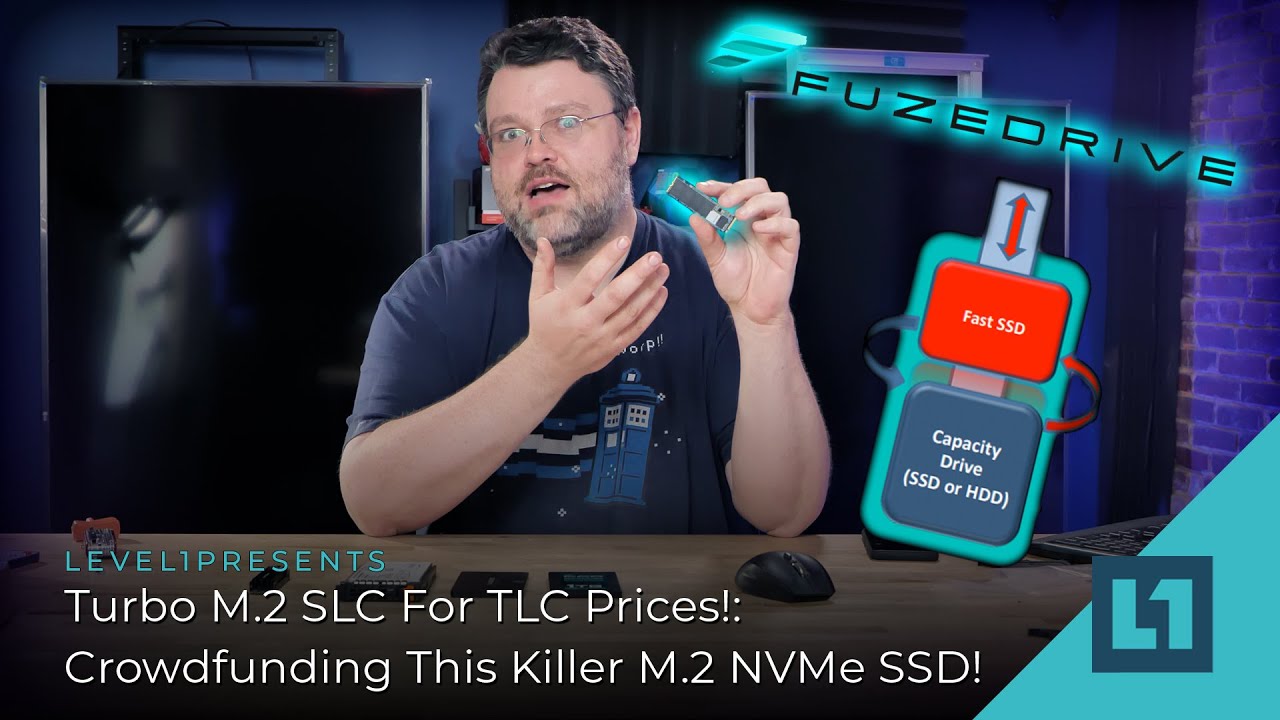

I did a video on the Enmotus FuzeDrive – an SSD with a dedicated 128GB SLC tier, with the rest as QLC flash. It’s a 1.65TB drive overall.

When I did the video, a lot of people asked about using this on Linux, because on Windows it takes a special driver to manage moving data in and out of the tiers safely.

On Linux, however, you can use either LVM or bcache with this drive. It can be configured as tiering (aka Sir Not-Appearing-in-This-How-To) or as a write-back cache.

What is the difference between tiering and a long-lived write-back cache?

The terminology that is used in the Linux kernel modules and how-to documentation is subtly different from what most industry folks say.

With tiering, you always have all the combined space of all levels of the tier. With caches, in general, the cache represents a subset of data elsewhere.

Write-back caching: data is written to the cache first. Once the write to the cache completes, the overall write is reported as complete, even though the data still has to be flushed at some later point to whatever the cache is caching.

Write-through caching: data is written straight to the underlying device that is being cached, which is typically much slower. The operation is reported as complete only when that device signals it has finished the write. Typically the data is retained in the faster cache level as well.

There is also write-around caching, which is left as an exercise for the reader.

The FuzeDrive SSD shows up as one big block device (on my system, /dev/nvme0n1), just like a regular NVMe device. However, the SLC region is fixed at the following LBA ranges on my units*:

1.6TB FuzeDrive SSD (~128GB SLC)

SLC region: LBA 0 to 268697599

QLC region: LBA 268697600 to 3027952367

900GB FuzeDrive (~24 GB SLC):

SLC region: LBA 0 to 50380799

QLC region: LBA 50380800 to 1758174767
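As a sanity check, the LBA ranges above can be turned into sizes with plain shell arithmetic. This is a minimal sketch that assumes 512-byte logical sectors (which is what gdisk reports for this drive further down); the helper names `region_bytes` and `to_gib` are made up for illustration.

```shell
#!/bin/sh
# Sizes implied by the LBA ranges above, assuming 512-byte logical sectors.
SECTOR_BYTES=512

# region_bytes FIRST_LBA LAST_LBA -> size in bytes (the range is inclusive)
region_bytes() {
  echo $(( ($2 - $1 + 1) * SECTOR_BYTES ))
}

# to_gib BYTES -> whole GiB, rounded down
to_gib() {
  echo $(( $1 / 1024 / 1024 / 1024 ))
}

slc_bytes=$(region_bytes 0 268697599)
qlc_bytes=$(region_bytes 268697600 3027952367)

echo "1.6TB unit: SLC $(to_gib "$slc_bytes") GiB, QLC $(to_gib "$qlc_bytes") GiB"
# prints: 1.6TB unit: SLC 128 GiB, QLC 1315 GiB
```

The 128 GiB figure lines up with the 128.1G partition that lsblk shows for the SLC region.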

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 29.9M 1 loop /snap/snapd/8542

loop1 7:1 0 55M 1 loop /snap/core18/1880

loop2 7:2 0 255.6M 1 loop /snap/gnome-3-34-1804/36

loop3 7:3 0 62.1M 1 loop /snap/gtk-common-themes/1506

loop4 7:4 0 49.8M 1 loop /snap/snap-store/467

sda 8:0 0 465.8G 0 disk

├─sda1 8:1 0 512M 0 part /boot/efi

└─sda2 8:2 0 465.3G 0 part

├─vgubuntu-root 253:0 0 464.3G 0 lvm /

└─vgubuntu-swap_1 253:1 0 980M 0 lvm [SWAP]

nvme0n1 259:0 0 1.4T 0 disk

├─nvme0n1p1 259:1 0 128.1G 0 part

└─nvme0n1p2 259:2 0 1.3T 0 part

sudo gdisk /dev/nvme0n1

… set up the partitions, and for LVM, change the type to 8E00…

Command (? for help): n

Partition number (2-128, default 2):

First sector (34-3027952334, default = 268697600) or {+-}size{KMGTP}:

Last sector (268697600-3027952334, default = 3027952334) or {+-}size{KMGTP}: 3027953267

Last sector (268697600-3027952334, default = 3027952334) or {+-}size{KMGTP}:

Current type is 8300 (Linux filesystem)

Hex code or GUID (L to show codes, Enter = 8300): L

Type search string, or <Enter> to show all codes: LVM

8e00 Linux LVM

Hex code or GUID (L to show codes, Enter = 8300): 8e00

Changed type of partition to 'Linux LVM'

Command (? for help): p

Disk /dev/nvme0n1: 3027952368 sectors, 1.4 TiB

Model: PCIe SSD

Sector size (logical/physical): 512/512 bytes

Disk identifier (GUID): F31CE44D-FFD5-4E2B-BB42-1980E991D220

Partition table holds up to 128 entries

Main partition table begins at sector 2 and ends at sector 33

First usable sector is 34, last usable sector is 3027952334

Partitions will be aligned on 128-sector boundaries

Total free space is 94 sectors (47.0 KiB)

Number Start (sector) End (sector) Size Code Name

1 128 268697599 128.1 GiB 8E00 Linux LVM

2 268697600 3027952334 1.3 TiB 8E00 Linux LVM

Command (? for help): w

Final checks complete. About to write GPT data. THIS WILL OVERWRITE EXISTING PARTITIONS!!

**Note: I just let gdisk use the default for the last sector on the 2nd partition instead of keying it in, because I’ve done terrible things to my drive. Your numbers might not be precisely the same.**

Using this information, you can partition your NVMe into SLC and QLC regions.

So, after partitioning, /dev/nvme0n1p1 is the 128GB SLC region and /dev/nvme0n1p2 is the rest of the drive (QLC).
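If you would rather script the split than walk through gdisk interactively, sgdisk (gdisk's non-interactive sibling) can do the same job. The sketch below is hedged: it only prints the command unless `APPLY=1` is set, the device name and SLC boundary are the ones from my 1.6TB unit, and `maybe_run` and `partition_fuzedrive` are hypothetical helper names. Double-check the numbers on your own drive before applying anything.

```shell
#!/bin/sh
# Sketch only: prints the sgdisk command unless APPLY=1 is set.
# The device name and SLC boundary come from this post; verify them on your unit.

maybe_run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "would run: $*"
  fi
}

# partition_fuzedrive DISK SLC_LAST_LBA
#   partition 1: sector 128 .. SLC_LAST_LBA            (SLC)
#   partition 2: SLC_LAST_LBA+1 .. last usable sector  (QLC; end 0 = sgdisk default)
# 8e00 is the type code for Linux LVM. sgdisk may nudge a start sector
# to satisfy its own alignment setting.
partition_fuzedrive() {
  disk=$1
  slc_last=$2
  qlc_first=$(( slc_last + 1 ))
  maybe_run sgdisk \
    -n "1:128:${slc_last}" -t 1:8e00 \
    -n "2:${qlc_first}:0" -t 2:8e00 \
    "$disk"
}

partition_fuzedrive /dev/nvme0n1 268697599
```

Leaving the end of partition 2 at the default mirrors what I did in gdisk, so it does not matter if your last usable sector differs from mine.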

*TODO: Make sure retail unit LBA regions match.

Now, in terms of how to leverage these two partitions most effectively – the jury is still out for me on that (lvm and bcache are in some kind of grudge match with the ST:TOS battle music playing). It looks to me like dm-writecache, rather than dm-cache, is going to be the winning formula. A lot of the caching stuff on Linux has, so far, mostly been tested mixing SSDs and HDDs. But here we’re mixing SSDs only, and reads from SSDs (whether QLC or SLC) are generally pretty good, though SLC is a bit better.

There is also bcache. LVM uses dm-(write)cache under the hood, but bcache is something else entirely.

It is possible, of course, to use other drives, or to just use the 128GB SLC region for something that requires high endurance – like a SLOG on a ZFS pool, for example – but that’s up to you.

Configuring lvmcache

This will be a pretty vanilla LVM setup using dm-cache. This is technically caching, not tiering, meaning the total usable capacity of this implementation is around 1.4TB (the QLC partition alone) – a bit less than you’d get with the true tiering solution on Windows. *TODO: See what happened to LVMTS, as 5 years ago I did this as tiering with something from GitHub…*
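The commands that follow size the cache metadata LV using a rule of thumb: roughly 1/1000 of the cache data LV, with an 8MB floor. Here is that rule as a tiny shell function. It is a sketch of the arithmetic only; `cache_meta_mib` is a made-up name, not an LVM tool.

```shell
#!/bin/sh
# cache_meta_mib DATA_GIB -> suggested cache metadata size in MiB:
# about 1/1000 of the cache data LV (rounded up), never below 8 MiB.
cache_meta_mib() {
  data_mib=$(( $1 * 1024 ))
  meta_mib=$(( (data_mib + 999) / 1000 ))
  if [ "$meta_mib" -lt 8 ]; then
    meta_mib=8
  fi
  echo "$meta_mib"
}

cache_meta_mib 120   # 120G of cache data -> prints 123
```

So for a 120G cache data LV the rule asks for about 123MiB, and the 200M I use below is simply that rounded up generously.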

# All these commands require either a root shell or sudo prefix...

#

# Mark partitions as usable for LVM

pvcreate /dev/nvme0n1p1

pvcreate /dev/nvme0n1p2

# Add to Group

vgcreate VG /dev/nvme0n1p1 /dev/nvme0n1p2

# Make a Logical volume

lvcreate -n notazpool -L 1300G VG /dev/nvme0n1p2

# There will be two cache segments -- cache metadata and

# cache data. Cache metadata should be approx 1000 times smaller than

# the cache data LV, with a minimum size of 8MB

# I rounded up

lvcreate -n CACHE_DATA_LV -L 120G VG /dev/nvme0n1p1

lvcreate -n CACHE_METADATA_LV -L 200M VG /dev/nvme0n1p1

# finally, we can stitch these things together.

lvconvert --type cache-pool --poolmetadata VG/CACHE_METADATA_LV VG/CACHE_DATA_LV

lvconvert --type cache --cachepool VG/CACHE_DATA_LV VG/notazpool

# and then check your work.

lvs -a VG

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

[CACHE_DATA_LV_cpool] VG Cwi---C--- 120.00g 0.01 3.89 0.00

[CACHE_DATA_LV_cpool_cdata] VG Cwi-ao---- 120.00g

[CACHE_DATA_LV_cpool_cmeta] VG ewi-ao---- 200.00m

[lvol0_pmspare] VG ewi------- 200.00m

notazpool VG Cwi-a-C--- <1.27t [CACHE_DATA_LV_cpool] [notazpool_corig] 0.01 3.89 0.00

[notazpool_corig] VG owi-aoC--- <1.27t
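One thing worth checking at this point: LVM's dm-cache defaults to writethrough mode, so if you want the write-back behaviour described at the top of this post you have to ask for it. A hedged sketch, using the VG and LV names from the commands above; it prints the commands unless `APPLY=1` is set, and `maybe_run` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch only: prints the LVM commands unless APPLY=1 is set.
maybe_run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "would run: $*"
  fi
}

VG_NAME=VG
LV_NAME=notazpool

# Show the current cache mode next to the usual lvs columns.
maybe_run lvs -o+cache_mode "$VG_NAME/$LV_NAME"

# Switch the cached LV to write-back. Dirty blocks then live only in the
# cache until they are flushed to the origin LV.
maybe_run lvchange --cachemode writeback "$VG_NAME/$LV_NAME"
```

Since the cache and the origin sit on the same physical SSD here, write-back does not add a second device to the failure picture the way it would with an SSD in front of an HDD.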

Why dm-writecache instead of dm-cache?

Well, here’s a read benchmark:

hdparm -t /dev/nvme0n1p1

/dev/nvme0n1p1:

HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device

Timing buffered disk reads: 4276 MB in 3.00 seconds = 1425.15 MB/sec

root@s500dsktop:/home/wendell# hdparm -t /dev/nvme0n1p2

/dev/nvme0n1p2:

HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device

Timing buffered disk reads: 3794 MB in 3.00 seconds = 1264.66 MB/sec
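The two regions read within roughly 13% of each other, so caching reads in SLC does not buy much; the interesting part is absorbing writes. That is what dm-writecache does. Below is a hedged sketch of attaching the SLC partition as a writecache through LVM. It is an alternative to the cache-pool lvconvert steps above, not something to run on top of them. `WRITECACHE_LV` is a name I made up, `maybe_run` is a hypothetical helper, and depending on your LVM version the main LV may need to be inactive when you attach the cache.

```shell
#!/bin/sh
# Sketch only: prints the LVM commands unless APPLY=1 is set.
# Assumes VG and VG/notazpool already exist as created earlier, and that
# no dm-cache pool is attached to notazpool.
maybe_run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "would run: $*"
  fi
}

# Carve the cache LV out of the SLC partition.
maybe_run lvcreate -n WRITECACHE_LV -L 120G VG /dev/nvme0n1p1

# Attach it to the main LV as a dm-writecache.
maybe_run lvconvert --type writecache --cachevol WRITECACHE_LV VG/notazpool

# To detach later (flushes the cache first):
#   lvconvert --splitcache VG/notazpool
```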

fio testing, 200g

test: (g=0): rw=randrw, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.16

Starting 1 process

test: Laying out IO file (1 file / 204800MiB)

Jobs: 1 (f=1): [m(1)][100.0%][r=124MiB/s,w=41.3MiB/s][r=31.8k,w=10.6k IOPS][eta 00m:01s]

test: (groupid=0, jobs=1): err= 0: pid=11730: Mon Aug 24 18:05:58 2020

read: IOPS=11.9k, BW=46.6MiB/s (48.8MB/s)(150GiB/3299352msec)

bw ( KiB/s): min= 1000, max=506304, per=100.00%, avg=47694.92, stdev=36133.40, samples=6593

iops : min= 250, max=126576, avg=11923.72, stdev=9033.35, samples=6593

write: IOPS=3973, BW=15.5MiB/s (16.3MB/s)(50.0GiB/3299352msec); 0 zone resets

bw ( KiB/s): min= 272, max=168016, per=100.00%, avg=15900.10, stdev=12058.16, samples=6593

iops : min= 68, max=42004, avg=3975.01, stdev=3014.54, samples=6593

cpu : usr=1.46%, sys=8.10%, ctx=30273155, majf=0, minf=8

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwts: total=39320441,13108359,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=46.6MiB/s (48.8MB/s), 46.6MiB/s-46.6MiB/s (48.8MB/s-48.8MB/s), io=150GiB (161GB), run=3299352-3299352msec

WRITE: bw=15.5MiB/s (16.3MB/s), 15.5MiB/s-15.5MiB/s (16.3MB/s-16.3MB/s), io=50.0GiB (53.7GB), run=3299352-3299352msec

Disk stats (read/write):

dm-2: ios=39316712/13255987, merge=0/0, ticks=82778796/127161724, in_queue=209940520, util=91.61%, aggrios=15990755/13885105, aggrmerge=0/0, aggrticks=63719553/150999489, aggrin_queue=214719042, aggrutil=93.04%

dm-4: ios=1925/12041955, merge=0/0, ticks=47280/64661392, in_queue=64708672, util=25.00%, aggrios=47920375/39648303, aggrmerge=51892/2007014, aggrticks=190478427/440790550, aggrin_queue=516269164, aggrutil=93.63%

nvme0n1: ios=47920375/39648303, merge=51892/2007014, ticks=190478427/440790550, in_queue=516269164, util=93.63%

dm-5: ios=24882286/13257273, merge=0/0, ticks=141016144/124120916, in_queue=265137060, util=92.61%

dm-3: ios=23088056/16356089, merge=0/0, ticks=50095236/264216160, in_queue=314311396, util=93.04%

root@s500dsktop:/mnt# cat test-fio.sh

fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=test --bs=4k --iodepth=64 --size=200G --readwrite=randrw --rwmixread=75

Bcache

So, you’d rather use bcache?