Story time

Over 3 years ago, I built my first “home NAS”, based on a Raspberry Pi 4B (or 3B, I’m not sure anymore) and USB Attached SCSI (UAS) enclosures for retired laptop HDDs (at that point I had accumulated a small collection of 512GB-1TB HDDs that I had replaced with SSDs in my own, my friends’ and my family’s laptops). It wasn’t anything fancy, just a Pi-hole + Samba server.

And thus, the pihost was born.

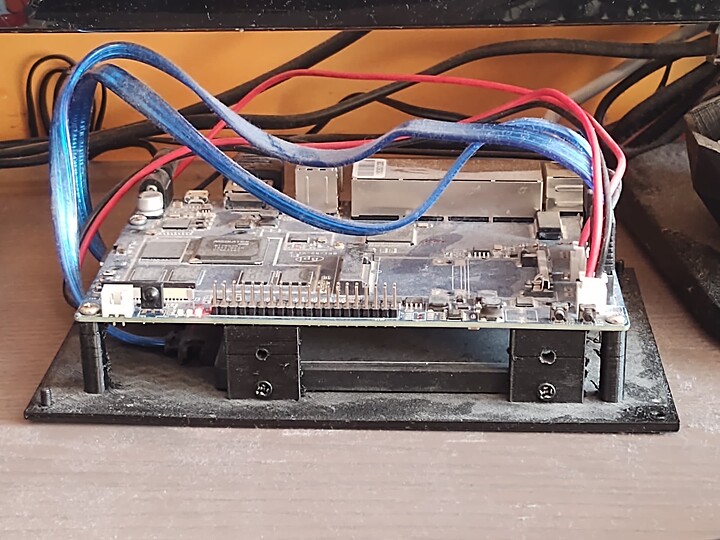

Now that I think about it, I’m pretty sure it was a 3B, because its 100BASE-T Ethernet was probably one of the major reasons for replacing it, which happened in December 2020 (yay, pandemic!). Enter the Banana Pi R2 and pihost2.

There were several reasons why I replaced the Raspberry Pi with something else, but I can hardly remember all of them now (come on, it’s been almost 37 months). Access to PCIe lanes without modding was definitely up there (although I never ended up using them), as was native SATA connectivity. The built-in switch was also a nice-to-have, and I remember even using its additional (GbE) ports to extend my regular home network.

The initial transition was almost painless. There was some initial struggle on the software/firmware side (MediaTek…), but since the Raspberry Pi didn’t have 64-bit support at the time (does it now?), there was almost no difference in userland. The drives felt more stable now that they were plugged directly into SATA instead of going through UAS over USB (which could become unstable after running for weeks).

On the software-storage side, I experimented with btrfs, but was severely disappointed with its performance on this platform. I didn’t even dare to touch ZFS or anything more complex and just ended up with two ext4 drives exported as two separate Samba shares.

On the software-networking side, there were two major advancements, although neither had anything to do with the platform itself (though I would never have attempted one of them on 100 Mbit Ethernet).

The first was a VPN gateway service, which essentially bridged my home LAN with my work lab’s VPN, so that my wife and I could access the work lab network from our home computers without setting up VPN clients on them. We could reach specific subnets as if they were local to us, but not vice versa: the work lab would only see a single VPN client. The setup is defunct by now, but at the time (pandemic) it was really helpful to have access to those resources without routing an unnecessary amount of traffic through the VPN.

(Also, IIRC, OpenVPN on Windows has/had some issues with the TAP adapters our lab was using.)
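To illustrate the split-routing idea (only lab-bound traffic goes through the tunnel, everything else takes the normal route), here is a minimal Python sketch of the per-destination decision such a gateway makes. The subnets are hypothetical placeholders, not the lab’s real ranges, and a real gateway would do this with kernel routes (plus, presumably, masquerading, which is why the lab sees only one client) rather than in Python.

```python
import ipaddress
from typing import Sequence, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical lab subnets: placeholders, not the real lab ranges.
LAB_SUBNETS: Sequence[Network] = (
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("172.16.5.0/24"),
)


def next_hop(dst: str, lab_subnets: Sequence[Network] = LAB_SUBNETS) -> str:
    """Return "vpn" if dst falls inside a lab subnet, else "default".

    Only lab-bound traffic is sent into the tunnel; everything else keeps
    using the normal home uplink (split routing).
    """
    addr = ipaddress.ip_address(dst)
    # `in` is simply False when IP versions differ, so mixed v4/v6 is safe.
    if any(addr in net for net in lab_subnets):
        return "vpn"
    return "default"
```

For example, `next_hop("10.20.3.4")` returns `"vpn"`, while a home-LAN address such as `192.168.1.10` returns `"default"`.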

The second was getting TLS certificates for my LAN services using a small custom tool, Let’s Encrypt and certbot with the OVH DNS plugin. I bought myself the mdl.computer domain (funny that Markdown doesn’t detect it as a URL xD) and set up internal DNS for it. The local addresses and services are never exposed (the DNS challenge only requires publishing a TXT record through OVH’s API, so nothing has to be reachable from the internet), but as you can see, I’m getting up-to-date certificates for stuff such as my OctoPrint server.

The tool I have for this is currently private (I didn’t consider it mature enough), but if there’s an interest, I can try to polish it up and share it.
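This is not that tool, but as a hedged illustration of one small piece such a setup may want, here is a Python sketch that checks how many days a LAN service’s certificate has left, using only the standard library’s `ssl` and `socket` modules. The helper names are hypothetical, and the connection uses the system trust store with hostname verification, so it assumes a publicly trusted (e.g. Let’s Encrypt) certificate.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect over TLS (verifying chain and hostname) and return the
    certificate's notAfter field, e.g. 'Jan  5 09:34:43 2018 GMT'."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return cert["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days remaining until notAfter (negative if already expired)."""
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    if now is None:
        now = time.time()
    return (expires - now) / 86400
```

For example, `days_until_expiry(fetch_not_after("printer.example.com"))` (a hypothetical host) could feed a cron alert. Since certbot renews by default once fewer than 30 days remain, a value well below that suggests renewal is failing.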

On the hardware/firmware side, the board wasn’t too bad, but it wasn’t great either. 2GB of RAM is/was enough for this, but not for anything more ambitious. I planned to add a small sensor panel (my 3D-printed enclosure even has a cutout for it), but it turned out that the SPI drivers… don’t really work ¯\_(ツ)_/¯. Or rather, they should work, but you have to XYZ… Recalling the pain of working with the device tree sources (DTS) and rebuilding them to get the switch working, I decided to forgo that idea.

Nevertheless, after about two years, things started to get flaky. First, one of the drives started having ATA problems and kept getting disconnected. I ended up merging both partitions onto a single 1TB SSD and removing the HDDs altogether. Around that time I started eyeing a future replacement for pihost2, this time possibly something more… robust.

Almost a year passed and the single SSD worked without problems, until very recently, when I started getting similar ATA errors on it. With the previous setup I suspected power delivery issues, but since these errors started popping up under a much smaller load, I got a bit worried. The drive is very lightly used, with not even a full TB written. The previous drives, when connected to other systems, also report no SMART/filesystem issues, so I strongly suspect the board itself. Switching to the other SATA/power connector got it working again for a few weeks, before the issues started popping up again. Still, no FS errors (when working), but:

```
[2404559.613068] JBD2: Error -5 detected when updating journal superblock for sda1-8.
[2404559.632644] sd 1:0:0:0: [sda] tag#27 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=0x00
[2404559.647922] sd 1:0:0:0: [sda] tag#27 CDB: opcode=0x2a 2a 00 00 00 08 00 00 00 08 00
[2404559.662545] blk_update_request: I/O error, dev sda, sector 2048 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 0
[2404559.679957] blk_update_request: I/O error, dev sda, sector 2048 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 0
[2404559.697240] Buffer I/O error on dev sda1, logical block 0, lost sync page write
[2404559.711617] EXT4-fs (sda1): I/O error while writing superblock
[2404819.847063] sd 1:0:0:0: [sda] tag#28 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=0x00
[2404819.862296] sd 1:0:0:0: [sda] tag#28 CDB: opcode=0x85 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00
[2406619.879328] sd 1:0:0:0: [sda] tag#29 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=0x00
[2406619.894759] sd 1:0:0:0: [sda] tag#29 CDB: opcode=0x85 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00
[2408419.912725] sd 1:0:0:0: [sda] tag#30 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=0x00
[2408419.928167] sd 1:0:0:0: [sda] tag#30 CDB: opcode=0x85 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00
```

Once it starts, it keeps going. A reboot usually helps, but that’s not a long-term solution, especially since I sometimes need to access the box remotely.
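The raw bytes in that log can be decoded by hand: opcode 0x2a is SCSI WRITE(10), whose LBA (2048) and length (8 blocks) are consistent with the `sector 2048` in the `blk_update_request` lines; 0x85 is ATA PASS-THROUGH(16), whose ATA command byte 0xe5 is CHECK POWER MODE; `hostbyte=0x04` is the Linux kernel’s `DID_BAD_TARGET`; and the JBD2 `Error -5` is `-EIO`. As a sketch covering only the opcodes and host bytes seen here (the function names are hypothetical), the same decoding in Python:

```python
import re
from typing import Optional

# A few Linux SCSI host byte codes (only the low values are listed here).
HOST_BYTES = {0x00: "DID_OK", 0x01: "DID_NO_CONNECT", 0x02: "DID_BUS_BUSY",
              0x03: "DID_TIME_OUT", 0x04: "DID_BAD_TARGET"}
# ATA command opcodes we know how to name; extend as needed.
ATA_COMMANDS = {0xE5: "CHECK POWER MODE"}

CDB_RE = re.compile(r"CDB: opcode=0x[0-9a-f]+ ((?:[0-9a-f]{2} ?)+)")
HOSTBYTE_RE = re.compile(r"hostbyte=0x([0-9a-f]{2})")


def decode_cdb(line: str) -> Optional[str]:
    """Decode the CDB hex dump on a kernel 'CDB: opcode=...' line."""
    m = CDB_RE.search(line)
    if not m:
        return None
    cdb = bytes.fromhex(m.group(1).strip())
    op = cdb[0]
    if op == 0x2A and len(cdb) == 10:
        # WRITE(10): bytes 2-5 = big-endian LBA, bytes 7-8 = transfer length.
        lba = int.from_bytes(cdb[2:6], "big")
        blocks = int.from_bytes(cdb[7:9], "big")
        return f"WRITE(10) lba={lba} blocks={blocks}"
    if op == 0x85 and len(cdb) == 16:
        # ATA PASS-THROUGH(16): byte 14 holds the ATA command opcode.
        cmd = cdb[14]
        name = ATA_COMMANDS.get(cmd, "unknown")
        return f"ATA PASS-THROUGH(16) ata_command=0x{cmd:02x} ({name})"
    return f"opcode 0x{op:02x} (not decoded)"


def decode_hostbyte(line: str) -> Optional[str]:
    """Map 'hostbyte=0xNN' on a kernel 'Result:' line to its DID_* name."""
    m = HOSTBYTE_RE.search(line)
    if not m:
        return None
    value = int(m.group(1), 16)
    return HOST_BYTES.get(value, f"0x{value:02x}")
```

In other words, the host reports the target as unreachable both for the failed journal superblock write and for the periodic power-mode queries, which fits a link/controller problem rather than bad media.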

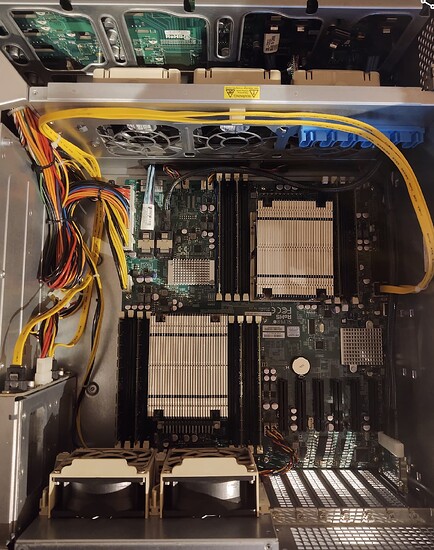

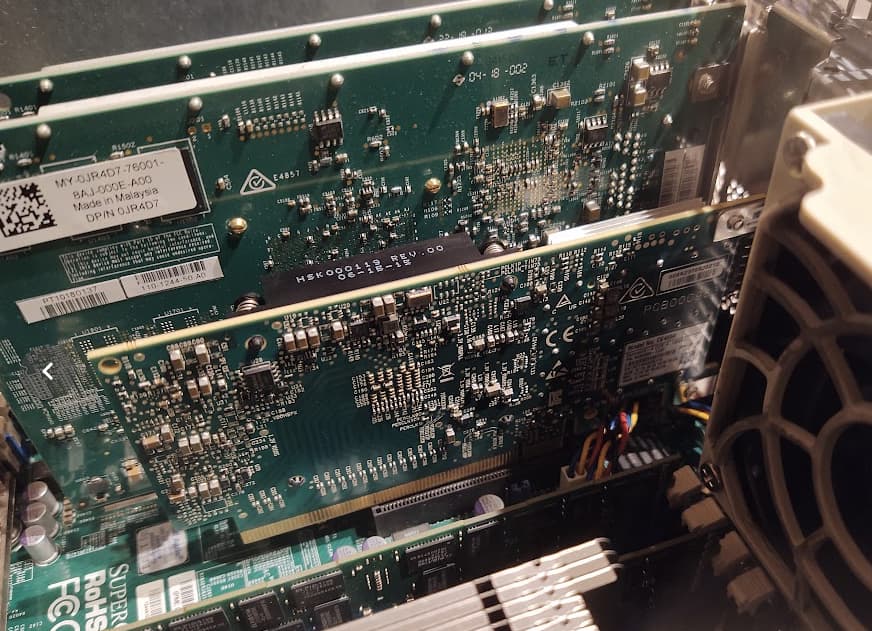

Next time: current state of affairs