Can you point at a seller for that PCIe Gen4 x8 bifurcation carrier AIC for two U.2 SSDs that has been working fine for you?

IMO the real flex here is:

![]()

I’m planning a P5800X with an Ableconn PEXU2-132 adapter on an X670E motherboard. Should I put it in a CPU or chipset PCIe slot? This is what I have:

CPU

2 x PCIe 5.0 x16 slots (support x16 or x8/x8 modes)

Chipset

1 x PCIe 4.0 x16 slot (supports x2 mode)

One of the CPU slots is for an RTX 4090; the rest are open.

Looking at the block diagram of the gigabyte x670 aorus master, it’s certainly interesting.

It looks like there’s a lot going on with the x4 chipset link, so any task needy enough to max out a P5800X would probably notice bandwidth contention. In practice, most people are hard pressed to stress their NVMe drives.

On the other hand, I bet that if you tested the 4090 in both x16 and x8 modes, you’d likely see only 1-3 frames of difference at worst. So you could likely safely go x8x8 and give the P5800X the other slot if that’s worth it to you.

Sorry, I should have been more specific about the motherboard: it’s the Asus “ProArt X670E Creator WIFI”. It has two x16 slots for the CPU, so I think I can put the 4090 on one and the P5800X on the other.

I had another thought: RAID 0 is great but with SSDs has the downsides of 1) increased risk, and 2) worse random RW. The P5800X mitigates both of those, yes? Is it possible to run two P5800X in RAID 0?

In that case, I wonder if I could get both P5800X connected to the CPU? As mentioned, on the CPU I’ve got only one free x16 slot, but I’ve also got two free M.2 (PCIe 5.0, 4x). I see there are M.2 to U.2 adapters, which would mean running a cable to the P5800X. Above Wendell says, “a cabled adapter will not work”. Is that really a no go? Isn’t the drive designed to be plugged into a U.2 with a cable?

I found this x8 PCIe 4.0 dual U.2 AIC that looks perfect for running two P5800X. That’d let me put them both on CPU lanes, and still have two M.2 slots on the CPU open. I bought it, so we’ll see!

Veidit and aBav.Normie-Pleb were looking for this in a now closed thread (I wonder if linking them pings?).

All Ryzen 7000 motherboards should be very similar. They absolutely do not have 32 lanes to supply two x16 slots.

What is done is that if only the first slot is occupied, it gets the full x16 lanes. If both slots are occupied, it should automatically bifurcate to give each slot x8 lanes.

I would very closely look at the manual to see if that second slot can further bifurcate from x8 to x4x4, otherwise the card you mentioned in the other post won’t allow both drives to be seen. The slot must support bifurcation down to x4, or you need to get an (expensive) card that has a PLX chip that allows for PCIe switching.

Edit

It may actually be supported. Looks like Asus insists on renaming bifurcation to “RAID mode”.

Page 57

It’s 2022 and Asus still don’t put topology diagrams in their motherboard manuals.

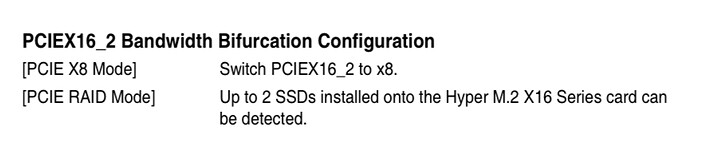

Thanks for pointing that out, I’m not super familiar with how this works. The manual shows:

AMD Ryzen™ 7000 Series Desktop Processors*

2 x PCIe 5.0 x16 slots (support x16 or x8/x8 modes)

Seems you are right, using the 2nd CPU slot makes them both run at x8, bummer. I found some anecdotes about losing ~10 FPS running a 4090 at x8, still getting 100+ FPS at 4K. That sounds OK for my needs. Edit: here’s a thorough review showing the difference is relatively minimal.

The next part is: can the 2nd slot running at x8 bifurcate to x4x4. The manual links to this page. It says it’s specifically about a “Hyper M.2” AIC, so it’s not clear if it applies to other cards. Maybe? Anyway it shows:

ProArt X670E Creator WIFI

PCIEX16_1: X4+X4+X4+X4 or X4+X4

PCIEX16_2: X4+X4

PCIEX16_3: X2

Does this indicate slot 2 supports bifurcation to x4/x4? Does it seem likely the dual U.2 card I found will work in slot 2 with a 4090 in slot 1? It seems silly to only give specifications for the Hyper M.2 AIC. I would hope that the same applies to any AIC.

BIOS manual says:

Use [PCIE RAID Mode] when installing the Hyper M.2 X16 series card or other M.2 adapter cards. Installing other devices may result in a boot-up failure.

Seems like there is hope for the dual U.2 card, though no guarantee.

Yes, should all work fine, just put the PCIEX16_2 slot into PCIe RAID mode and it will bifurcate PEG lanes to x8/x4/x4.

Don’t worry too much about running the GPU at Gen4 x8. It should be fine unless you are constantly churning VRAM and need to maximize throughput to shave a few minutes off a long render or what have you. The nice thing is that you’ll be ready to run a future gen GPU at Gen5 x8.
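For a rough sense of the numbers behind the x16 versus x8 trade-off, here is a minimal sketch of theoretical one-direction PCIe bandwidth. It assumes the standard per-lane rates (8, 16 and 32 GT/s for Gen3, Gen4 and Gen5) and 128b/130b encoding, and it ignores packet and protocol overhead, so real throughput will be lower. The function name is just for illustration.

```python
# Per-lane raw signalling rate in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen3 and later use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8  # 8 bits per byte


for gen, lanes in [(4, 16), (4, 8), (5, 8), (4, 4), (3, 4)]:
    print(f"Gen{gen} x{lanes}: {link_gb_per_s(gen, lanes):.2f} GB/s")
```

On paper, Gen5 x8 has the same ceiling as Gen4 x16, which is why a future Gen5 GPU would lose nothing in the x8/x8 split.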

The “Hyper M.2 AIC” is just their own M.2 bifurcation card. You can use that card in other systems with bifurcation, or you can use other bifurcation cards in the ASUS system. There are no proprietary “security features” on these that prevent you from using them interchangeably. I myself have both a 4 slot M.2 ASUS card and an Ableconn U.2 adapter that I’ve used in ASRock and Tyan systems. When the ASUS adapter came out, it was the best value M.2 bifurcation card available, though there is finally a lot more competition now.

The single caveat is that some bifurcation cards aren’t going to be able to keep a clean enough signal for PCIe 4.0. In practice, if the card lists 4.0 compatibility then it’s a pretty safe bet it’ll just work.

Cards are much more reliable at sending a viable signal to drives than cables are.

Does this indicate slot 2 supports bifurcation to x4/x4?

Yeah, that seems correct. Were I buying it for that purpose, I’d see that as confirmation it’ll do just that.

Thanks guys! That card and two P5800X 800GB are now on the way! I’m hyped! None of the P5800X reviews show RAID 0 results, so that’ll be interesting. There weren’t any 1.6TB in stock anywhere, but 2x800GB will be enough for me (and cut the price nearly in half).

Hey @wendell, could you please share the fio commands that you used to obtain your 4k QD1 results?

Right now, I am getting better 4k QD1 performance from a pci3 m.2 → u.2 connection than a pci4 connection (using an Ableconn card) when running this command:

fio --loops=5 --size=1000m --filename=/dev/nvme0n1p1 --ioengine=pvsync2 --hipri --direct=1 --name=4kQD1read --bs=4k --iodepth=1 --rw=randread --name=4kQD1write --bs=4k --iodepth=1 --rw=randwrite

It is about 7% faster when using pci3, on an x670e motherboard. I would be interested to try this with your fio command to see if this trend holds.

I think it’s posted here somewhere; I’ll dig it out later. PCIe 4 into the CPU should be best unless there are intermittent PCIe errors. Turn on AER in the BIOS.

It would also be worth setting the Ableconn card slot to PCIe 3 to see if it’s just PCIe 3 vs. 4. If the Ableconn is the same as the PCIe 3 adapter, then the PCIe 4 connection is erroring.

Heya, I did try looking through this thread, but couldn’t find any such commands myself.

But some interesting results came out of what you suggested I try… For each of the below 3 scenarios, I ran the exact fio command I shared in my last post 5 times in a row, with a 1 second sleep between each run (this is why there are 5 results per test case):

First, Ableconn, pcie4 (with AER enabled – no errors reported in dmesg):

read: IOPS=117k, BW=458MiB/s (480MB/s)(5000MiB/10928msec)

write: IOPS=87.8k, BW=343MiB/s (360MB/s)(5000MiB/14580msec); 0 zone resets

read: IOPS=120k, BW=467MiB/s (490MB/s)(5000MiB/10708msec)

write: IOPS=90.3k, BW=353MiB/s (370MB/s)(5000MiB/14175msec); 0 zone resets

read: IOPS=121k, BW=475MiB/s (498MB/s)(5000MiB/10536msec)

write: IOPS=92.9k, BW=363MiB/s (381MB/s)(5000MiB/13771msec); 0 zone resets

read: IOPS=120k, BW=469MiB/s (491MB/s)(5000MiB/10668msec)

write: IOPS=91.3k, BW=357MiB/s (374MB/s)(5000MiB/14025msec); 0 zone resets

read: IOPS=118k, BW=461MiB/s (483MB/s)(5000MiB/10849msec)

write: IOPS=88.1k, BW=344MiB/s (361MB/s)(5000MiB/14530msec); 0 zone resets

Second, Ableconn, pcie3 (with AER enabled – no errors reported in dmesg):

read: IOPS=109k, BW=425MiB/s (446MB/s)(5000MiB/11755msec)

write: IOPS=88.8k, BW=347MiB/s (364MB/s)(5000MiB/14415msec); 0 zone resets

read: IOPS=110k, BW=430MiB/s (450MB/s)(5000MiB/11639msec)

write: IOPS=93.5k, BW=365MiB/s (383MB/s)(5000MiB/13685msec); 0 zone resets

read: IOPS=105k, BW=412MiB/s (432MB/s)(5000MiB/12147msec)

write: IOPS=83.9k, BW=328MiB/s (344MB/s)(5000MiB/15259msec); 0 zone resets

read: IOPS=112k, BW=438MiB/s (459MB/s)(5000MiB/11412msec)

write: IOPS=94.5k, BW=369MiB/s (387MB/s)(5000MiB/13551msec); 0 zone resets

read: IOPS=108k, BW=423MiB/s (443MB/s)(5000MiB/11824msec)

write: IOPS=84.6k, BW=330MiB/s (347MB/s)(5000MiB/15129msec); 0 zone resets

Third, and most interestingly, pci3, with the m.2 → u.2 adapter cable that comes with Optane 905p drives:

(EDIT: this third case is actually running at pci4, not pci3 – see my next post)

read: IOPS=122k, BW=475MiB/s (498MB/s)(5000MiB/10519msec)

write: IOPS=95.8k, BW=374MiB/s (392MB/s)(5000MiB/13363msec); 0 zone resets

read: IOPS=124k, BW=484MiB/s (508MB/s)(5000MiB/10322msec)

write: IOPS=97.6k, BW=381MiB/s (400MB/s)(5000MiB/13114msec); 0 zone resets

read: IOPS=120k, BW=468MiB/s (491MB/s)(5000MiB/10675msec)

write: IOPS=90.9k, BW=355MiB/s (372MB/s)(5000MiB/14085msec); 0 zone resets

read: IOPS=126k, BW=494MiB/s (518MB/s)(5000MiB/10123msec)

write: IOPS=99.3k, BW=388MiB/s (407MB/s)(5000MiB/12886msec); 0 zone resets

read: IOPS=126k, BW=494MiB/s (518MB/s)(5000MiB/10121msec)

write: IOPS=99.0k, BW=387MiB/s (406MB/s)(5000MiB/12923msec); 0 zone resets

So, you are correct that pci4 is faster than pci3 for the same connection method… but for some reason, the m.2 → u.2 adapter results in measurably more IOPS (it is not quite 7% in this test, though).
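To put numbers on the comparison, here is a small sketch that averages the five runs per scenario. The IOPS values are copied from the fio summaries above; the dictionary labels and helper names are made up for this example, and five runs is a small sample, so treat the percentages as indicative only.

```python
import re
from statistics import mean

# Read and write IOPS copied from the fio summaries above (five runs each).
RESULTS = {
    "Ableconn, PCIe 4": {
        "read": ["117k", "120k", "121k", "120k", "118k"],
        "write": ["87.8k", "90.3k", "92.9k", "91.3k", "88.1k"],
    },
    "Ableconn, PCIe 3": {
        "read": ["109k", "110k", "105k", "112k", "108k"],
        "write": ["88.8k", "93.5k", "83.9k", "94.5k", "84.6k"],
    },
    "M.2 to U.2 cable": {
        "read": ["122k", "124k", "120k", "126k", "126k"],
        "write": ["95.8k", "97.6k", "90.9k", "99.3k", "99.0k"],
    },
}


def parse_iops(text: str) -> float:
    """Convert fio's abbreviated IOPS figure (e.g. '87.8k') to a number."""
    match = re.fullmatch(r"([\d.]+)([kM]?)", text)
    if match is None:
        raise ValueError(f"unrecognised IOPS value: {text!r}")
    scale = {"": 1, "k": 1_000, "M": 1_000_000}[match.group(2)]
    return float(match.group(1)) * scale


def mean_iops(label: str, direction: str) -> float:
    """Average IOPS over the recorded runs for one scenario and direction."""
    return mean(parse_iops(v) for v in RESULTS[label][direction])


baseline = "Ableconn, PCIe 4"
for label in RESULTS:
    for direction in ("read", "write"):
        avg = mean_iops(label, direction)
        delta = 100 * (avg / mean_iops(baseline, direction) - 1)
        print(f"{label:18} {direction:5} {avg / 1000:6.1f}k ({delta:+.1f}% vs {baseline})")
```

Averaged this way, the cable comes out roughly 4% ahead of the Ableconn at PCIe 4 on reads and roughly 7% ahead on writes.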

P.S. Adding one additional test result for you, as well… this is a single run of the above tests, still using the m.2 → u.2 connector, but this time, on a /dev/mapper/p5800x encrypted target:

read: IOPS=64.6k, BW=252MiB/s (265MB/s)(5000MiB/19810msec)

write: IOPS=46.2k, BW=180MiB/s (189MB/s)(5000MiB/27713msec); 0 zone resets

Feel free to let me know if you have ideas at all on how I might be able to speed that one up. I assume that there isn’t much that can be done for the encrypted case. I would be happy to try anything at all though.
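One way to read the encrypted result: at queue depth 1 with a synchronous engine, only one I/O is in flight per job, so mean time per I/O is roughly the reciprocal of IOPS. The sketch below applies that to one raw-device read figure and the /dev/mapper read figure from above. It assumes nothing else changed between the two runs, and the function name is illustrative.

```python
def qd1_latency_us(iops: float) -> float:
    """Mean time per I/O in microseconds when exactly one I/O is in flight."""
    return 1_000_000 / iops


# Read IOPS taken from the results above: the first raw-device run on the
# M.2 to U.2 cable, and the single /dev/mapper run.
raw_read_iops = 122_000
encrypted_read_iops = 64_600

raw_us = qd1_latency_us(raw_read_iops)
enc_us = qd1_latency_us(encrypted_read_iops)
print(f"raw: {raw_us:.1f} us, encrypted: {enc_us:.1f} us, added: {enc_us - raw_us:.1f} us")
```

By this estimate the encryption layer adds on the order of 7 microseconds to each 4k read, which is about as much as the drive and I/O stack take on their own.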

Be aware that just because the BIOS shows a PCIe Advanced Error Reporting option and lets you enable it doesn’t automatically mean it’s actually working. What specific motherboard model are you using?

While I don’t have a P5800X, I have a few other fast PCIe Gen4 U.2/U.3 SSDs and I’ve tested them with the M.2-to-SFF-8639 adapter that came with an Intel Optane 905P. These adapters work fine with PCIe Gen3, but when used with a PCIe Gen4 SSD they introduce PCIe Bus Errors.

Hi aBav, thanks for the feedback. I am on an ASUS Proart x670e.

Very importantly, based on what you wrote, I realized an error on my part (I will edit my post, above, after this). The M.2-to-SFF-8639 adapter that I was using for the third test case in my last post was not limiting the connection to pcie3, as I thought. It was actually still running at pcie4.

I confirmed this by observing a large difference in 4k QD1 performance (about 15%) when setting that link speed to pcie3 in the motherboard BIOS when using the 8639. It still doesn’t explain why the Ableconn on pcie4 performed worse than the 8639 on pcie4, though.
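The negotiated link can also be checked directly rather than inferred from benchmarks. Linux exposes `current_link_speed` and `current_link_width` under `/sys/bus/pci/devices/<address>/`. The sketch below reads them; the device address is a placeholder, and the exact string format is assumed to start with the GT/s figure (8.0 for Gen3, 16.0 for Gen4), which may vary slightly between kernel versions.

```python
from pathlib import Path


def parse_link_speed(text: str) -> float:
    """Return the GT/s figure from a sysfs string such as '16.0 GT/s PCIe'."""
    return float(text.split()[0])


def read_link(bdf: str) -> tuple[float, int]:
    """Read negotiated speed (GT/s) and width for one PCI device from sysfs."""
    dev = Path("/sys/bus/pci/devices") / bdf
    speed = parse_link_speed((dev / "current_link_speed").read_text())
    width = int((dev / "current_link_width").read_text())
    return speed, width


if __name__ == "__main__":
    # Hypothetical address; find the real one with `lspci | grep -i nvme`.
    bdf = "0000:01:00.0"
    try:
        speed, width = read_link(bdf)
        print(f"{bdf}: {speed} GT/s x{width}")
    except (OSError, ValueError) as exc:
        print(f"could not read link state for {bdf}: {exc}")
```

`lspci -vv` reports the same information in its `LnkSta` line, which may be handier for a one-off check.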

As for AER errors… I am seeing none at all in dmesg, and I just ran 1 TB worth of read/write data through the drive using badblocks, and it all was read back correctly. This is a basic test, but it is still something. Doing reads with “dd” also does not produce any AER messages. I do see this when running “dmesg | grep -i aer”, though, so I think it is enabled:

[ 0.473305] acpi PNP0A08:00: _OSC: OS now controls [PCIeHotplug PME AER PCIeCapability LTR]

[ 0.982068] pcieport 0000:00:02.1: AER: enabled with IRQ 28

[ 0.982542] pcieport 0000:00:02.2: AER: enabled with IRQ 29

[ 1.336761] aer 0000:00:02.2:pcie002: hash matches

Interestingly, when using the 8639 and pcie4, “dd” has a max read/write speed of 3.0GB/s and 4.065GB/s.

When using the Ableconn at pcie4, “dd” has a max read/write speed of 5.5GB/s and 5.8GB/s. I don’t care about sequential speeds at all, but this is interesting to observe – the 4k QD1 performance is still slightly higher on the 8639 over pcie4.

Any thoughts on how I might confirm if AER errors are occurring? Badblocks seems like the most efficient way to see if some data is not being correctly read/written, but maybe there is a better way.
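One possible approach, offered as a sketch rather than a definitive answer: reasonably recent Linux kernels keep per-device AER counters in sysfs files named `aer_dev_correctable`, `aer_dev_nonfatal` and `aer_dev_fatal`, each containing lines of the form `Name count`. Reading them before and after a benchmark shows whether errors were counted even if nothing reached dmesg. The device address below is a placeholder, and the files are only present when the kernel and the device both support AER.

```python
from pathlib import Path


def parse_aer_counters(text: str) -> dict[str, int]:
    """Parse 'Name count' lines as found in the aer_dev_* sysfs files."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def read_aer(bdf: str) -> dict[str, dict[str, int]]:
    """Collect correctable, non-fatal and fatal AER counters for one device."""
    dev = Path("/sys/bus/pci/devices") / bdf
    result = {}
    for kind in ("correctable", "nonfatal", "fatal"):
        path = dev / f"aer_dev_{kind}"
        if path.exists():
            result[kind] = parse_aer_counters(path.read_text())
    return result


if __name__ == "__main__":
    bdf = "0000:01:00.0"  # hypothetical address; substitute the SSD or its root port
    for kind, counters in read_aer(bdf).items():
        nonzero = {k: v for k, v in counters.items() if v}
        print(kind, nonzero or "no errors counted")
```

Checking both the drive and the upstream root port (the `pcieport` addresses in the dmesg output) is probably worthwhile, since either end of the link can log an error.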

I also just noticed another oddity – the usual fio test that I run does the read and write test at the same time:

fio --loops=5 --size=1000m --filename=/dev/nvme0n1p1 --ioengine=pvsync2 --hipri --direct=1 --name=4kQD1read --bs=4k --iodepth=1 --rw=randread --name=4kQD1write --bs=4k --iodepth=1 --rw=randwrite

Sample output:

read: IOPS=125k, BW=488MiB/s (511MB/s)(5000MiB/10253msec)

write: IOPS=97.4k, BW=381MiB/s (399MB/s)(5000MiB/13136msec); 0 zone resets

But, if I run only the read test on its own, with no write test being done at the same time (on either connector), it apparently drastically reduces my performance:

fio --loops=5 --size=1000m --filename=/dev/nvme0n1p1 --ioengine=pvsync2 --hipri --direct=1 --name=4kQD1read --bs=4k --iodepth=1 --rw=randread

Sample output:

read: IOPS=75.1k, BW=293MiB/s (308MB/s)(5000MiB/17046msec)

I hope Wendell will be able to dig up the fio commands/files that he used in the past, as I feel like I must be doing something wrong here…

Just to be sure: This motherboard explicitly shows a PCIe Advanced Error Reporting (AER, not ARI) option in the BIOS?

I’m asking since I’ve been using a few ASUS AM4 motherboards (Pro WS X570-ACE, ProArt B550-CREATOR, ProArt X570-CREATOR WIFI) and these don’t offer the PCIe AER option.

By contrast, many ASRock AM4 motherboards do.

But on any AM4 system I’ve seen so far where PCIe AER is working, it only works on PCIe lanes that come directly from the CPU; errors on chipset PCIe lanes stay hidden.

It does show AER specifically. It was sort of hard to find, in “Advanced → AMD CBS → NBIO Configuration → Advanced Error Reporting (AER)”.

If I disable the option, then the struck through lines no longer appear in dmesg:

[ 0.473305] acpi PNP0A08:00: _OSC: OS now controls [PCIeHotplug PME AER PCIeCapability LTR]

[ 0.982068] pcieport 0000:00:02.1: AER: enabled with IRQ 28

[ 0.982542] pcieport 0000:00:02.2: AER: enabled with IRQ 29

In my case, I am using an M.2 slot whose 4 PCIe lanes come directly from the CPU. I was sure to avoid chipset-based lanes.

AER reports working on my Pro WS X570-ACE with Ryzen 5900X

[me@home~]$ dmesg | grep -i aer

[ 0.863356] acpi PNP0A08:00: _OSC: OS now controls [PCIeHotplug PME AER PCIeCapability]

[ 1.112645] pcieport 0000:00:01.1: AER: enabled with IRQ 28

[ 1.112794] pcieport 0000:00:01.2: AER: enabled with IRQ 29

[ 1.112916] pcieport 0000:00:03.1: AER: enabled with IRQ 30

However, it does not work with a Ryzen 5700G.