I feel silly quoting myself - but after a quick read of StoreMI, it is limited to HDD devices connected directly to the MOBO via SATA.

That sucks, since Enmotus, the developer behind StoreMI, has had a product called “FuzeDrive” where you could combine two drives, no matter if SATA/AHCI or PCIe/NVMe, and it was up to you to decide which one was the fast tier and which one the “storage” tier.

I always find it sad to see a regression in the level of technology that consumers can easily get, something like a “dumbing down”. Just like what has been happening with social media and human society in general for the last ten years.

Has anyone seen any tests online of these units on older hardware, like PCIe Gen3?

It would be nice to see how much it bottlenecks if used to retrofit a server on somewhat older hardware.

Subjective review.

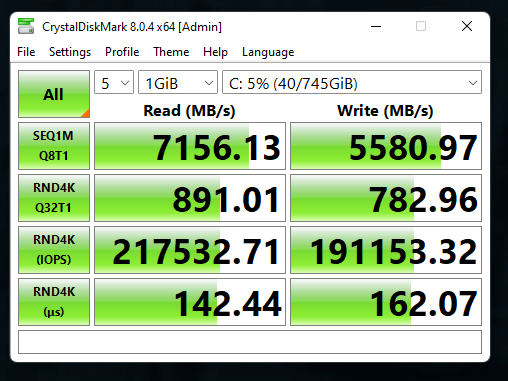

Going from a 905P to a P5800X makes my day-to-day computer usage, mostly a crap ton of browser tabs, significantly snappier.

Wasn’t actually expecting to see very much of a difference.

So expensive of an upgrade for that use case

How’s everyone checking errors?

Both my P5800x and my cd6 are showing up as pcie 4.0x4 via crystaldiskinfo.

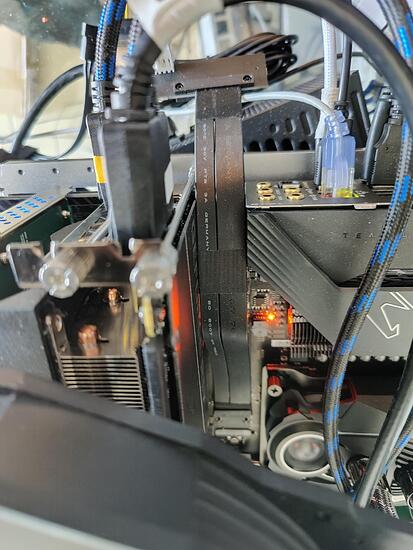

I’m using PCIe add-in cards. I’ve tried M.2 module connectors, but those did not work at full speed.

Using an Ableconn and a DLinker.

The Ableconn specifically says it’s a PCIe 4.0 card.

(And yes, it’s an expensive upgrade, but for me absolutely worth it. If I was trying to save a bit I’d get a smaller p5800x and use primocache to use it as an L2 cache)

Almost, instead of the U.2 SFF-8639 connector on the drive end I’d need the square U.2 SFF-8643 one to connect to a backplane.

A thingy similar to the one on your photo shipped with Intel 905P U.2 SKUs, for example, to use them with “normal” consumer motherboards that commonly only sport M.2 or regular PCIe slots and no U.2 at all.

How are you checking CRC error rate?

If on AMD platforms the UEFI option “Platform-first Error Handling” (PFEH) is disabled you see PCIe errors from NVMe SSDs under load in Windows Event Viewer or NVMe transfer errors in Linux’ system log.

What sucks: On current Ryzen 5000 systems AMD doesn’t show this option anymore in the UEFI (as of AGESA 1203B), but when installing a Ryzen 3000 CPU or 4000G APU in the very same motherboard you might see that option…

This option is also relevant for the use of ECC memory since the chipsets themselves don’t have a proper OS-independent error log.

When PFEH is enabled, the OS isn’t getting any notification of these kinds of corrected hardware errors, which sucks if you want to validate a system configuration’s stability.

Any program that shows “SMART” details will do. I’m using the “Intel Memory and Storage Tool” to manage my Intel drives.

So seeing how an 800GB Optane has an endurance of over 100PB (with bigger sizes being even more ridiculous), most users could use this P5800X for 100+ years and not even come close to wearing out the 3D XPoint. However, even this P5800X wouldn’t actually last for 100 years because the controller would break way before that, right? It’s really insane to just think about it though.
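To put the "100PB over 100 years" idea into numbers, here is a rough sketch. It only uses the figures from the post above (100 PB, 100 years, 800 GB), not datasheet values, and the function names are made up for illustration.

```python
# Back-of-the-envelope endurance budget.
# All inputs are the round figures quoted in the post, not datasheet values.
PB = 10**15  # decimal petabyte, in bytes
TB = 10**12  # decimal terabyte, in bytes


def daily_write_budget_tb(endurance_pb: float, years: float) -> float:
    """TB that could be written every single day for `years`
    before the quoted endurance figure is used up."""
    days = years * 365.25
    return endurance_pb * PB / days / TB


def drive_writes_per_day(endurance_pb: float, years: float, capacity_gb: float) -> float:
    """The same budget expressed as full-drive overwrites per day."""
    return daily_write_budget_tb(endurance_pb, years) * 1000 / capacity_gb


if __name__ == "__main__":
    budget = daily_write_budget_tb(100, 100)
    dwpd = drive_writes_per_day(100, 100, 800)
    print(f"{budget:.2f} TB/day, i.e. about {dwpd:.1f} full drive writes per day")
```

With those inputs the budget works out to a bit under 3 TB written every day for a century, which is far beyond what a desktop with a pile of browser tabs produces.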

Of course, I realize that in 100 years technology will have advanced so much that even this Optane will look primitive to the point where no one wants to use it anymore anyway, but I still find the notion of an essentially indestructible SSD quite otherworldly.

Hope that I haven’t overlooked this being mentioned somewhere:

How much does the performance for non-sequential loads tank when using the P5800X in PCIe Gen3 mode?

Does performance per watt change much?

Not much tanking. It’s limited to roughly 4 GB/s.
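For reference, the Gen3 x4 ceiling can be estimated from the per-lane signalling rate and the 128b/130b line encoding. This is a theoretical upper bound only (packet and protocol overhead come on top), and the helper name is just for illustration.

```python
# Theoretical PCIe link bandwidth from signalling rate and line encoding.
# Gen3 runs at 8 GT/s per lane, Gen4 at 16 GT/s; both use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """One-direction bandwidth in GB/s, before packet/protocol overhead."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


if __name__ == "__main__":
    for gen in (3, 4):
        print(f"Gen{gen} x4: {link_bandwidth_gb_s(gen, 4):.2f} GB/s")
```

So a x4 drive dropped into a Gen3 slot has about half the sequential ceiling of the same drive on Gen4, while small random I/O is much less affected by the link.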

You lose a lot of IOPS burst headroom. I mean, 1+ million IOPS is pretty conservative tbh.

Thanks for the details!

Have you by chance had any experience with basic Gen4 PCIe NVMe switches without the SATA/SAS part?

I am thinking of the Broadcom P411W-32P, x16 to “32” lanes. The only thing I don’t like is the uncertainty regarding the cables.

Broadcom’s older HBA 9400 line that also supports NVMe used non-standard U.2 cables and the cables Broadcom lists for the P411W-32P are all 1 m in length which sucks if you want to build a compact DIY NVMe drive shelf with Icy Dock backplanes (a new revision uses OCuLink for Gen4 instead of U.2).

(I really dislike proprietary cables.)

800GB size, with 100PB endurance would not really fit a typical use case; yes, it might run for ages, but a person would not keep such a drive running more than 10 years in a computer before upgrading to larger capacity, if only as a cache drive.

Where it would work is automation, like logging drives in cars, where a TLC drive that reaches its max write cycles bricks the whole car…

Hey all, I found a solution that has been working so far with using a cable and adapter. I’m a new user here so it’s not currently letting me officially link…

The one cable I found on Amazon that lists it’s able to support PCIe 4.0 speeds:

LINKUP - Internal 16G U.2 Cable (85Ω 85ohm PCIe Gen 4 Mini SAS HD to U.2/SFF-8643 to SFF-8639 Cable) with SATA Power

The M.2 adapter I used is basically the only black one that is 110 mm long… again, sorry it’s not letting me link.

Do you have a system that supports PCIe AER on a UEFI level, and is the P5800X connected via CPU PCIe lanes?

Getting PCIe Gen4 SSDs to “work” with M.2-to-U.2 adapters, SFF-8639 for a direct SSD connection or 8643 cables for a backplane, isn’t “hard”; the pain comes when you look at error logs.

On Ryzen systems only CPU PCIe properly supports AER, and doing a standard CrystalDiskMark benchmark run with a Samsung PM1733 that tops out at 7,400 MB/s sequential read causes ca. 50 PCIe errors with passive adapters and cables without PCIe redrivers (best scenario I’ve seen so far).

In Windows these errors are logged as WHEA ID 17 entries.
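On the Linux side, one way to look at corrected PCIe errors per device is the AER statistics the kernel exposes in sysfs. The sketch below assumes a kernel that provides the `aer_dev_correctable` file for the device and a platform that actually hands AER to the OS (see the PFEH discussion above). The device address in the usage note and the sample text are placeholders, not output from a real system.

```python
from pathlib import Path


def parse_aer_counters(text: str) -> dict[str, int]:
    """Parse 'name count' lines into a dict.

    Counter names may contain spaces, so only the last
    whitespace-separated field is treated as the count.
    """
    counters: dict[str, int] = {}
    for line in text.splitlines():
        parts = line.rsplit(None, 1)
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def read_correctable_errors(bdf: str) -> dict[str, int]:
    """Read the corrected-error counters for one PCI device.

    `bdf` is the device address as shown by lspci -D (domain:bus:dev.fn).
    Raises FileNotFoundError if the kernel or platform does not expose
    AER statistics for that device.
    """
    path = Path("/sys/bus/pci/devices") / bdf / "aer_dev_correctable"
    return parse_aer_counters(path.read_text())


# Made-up sample in the same 'name count' layout, for illustration only.
SAMPLE = """\
Receiver Error 2
Bad TLP 48
Bad DLLP 0
TOTAL_ERR_COR 50
"""

if __name__ == "__main__":
    print(parse_aer_counters(SAMPLE))
```

Reading the counters before and after a benchmark run, for example with `read_correctable_errors("0000:01:00.0")` using your SSD's own address, gives a per-run error count comparable to counting WHEA entries in Windows.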

I would; I would love nothing better than to have a lifelong permanent storage device.

If we get to a point where everyone needs _ TiB for normal usage, I would still like to have all my high-density data (including plain text things like personal notes, diary (if you keep such things), contacts, etc.) on such a drive, almost like an annex of my brain.

Granted, if one treats a storage drive like this, it needs to be well encrypted; so one would need to be careful to remember that encryption key very well, especially if it is cycled every few decades. Over multiple decades, cycling keys would likely be a necessity, either to limit potential leakage by the devices used to access the drive in that time, or as part of re-encrypting with safer algorithms.

Though if it really is so long lived, even if the password is forgotten, one could keep it for many years without worry in case the password is later remembered.

One could accomplish something similar with an encrypted partition/disk-image or ZFS dataset that one carries over from system to system in the same way, but I think having a physical drive would reduce the need to micromanage this kind of thing.

Even outside of daily use, a nigh-permanent drive would let you keep a long-term, no-maintenance backup with family, a friend, or in a safe-deposit box (if you have the funds for such a thing).

As I see it, assuming I am not missing any fatal caveats, Optane might be the first credible replacement for good ink+paper for long-term storage; if I had the money, I would want to test conformal-coating the internals, to see if that could further stretch longevity.

The problem is that Optane is only rated for a 3-month data retention, just like enterprise SSDs. Your data might be safe for longer, but if you don’t connect your P5800x to power here and there it’s gonna be bad news.