What on earth is this?

Note: This how-to assumes you are at least a bit familiar with the underlying technologies: comfortable on the Linux CLI, familiar with installing packages on your distro, and familiar with SSH and key-based authentication. You should also have at least a vague notion of nginx, web services, port forwarding and network address translation (NAT).

If you’re a tinkerer, chances are you’ve forwarded a port from your home router to some machine inside your network.

In an effort to make things more newb-friendly, a protocol called UPnP was created, wherein a device, like an Xbox, can ask your router to forward inbound connections to it. (Sometimes you see complaints about “strict NAT” not permitting inbound connections with these devices…)

What I want to do is set up something like:

nextcloud.wendell.tech

plex.wendell.tech

emby.wendell.tech

vpn.wendell.tech

wiki.wendell.tech

mail.wendell.tech

and offer services on them. I know many of our community run things like NextCloud in the cloud, but I want to run these on my home internet connection. The main problem is that I don’t want to have DNS resolution lead directly to my home IP. I also want an extra layer of filtering and protection beyond what I would get with just my home firewall.

Why my home connection? While bandwidth and latency are not as good as “the cloud”, the compute and storage costs are much, much lower. As in, just barely, cost-of-electricity-only much lower. It’s a sunk cost that I’ve got all these old drives and computers lying around – they can run my infrastructure.

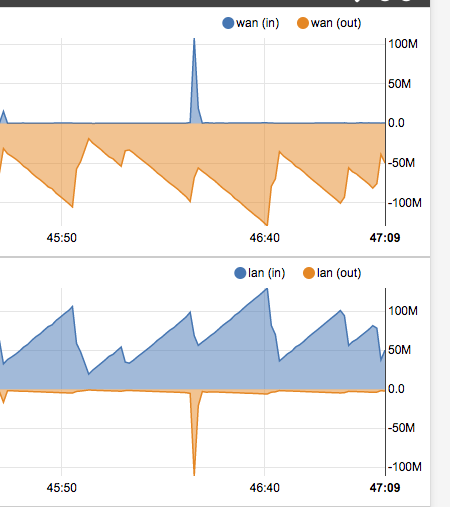

In truth, 5-10 megabit of upload is a sort of lower-bound to do “OK” with these services, but 25-100 megabit upload and you’ll be hard-pressed to tell any difference with a cloud setup. If you’re a data hoarder, then this is a great setup.

That means the ideal setup is to have these DNS entries, and services, terminated on something in the cloud (such as a Linode machine – http://linode.com/level1techs – ) and then proxy, or forward, connections to the service and port on my home machine.

Introducing HAProxy

http://www.haproxy.org/

The Reliable, High Performance TCP/HTTP Load Balancer

Yes, most people will load balance to just a single node on their home/homelab connection. Or maybe not? haha. The Dramble.

HAProxy is in every major distro. You can even configure it to work with SSL/HTTPS and the configuration is pretty straightforward.

Just forward Port 80/443 from your public IP to the internal machine running HAProxy, and then HAProxy can forward to any number of internal machines that you configure.

For email, you can use the “TCP” forwarding mode of HAProxy and forward port 25 to a non-standard inbound port on your home machine. (Almost all ISPs block inbound port 25, and for good reason: most people do not know how to securely maintain an email server!)

Too complicated? – Need GUI?

Enter HAProxy-WI

One person’s (apparently) labor of love – a reasonable GUI to manage the madness. While it is open-source, if you want prebuilt binaries or a docker image, you are supposed to subscribe.

I am not sure how I feel about this, but I did talk the author into adding a lower-cost subscription tier for home/personal use.

I am a believer in supporting creators, so I subscribed to try it out and I used the docker image, as well as the source on github. Both seemed to work more or less equivalently.

I would feel better about it if it were formally audited. I would also feel better if, rather than using binaries, I built my solution directly from github in an automated way.

(Any precocious forum users want to create a docker-compose yaml that builds an appliance from github?)

This project is a little more sophisticated than just a front-end for HAProxy, too. HAProxy itself, which is fully free (libre) and open source, is a proxy that is intended to serve as a single endpoint that forwards requests to a pool of many servers behind it.

HAProxy-WI gives us a nice gui for that, but also for NGINX (which is a nice and sophisticated web server) and Keepalived. It is fairly straightforward to manage these processes directly from the command line – and for home users these services mostly work fine on a low-power device, like a Raspberry Pi (for tens to hundreds of megabits of bandwidth, anyway).

Because most home internet connections only have a single IP, it is possible for HAProxy to be “the” server running services such as HTTP, HTTPS, etc. directly from that IP and then, through the magic of packet sniffing (the Host header for HTTP or, in the case of HTTPS, SNI), figure out which internal server you intended to connect to.

You do NOT need this gui to use the knowledge in this little how-to, but it is a quality of life improvement.

More Complex Setups

Some ISPs filter inbound connections on HTTP/HTTPS. That’s okay. You can run haproxy-wi on a very small instance on Linode for example (http://linode.com/level1techs) and then forward inbound port 80/443 on your Linode machine to port 52135 on your home internet connection. It is very unlikely that your ISP is filtering port 52135. And because of the way the proxy system works, the web clients hitting your website don’t know the traffic is really coming from your home computer on a non-standard port.

| Linode IP (HAProxy) | Home IP (public), router port forward | Internal IP |
| --- | --- | --- |
| 45.45.45.45:25 | 8.6.5.2:5221 (tcp forwarding) | 192.168.1.1:25 |
| 45.45.45.45:443 | 8.6.5.2:8443 (tcp forwarding) | 192.168.1.2:443 |
| | 8.6.5.2:8444 (tcp forwarding) | 192.168.1.3:443 |

etc.
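As a rough sketch of what the port 25 mapping above could look like on the Linode side, here is a small shell function that prints an HAProxy TCP-mode listen section. The function name tcp_forward, the section name smtp_in and the argument order are made up for illustration; the IPs and ports are the example values from the mapping. Treat it as a starting point to paste into your own config, not a finished setup.

```shell
#!/bin/bash
# Sketch: print an HAProxy TCP-mode "listen" section for one forwarded port.
# tcp_forward and the section/server names are illustrative, not from HAProxy-WI.
# Usage: tcp_forward NAME LISTEN_PORT HOME_HOST HOME_PORT
tcp_forward() {
    local name="$1" listen_port="$2" home_host="$3" home_port="$4"
    cat <<EOF
listen ${name}
    mode tcp
    bind :${listen_port}
    server ${name}_home ${home_host}:${home_port} check
EOF
}

# Mail: Linode port 25 -> home public IP port 5221
# (the home router then forwards 5221 to the internal mail server).
tcp_forward smtp_in 25 8.6.5.2 5221
```

In TCP mode HAProxy just relays bytes, so anything that depends on reading the HTTP request (like routing by Host header) is not available for that listener.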

While it is possible to skip the “cloud endpoint” and run this on your home internet connection, I think I should probably write that up separately.

This is a great way to have a giant media collection “online” but not have it directly on the internet.

(And, not with HAProxy-WI, but with doing it manually, you can layer on filtering, such as an intrusion detection system like Snort or a web application firewall, to help protect your home network that much more.)

Furthermore, you can restrict inbound traffic on your home IP so that it is only accepted from your Linode machine (or other server on the internet), in case your ISP starts snooping on your traffic.

Getting Started with HAProxy-WI

It’s not time for that yet! To use HAProxy-WI, you need working HAProxy and (ideally) Nginx!

The first step is setting up a VM which has a public IP address (ideally) and getting your DNS set up. Since HAProxy-WI is just a front-end, you also need to go ahead and install HAProxy and NGINX (the web server) on your cloud host.

If you want a setup like mine, just set up a DNS wildcard A/AAAA record like

*.wendell.tech

that has your linode public IP(s) – 46.xx.yy.zz / [::] etc for example.

Once you’ve done that, I’d recommend also setting up docker, and then configuring haproxy-wi as a docker image.

https://haproxy-wi.org/installation.py

**Note: where the instructions say to run it on port 443, I suggest running it on another port such as 8443 instead if you are planning to use this same IP address for your wildcard domain.**

Linode/Cloud IP

45.45.45.45 : 443 (HAProxy service itself)

45.45.45.45 : 8443 (HAProxy-WI gui)

You see, what HAProxy-WI does is connect, via ssh, to the server you set up, and then it updates the haproxy and nginx configs.

I found it convenient to just run HAProxy-WI in a docker container and have it connect back to localhost via ssh.

Ideally, you set up a user that is a limited user, but that has access to update haproxy and nginx configs. It isn’t recommended that you use the root user, because any vulnerability in HAProxy-WI would expose a key that could be used to connect back to your gateway machine as root. Not good.

I am not going to cover that in this how-to. But if you’re a total newb and lost, maybe I can cover that in another how-to. Speak up and let us know.

For the purposes of “set it and forget it” I would recommend manually starting and stopping the docker container only when you need it. You lose the monitoring capabilities a bit, but this is safer.

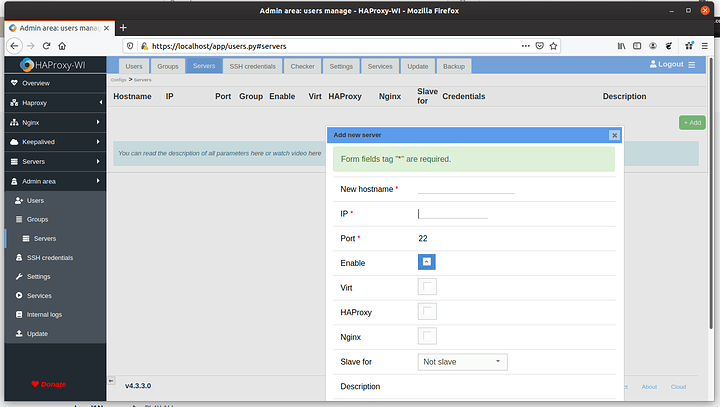

Add a server in HAProxy-WI –

If this is the same setup I’ve been describing, just enter localhost and set up your ssh keys. Probably port 22 as well.

If this is a different machine on the internet, enter its IP and SSH credentials.

The idea is that HAProxy-WI will connect via SSH to your machine running haproxy and nginx, and configure it for you, via the gui.

Navigate to Admin Area, Servers and add a server. This is the server on which you have already installed haproxy and nginx, probably through apt, dnf or your distro’s package manager.

If that is NOT the case, then you will need to forward all the ports you set on your router to the IP Address of your HAProxy-WI machine (or docker container) here, and then use your internal IP address.

For example:

Public IP (your router internal/external address)

5.10.15.20 -> 192.168.0.1

HAProxy IP

192.168.0.5

Fancy Pants PiHole IP

192.168.0.10

Your client computer

192.168.0.20

You would configure your router to forward port 52135 (tcp and udp, ideally, or at least tcp) to 192.168.0.5 and then in the HAProxy WI gui, set the IP to 192.168.0.5 and the port to 22.

Once you do that, you can load the config and setup an HTTP load via the haproxy-wi gui.

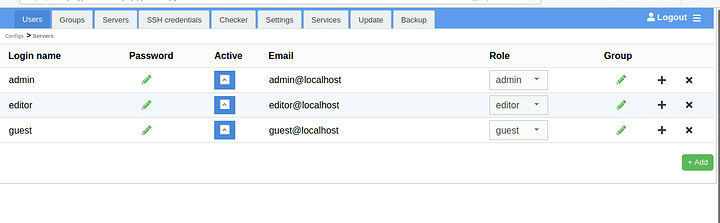

Be aware that, by default, there are 3 users created on HAProxy-WI. You’ll ant to disable the ones you don’t use and set a secure password on the ones you do:

pfSense/OPNsense Users take note

pfSense has a reasonable and perfectly functional built-in HAProxy gui. It works fine. If you are using pfSense for your router, just use that. It doesn’t have as many bells and whistles as HAProxy-WI, but it does the job.

Configure HAProxy

Ideally configure via HAProxy-WI, but you can do it via the CLI no problem.

The idea is to create a front-end that looks at the incoming hostname and routes each request to the appropriate backend.

In our example, we have plex, nextcloud and a wiki running on different nonstandard ports.

wiki.wendell.tech will proxy to some.random.customer.isp.net port 58212 and

plex.wendell.tech will proxy to some.random.customer.isp.net port 58213 and so on.

In the haproxy config for each of these backends, you can elect to use encrypted or unencrypted http connections. Add the ssl keyword to the server line to have HAProxy connect to that backend over ssl.

Plex is a little special and requires a few more options to work properly with HAProxy:

```
# /etc/haproxy/haproxy.cfg snippet
...
frontend main
    mode http
    bind :::443 v4v6 ssl crt /etc/letsencrypt/live/wendell.tech/fullcert.pem

    acl url_static path_beg -i /static /images /javascript /stylesheets
    acl url_static path_end -i .jpg .gif .png .css .js

    acl host_plex hdr(host) -i plex.wendell.tech
    acl root_dir path_reg ^$|^/$
    acl no_plex_header req.hdr_cnt(X-Plex-Device-Name) -i 0

    acl host_radarr hdr(host) -i radarr.wendell.tech
    acl host_sonar hdr(host) -i sonar.wendell.tech
    acl host_sabnzb hdr(host) -i sabnzb.wendell.tech
    acl host_synology hdr(host) -i syn.wendell.tech
    acl host_nc hdr(host) -i nc.wendell.tech

    use_backend synology if host_synology
    use_backend sonar if host_sonar
    use_backend sabnzb if host_sabnzb
    use_backend radarr if host_radarr
    use_backend nc if host_nc

    redirect location https://plex.wendell.tech/web/index.html code 302 if no_plex_header root_dir host_plex
    use_backend plex_any if host_plex

    use_backend static if url_static
    default_backend app
...
```

So this is an HAProxy frontend section. We will have only one of those, because we have only one public IP and it is listening on port 443.

[ Note that I am using letsencrypt here (see below for letsencrypt if you are lost and don’t know how to get your free letsencrypt SSL certs) ]

I am telling HAProxy to listen on ipv4 and ipv6 port 443 (ssl) and giving it the path to my LetsEncrypt key+certificate file. Depending on what the hostname is, HAProxy will route your http request to a specific backend, in the HAProxy vernacular. Each backend is defined as a host and port that HAProxy will connect to.

So we end up with an haproxy config something like

```
# /etc/haproxy/haproxy.cfg

# "ssl verify none" here means: connect over ssl, but do not verify
# the backend's cert (so a self-signed cert is accepted).
backend plex_any
    mode http
    balance roundrobin
    server plex1 8.6.5.2:8001 check ssl verify none

# This is not an ssl connection. Bad idea for security!
backend synology
    mode http
    balance roundrobin
    server synology 8.6.5.2:8006 check

# This is an nginx instance running on port 81:
backend app
    mode http
    balance roundrobin
    server app1 127.0.0.1:81 check

# and so on, with many more backends defined.
# One for each use_backend rule in the frontend above.
# you will NOT be able to copy-paste this config.
# think it through.
```

to service all our inbound hostnames that all map to this one IP address.

(haproxy also supports tcp connection proxying! but things like our acls for filtering by host won’t work in that case…)
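That said, for HTTPS there is one thing TCP mode can still look at: the SNI name in the TLS handshake. Here is a hedged sketch of a shell function that prints a TCP-mode frontend routing on SNI. The function name sni_frontend, the frontend name tls_passthrough, the backend names and the 5 second inspect delay are all assumptions for illustration. Note this is an alternative to the ssl-terminating frontend shown earlier, not an addition to it: in this mode HAProxy does not decrypt anything, so each home service has to present its own certificate.

```shell
#!/bin/bash
# Sketch: print a TCP-mode frontend that picks a backend from the TLS SNI name.
# Frontend/backend names and the 5s inspect delay are illustrative assumptions.
# Usage: sni_frontend HOSTNAME BACKEND [HOSTNAME BACKEND ...]
sni_frontend() {
    cat <<'EOF'
frontend tls_passthrough
    mode tcp
    bind :443
    # Wait for the TLS ClientHello so the SNI name can be read.
    tcp-request inspect-delay 5s
    tcp-request content accept if { req.ssl_hello_type 1 }
EOF
    # One use_backend rule per HOSTNAME/BACKEND pair.
    while [ "$#" -ge 2 ]; do
        printf '    use_backend %s if { req.ssl_sni -i %s }\n' "$2" "$1"
        shift 2
    done
}

sni_frontend plex.wendell.tech plex_tcp nc.wendell.tech nc_tcp
```

Each backend named here would also need mode tcp and a server line pointing at the matching home port.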

On the nginx side it is very simple:

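```
server {
    listen 81 default_server;
    listen [::]:81 default_server;
    server_name wendell.tech;

    # Redirect to https. Only the first return takes effect, so the
    # return 404 below is never reached while this line is present.
    # If HAProxy also sends its port 443 traffic here, drop this line,
    # otherwise the redirect loops.
    return 302 https://$host$request_uri;

    return 404;
}
```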

HAProxy gets the inbound connection and, by default (when no hostname rule matches), forwards it to this local nginx server running on the cloud machine. It just serves static content. So anyone going to this server that doesn’t know the magic words or urls will not be able to use the proxy to get their traffic forwarded. This layer is a bit security-by-obscurity but it is mostly effective at preventing bots from scanning for things like plex installations or nextcloud installations, or from those services being indexed.

Plex Is special!?

Note the plex config also. Plex is a bit weird to proxy/forward, so I had to add some extra rules. Those rules should be copy-pastable if you desire a similar setup with HAProxy and Plex Media server.

The documentation is quite good on haproxy as well, and has lots of examples for various use-cases.

Your Local Firewall

On your local firewall, at your home connection, you can restrict inbound connections so that only your Linode (haproxy machine) is permitted to access these non-standard ports. It’ll keep snoops away, and if your ISP notices traffic to those ports they won’t be able to connect themselves to see what it is.
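A minimal sketch of that restriction, assuming the internal machine runs Linux with iptables and you apply the rules on that machine itself (many routers expose the same idea as a “source IP” field on the port-forward rule instead, which is simpler if you have it). The run helper and the allow_only function are hypothetical names; 45.45.45.45 is the example Linode IP from earlier. By default the script only prints the commands so you can read them before running anything as root.

```shell
#!/bin/bash
# Print commands by default; set DRY_RUN=0 to really run them (as root).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Allow one source IP to reach a TCP port, and drop everyone else on that port.
# Usage: allow_only SOURCE_IP PORT
allow_only() {
    run iptables -A INPUT -p tcp -s "$1" --dport "$2" -j ACCEPT
    run iptables -A INPUT -p tcp --dport "$2" -j DROP
}

# 45.45.45.45 is the example Linode IP; 443 is the port the internal
# service listens on (after the router's port forward).
allow_only 45.45.45.45 443
```

Be aware the DROP rule as written also blocks machines on your own LAN from that port, so add an ACCEPT for your local subnet ahead of it if you still want direct local access.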

For troubleshooting, you can also permit inbound connections from anywhere on mappings like some.random.customer.isp.net port 58212. For example, if that mapped to your pi-hole, then in the browser you should be able to load:

http(s)://some.random.customer.isp.net:58212 and see the page content, even before we add HAProxy to the mix.

Let’s Encrypt!?

It IS possible to configure the HAProxy host to support Let’s Encrypt. This is largely outside the purview of HAProxy-WI, though HAProxy-WI can manage certs in a more traditional way for you.

I can’t give you a clear-cut recipe or how-to, yet, but I’m working on it.

Here’s a rundown so you can DIY it if you’re handy at the CLI:

- Set up your DNS forwarding/wildcard (e.g. foo.wendell.tech)

- Set up nginx to listen on port 80, and set it to do a redirect to https (http is port 80, https is port 443):

  `server { listen 80; ... return 302 https://$host$request_uri ... }`

On your haproxy machine, stop nginx and haproxy, then run certbot in standalone mode to get your cert for all the domains you’re actually using (a wildcard cert is also possible with dns challenges). Then cat the certificate chain and private key into a new file. You can specify that file in the haproxy ssl configuration, and have haproxy listen on port 443.

Here’s the renew script I use, which stops haproxy and nginx:

```
#!/bin/bash

# - Congratulations! Your certificate and chain have been saved at:
#   /etc/letsencrypt/live/wendell.tech/fullchain.pem
#   Your key file has been saved at:
#   /etc/letsencrypt/live/wendell.tech/privkey.pem

service nginx stop
service haproxy stop

certbot --expand --authenticator standalone certonly \
  -d sonar.wendell.tech \
  -d plex.wendell.tech \
  -d nc.wendell.tech \
  -d syn.wendell.tech \
  -d wendell.tech \
  -d radarr.wendell.tech \
  -d sabnzb.wendell.tech

# didn't work?
# --pre-hook "service nginx stop && service haproxy stop" --post-hook "service nginx start && service haproxy start"

sleep 2

# HAProxy needs the cert *and* the key in one file. This cat command will fix you up.
# Be sure the paths match what you've set in your HAProxy config.
cat /etc/letsencrypt/live/wendell.tech/fullchain.pem \
    /etc/letsencrypt/live/wendell.tech/privkey.pem \
    > /etc/letsencrypt/live/wendell.tech/fullcert.pem

sleep 2

service nginx start
service haproxy start
```

Just add this to /root/auto_renew.sh and run it weekly. Be sure to adjust the paths and filenames to match what certbot is actually updating. Don’t be tempted to use the nginx plugin for certbot, as it’ll wreck things in this setup.

With haproxy listening on port 443, and the app front end configured properly, you can now route things based on those ACL directives in the HAProxy config.
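To check that the hostname routing does what you expect before you touch DNS, something like this can help. It is only a sketch: the run helper and probe function are hypothetical names, 45.45.45.45 is the example Linode IP, and by default it just prints the curl commands instead of running them. curl’s --resolve option pins a hostname to an IP for that one request, so you can aim any hostname at the proxy.

```shell
#!/bin/bash
# Print commands by default; set DRY_RUN=0 to really run them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Ask the proxy at PROXY_IP for https://HOSTNAME/ and report status + redirect.
# --resolve pins HOSTNAME:443 to PROXY_IP without touching DNS;
# -k skips certificate checks so names missing from the cert can be probed too.
# Usage: probe HOSTNAME PROXY_IP
probe() {
    run curl -sk -o /dev/null -w '%{http_code} %{redirect_url}\n' \
        --resolve "$1:443:$2" "https://$1/"
}

probe plex.wendell.tech 45.45.45.45     # should land on the plex backend
probe unused.wendell.tech 45.45.45.45   # should land on the default backend
```

Run it with DRY_RUN=0 once the commands look right; a hostname with an ACL and one without should come back with visibly different responses.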

At this point, the HAProxy-WI gui should reflect the work you’ve done here, if you want to go that route. It has a gui text-file editor for setup you’ve done beyond the initial gui config.

It is possible to accomplish this same thing but entirely without nginx. You can just have HAProxy do the redirects. Personally, I like to also set up an nginx site on this same machine so that I can offer a flat html website.

Anyone going to this IP address or with the base domain (or an unused subdomain) will see the placeholder page. Only when you go to plex.wendell.tech will you get the plex media server. Note that the Letsencrypt SSL certificate will “give away” all the subdomains you are using, since every cert issued by LE is recorded in public Certificate Transparency logs, and since all the domains are listed on the cert itself.

You can layer on HTTP authentication and even HTTP client-side certificate authentication for real security, but that’s perhaps best left to a future how-to.

Troubleshooting

Don’t forget if you’re setting this up on CentOS or Fedora, that SELinux makes things pretty locked down by default:

- You may need to allow haproxy/nginx to make outbound connections. This is disabled by default.
- You may need to allow http/nginx to listen on non-standard ports. Also disabled by default.
- You may need to run firewall-cmd to permit inbound traffic/open ports.
- etc.

```
# by default haproxy can't make outbound proxy connections, for security.
setsebool -P haproxy_connect_any 1

# by default port 81 isn't allowed for nginx to listen on
semanage port -a -t http_port_t -p tcp 81
```
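For the firewall-cmd part, here is a hedged sketch, assuming firewalld with its default zone. The run helper and open_web_ports function are hypothetical names, and 8443 is just the example port suggested earlier for the HAProxy-WI gui. As written it only prints the commands; set DRY_RUN=0 to actually apply them as root.

```shell
#!/bin/bash
# Print commands by default; set DRY_RUN=0 to really run them (as root).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Open http/https plus any extra TCP ports given as arguments, then reload.
# Usage: open_web_ports [EXTRA_TCP_PORT ...]
open_web_ports() {
    run firewall-cmd --permanent --add-service=http
    run firewall-cmd --permanent --add-service=https
    for port in "$@"; do
        run firewall-cmd --permanent --add-port="${port}/tcp"
    done
    # --permanent rules only take effect after a reload.
    run firewall-cmd --reload
}

open_web_ports 8443
```

If you only start the HAProxy-WI container when you need it, you may prefer to leave 8443 closed and reach the gui over an ssh tunnel instead.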

Wrapping Up

See, the thing is ALL the inbound traffic goes through HAProxy. It ends up deciding which machine to send the traffic to based on the hostname you’re trying to connect to, which is a relatively new trick in networking. This is, of course, unnecessary if everything can have its own IP address. Most people don’t have that type of a setup, except maybe with IPv6.
