I thought you were planning to install Linux (1 GPU) and run Windows as a VM that is given the other GPU to run games.

I have never used Linux before. It seems scary, but I have been wanting to try it. Once I get a spare HDD I'll try it out.

I picked up the 1070 for $350 on a deal. I originally bought the 480 as the main card, but then found out I'd need an Nvidia card for PhysX games (when I tried to run a PhysX game on it... wow, just wow. So bad.) I also found that my RX 480 runs emulators a lot more smoothly than my 1070. So I'm building something of a hybrid of my current 1070 streaming machine and my old 480 MAME machine.

How exactly are you planning to use both cards then?

Initially I was going to try my hand at something I saw in a Linus video years back. While I don't need both cards running in the same machine (and depending on the results after the build, I might just put the RX card into my LAN box), I don't really feel like parting with them right now. I suppose I could test the differences between G-Sync and FreeSync monitors with the cards.

Alright. If what you are planning works in general (switching GPUs as you desire), I assume it should work with Ryzen as well.

With the X370 Asus Prime board you picked, you would have no issues with the graphics card combo you mentioned. But be aware that games that don't support DX12 explicit multi-GPU won't ever use anything but the primary card, because implicit (driver-managed, SLI/CrossFire-style) multi-GPU needs matching cards from the same vendor. DX12 explicit multi-GPU can use both cards, as far as I'm aware, though its linked mode expects matched features and VRAM, and very few games implement the unlinked mode that can mix vendors. You may have better results in video editing applications or anything that uses OpenCL for compute. OpenCL is generally vendor-agnostic, and many OpenCL applications simply look for as many devices as they can find and share the workload among them.
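A minimal sketch of that "share the workload" idea in Python. It assumes a hypothetical `Device` list with made-up relative weights; a real OpenCL program would discover its devices with `clGetPlatformIDs`/`clGetDeviceIDs` and would have to choose its own weighting (or balance dynamically). It just splits a batch of work items across both cards in proportion to their weight:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Device:
    name: str    # hypothetical label for an OpenCL device
    weight: int  # hypothetical relative throughput, NOT a real benchmark


def split_work(total_items: int, devices: list[Device]) -> dict[str, int]:
    """Split total_items across devices in proportion to their weight.

    Truncating each share to an int leaves a remainder; those leftover
    items go to the devices with the largest fractional share, so the
    result always sums to total_items.
    """
    weight_sum = sum(d.weight for d in devices)
    exact = [total_items * d.weight / weight_sum for d in devices]
    shares = [int(x) for x in exact]
    leftover = total_items - sum(shares)
    by_fraction = sorted(range(len(devices)),
                         key=lambda i: exact[i] - shares[i],
                         reverse=True)
    for i in by_fraction[:leftover]:
        shares[i] += 1
    return {d.name: s for d, s in zip(devices, shares)}


if __name__ == "__main__":
    # Weights 10 and 7 are illustrative only.
    cards = [Device("GTX 1070", 10), Device("RX 480", 7)]
    print(split_work(1000, cards))  # {'GTX 1070': 588, 'RX 480': 412}
```

A static split like this is the simplest option; real apps often hand out chunks on demand instead, so a faster card naturally picks up more work.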

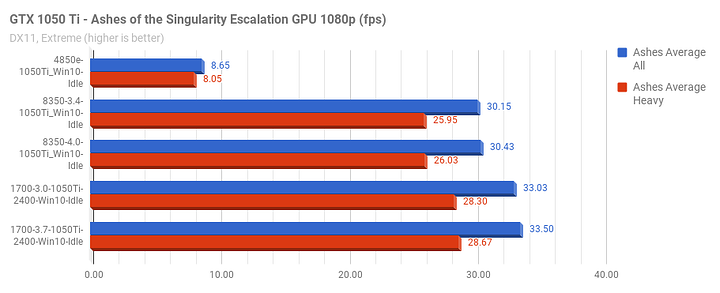

Alright, bedtime. I'll post the full writeup when I wake up, but here are the conclusions:

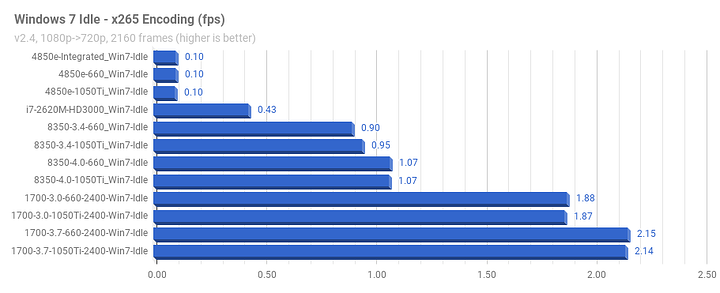

- Synthetic or CPU-bound tasks see roughly a 2x performance increase.

- Most games do not even care. The ones that do gain maybe 5% or less, but sometimes, due to SMT, you get huge performance hits.

So dual-cores suck at encoding basically. More cores/threads at higher clock speeds = better. Worth every penny for productivity. AMD only. Intel's compute per monies stinks across the board.

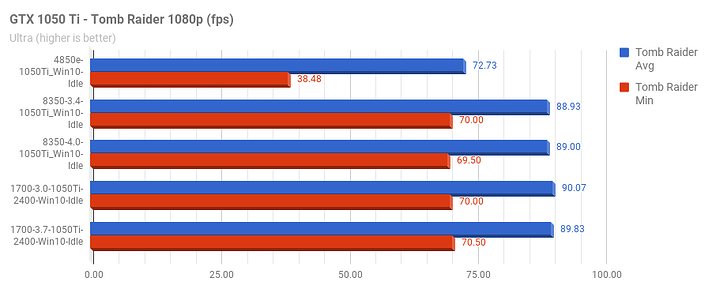

Tomb Raider does not care what CPU you use or at what frequency. It just does not. It also does not even care if you are encoding in the background *while playing* (not shown, will post later). Awesome. Just awesome.

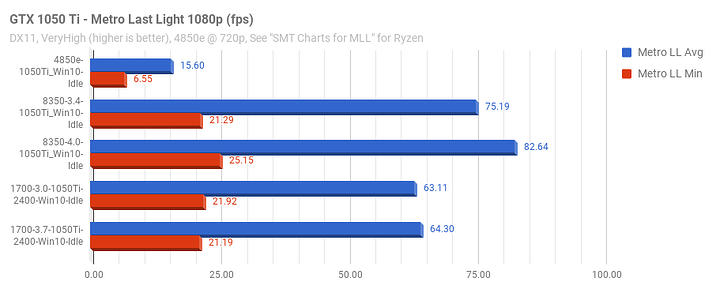

If you have an old game that does not respond well to SMT and will not be getting updated, expect massive performance decreases. Or just like...disable SMT while playing for those games.
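If disabling SMT in the BIOS is too heavy-handed, one per-game workaround (my assumption, not something tested in these charts) is to pin the game to one logical processor per physical core. This Python sketch only computes the affinity mask; it assumes SMT siblings are numbered as adjacent pairs, and `game.exe` is a placeholder. It is not fully equivalent to disabling SMT, since other processes can still use the sibling threads.

```python
def one_thread_per_core_mask(logical_cpus: int) -> int:
    """Affinity bitmask that keeps logical CPUs 0, 2, 4, ... only.

    ASSUMPTION: SMT siblings are numbered as adjacent pairs (0,1), (2,3), ...
    This is common on Windows but not guaranteed, so check the topology
    (e.g. with Sysinternals Coreinfo) before relying on it.
    """
    if logical_cpus <= 0 or logical_cpus % 2:
        raise ValueError("expected a positive, even logical CPU count")
    mask = 0
    for cpu in range(0, logical_cpus, 2):
        mask |= 1 << cpu  # set the bit for the first thread of each core
    return mask


if __name__ == "__main__":
    # Ryzen 7 1700: 8 cores / 16 threads. "game.exe" is a placeholder name.
    mask = one_thread_per_core_mask(16)
    print(f"start /affinity {mask:X} game.exe")  # start /affinity 5555 game.exe
```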

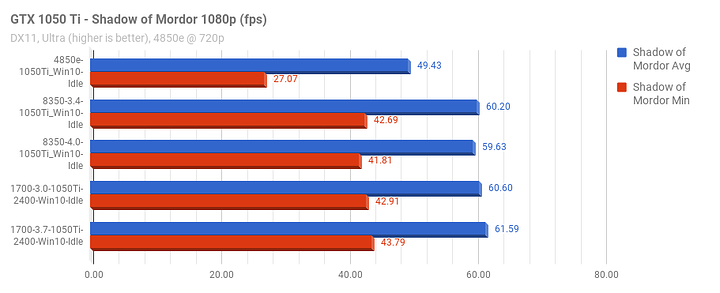

Shadow of Mordor scoffs at 16 threads. Even 2 threads is playable on Win10. (Win 7 is a different story...)

Yay, scaling! Finally! A full 3 fps. Hurrah!

Note that these tests are with a 1050 Ti. A 1070 might scale better.

Handy info to have. If I needed a 1050 Ti, it'd be solid for what I usually play. Hopefully the extra threads will help with video editing and streaming.

What's that? Do you mean Xeon 3400?

Surprised at Peanut's charts, though. I expected the 1700 to do a lot better against the 8350.

I guess there are still significant power savings involved in the upgrade. Edit: also obviously GPU-bound. But still... wow.

That doesn't even exist. It'd be an i5-3470 at best. And if it were a Xeon, I'm sure he'd write it correctly?

Unless he has an ancient 2009 Nehalem-architecture Xeon:

https://www.intel.com/pressroom/archive/releases/2009/20090908comp.htm

Yeah, no. He's GPU-bottlenecked so hard it's not even funny.

Those charts are WORTHLESS given a 1050Ti being used.

(No offense, @Peanut253, but that's just the truth.)

The Shadow of Mordor and Tomb Raider benchmark graphs are the best indicators of that.

That's why I found it so confusing: what is a Core 17, and why was 3400 behind it? As for the Xeon 3400, that was Google's guess.

PS. I didn't write i7, so don't misquote me like that; it just seems wrong (mostly saying that because some people in other threads might get ideas).

Fine, I changed the quote to the original poster's.

But "quote-corrections" are fine on the forum.

Post edited to i7 6700.

Thanks for the correction, that makes a lot more sense.

Not sure why I put i7 3400. Lack of sleep, perhaps.

I can vouch for that; it has happened to me plenty of times, but the coffee is just too addictive, can't stahp consuming it. (PS. send help)

And to actually contribute something on topic: as far as I know, if we are assuming simultaneous use, the other vendor's graphics card will only be usable for compute, with the possible exception of Vulkan/DX12.

The point of the charts is that it is not really surprising that an 8350 @ 3.4 GHz ($180) can keep up with a modern $120 video card (1050 Ti, 5,766 Passmark). Even a modern dual-core like the G4560 ($70) could shift the bottleneck to the GPU. The only CPU on that list that creates a CPU bottleneck is the 4850e from 2008 ($45). The only video card that could shift the bottleneck back to the CPU, assuming an 8350, is perhaps a 1070 or higher ($500, 11,023 Passmark). It is unlikely a 1060 6GB can ($250, 8,717 Passmark).
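The reasoning above boils down to the simplest bottleneck model: the frame rate is capped by whichever part is slower. A small Python sketch of that model; the fps caps below are hypothetical numbers picked for illustration, NOT measurements from the charts:

```python
def effective_fps(cpu_cap: float, gpu_cap: float) -> float:
    """Frame rate under the simplest bottleneck model: the slower part wins.

    cpu_cap: frames/s the CPU could prepare if the GPU were infinitely fast.
    gpu_cap: frames/s the GPU could render if the CPU were infinitely fast.
    """
    return min(cpu_cap, gpu_cap)


def limiting_part(cpu_cap: float, gpu_cap: float) -> str:
    """Name the component that caps the frame rate (ties count as GPU-bound)."""
    return "CPU" if cpu_cap < gpu_cap else "GPU"


if __name__ == "__main__":
    # Hypothetical caps, NOT measurements from the charts above.
    gpu_1050ti = 60.0
    cpu_caps = {"FX 8350": 90.0, "Ryzen 7 1700": 140.0, "4850e": 40.0}
    for cpu, cap in cpu_caps.items():
        print(f"{cpu}: {effective_fps(cap, gpu_1050ti):.0f} fps, "
              f"{limiting_part(cap, gpu_1050ti)}-bound")
```

With these made-up caps, the 8350 and the 1700 both land on the GPU's 60 fps, and only the 4850e is CPU-bound. Raising the GPU cap (a faster card) is what eventually makes the CPU difference visible.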

So when considering...say...GPU-bound workloads like games, it does not make sense to upgrade one's CPU. It really is necessary to spend 3x+ more monies on the GPU than on the CPU for the CPU to ever become a limiting factor. An 8350 at 4.0 GHz+ ($180) probably pairs well with a GTX 1070 (assuming $350). That said, there are some caveats related to OS version and specific games.
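For reference, here is the price and Passmark arithmetic behind that rule of thumb as a short Python sketch. It uses only the prices and scores quoted in the post; the ratios are plain division, not a performance prediction:

```python
from __future__ import annotations

CPU_PRICE_USD = 180  # FX 8350 price quoted above

# name: (price in USD, Passmark score), both as quoted in the post
GPUS = {
    "GTX 1050 Ti": (120, 5766),
    "GTX 1060 6GB": (250, 8717),
    "GTX 1070": (500, 11023),
}


def ratios(cpu_price: float = CPU_PRICE_USD) -> dict[str, tuple[float, float]]:
    """Return (GPU/CPU price ratio, Passmark relative to the 1050 Ti) per card."""
    base_score = GPUS["GTX 1050 Ti"][1]
    return {name: (price / cpu_price, score / base_score)
            for name, (price, score) in GPUS.items()}


if __name__ == "__main__":
    for name, (price_x, perf_x) in ratios().items():
        print(f"{name}: GPU/CPU price {price_x:.2f}x, "
              f"Passmark vs 1050 Ti {perf_x:.2f}x")
```

By these figures the 1050 Ti costs about 0.67x the CPU, the 1060 6GB about 1.39x and the 1070 at $500 about 2.78x; at the $350 deal price the 1070 drops to about 1.94x.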

edit: typos

edit2: Full charts are now up.

- AMD FX 8350 versus AMD Ryzen 7 1700 Scaling on Windows 10 1703

- Windows 7 versus Windows 10 1703 Benchmarks

- Windows 10 1703 Idle versus Load Performance on FX and Ryzen

- Nvidia GeForce GTX 1050 Ti Scaling with FX and Ryzen on Windows 10 1703

There are also some Windows 7-focused ones available externally.

Possible? -- Yes.

Useful outside of virtualization or other niche uses? -- Hell no fam