I have been following a couple of tech youtubers for a while, especially Wendell, and thought I would finally jump into the tech forums as I start down the road of building a new NAS and expand my home lab. I just completed a 3 node cluster, running proxmox with ceph. The hardware I had laying around or got it from the company I was working for, which went out of business this year.

Cluster Specs

3x ASRock B450M PRO4

3x Ryzen 5 6core 3.6Ghz

3x Silicon Power 256GB NVMe(OS)

3x 64GB G.Skill TridentZ 3200

6x Adata SU800 512GB(OSD)

3x Adata SU800 256GB(DB)

3x Intel 540 10GbE Nics

3x Intel quad port 1GbE Nics

Still going through and tightening security as I learn more about Proxmox.

Here is my goal, I want to build the following NAS using TrueNAS Scale. Yes it is extreme over kill but I have a plan to utilize it as much as I can.

AsRock Rack ROMED8-2T

Epyc 7272 12-Core 2.9 GHz

Crucial 256GB DDR4 3200

Icy Dock Cremax RD MB516SP-B 16BAY

8x PNY CS900(to start)

16x 8TB Seagate Exo(from my old job)

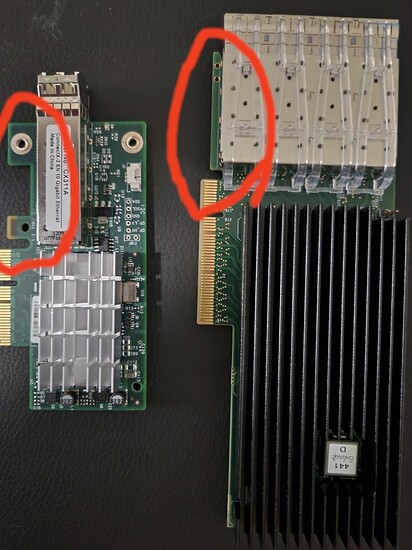

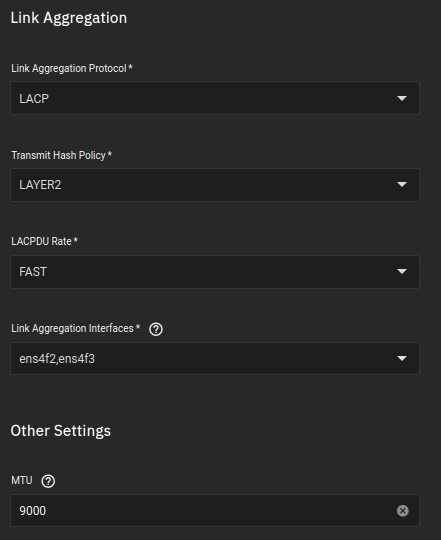

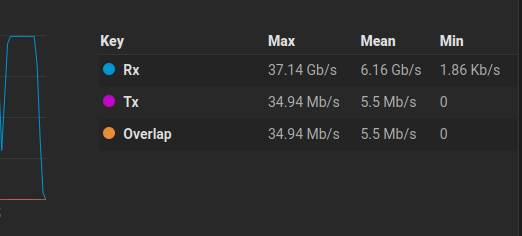

Silicom PE310G4I71LBEU-XR-LP(Intel 710X)

Qlogic 16Gb HBA

LSI HBA IT-mode(internal connection to SSD)

LSI HBA IT-mode(External for HDD)

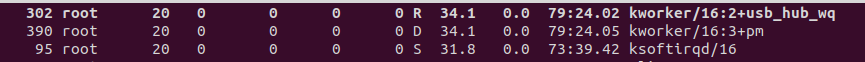

I have a spare MSI x99 mobo with a 10-Core Zeon processor that I will be using to test configurations and performance to make sure certain parts work with TrueNas even though the forums say they do. Silicom Quad port 10GbE for example says its basically an Intel 710X rebranded but I have burned a few times trying to use hardware that is suppose to work but doesn’t. So when the Silicom card gets here that will be the first thing I will test. Next will be the Icy Dock 16bay and 8 SSDs, which should be here the first week of the new year. If all of those work I will purchase the rest of the hardware.

Now this NAS will be used for personal and business. Proxmox backups will go to the NAS along with Plex, with hw acceleration, running as a VM as well as hosting storage for my entire family. Also working out a plan ,with a friend, to dump his companies data to my NAS which I will then purge to a LTO 6 tape. Oh yeah, I also have a MSL4048 with one LTO6 tape drive that my former boss was going to throw out.

So this is going to be a long journey to the finally goal. Look forward to sharing my mistakes and successes as I build this out.