Update … #10?

Anyway. After working with ASRock support, we were able to get the OS to stop complaining about the downgraded PCIe links. Not only that, there was a bug in their beta BIOS that caused a thread to lock up at 100% whenever slot 6 was in use. The issue went away when I downgraded to the stable BIOS. I have to say that ASRock's support has been amazing to work with compared to some of their competition. Not to name any names… *cough* Gigabyte *cough*
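If you are chasing similar downgraded-link warnings on SCALE (which is Linux-based), here is a minimal sketch for spotting PCIe links that trained below what the device says it supports. It assumes the standard Linux sysfs attributes `current_link_width`, `max_link_width`, `current_link_speed` and `max_link_speed`. The function name `check_pcie_links` is hypothetical, made up for illustration, and this is not the fix ASRock support provided.

```bash
#!/usr/bin/env bash
# Sketch: list PCIe devices whose negotiated link is narrower or slower than
# the device's advertised maximum. Reads standard Linux sysfs attributes.

# check_pcie_links [sysfs_dir]
#   sysfs_dir defaults to /sys/bus/pci/devices (overridable for testing)
check_pcie_links() {
    local root="${1:-/sys/bus/pci/devices}"
    local dev cur_w max_w cur_s max_s
    for dev in "$root"/*; do
        # Not every PCI function exposes link attributes; skip those.
        [ -r "$dev/current_link_width" ] && [ -r "$dev/max_link_width" ] || continue
        cur_w=$(cat "$dev/current_link_width")
        max_w=$(cat "$dev/max_link_width")
        cur_s=$(cat "$dev/current_link_speed" 2>/dev/null)
        max_s=$(cat "$dev/max_link_speed" 2>/dev/null)
        # Report any device whose width or speed differs from its maximum.
        if [ "$cur_w" != "$max_w" ] || [ "$cur_s" != "$max_s" ]; then
            printf '%s: x%s @ %s (capable of x%s @ %s)\n' \
                "$(basename "$dev")" "$cur_w" "$cur_s" "$max_w" "$max_s"
        fi
    done
}

# Usage: check_pcie_links
```

Running it with no argument scans `/sys/bus/pci/devices`; `lspci -vv` shows the same information as `LnkCap` vs `LnkSta`. A mismatch is not always a problem: a Gen4 card in a Gen3 slot, or a device that lowers its link speed when idle, will also show up.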

Now that everything is stable, the SSD pool (striped RAID-Z) still performs as expected, and fio can max out the 9400-16i without any issues. This will be the main NFS pool and possibly app storage.

I also purchased a RIITOP 4-port M.2 NVMe adapter card to see whether it would work with the bifurcation on this motherboard. I installed four Silicon Power (SP) 256GB Gen 3 NVMe drives for testing and set the designated slot to 4x4x4x4. Both the motherboard and TrueNAS picked up each drive without any problem, and once again fio was able to max out the throughput without any issues. Here are a few of the tests and configurations I ran.
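To put "max out the throughput" in context, here is a rough sketch of the theoretical PCIe 3.0 ceiling, assuming each drive gets its own Gen3 x4 link from the bifurcated x16 slot. The helper name `pcie_gen3_gbps` is hypothetical; the figures are per direction and before protocol overhead, so real throughput will be lower.

```bash
#!/usr/bin/env bash
# Theoretical PCIe 3.0 bandwidth per direction, in GB/s (10^9 bytes/s).
# Gen3 signals at 8 GT/s per lane with 128b/130b encoding, so one lane
# carries 8 * 128/130 / 8 ~= 0.985 GB/s each way. TLP headers and flow
# control reduce real-world throughput further.
pcie_gen3_gbps() {
    local lanes="$1"
    awk -v l="$lanes" 'BEGIN { printf "%.2f\n", 8 * 128 / 130 / 8 * l }'
}

pcie_gen3_gbps 4    # one NVMe drive on an x4 link; prints 3.94
pcie_gen3_gbps 16   # all four drives sharing the x16 slot; prints 15.75
```

fio's decimal figure (the GB/s value in parentheses) is the one to compare against these numbers. If a single direction reports more than the slot can carry, part of the I/O is likely being served from RAM (for example the ZFS ARC) rather than the drives. The same helper with 8 lanes gives 7.88 GB/s, the ceiling for an HBA that negotiates a Gen3 x8 link.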

RAID-Z

Run-1 (random read/write, `--rw=randrw`)

```shell
fio --bs=128k --direct=1 --directory=/mnt/test --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=rw --numjobs=12 --ramp_time=10 --runtime=60 --rw=randrw --size=128M --time_based
```

```
rw: (g=0): rw=randrw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=posixaio, iodepth=32

Run status group 0 (all jobs):
READ: bw=15.5GiB/s (16.6GB/s), 15.5GiB/s-15.5GiB/s (16.6GB/s-16.6GB/s), io=929GiB (997GB), run=60003-60003msec
WRITE: bw=15.5GiB/s (16.6GB/s), 15.5GiB/s-15.5GiB/s (16.6GB/s-16.6GB/s), io=929GiB (998GB), run=60003-60003msec
```

Run-2 (sequential read/write, `--rw=rw`)

```shell
fio --bs=128k --direct=1 --directory=/mnt/test --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=randrw --numjobs=12 --ramp_time=10 --runtime=60 --rw=rw --size=256M --time_based
```

```
randrw: (g=0): rw=rw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=posixaio, iodepth=32

Run status group 0 (all jobs):
READ: bw=14.4GiB/s (15.5GB/s), 14.4GiB/s-14.4GiB/s (15.5GB/s-15.5GB/s), io=866GiB (930GB), run=60003-60003msec
WRITE: bw=14.4GiB/s (15.5GB/s), 14.4GiB/s-14.4GiB/s (15.5GB/s-15.5GB/s), io=867GiB (931GB), run=60003-60003msec
```

Striped Mirror

Run-1 (random read/write, `--rw=randrw`)

```shell
fio --bs=128k --direct=1 --directory=/mnt/test --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=rw --numjobs=12 --ramp_time=10 --runtime=60 --rw=randrw --size=128M --time_based
```

```
rw: (g=0): rw=randrw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=posixaio, iodepth=32

Run status group 0 (all jobs):
READ: bw=15.4GiB/s (16.5GB/s), 15.4GiB/s-15.4GiB/s (16.5GB/s-16.5GB/s), io=923GiB (991GB), run=60003-60003msec
WRITE: bw=15.4GiB/s (16.5GB/s), 15.4GiB/s-15.4GiB/s (16.5GB/s-16.5GB/s), io=923GiB (991GB), run=60003-60003msec
```

Run-2 (sequential read/write, `--rw=rw`)

```shell
fio --bs=128k --direct=1 --directory=/mnt/test --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=randrw --numjobs=12 --ramp_time=10 --runtime=60 --rw=rw --size=128M --time_based
```

```
randrw: (g=0): rw=rw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=posixaio, iodepth=32

Run status group 0 (all jobs):
READ: bw=17.3GiB/s (18.6GB/s), 17.3GiB/s-17.3GiB/s (18.6GB/s-18.6GB/s), io=1038GiB (1114GB), run=60003-60003msec
WRITE: bw=17.3GiB/s (18.6GB/s), 17.3GiB/s-17.3GiB/s (18.6GB/s-18.6GB/s), io=1038GiB (1114GB), run=60003-60003msec
```
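Since both layouts landed in the same ballpark in these quick runs, capacity is the other half of the trade-off. A back-of-the-envelope sketch, assuming "RAID-Z" here means a single RAID-Z1 vdev across the four 256GB drives and "striped mirror" means two 2-way mirrors striped together. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Rough usable capacity for N identical drives, ignoring ZFS metadata,
# RAID-Z padding and slop space (real numbers will be somewhat lower).

# raidz_capacity N SIZE PARITY : (N - PARITY) drives' worth of data
raidz_capacity() { echo $(( ($1 - $3) * $2 )); }

# mirror_capacity N SIZE WIDTH : N/WIDTH mirror vdevs, one drive's worth each
mirror_capacity() { echo $(( $1 / $3 * $2 )); }

raidz_capacity 4 256 1    # assumed RAID-Z1 over 4x 256GB; prints 768
mirror_capacity 4 256 2   # assumed two 2-way mirrors; prints 512
```

RAID-Z1 tolerates one failed drive in the pool, while the striped mirror tolerates one failure per mirror pair. Mirrors are commonly recommended for block storage such as iSCSI because each additional mirror vdev adds IOPS, so the 256GB difference buys random-I/O headroom rather than nothing.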

These are just some quick tests, and the pool could use some tuning depending on how I end up using it. I'm thinking about using it for iSCSI between Proxmox and VMware. I'll also be looking at 2TB NVMe drives, but ones with a better controller IC than the Silicon Power drives, since their performance bounced up and down drastically. Hopefully a better controller will help flatten out the performance.
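To put numbers on how much a drive bounces around, fio can log bandwidth over time instead of only reporting an average. A minimal sketch, assuming fio's `--write_bw_log` and `--log_avg_msec` options and its bandwidth-log format (column 2 is bandwidth in KiB/s, column 3 is the direction: 0 = read, 1 = write). `bw_log_stats` is a hypothetical helper, and the example run drops `--gtod_reduce` because that option disables bandwidth measurement.

```bash
#!/usr/bin/env bash
# Sketch: summarize the spread of a fio bandwidth log.
# Example run (not executed here):
#   fio --name=seqread --directory=/mnt/test --rw=read --bs=128k \
#       --ioengine=posixaio --iodepth=32 --size=4G --numjobs=1 \
#       --runtime=120 --time_based --write_bw_log=sp --log_avg_msec=1000
# which should write one log per job, named like sp_bw.1.log.

# bw_log_stats DIRECTION FILE... : sample count, min, mean and max in KiB/s
bw_log_stats() {
    local dir="$1"; shift
    awk -F', *' -v dir="$dir" '
        $3 == dir {                       # keep only the requested direction
            v = $2; n++; sum += v
            if (n == 1 || v < min) min = v
            if (n == 1 || v > max) max = v
        }
        END {
            if (n == 0) { print "no samples"; exit 1 }
            printf "samples=%d min=%.0f mean=%.0f max=%.0f KiB/s\n", n, min, sum / n, max
        }' "$@"
}

# Usage: bw_log_stats 0 sp_bw.1.log
```

A min/max spread that is wide relative to the mean is the "bouncing" showing up in numbers, which makes it easier to compare a replacement drive against the Silicon Power ones. Keep the test file larger than what the ARC can cache if you want drive behavior rather than RAM behavior.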

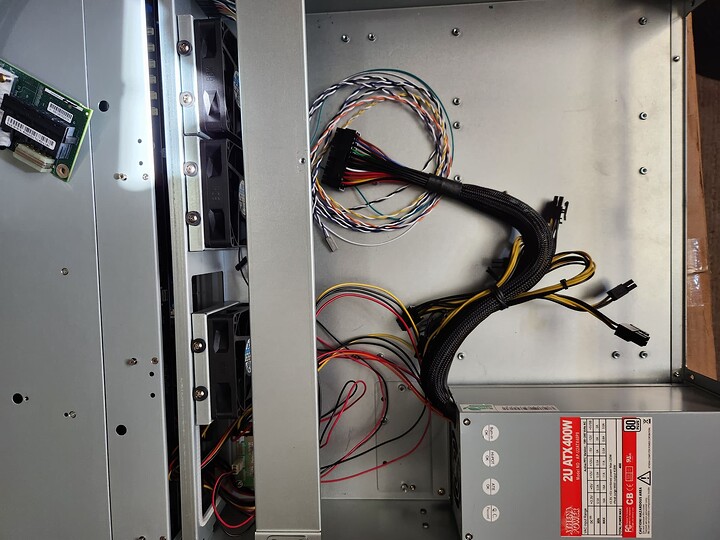

Now with everything in place, it's time to start getting serious and see how far I can push this hardware. The plan is to use every slot and add a JBOD for the 8TB SAS3 drives I have waiting to be used. Those will be my cold storage pool and may also provide storage to the Storj network, in hopes of making a little money to pay for power. I also have an MSL 4048 with an LTO-6 drive that I would like to use for backups. Does anyone use a tape library with SCALE? If so, what software did you use? Bacula?

Anyway, I'll keep updating as I continue to expand. I just hope sharing what works and what doesn't helps someone else design and build their NAS, and saves someone else from going bald like me. Ha ha!