@Atomic_Charge Submitted for the month of doing it.

The goal of my encrypted NAS project is to set up encrypted storage which is automatically unlocked when the system is at home but will become locked if it is removed. This protects against someone physically stealing or removing the server. Obviously for more sensitive information you would keep it encrypted and unlock when needed to protect against someone gaining access to the server. But in my case that would be impractical and unnecessary.

The original plan was to store the keyfiles on my phone, so that the NAS could only be unlocked while it was on my local network and my phone was also connected to that network. But this would have been a problem whenever I needed to reboot the server remotely. In my new plan I will store the keys on a VPS instead. The keys can only be accessed from my local network, but the VPS is not physically in my house, which protects against a scenario in which someone just steals everything. Alternatively, you could get a Raspberry Pi with a solar panel and hide it up a tree, or use any other way of keeping the keyfiles logically close to the server but physically distant from it.

This blog isn't really meant as a guide but I will be including a lot of the steps (as well as links to guides I used at the bottom) which may help if someone wants to do something similar. I'd also like to say that I'm totally not an expert on any of this, and there are bound to be plenty of massive security flaws in this plan. Feel free to let me know what they are :P

The original NAS

I am not beginning this project with empty disks. I already have a NAS system with around 20TB of data on it. But because I am using individual disks (not RAID, ZFS, etc.), I can move the data off a disk, encrypt it and move the data back. It's going to take a while, but it's straightforward enough. If you have a full backup, you could just encrypt everything and then restore the backup, but I don't have one.

Also, if you're using btrfs, then good news! You can encrypt in place. I was able to encrypt my 4TB btrfs backup array in place, although it took about a week. Not only can you encrypt in place, but you only have to take the array offline briefly, so you can keep using it while it's encrypting. You can add that to the pile of reasons why btrfs is pretty cool.

I'll go into more detail on that later.

Not only do I have a ton of data, but it's spread across a lot of disks in two systems. So far I have only encrypted the btrfs backup array and two of the 2TB data disks in one of the servers, but in total I have twelve 2TB disks and four 4TB disks to do. So for now I will write up the general process for setting it all up, and later, once it's all done, I will write about how well (or not) it works.

Encrypting the disks

I will be using dm-crypt with LUKS for the disk encryption (the NAS is running Linux, by the way), as well as ecryptfs and encfs for a couple of directories. The encryption process is pretty straightforward, but I didn't have enough free space to move the files off each disk in order to encrypt it. So I bought a new disk, which will end up as a second parity disk for the second NAS once I'm done encrypting everything.

Only took a week to arrive, so much for express shipping...

Also, here's a quick script I made so I could copy two of the 2TB disks over to the new 4TB disk and leave it running while I was at work or sleeping.

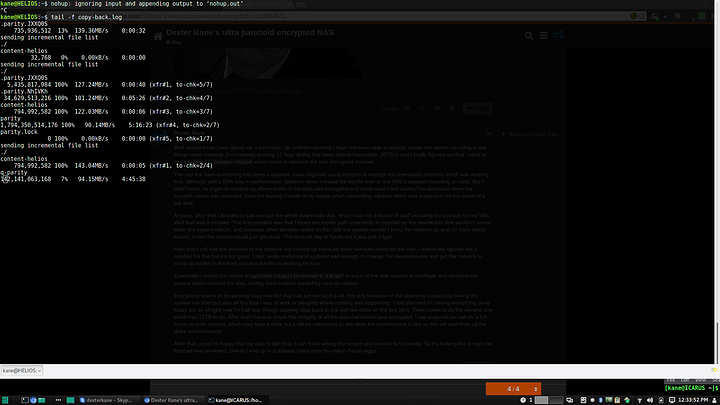

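```bash
#!/bin/bash
# Append stdout and stderr (so cp errors are logged too) to a log file
exec >> /home/kane/copy.log 2>&1

DISK1=/mnt/data1
DISK2=/mnt/data2
DEST=/mnt/hyron/parity2/

# -a copies recursively and preserves permissions, ownership and timestamps; -v logs each file
cp -av "$DISK1" "$DEST"
sync
cp -av "$DISK2" "$DEST"
sync
```

So with some free space cleared up, I can start encrypting the disks. First, though, I need to make some keyfiles. To do that I'll use dd to generate some random 4 KB files.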

```bash
dd if=/dev/urandom of=keyfile.key bs=1024 count=4
```

I made a separate keyfile for each disk I'm going to encrypt. I could use the same key for all of them, but since I'm not typing in a passphrase for each disk, using different keys is just as easy.
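Generating one keyfile per disk is easy to script. A minimal sketch, with a hypothetical function name and directory argument; the `helios.<volume>` naming just mirrors the keyfile names used in crypttab later on. It uses the same dd parameters as above, locks the files down to owner read-only, and refuses to overwrite an existing keyfile, since regenerating a key for a formatted volume would lock you out of it:

```bash
#!/bin/bash
# Hypothetical helper: create one 4 KiB random keyfile per volume name.
# Usage: make_keyfiles DIR NAME...
make_keyfiles() {
    local dir="$1"; shift
    mkdir -p "$dir"
    chmod 700 "$dir"
    local name key
    for name in "$@"; do
        key="$dir/$name.key"
        # Never overwrite an existing keyfile: its volume could no longer be opened
        if [ -e "$key" ]; then
            echo "refusing to overwrite $key" >&2
            return 1
        fi
        # 4 x 1024 random bytes, same as the dd command above (status=none is GNU dd)
        dd if=/dev/urandom of="$key" bs=1024 count=4 status=none
        chmod 400 "$key"
    done
}
```

For example, `make_keyfiles ./keys helios.data1 helios.data2` would create `./keys/helios.data1.key` and `./keys/helios.data2.key`.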

Now that I have the keyfiles and some empty disks I can create the encrypted volumes.

```bash
cryptsetup -v --key-file=/path/to/keyfile luksFormat /dev/sda
```

I'm just using the default settings, but you can choose a different cipher or key length if you want. Also make sure you're running this against the right disk: luksFormat overwrites it, so this is a good way to lose your data if you make a mistake.

Then open the volume

```bash
cryptsetup --key-file=/path/to/keyfile luksOpen /dev/sda data1
```

data1 here is the name of the volume, so the opened volume will be available at /dev/mapper/data1. You can also use the disk's UUID path (/dev/disk/by-uuid/<uuid>) instead of /dev/sda, which stays the same even if the disks get reordered.
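To find that path, a minimal sketch (the function name is hypothetical; it assumes util-linux's `blkid` is installed) that reads the UUID from the LUKS header and prints the stable by-uuid path, the same form used in /etc/crypttab below. `cryptsetup luksUUID /dev/sda` prints the same UUID if you prefer to stay within cryptsetup.

```bash
#!/bin/bash
# Hypothetical helper: print the stable /dev/disk/by-uuid/ path of a LUKS device.
# blkid reads the UUID from the LUKS header, so run this after luksFormat.
luks_uuid_path() {
    local dev="$1" uuid
    uuid=$(blkid -s UUID -o value "$dev") || return 1
    [ -n "$uuid" ] || return 1
    printf '/dev/disk/by-uuid/%s\n' "$uuid"
}
```

Usage: `cryptsetup --key-file=/path/to/keyfile luksOpen "$(luks_uuid_path /dev/sda)" data1`.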

Before formatting the new volume, I'll zero it with dd. This prevents an attacker from recovering old files from the disk that haven't been overwritten by the new encrypted volume. It also prevents someone from seeing how much encrypted data there is. Because the zeros are written through the encrypted mapping (/dev/mapper/data1), what actually lands on the physical disk looks like random data.

```bash
dd if=/dev/zero of=/dev/mapper/data1 bs=128M
```

This was the output once the command completed (this takes a while):

```
3202+6477894 records in
3202+6477893 records out
2000396836864 bytes (2.0 TB) copied, 16647.5 s, 120 MB/s
```

I'm pretty happy with that speed. It's a small loss in performance, but still fast enough to saturate a gigabit link. I'm not sure whether I will see similar results with actual data transfers, however.
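As a quick check of the numbers in that output, here is the arithmetic as a small sketch (function names are made up for illustration). It assumes MB means 10^6 bytes, which is what GNU dd uses when it prints "2.0 TB" and "MB/s":

```bash
#!/bin/bash
# Throughput from dd's totals, in MB/s with 1 MB = 10^6 bytes (GNU dd's units)
mb_per_sec() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

# Raw gigabit Ethernet line rate in the same units: 10^9 bits/s / 8 bits per byte
gbe_mb_per_sec() {
    awk 'BEGIN { printf "%.1f\n", 1000000000 / 8 / 1000000 }'
}

mb_per_sec 2000396836864 16647.5   # prints 120.2
gbe_mb_per_sec                     # prints 125.0
```

So the disk sustained about 120 MB/s against a raw gigabit line rate of 125 MB/s; TCP and SMB overhead push the usable network throughput below that raw figure, which is why the disk can keep a gigabit link busy.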

Once that was done, I formatted the volumes with ext4, e.g. `mkfs.ext4 /dev/mapper/data1`.

Now I can mount that and start using the encrypted disk.

For btrfs the process is similar, except I didn't need to free up any disk space first. You do have to unmount the btrfs array once but only for a short time in order to encrypt one of the disks, after that you can mount it again and keep using it while it rebuilds.

```bash
umount /mnt/backups
```

This unmounted the backups btrfs volume. Now I can encrypt one of the disks (you have to do this one disk at a time, although if you're using RAID6 you can probably do two at a time):

```bash
cryptsetup -v --key-file=/path/to/keyfile luksFormat /dev/sdk
cryptsetup --key-file=/path/to/keyfile luksOpen /dev/sdk backups1
```

At this point you can mount the btrfs array again in degraded mode:

```bash
mount -o degraded /mnt/backups
```

Before adding the encrypted disk to the btrfs array I'll zero it, after that I'll add it to the array.

```bash
dd if=/dev/zero of=/dev/mapper/backups1 bs=128M
```

```bash
btrfs device add /dev/mapper/backups1 /mnt/backups
```

This adds the encrypted /dev/mapper/backups1 volume to the /mnt/backups btrfs array. Now I'll delete the missing (original, unencrypted) disk, which makes btrfs rebuild its data onto the new encrypted volume. This takes a long time, but the array is still usable while it's happening.

```bash
btrfs device delete missing /mnt/backups
```

Once that was done, I repeated the process for the second disk. The same steps work equally well for an array with more disks, one disk at a time.
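The per-disk steps above can be collected into one function. This is a hypothetical wrapper, not the script I actually ran; it assumes the mount point has an /etc/fstab entry (so `mount -o degraded /mnt/backups` works) and that the array has enough redundancy to run with one member missing. It is destructive: luksFormat wipes the device you pass in.

```bash
#!/bin/bash
# Hypothetical helper: re-encrypt ONE member of a btrfs array in place,
# following the steps above. DESTRUCTIVE: luksFormat wipes $dev.
encrypt_btrfs_member() {
    local dev="$1" name="$2" keyfile="$3" mnt="$4"
    umount "$mnt" || return 1
    # luksFormat asks for an uppercase YES before overwriting the disk
    cryptsetup -v --key-file="$keyfile" luksFormat "$dev" || return 1
    cryptsetup --key-file="$keyfile" luksOpen "$dev" "$name" || return 1
    mount -o degraded "$mnt" || return 1
    # Zero through the mapping so the raw disk ends up holding ciphertext.
    # dd exits non-zero when it hits the end of the device, so its status is ignored.
    dd if=/dev/zero of="/dev/mapper/$name" bs=128M
    btrfs device add "/dev/mapper/$name" "$mnt" || return 1
    # Rebuild the missing (old, unencrypted) member's data onto the new one; slow
    btrfs device delete missing "$mnt"
}
```

Usage would be `encrypt_btrfs_member /dev/sdk backups1 /path/to/keyfile /mnt/backups`, then the same for the next disk once the delete has finished.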

Automatic Mounting

Once the encrypted disks are configured, I need to set them to mount automatically on boot. To do this I first need to add the disks and keyfiles to /etc/crypttab. This is what mine currently looks like; I will add more entries as I encrypt more disks:

```
data1 /dev/disk/by-uuid/853e9bdf-bf4a-481e-b873-ba6cd39d7011 /home/kane/keys/helios.data1.key luks
data2 /dev/disk/by-uuid/4b2250bd-d606-4530-babd-e9494362bfd1 /home/kane/keys/helios.data2.key luks
backups1 /dev/disk/by-uuid/209e9133-67fa-4023-8bfa-fc1ce11ac4e3 /home/kane/keys/helios.backups1.key luks
backups2 /dev/disk/by-uuid/197ec56d-af4b-4b66-88e3-dcdfa258c4ab /home/kane/keys/helios.backups2.key luks
```

Then I added mount options to /etc/fstab for the ext4 and btrfs filesystems.

```
/dev/mapper/data1 /mnt/data1 ext4 defaults,errors=remount-ro 0 2
/dev/mapper/data2 /mnt/data2 ext4 defaults,errors=remount-ro 0 2
UUID=eeb06b1d-4009-4442-a5e5-7531ca3196ad /mnt/backups btrfs defaults,nobootwait,nofail,compress=lzo 0 2
```

Also in /etc/fstab, I have configured the samba share from the VPS, where the keyfiles are stored, to mount automatically. Because the keyfiles are required to open the LUKS volumes, they need to be available before crypttab is processed. However, crypttab is processed before fstab, so I needed to edit another file, /etc/default/cryptdisks, and add this line:

```
CRYPTDISKS_MOUNT="/home/kane/keys"
```

This is the mount point of the VPS samba share from /etc/fstab, so the share should now be mounted before crypttab is processed and the encrypted volumes are opened. I haven't actually tested this, however, as I don't want to reboot the server while moving files around. If it turns out not to work, I will update this.
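Since that boot ordering is still untested, a quick way to confirm after a reboot that every keyfile listed in /etc/crypttab was actually reachable (i.e. the VPS share really was mounted in time) might help. A minimal sketch with a hypothetical function name; it only reads the crypttab file and doesn't touch the volumes:

```bash
#!/bin/bash
# Hypothetical check: report crypttab entries whose keyfile is missing or empty,
# e.g. because the VPS samba share wasn't mounted in time.
# crypttab fields: <name> <device> <keyfile> <options>; "none" or "-" means no keyfile.
check_crypttab_keys() {
    local tab="${1:-/etc/crypttab}" name dev key opts missing=0
    while read -r name dev key opts || [ -n "$name" ]; do
        case "$name" in ''|\#*) continue ;; esac   # blank lines and comments
        case "$key" in ''|none|-) continue ;; esac # no keyfile configured
        if [ ! -r "$key" ] || [ ! -s "$key" ]; then
            echo "$name: keyfile $key missing or unreadable"
            missing=$((missing + 1))
        fi
    done < "$tab"
    [ "$missing" -eq 0 ]
}
```

Running `check_crypttab_keys` with no argument checks /etc/crypttab and prints one line per problem entry; the exit status is non-zero if anything is missing.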

At this point everything is essentially set up the way I want. The server will unlock and mount the encrypted disks automatically on boot without needing a password, but will fail to do so if it is removed from the network. There's still a little more work to do, though.

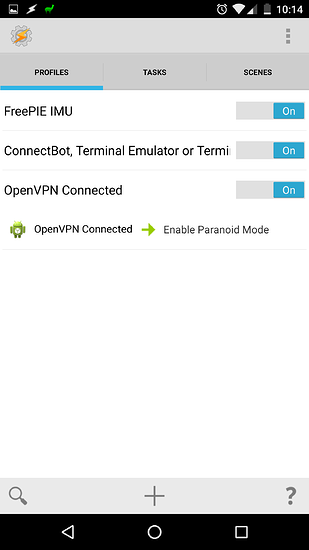

Paranoid mode

In paranoid mode, all systems (both NAS servers and the VPS) will periodically check that they are in contact with each other and lock down if they aren't. I may even add other triggers that cause the NAS to lock the disks, such as the network being disconnected. Hell, I could even wire up a mercury switch that locks the disks if the server is moved. There is, of course, a point of diminishing returns here: if an attacker has access to the running server, I can no longer rely on the server to lock itself down. An attacker who knows what they're doing and has access to the disks in unencrypted form will be able to prevent them from locking, and there's little that can be done about that.

Paranoid mode is a countermeasure against an attacker who causes the disks to lock and then tries to reattach the server to the network in order to get it to unlock again.

I have tested my paranoid mode scripts on the VPS but not on the NAS yet as I'm still working on the disks and will need to wait until everything's done before I can reboot or unmount anything.

This is the paranoid mode script for the NAS

```bash
#!/bin/bash
# Paranoid mode check for the NAS (run from cron).
# If paranoid mode is disabled ("0" in the flag file), exit without doing anything.
PARANOID=$(cat /home/kane/.paranoid 2>/dev/null)
if [ "$PARANOID" = "0" ]; then
    exit 0
fi

# In paranoid mode the disks are unmounted and closed if the keyfiles are unreachable.
# -s: the keyfile exists and is non-empty (i.e. the VPS share is still serving it)
if [ ! -s /home/kane/keys/helios.data1.key ]; then
    echo "Key file cannot be accessed, unmounting encrypted disks"
    /bin/bash /home/kane/scripts/lock-disks.sh
fi
```

This script will be run by cron every minute or every five minutes. To enable or disable paranoid mode, I echo 1 or 0 to a file which the script checks. Eventually I will streamline the process and have a shortcut on my phone which can enable or disable paranoid mode across all three machines. I may also add a ping test to the script in addition to checking that the keyfiles still exist.
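A small sketch of the toggle side, using a hypothetical function name. It only ever writes "0" or "1", so the check in the script always sees a clean value, and it writes to a temporary file and renames it, so a cron run can't read a half-written flag:

```bash
#!/bin/bash
# Hypothetical helper: set paranoid mode on (1) or off (0).
# Usage: set_paranoid 0|1 [flagfile]   (flag file defaults to ~/.paranoid)
set_paranoid() {
    local state="$1" flag="${2:-$HOME/.paranoid}"
    case "$state" in
        0|1) ;;
        *) echo "usage: set_paranoid 0|1 [flagfile]" >&2; return 2 ;;
    esac
    # Write then rename (atomic within one directory)
    printf '%s\n' "$state" > "$flag.tmp" && mv "$flag.tmp" "$flag"
}
```

For the schedule, a root crontab line along the lines of `*/5 * * * * /bin/bash /home/kane/scripts/paranoid.sh` would run the check every five minutes (the script filename here is hypothetical). Root is needed because the lock step unmounts filesystems and closes LUKS mappings.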

The lock-disks script will just unmount and close all the encrypted disks. Depending on how reliable this is, I may just have the system shut down instead.
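The lock-disks script itself isn't shown here, so this is only a guess at its shape: a hypothetical function that takes `mapper-name:mountpoint` pairs, unmounts each filesystem if it's mounted, then closes the LUKS mapping. It keeps going if one volume fails and reports failure at the end, which is where falling back to a shutdown could hook in.

```bash
#!/bin/bash
# Hypothetical sketch of lock-disks.sh: unmount and close each encrypted volume.
# Each argument is "mapper-name:mountpoint"; leave the mountpoint off
# ("backups2" or "backups2:") for a mapping whose filesystem is already unmounted.
lock_disks() {
    local entry name mnt failed=0
    for entry in "$@"; do
        name="${entry%%:*}"
        case "$entry" in
            *:*) mnt="${entry#*:}" ;;
            *)   mnt="" ;;
        esac
        # Unmount first: cryptsetup refuses to close a mapping that is still in use
        if [ -n "$mnt" ] && mountpoint -q "$mnt"; then
            umount "$mnt" || failed=1
        fi
        cryptsetup luksClose "$name" || failed=1
    done
    return "$failed"
}
```

With the volumes in the crypttab above, that would be `lock_disks data1:/mnt/data1 data2:/mnt/data2 backups1:/mnt/backups backups2:`. The btrfs array is unmounted once, then both of its mappings are closed.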

On the VPS, paranoid mode will cause the keyfiles to be encrypted. Or it would, if the VPS weren't lacking the kernel modules needed to run an encrypted filesystem like ecryptfs. So instead I have an encrypted tar file containing the keys. This script unpacks that tar file:

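```bash
#!/bin/bash
# Unlock the keys on the VPS; stop at the first failure (e.g. a wrong password)
set -e

# Decrypt key archive (openssl prompts for the password).
# Without -pbkdf2 openssl uses its legacy key derivation; switching to -pbkdf2
# means re-encrypting the archive with the same option.
openssl aes-256-cbc -d -a -in /home/administrator/keys/keys.tar.aes -out /home/administrator/keys/keys.tar

# Extract key files from tar
tar -xvf /home/administrator/keys/keys.tar -C /home/administrator/

# Securely erase the plaintext tar file
shred -u /home/administrator/keys/keys.tar

# Restart samba server so the share is served again
service smbd restart
```

The tar file is decrypted using a password, so it has to be unlocked manually by me.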
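The post doesn't show how keys.tar.aes is created, so here is one way it could be built to match the unlock script. This is a hypothetical sketch: it stores paths as `keys/<name>.key` so that extracting with `-C /home/administrator/` puts them back in place, and it uses the same cipher and base64 options as the decrypt command. Extra arguments are passed straight to openssl (e.g. `-pass` for scripted testing); without them openssl prompts for the password.

```bash
#!/bin/bash
# Hypothetical counterpart to the unlock script: rebuild keys.tar.aes from the
# plaintext keyfiles in $base/keys, storing paths as keys/<name>.key.
pack_keys() {
    local base="$1"; shift
    local out="$base/keys/keys.tar.aes"
    # Refuse to encrypt an empty archive if the keyfiles are already gone
    compgen -G "$base/keys/*.key" > /dev/null || { echo "no keyfiles in $base/keys" >&2; return 1; }
    # No -pbkdf2 here, to match the decrypt command in the unlock script;
    # if you add it (OpenSSL 1.1.1+), add it to both commands.
    ( cd "$base" && tar -cf - keys/*.key ) |
        openssl aes-256-cbc -a -salt -out "$out.tmp" "$@" &&
        mv "$out.tmp" "$out"
}
```

Run as `pack_keys /home/administrator`, it asks for the password twice and only replaces the old archive once encryption has succeeded.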

This script will lock the keys by shredding (secure erase) the plaintext files and leaving just the encrypted tar file.

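```bash
#!/bin/bash
# Stop samba server so nothing can fetch the keys
service smbd stop

# Securely erase the plaintext key files, leaving only keys.tar.aes.
# shred overwrites in place; on a VPS's virtual disk (snapshots, thin
# provisioning) old copies may survive anyway.
shred -u /home/administrator/keys/*.key
```

It also stops the samba server, preventing any access to the key share on the VPS.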

This is the paranoid mode script which is run by cron. It checks that it can ping both HELIOS and HYRON (the two NAS servers) and locks the keys if it can't.

```bash
#!/bin/bash
# Paranoid mode check for the VPS (run from cron).
# If paranoid mode is disabled ("0" in the flag file), exit without doing anything.
PARANOID=$(cat /home/administrator/.paranoid 2>/dev/null)
if [ "$PARANOID" = "0" ]; then
    exit 0
fi

# In paranoid mode the keys are locked if either HELIOS or HYRON is unreachable.
HELIOS_IP=10.1.1.20
HYRON_IP=10.1.1.20   # FIXME: this is HELIOS's address; HYRON needs its own IP here

# Succeeds if the host answers a single ping
reachable() {
    ping -c 1 "$1" &> /dev/null
}

HELIOS=1
HYRON=1
if ! reachable "$HELIOS_IP"; then
    HELIOS=0
    echo "HELIOS unreachable"
fi
if ! reachable "$HYRON_IP"; then
    HYRON=0
    echo "HYRON unreachable"
fi

# If both servers are reachable then exit, else lock the keys
if [ $((HELIOS + HYRON)) -ne 2 ]; then
    echo "Servers unreachable, locking keys"
    /bin/bash /home/administrator/scripts/lock-keys.sh
fi
```

The end, for now

So this is where I'm up to. It will probably take most of the week to finish encrypting the disks, after which I can test some more things and modify my scripts. So far things look promising. I will update once I have something to update.

Please feel free to ask me any questions or tell me how horrible an idea this is and how terribly implemented it is ;)

Links

http://www.cyberciti.biz/hardware/howto-linux-hard-disk-encryption-with-luks-cryptsetup-command/

https://cowboyprogrammer.org/encrypt-a-btrfs-raid5-array-in-place/