Hi,

I’m creating this thread to warn other users and to vent my own frustration about the abysmal software RAID performance of AMD’s X570 motherboards with SATA SSDs.

While I understand that SATA/AHCI isn’t getting much love anymore and that NVMe is the future, I still value SATA highly because it is very mature and reliable at this point.

I made these observations while setting up a new daily-driver system that was supposed to run its operating system (Windows 10) on a SATA RAID1 array. After many frustrating hours, SSD erases and operating system reinstallations, I’m now also trying RAID10, which performs similarly badly.

Simplified overview of my expectations:

-

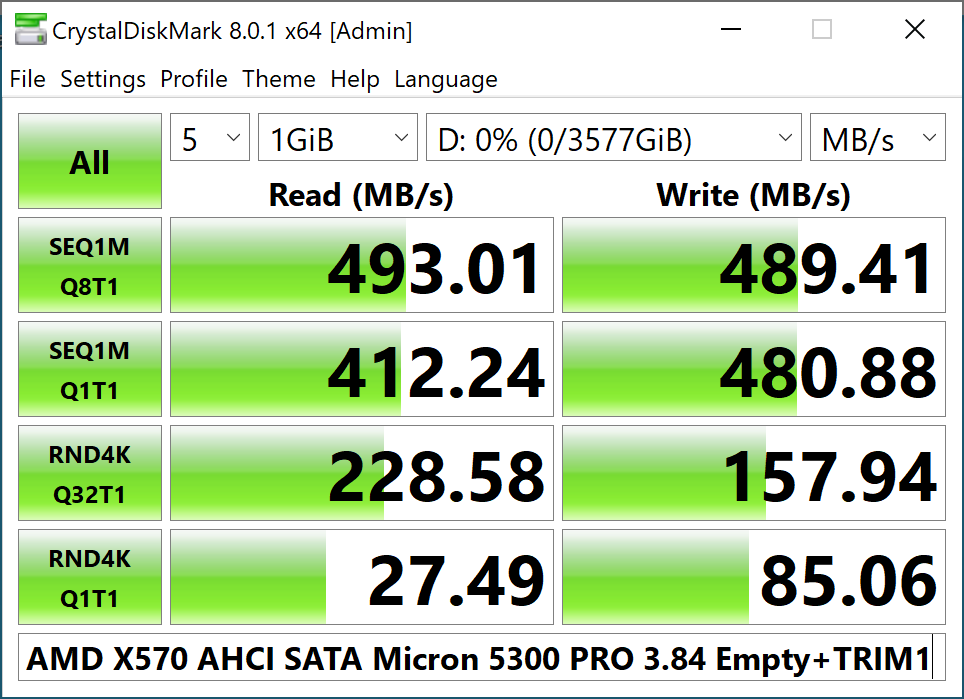

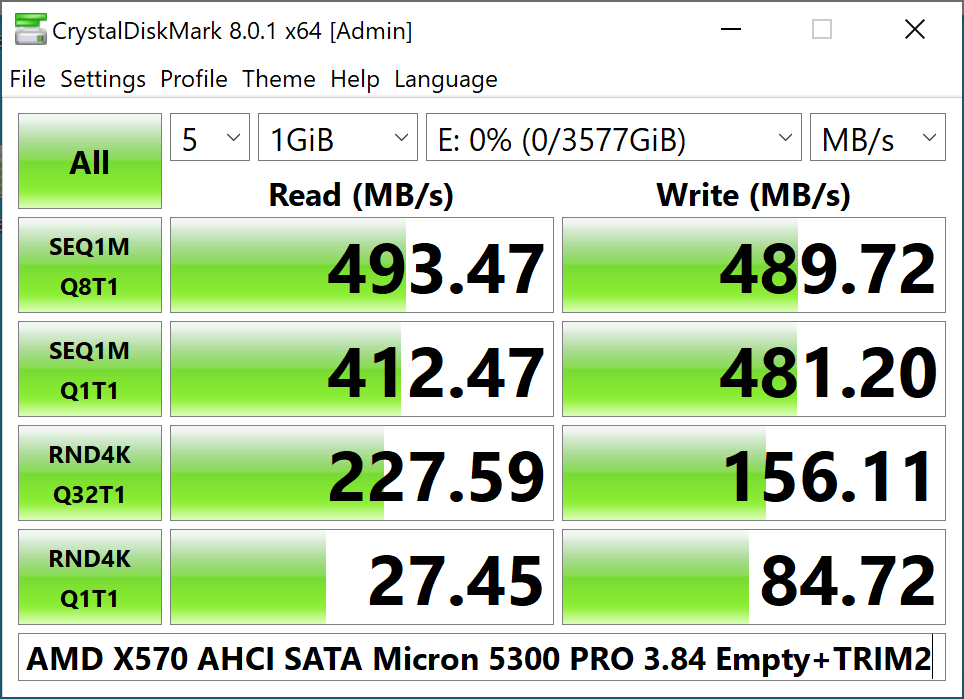

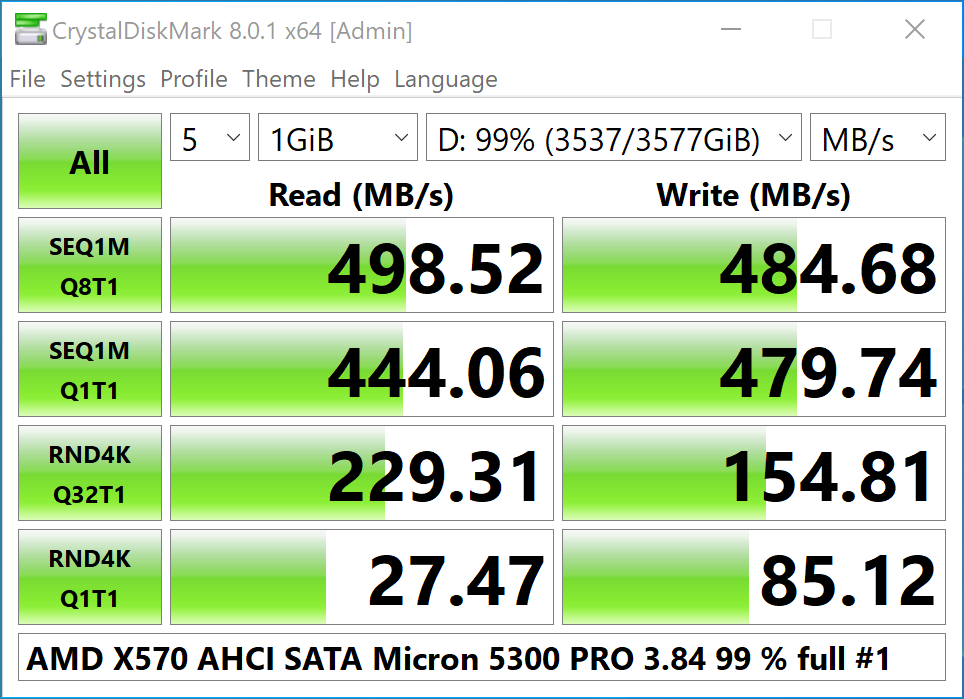

RAID1 (2 SSDs): 2n read performance, 1n write performance (only looking at sequential speeds);

-

RAID10 (4 SSDs): 4n read performance, 2n write performance (only looking at sequential speeds);
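The n-notation above can be made explicit with a minimal Python sketch of the idealized sequential scaling. It assumes a perfect implementation that reads from every member drive in parallel, writes all mirror copies in parallel, and adds zero controller or driver overhead. Real arrays rarely hit these numbers exactly. The function name `ideal_sequential` is hypothetical.

```python
def ideal_sequential(level: str, drives: int, n: float) -> tuple:
    """Return (read, write) sequential throughput of an idealized array.

    n is the sequential throughput of one member SSD (any unit).
    Assumption: zero RAID overhead, reads spread over all drives.
    """
    if level == "RAID1":
        if drives < 2:
            raise ValueError("RAID1 needs at least 2 drives")
        # Every drive holds a full copy: reads can be split across all
        # drives, but every write has to land on every drive.
        return drives * n, n
    if level == "RAID10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID10 needs an even number of drives, at least 4")
        # Data is striped over drives // 2 mirror pairs: reads can use all
        # drives, writes scale with the number of pairs.
        return drives * n, (drives // 2) * n
    raise ValueError(f"unsupported level: {level}")


print(ideal_sequential("RAID1", 2, 1.0))   # (2.0, 1.0)
print(ideal_sequential("RAID10", 4, 1.0))  # (4.0, 2.0)
```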

Reality with AMD’s software RAID1 and 10:

-

RAID1 (2 SSDs): <2n read performance, 0.5n write performance (only looking at sequential speeds);

-

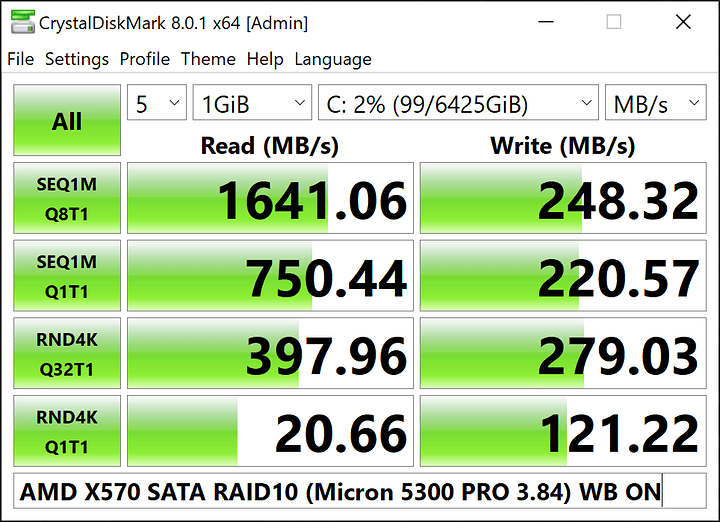

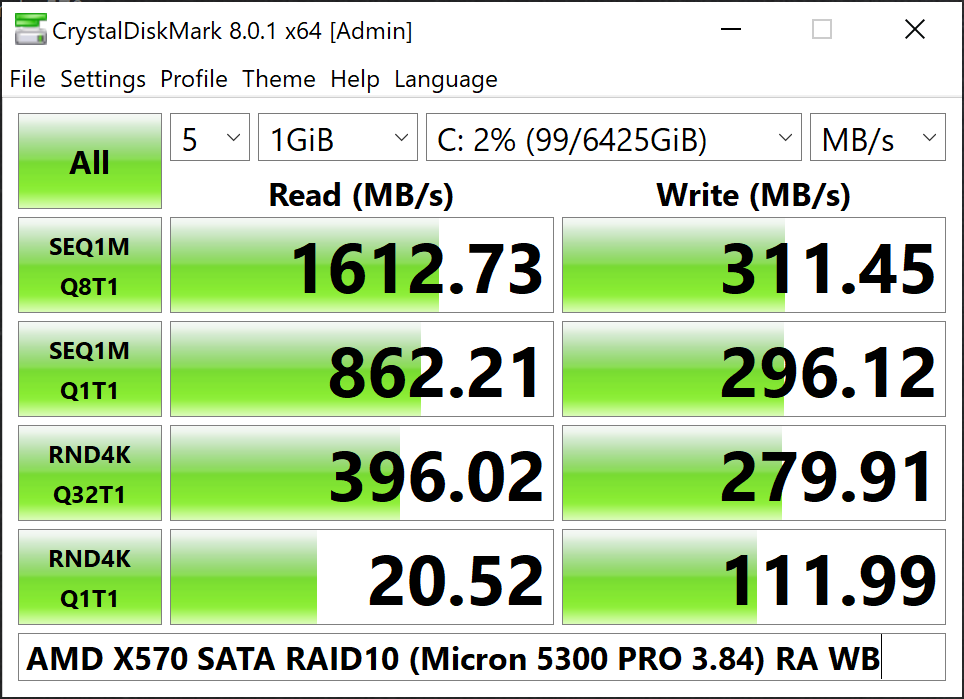

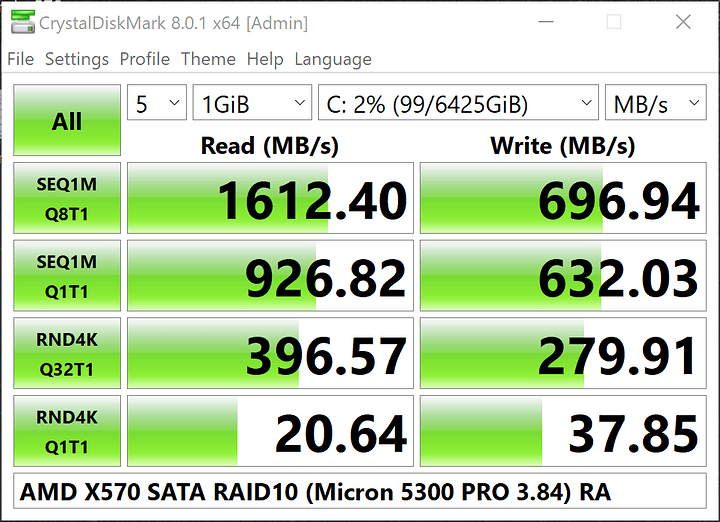

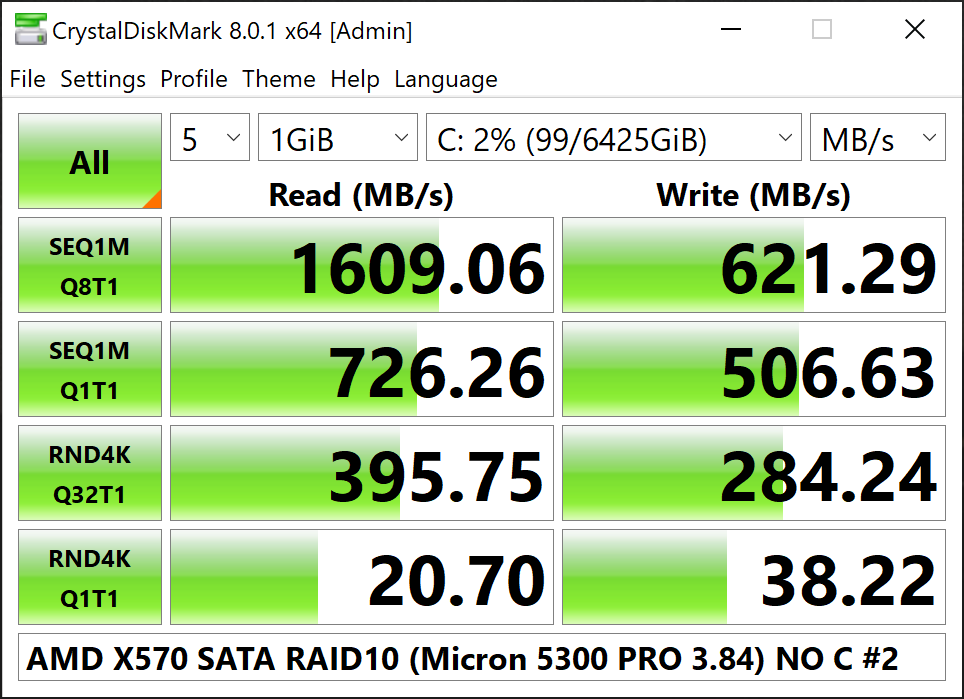

RAID10 (4 SSDs): <4n read performance, 0.5n write performance (only looking at sequential speeds);

The performance is so bad that when I copy data to the RAID array over the motherboard’s 2.5 GbE NIC, application responsiveness tanks (reminder: the operating system is installed on this array). The copy runs at only a little over 200 MB/s, yet it drives the RAID array to 100 % load according to Task Manager and HWiNFO.

This is absurd.
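For scale, here is a back-of-the-envelope check of the links involved, written as a small Python sketch. It uses nominal line rates only. 2.5 GbE carries 2.5 Gbit/s before protocol overhead. SATA 6 Gb/s uses 8b/10b encoding, so 10 bits on the wire carry one data byte. Real-world payload rates are somewhat lower, and the helper names are hypothetical.

```python
def ethernet_mb_per_s(gbit_per_s: float) -> float:
    # 8 bits per byte; ignores Ethernet/IP/SMB protocol overhead.
    return gbit_per_s * 1000 / 8


def sata_mb_per_s(gbit_per_s: float) -> float:
    # 8b/10b encoding: 10 line bits per data byte.
    return gbit_per_s * 1000 / 10


nic = ethernet_mb_per_s(2.5)  # nominal 2.5 GbE ceiling in MB/s
sata = sata_mb_per_s(6.0)     # nominal single SATA 6 Gb/s link in MB/s
observed = 200.0              # approximate copy speed from the post, MB/s

print(f"2.5 GbE ceiling: {nic} MB/s, one SATA link: {sata} MB/s")
print(f"copy uses {observed / sata:.0%} of a single SATA link")
```

Under these assumptions, the 200 MB/s copy uses roughly a third of one drive’s link. The array, however, has 2 or 4 such links.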

These issues seem to be caused by bugs, but I haven’t been able to find a repeatable pattern yet.

When I switch between the available array cache settings in AMD’s RAID management software, I sometimes see write speeds reach the expected 1n/2n, but only temporarily.
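One way to pin down the "only temporarily" behavior is to log write throughput chunk by chunk instead of relying on a single benchmark average. The Python sketch below does this under the following assumptions:

- It writes incompressible random data to a scratch file on the array.
- It calls `os.fsync` after each chunk so that the OS file cache doesn’t hide the device speed. A driver-level write-back cache may still absorb some of the data.
- It returns the speed of each chunk in MB/s.

The function name, the chunk sizes and any path you pass in are hypothetical. The defaults write about 10 GiB, so make sure there is enough free space.

```python
import os
import time


def log_write_speed(path: str, chunk_mib: int = 256, chunks: int = 40) -> list:
    """Write `chunks` chunks of `chunk_mib` MiB to `path`; return MB/s per chunk."""
    # Random data, so compression anywhere in the stack can't inflate results.
    payload = os.urandom(chunk_mib * 1024 * 1024)
    speeds = []
    with open(path, "wb") as f:
        for _ in range(chunks):
            start = time.perf_counter()
            f.write(payload)
            f.flush()             # push Python's buffer to the OS
            os.fsync(f.fileno())  # ask the OS to flush it to the device
            elapsed = max(time.perf_counter() - start, 1e-9)
            speeds.append(len(payload) / elapsed / 1e6)  # decimal MB/s
    return speeds
```

Call it with a path on the RAID volume, for example `log_write_speed(r"D:\raid_write_test.bin")`, and print the list. A sustained drop after the first few chunks would show up as a step in the values.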

I have absoluetely no idea what the cause for the bottleneck is

-

Tried Windows 10 20H2 and 21H1 with all Windows Updates, device firmwares and drivers up-to-date;

-

Checked that Windows is not doing something in the background (also physically disconnected ethernet adapter connections to be 99 %-sure);

-

Erased all SSDs and tried again;

-

Created the RAID arrays with completely default settings, with changed stripe sizes (AMD’s documentation warns against this), with and without initialization;

-

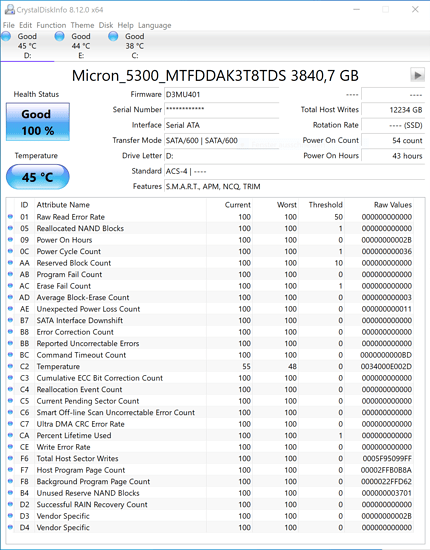

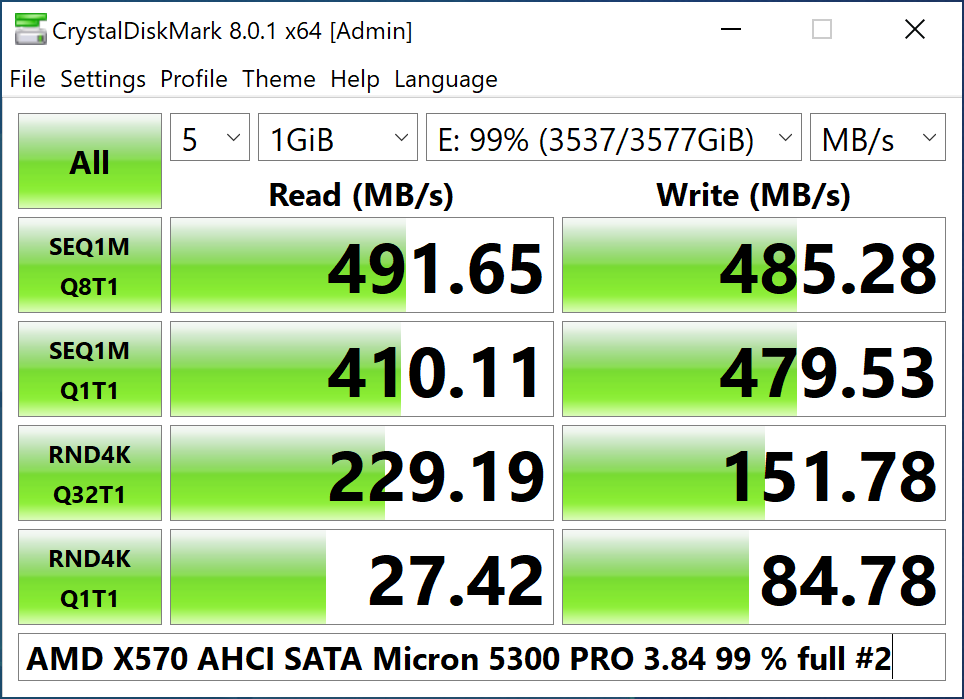

I’m using Micron 5300 PRO 3.84 SSDs (tested 6 units), while not the fastest SATA SSDs on the planet they are enterprise-class ones that can sustain their performance even when filling them up and more importantly have complete powerloss protection to ensure a RAID array’s data integrity in PSU/motherboard failure scenarios;

-

All SSDs get a clean SATA 6 Gb/s connection to the motherboard, all C7 SMART values are still completely unchanged at their factory-new values implying there are no electrical communication issues between the SSDs and the motherboard’s SATA controller;

-

Tested all 8 of the motherboard’s X570 chipset SATA ports;
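For anyone who wants to repeat the C7 check, here is a minimal Python sketch that extracts one attribute from the text output of smartmontools’ `smartctl -A <device>`. It assumes the standard 10-column attribute table. It also assumes the drives are visible to smartctl at all, which may not be the case for drives hidden behind the RAID driver. The function name is hypothetical.

```python
from typing import Optional


def smart_raw_value(smartctl_output: str, attr_id: int = 0xC7) -> Optional[int]:
    """Return the raw value of SMART attribute `attr_id` from `smartctl -A` text.

    0xC7 == 199, the UDMA CRC error count on most SATA drives.
    """
    for line in smartctl_output.splitlines():
        # Attribute rows have 10 columns: ID, NAME, FLAG, VALUE, WORST,
        # THRESH, TYPE, UPDATED, WHEN_FAILED, RAW_VALUE.
        fields = line.split(maxsplit=9)
        if len(fields) == 10 and fields[0].isdigit() and int(fields[0]) == attr_id:
            # RAW_VALUE may carry extra annotations, e.g. "34 (Min/Max 20/45)".
            return int(fields[9].split()[0])
    return None  # attribute not reported
```

A value that increases between runs would point to cabling or signal problems. A value that stays unchanged, as in my case, makes them unlikely.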

Observations whose impact on the situation I’m not sure about:

- Even when all member drives of an array are set to “SSD”, the AMD RAID drivers present the RAID array to Windows as a mechanical HDD (fixed with the new driver package released on May 25th, 2021);
- TRIM is not supported by AMD’s RAID drivers, which would be pretty bad with consumer-grade NAND SSDs.

System hardware configuration:

- ASRock X570 Taichi Razer Edition, BIOS P1.50
- AMD Ryzen 9 5950X
- 128 GiB ECC RAM
- PCIe x16 #1 (CPU): GPU, PCIe 4.0 x8
- PCIe x16 #2 (CPU): Ethernet adapter, PCIe 3.0 x8
- PCIe x16 #3 (X570): Thunderbolt 3 adapter, PCIe 3.0 x4

Appendix:

RA: Read-ahead cache

WB: Write-back cache