As per my posts above - it looks to me like you’re seeing read-modify-write cycles when trying to write, which is tanking performance.

This can happen with spinning disks if you make incorrect choices regarding sector/stripe/filesystem block sizes, but the correct numbers are much better known in traditional hard drive land.
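On the spinning-disk side, one classic wrong choice is a partition that doesn’t start on a physical-sector or stripe boundary, so every filesystem block straddles two underlying units. Here is a minimal Python sketch of that check. It assumes 512-byte logical sectors, and `partition_is_aligned` is a hypothetical helper. `alignment` should be the largest of the physical sector, stripe and page sizes involved. These are normally powers of two, so aligning to the largest aligns to all of them.

```python
def partition_is_aligned(start_sector: int, logical_sector: int = 512,
                         alignment: int = 4096) -> bool:
    """True if the partition's starting byte offset is a multiple of `alignment`.

    Hypothetical helper: `alignment` is assumed to be the largest of the
    physical sector, RAID stripe and SSD page sizes in play (all powers of two).
    """
    start_bytes = start_sector * logical_sector
    return start_bytes % alignment == 0


if __name__ == "__main__":
    # Legacy DOS-style partitions started at sector 63: 63 * 512 = 32256 bytes.
    print(partition_is_aligned(63))    # False
    # Modern tools usually start at sector 2048 (1 MiB), which is aligned.
    print(partition_is_aligned(2048))  # True
    # A 1 MiB start is also aligned to a hypothetical 128 KiB stripe.
    print(partition_is_aligned(2048, alignment=128 * 1024))  # True
```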

SSDs are still new, the firmware does lots of stuff in the background, and it’s very much an edge case.

There’s a reason enterprise flash arrays use their own custom OS/firmware/etc.

Also this.

You’re very much in “edge case” land and the knowledge isn’t commonly available for what you’re trying to do.

I do, however, have enough experience with enterprise storage to tell you that it isn’t as simple as you’re expecting, and the symptoms you describe (poor writes, OK reads) are consistent with the read-modify-write cycles I describe above.

Your hardware is probably working just fine and isn’t the cause. It’s just being told to do a lot more work because the block sizes used by the multiple layers of abstraction don’t line up, and that mismatch is what causes the read-modify-write.
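To make “not lining up” concrete, here’s a rough Python model. The names and sizes are hypothetical, not taken from your setup. For a single write, it counts how many units at each layer are only partly covered and would therefore need read-modify-write. It treats every layer as doing in-place read-modify-write, which is a simplification. Real SSD firmware remaps and buffers writes internally, so the SSD row is a worst-case guess rather than what your controller literally does.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    unit: int  # bytes this layer reads and writes as one indivisible unit


def partial_units(offset: int, size: int, unit: int) -> int:
    """Count units that the write [offset, offset + size) covers only partly.

    In this model each partly covered unit must be read, modified and written back.
    """
    first = offset // unit
    last = (offset + size - 1) // unit
    head_partial = offset % unit != 0
    tail_partial = (offset + size) % unit != 0
    if first == last:  # the whole write falls inside a single unit
        return 1 if (head_partial or tail_partial) else 0
    return int(head_partial) + int(tail_partial)


def rmw_report(offset: int, size: int, stack: list[Layer]) -> dict[str, int]:
    """Partly covered units per layer for one write (hypothetical helper)."""
    return {layer.name: partial_units(offset, size, layer.unit) for layer in stack}


# Hypothetical sizes for illustration only; check your own pool/array/drive.
EXAMPLE_STACK = [
    Layer("filesystem block", 4 * 1024),
    Layer("parity RAID full stripe", 128 * 1024),
    Layer("SSD NAND page", 16 * 1024),
]

if __name__ == "__main__":
    KiB, MiB = 1024, 1024 * 1024
    print(rmw_report(0, 4 * KiB, EXAMPLE_STACK))    # RAID and SSD layers partial
    print(rmw_report(0, 1 * MiB, EXAMPLE_STACK))    # all zero
    print(rmw_report(512, 1 * MiB, EXAMPLE_STACK))  # 2 partial units at every layer
```

A 4k write is a whole filesystem block but only part of a stripe and part of a NAND page. An aligned 1M write covers every unit fully, and the same 1M write shifted by 512 bytes goes partial at every layer.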

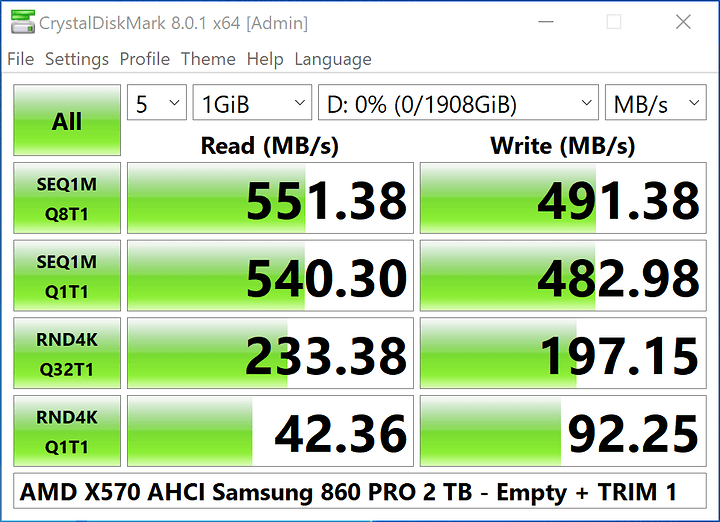

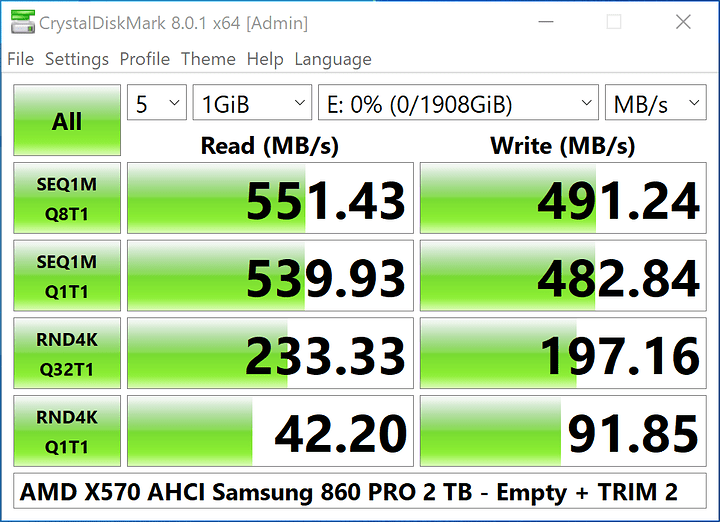

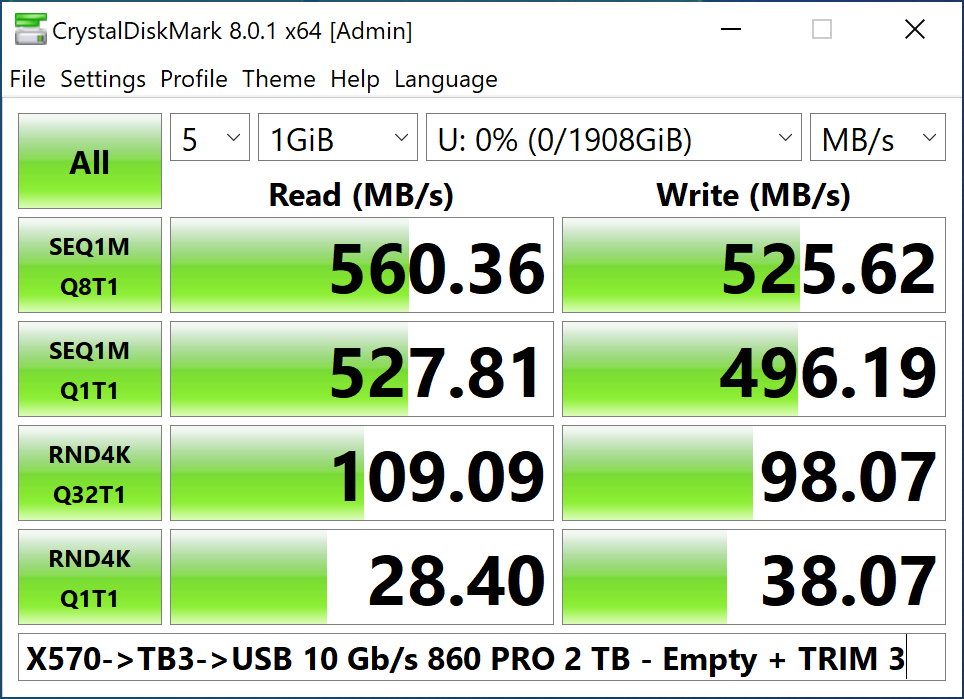

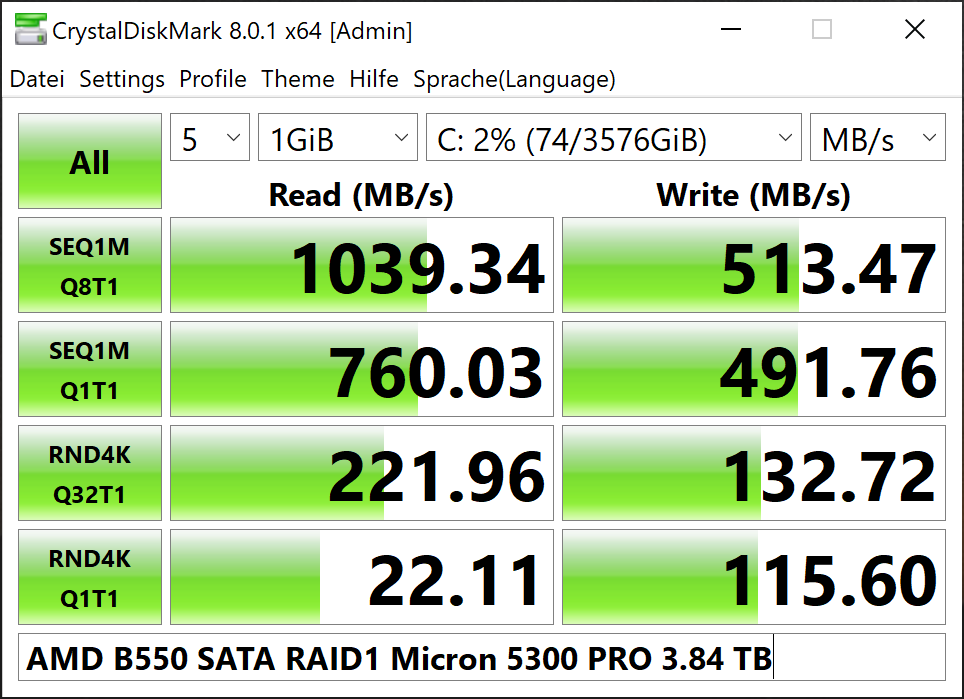

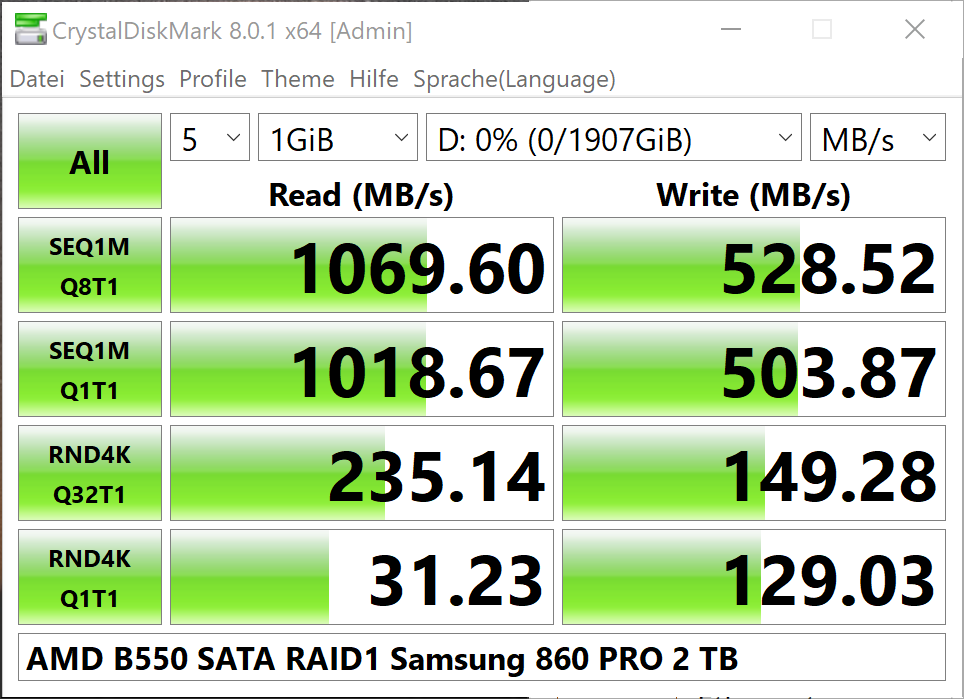

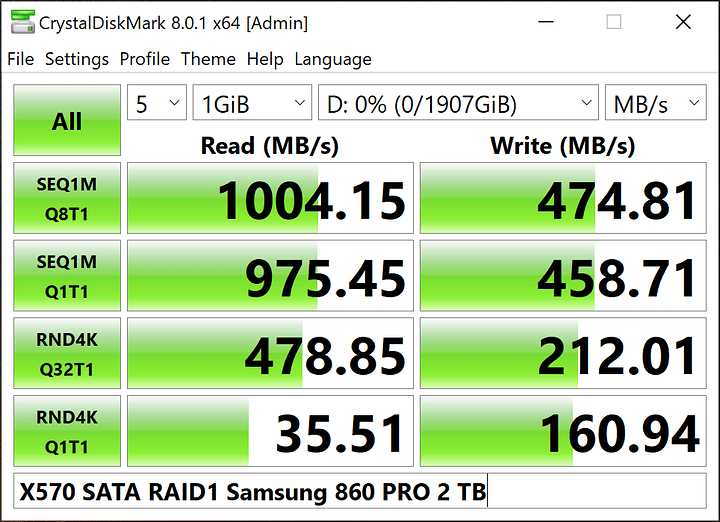

The larger (1M) writes are barely affected because they are mostly “full page/sector/stripe” writes that don’t need a read-modify-write cycle for every block (when a block is overwritten in full, its old contents don’t have to be read first to preserve them). This is why you’re seeing pretty close to theoretical max performance on those.

4k? It’s a crapshoot. Your latest write figures show pretty much exactly the sort of penalty I’d expect if read-modify-write were involved on the 4k writes, somewhere in one or more of the layers of abstraction between your OS filesystem and the cells on the SSD.
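Some rough arithmetic helps explain why 4k takes such a big hit while 1M barely notices. This uses a simplified model with a hypothetical 16 KiB unit somewhere in the stack. Every touched unit is rewritten whole, and a partly covered unit has to be read first. Real page and stripe sizes vary, and SSD firmware may hide some of this, so treat the numbers as illustrative rather than a prediction for your drives.

```python
def rmw_amplification(size: int, unit: int) -> float:
    """Device bytes moved per byte the application wrote, for one write of
    `size` bytes that starts on a `unit` boundary.

    Simplified model (an assumption): every touched unit is rewritten whole,
    and a unit that is only partly covered must be read first.
    """
    units_touched = -(-size // unit)          # ceiling division
    partly_covered = 1 if size % unit else 0  # aligned start: only the last unit can be partial
    bytes_read = partly_covered * unit
    bytes_written = units_touched * unit
    return (bytes_read + bytes_written) / size


if __name__ == "__main__":
    unit = 16 * 1024  # hypothetical 16 KiB page/stripe unit
    print(rmw_amplification(4 * 1024, unit))     # 8.0: read 16K + write 16K for 4K of data
    print(rmw_amplification(1024 * 1024, unit))  # 1.0: 64 full units, nothing to read
```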

Performance differences between SSDs could come down to controller optimisations for different NAND, different longevity strategies, different cache sizes, etc.

One more thing: the OS will hopefully do write caching (in RAM) if you tell it to, which masks this somewhat. This is ALSO why caching hardware RAID controllers exist, and why ZFS gathers writes in RAM into transaction groups (flushed at least every few seconds; `zfs_txg_timeout` defaults to 5 in current OpenZFS) to batch lots of smaller writes into bigger chunks that don’t tank performance as badly. I’m not sure whether CrystalDiskMark deliberately bypasses or disables this OS-level write cache to measure “raw” disk performance.
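Here is a toy Python illustration of the buffering idea. The class is hypothetical and nothing like the real page cache, a RAID controller cache, or ZFS internals. Writes are held in RAM, and on flush, adjacent ones are merged into larger extents. It assumes every write is a whole, non-overlapping block. In this toy, only adjacent small writes benefit, and truly random 4k writes still go out as separate 4k writes.

```python
class CoalescingWriteBuffer:
    """Toy RAM write-back buffer: collects small writes, flushes merged extents.

    Hypothetical illustration; assumes whole, non-overlapping block writes.
    """

    def __init__(self) -> None:
        self.pending: dict[int, bytes] = {}  # offset -> data

    def write(self, offset: int, data: bytes) -> None:
        # A later write to the same offset replaces the earlier one in RAM,
        # so the overwritten data never reaches the disk at all.
        self.pending[offset] = data

    def flush(self) -> list[tuple[int, int]]:
        """Merge contiguous pending writes; return the (offset, length) extents issued."""
        extents: list[tuple[int, int]] = []
        for offset in sorted(self.pending):
            length = len(self.pending[offset])
            if extents and extents[-1][0] + extents[-1][1] == offset:
                start, prev_len = extents[-1]
                extents[-1] = (start, prev_len + length)  # extend the previous extent
            else:
                extents.append((offset, length))  # gap: start a new extent
        self.pending.clear()
        return extents


if __name__ == "__main__":
    buf = CoalescingWriteBuffer()
    for i in range(256):                  # 256 adjacent 4 KiB writes...
        buf.write(i * 4096, bytes(4096))
    print(buf.flush())                    # [(0, 1048576)]: ...become one 1 MiB write
```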

So maybe confirm whether your actual use case suffers this badly, or whether it’s just CrystalDiskMark. It’s unlikely you’re constantly doing 4k writes unless you’re running some very specific niche application.
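If you want a quick check outside CrystalDiskMark, here’s a rough Python sketch. The function and file names are hypothetical, and fio or your real application would be a better test. It times random block-aligned writes into a test file, optionally forcing each one to disk with `fsync`. It still goes through your filesystem, so it measures the whole stack rather than the raw SSD. On a freshly created (possibly sparse) file, it also pays for block allocation.

```python
import os
import random
import time


def random_write_mbps(path: str, file_size: int = 64 * 1024 * 1024,
                      block: int = 4096, count: int = 2000,
                      sync_each: bool = False) -> float:
    """Write `count` blocks of `block` bytes at random block-aligned offsets.

    Returns throughput in MB/s (10**6 bytes per second). The file at `path`
    is created or overwritten. Hypothetical helper for a rough comparison only.
    """
    payload = os.urandom(block)             # incompressible data
    blocks_in_file = file_size // block
    with open(path, "wb") as f:
        f.truncate(file_size)               # set the file length (may be sparse)
        os.fsync(f.fileno())
        start = time.perf_counter()
        for _ in range(count):
            f.seek(random.randrange(blocks_in_file) * block)
            f.write(payload)
            if sync_each:
                f.flush()                   # push Python's buffer to the OS
                os.fsync(f.fileno())        # ask the OS to push it to the device
        f.flush()
        os.fsync(f.fileno())                # final sync so cached runs end on disk too
        elapsed = max(time.perf_counter() - start, 1e-9)
    return count * block / elapsed / 1e6
```

For example, run `random_write_mbps("rmw_test.bin")`, then the same call with `sync_each=True`, then with `block=1024 * 1024, count=64`. Put the file on the volume under test and delete it afterwards. If the cached 4k figure looks fine and only the `fsync` one is terrible, that suggests the write cache masks most of the penalty for workloads that don’t sync every write.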