I was planning to continue my existing thread, where I started off by sanity-checking my idea of buying a kit and placing in it what's needed, but for many, many reasons I ended up building my own SFF computer!

…with off-the-shelf parts and some hacks!

So, here’s the boring stuff…

| Part | Name | Quantity | Price per unit | Total Price |

|---|---|---|---|---|

| Chassis | LOUQE Ghost S1 Mk III | 1 | $351.56 | $351.56 |

| Cooling | NOCTUA NH-L9i chromax.Black CPU Air Cooler | 1 | $65.69 | $65.69 |

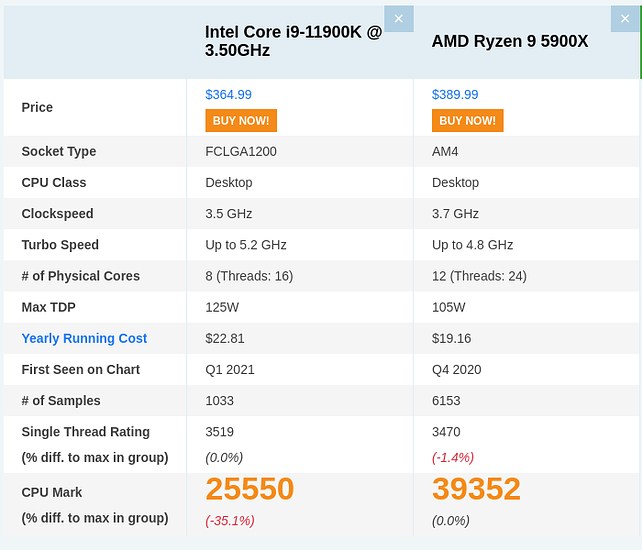

| Processor | Intel Core i9-11900K | 1 | $441.92 | $441.92 |

| Storage | Sabrent 4TB Rocket Q4 NVMe PCIe Gen4 SB-RKTQ4-HTSS-4TB | 1 | $755.07 | $755.07 |

| Storage | Samsung 870 Evo 4TB 2.5″ SATA III 6GB/s V-NAND SSD MZ-77E4T0BW | 3 | $508.60 | $1525.8 |

| Memory | G.Skill F4-4400C19D-64GTZR Desktop Ram Trident Z RGB Series 64GB (32GBx2) DDR4 4400MHz | 1 | $528.35 | $528.35 |

| Power Supply | Corsair SF600 Platinum Fully Modular Power Supply, 80+ Platinum | 1 | $141.54 | $141.54 |

| Motherboard | Gigabyte Z590I VISION D (rev. 1.0) | 1 | $380.48 | $380.48 |

| External Networking | QNAP USB 3.0 to 5GbE Adapter (QNA-UC5G1T) | 1 | $161.60 | $161.60 |

| Total | $4352.01 |

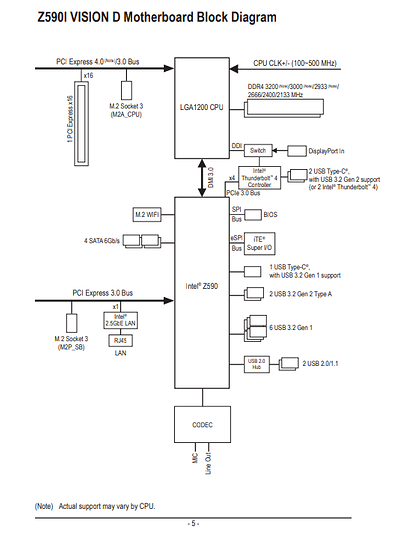

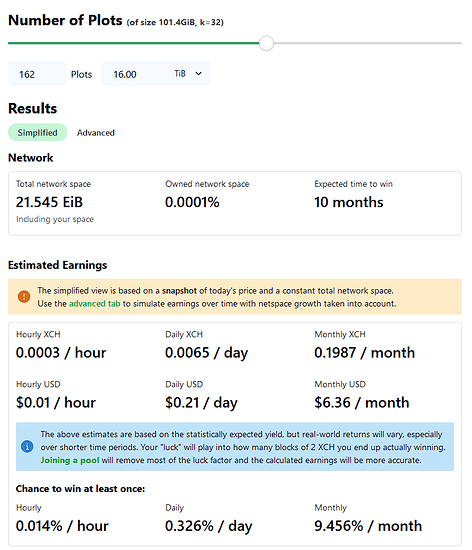
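As a quick sanity check on the table above, here's a small sketch that multiplies each line item's quantity by its unit price and sums the results. The prices are copied from the table; `PARTS` and `build_total` are just illustrative names, and `Decimal` is used so cents don't pick up floating-point rounding noise.

```python
from decimal import Decimal

# (part, quantity, unit price in USD), copied from the parts table
PARTS = [
    ("LOUQE Ghost S1 Mk III", 1, Decimal("351.56")),
    ("Noctua NH-L9i chromax.Black", 1, Decimal("65.69")),
    ("Intel Core i9-11900K", 1, Decimal("441.92")),
    ("Sabrent 4TB Rocket Q4", 1, Decimal("755.07")),
    ("Samsung 870 Evo 4TB", 3, Decimal("508.60")),
    ("G.Skill Trident Z RGB 64GB", 1, Decimal("528.35")),
    ("Corsair SF600 Platinum", 1, Decimal("141.54")),
    ("Gigabyte Z590I VISION D", 1, Decimal("380.48")),
    ("QNAP QNA-UC5G1T", 1, Decimal("161.60")),
]


def build_total(parts):
    """Sum quantity x unit price over every line item."""
    return sum((qty * price for _, qty, price in parts), Decimal("0"))


if __name__ == "__main__":
    print(f"${build_total(PARTS)}")  # $4352.01
```

The three Samsung drives are the only multi-quantity line, which is where a hand-added total is most likely to slip.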

Now, these are standard off-the-shelf parts that'll fit into the chassis no problem and do everything required with no jank whatsoever. Well, no jank = no fun, and while picking my motherboard I came across the Z590I's manual (source) and found its block diagram.

The block diagram shows an M.2 slot I wasn't expecting at all (labelled M2P_SB), and searching for that term turned up a particular beauty.

So, I have a PCIe 3.0 x4 slot at my disposal… hmm… now, for Thunderbolt 10GbE, the authority more or less seems to be the “OWC Thunderbolt 3 10G Ethernet Adapter (OWCTB3ADP10GBE)” and it does not seem to be capable of Thunderbolt daisy-chaining… at least if these images are to be believed.

Now, I find it kinda odd that I should give up a 40Gbps Thunderbolt 4 port for 10Gbps Ethernet. I could get a dock, but they're pricey and I'd be forced to import one, which would attract heavy costs.

So, why don't we use the PCIe 3.0 x4 lanes exposed by this M.2 slot?

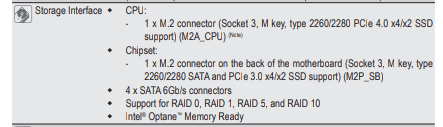

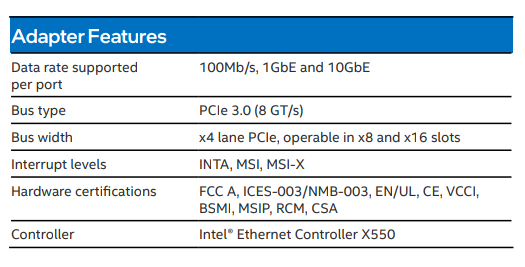

Now, we need a card that'll behave in such a slot, so I went hunting for a PCIe NIC that would make good use of those four lanes. After a lot of searching and reading product specifications, I found that the Intel X550T2BLK's product brief had this to say…

Alright, you have my full attention.
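To see why four lanes are enough for two 10GbE ports, here's a back-of-the-envelope link budget. It's a sketch that assumes the card negotiates a PCIe 3.0 x4 link through the M.2 slot and that both ports run at 10GbE; it counts only PCIe 3.0 line encoding (8 GT/s per lane, 128b/130b) and ignores packet and protocol overhead, so real-world headroom is smaller than printed. The helper names are hypothetical.

```python
# PCIe 3.0 signalling: 8 GT/s per lane with 128b/130b line encoding.
GEN3_GT_PER_LANE = 8.0
GEN3_ENCODING = 128 / 130


def pcie_gen3_gbps(lanes: int) -> float:
    """Usable bandwidth per direction after line encoding, before packet overhead."""
    return GEN3_GT_PER_LANE * GEN3_ENCODING * lanes


def nic_demand_gbps(ports: int, port_speed_gbps: float) -> float:
    """Worst case: every port saturated in the same direction at once."""
    return ports * port_speed_gbps


link = pcie_gen3_gbps(4)            # about 31.5 Gbps each way
demand = nic_demand_gbps(2, 10.0)   # 20 Gbps each way
print(f"link {link:.1f} Gbps, demand {demand:.0f} Gbps, headroom {link - demand:.1f} Gbps")
```

Because Ethernet is full duplex and PCIe has independent lanes in each direction, comparing one direction against one direction is the fair comparison here.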

That settles what we're gonna put in there; now the question is, how do I bridge it?

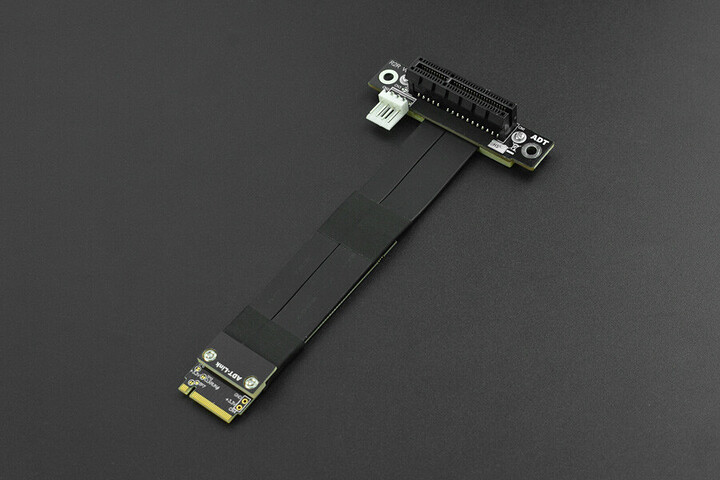

I remembered a video where Linus Tech Tips tried to bridge a LattePanda Alpha to a Titan RTX (source), and I figured: unlike the Titan, we're mapping 4 lanes to 4 lanes, so let's do the same thing with less jank. Through enough Google-fu, I found the R42SR M.2 Key-M PCI-E x4 Extension Cord for LattePanda Alpha & Delta.

Alright, everything checks out: M-key male to M-key female, x4 (M.2) to x4 (adapter) to x4 (card). But wait, it needs a 4-pin 12V input.

Well, shit.

Every Molex-to-floppy 4-pin adapter I found was outrageously priced, to the tune of $28.82, which I'm not paying. After going through multiple online retailers, I remembered I have a spare non-modular Corsair 450W PSU collecting dust, with a cable that goes Supply-SATA-Molex-Molex-4PIN…

So… if I snip the cable between the SATA and Molex connectors, I've got myself a dinky little adapter, for free!

We're back in business! The SF600 has a female Molex connector, and since our makeshift adapter also comes from a PSU, it has a female connector too, so we just need a Molex male-to-male adapter, which thankfully costs a more reasonable… $4.70 (still too much money, but the alternative was to travel somewhere far away and spend more on transport than on the cable).

So, we have the ingredients for 10GbE inside the case. I plan on installing a graphics card, but since the NIC takes up one slot, we're restricted to single-slot cards. That's perfect, as AMD makes single-slot workstation cards, and I only have a 600W power supply anyway.

I'm waiting on a workstation version of the rumored RX 7800 (source), but yeah, I'm glad!

| Part | Name | Quantity | Price per unit | Total Price |
|---|---|---|---|---|
| Network Card | Intel Corp X550T2BLK Converged Network Adapter | 1 | $475.75 | $475.75 |
| Converter | R42SR M.2 Key-M PCI-E x4 Extension Cord | 1 | $46.56\* | $46.56\* |
| Converter | Generic 4-pin Molex Male to 4-pin Molex Male Connector cable | 1 | $3.88 | $3.88 |
| Converter | Generic 4-pin Molex Female to 4-pin Floppy Connector | 1 | $0 | $0 |
| Total | | | | $526.19 |

\* The extension cable unfortunately had to be imported, so its price includes shipping and a 75% import tax.
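For a rough idea of how much of that $46.56 is tax, the figure can be worked backwards. This is only a sketch under an assumption the post doesn't confirm: that the 75% was levied on the item price plus shipping combined, so the landed cost is 1.75 × the pre-tax cost. `split_landed_cost` is a hypothetical helper name.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def split_landed_cost(landed: Decimal, tax_rate: Decimal) -> tuple[Decimal, Decimal]:
    """Return (pre-tax cost, tax), assuming tax = tax_rate * pre-tax cost."""
    pre_tax = (landed / (1 + tax_rate)).quantize(CENT, rounding=ROUND_HALF_UP)
    return pre_tax, landed - pre_tax


pre_tax, tax = split_landed_cost(Decimal("46.56"), Decimal("0.75"))
print(pre_tax, tax)  # 26.61 19.95
```

If the tax was charged on the item price alone, the split would come out differently, so treat these numbers as illustrative.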

Now, one drawback (if you can call it that) of the Ghost S1 Mk III is that it has no front I/O, which is perfectly fine for my use case. But it also means that, going back to the Z590I VISION D's block diagram, we're leaving quite a lot of connectivity on the table.

We can’t have that!

Unfortunately, we can't use the usual USB-header-to-slot-bracket ports, as we simply don't have the slot real estate, so we go for the next best thing: using a USB DOM to hold TrueNAS SCALE.

Sadly, absolutely nobody in my area sells a USB 2.0 DOM, let alone a USB 3 DOM, not even server vendors. Still, I don't want those ports going to waste, and since I'd been reading up on SFF builds, I remembered that these things exist…

It's for a Lian Li case, but I'll snag one… thanks! So, we've got ourselves a USB 3.2 Gen 1 port. My initial thought was to buy a flash drive, but then I remembered that, in the pecking order of NAND, flash drives tend to get the kinda crappy stuff.

I have a 970 EVO Plus, formerly the boot drive in my current rig, that's been collecting dust, and now it gets its time to shine! I needed an enclosure since I don't own one, so I bought one that seemed reputable enough, and there we have it: a makeshift USB DOM. Except it's not a DOM, and it's really messy, but it works!
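One thing worth knowing about this setup: whatever the enclosure supports, the internal USB 3.2 Gen 1 port caps the link. Gen 1 signals at 5 Gbps with 8b/10b encoding, so the ceiling before any protocol overhead is 500 MB/s. The sketch below computes that; the 3,500 MB/s sequential-read figure for the 250GB 970 EVO Plus is an assumed rated spec, not something measured in this build, and the function name is hypothetical.

```python
def usb_payload_mb_per_s(signal_gbps: float, data_bits: int, line_bits: int) -> float:
    """Ceiling after line encoding only; real-world throughput is lower still."""
    return signal_gbps * 1000 * data_bits / line_bits / 8


usb32_gen1 = usb_payload_mb_per_s(5.0, 8, 10)  # 8b/10b encoding -> 500 MB/s
ssd_seq_read = 3500.0  # assumed rated sequential read for a 250GB 970 EVO Plus
usable_share = min(usb32_gen1, ssd_seq_read) / ssd_seq_read
print(f"USB 3.2 Gen 1 ceiling: {usb32_gen1:.0f} MB/s ({usable_share:.0%} of the drive's rated read)")
```

For a boot drive that's mostly read at startup, that cap likely matters little; the NAND quality and endurance are the real win over a flash stick.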

| Part | Name | Quantity | Price per unit | Total Price |
|---|---|---|---|---|
| Storage | Samsung 970 EVO Plus 250GB PCIe NVMe M.2 (2280) Internal SSD | 1 | $0 | $0 |
| Converter | ASUS ROG Strix Arion Aluminum Alloy M.2 NVMe External SSD Enclosure | 1 | $62.49 | $62.49 |
| Converter | Lian Li LANCOOL II-4X 3.1 Type C Cable for LANCOOL II - LANII-4X | 1 | $13.67 | $13.67 |
| Total | | | | $76.16 |
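Adding up the three tables gives the whole build's cost so far. This sketch just sums the three subtotals as listed; the dictionary labels are my own shorthand for each table.

```python
from decimal import Decimal

# Subtotals copied from the three parts tables
SUBTOTALS = {
    "core build": Decimal("4352.01"),
    "10GbE over M.2": Decimal("526.19"),
    "makeshift USB boot drive": Decimal("76.16"),
}

grand_total = sum(SUBTOTALS.values(), Decimal("0"))
print(f"${grand_total}")  # $4954.36
```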

I've bought double-sided tape and a Dremel for a super secret update, which I'll document if I don't bung it up.