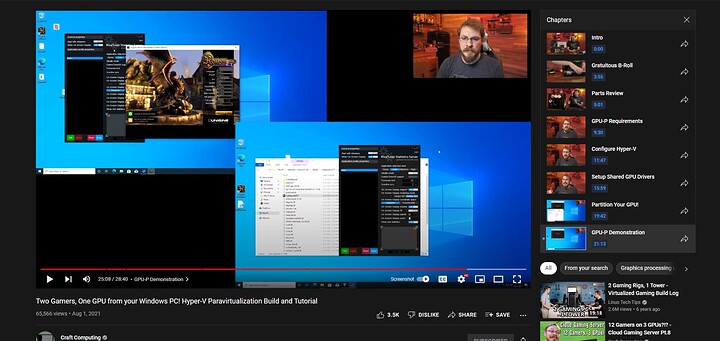

Hello, I followed this YouTuber’s technique and managed to get it running. What I don’t understand is how to use the other monitor separately, with its own keyboard and mouse. I’m using Windows 10, by the way. I’m also getting Parsec error -15000, and I can’t make sense of Parsec. Here is a picture of the tutorial I followed.

@cheif22 I just added what I hope is Windows 10 20H1+ support to the script, please try it out.

@jamesstringerparsec Thanks for the update! When I get another day free this week I will give it a go. Had issues with a Windows 11 version but I believe it was USER error on my part.

I’ll give it a go again this week.

Thanks!

Update:

I was looking through the script and noticed you use this line:

Add-VMGpuPartitionAdapter -VMName $VMName -InstancePath $DevicePathName

Windows 10 20H1, and other versions besides Windows 11, do not have the -InstancePath switch when I look in PowerShell ISE. It does have -AdapterID, but I’m not certain that it performs the same function.

I can try it to see whether it works, but it may not.
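To make the version difference concrete, here is a minimal Python sketch that only assembles the command string and never touches Hyper-V. It assumes a Windows 11 host can be recognised by a build number of 22000 or higher; the helper name `build_add_adapter_command` and the sample values are hypothetical. Whether -AdapterID could stand in for -InstancePath on Windows 10 is left open, as in the post above.

```python
from typing import Optional

# Assumption: Windows 11 starts at build 22000; Windows 10 builds are lower.
WIN11_FIRST_BUILD = 22000


def build_add_adapter_command(vm_name: str, host_build: int,
                              instance_path: Optional[str] = None) -> str:
    """Hypothetical helper: return the PowerShell line that attaches a GPU partition.

    -InstancePath is only appended on Windows 11 hosts, because the Windows 10
    version of Add-VMGpuPartitionAdapter does not offer that switch.
    Values are assumed not to contain double quotes.
    """
    cmd = f'Add-VMGpuPartitionAdapter -VMName "{vm_name}"'
    if host_build >= WIN11_FIRST_BUILD and instance_path:
        cmd += f' -InstancePath "{instance_path}"'
    return cmd


if __name__ == "__main__":
    # Windows 10 21H2 is build 19044, so the switch is left out here.
    print(build_add_adapter_command("GamingVM", 19044, "GPU-INSTANCE-PATH"))
```

On Windows 10 the sketch falls back to the bare command and lets Windows pick the adapter itself.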

Has anyone completely cracked the GeForce Experience code yet? I can’t even get it installed, even with the identified workaround.

Thanks for the reply, but as I said, I don’t have those two files, or even one of them. I don’t understand why, since everyone else seems to have them. So my question is: how do I get the files?

Windows 11 Pro

NVIDIA GeForce RTX 3060 Laptop GPU with the latest update, yet the files are not there. I’m not sure it would work, but could anyone send me those two folders?

Yes, I see the issue now. On Windows 10 the adapter is assigned automatically, and there doesn’t seem to be a way to query which adapter is assigned to the VM: Get-VMGPUPartitionAdapter and its corresponding WMI query do not return anything like an InstanceID that could tell the script which driver to copy over.

If the user has only one GPU on Windows 10, I can make it work, since it’s safe to assume Windows 10 will assign that GPU to the VM.

There may be some way to work out which GPU Windows 10 assigns by default (maybe on a multi-GPU system it picks the first high-performance GPU), but figuring that out will be annoying and take a lot of trial and error.

@cheif22 I figured out a solution for Windows 10 and updated the script to support it. On Windows 10 you must leave the GPUName field in the script params as “AUTO”. It turns out Windows 10 simply uses the first GPU available for partitioning, so “AUTO” just uses the first GPU returned by the WMI query.

In this case it works correctly (tested on a multi-GPU system running Win10 21H2).

Manual GPU selection doesn’t work in Windows 10, but works fine in Windows 11.
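The selection rule described above can be sketched in Python as follows. This is a minimal sketch, not the script’s actual code: `pick_gpu` is a hypothetical name, and the list passed in is assumed to be in the same order the WMI query returned the GPUs.

```python
from typing import List, Optional


def pick_gpu(gpu_name: str, partitionable_gpus: List[str],
             is_windows_11: bool) -> Optional[str]:
    """Hypothetical re-creation of the "AUTO" rule.

    partitionable_gpus is assumed to be in WMI query order.
    Returns the GPU to partition, or None if nothing matches.
    """
    if not partitionable_gpus:
        return None  # nothing available to partition
    if gpu_name == "AUTO":
        # Windows 10 partitions the first available GPU, so AUTO mirrors that.
        return partitionable_gpus[0]
    if not is_windows_11:
        # Manual selection is not honoured on Windows 10.
        raise ValueError("Manual GPU selection needs Windows 11; use AUTO on Windows 10")
    # Windows 11: use the requested GPU only if it is actually partitionable.
    return gpu_name if gpu_name in partitionable_gpus else None


if __name__ == "__main__":
    gpus = ["NVIDIA GeForce RTX 2080 Ti", "NVIDIA GeForce GTX 1080"]
    print(pick_gpu("AUTO", gpus, is_windows_11=False))
```

Raising an error for a manual name on Windows 10, rather than silently falling back to the first GPU, keeps the user from believing a selection took effect when it did not.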

Did my first setup of all this with a Win 11 host and a 2080 Ti. Thanks to everyone for this awesome thread.

I noticed a few things that I’m guessing are normal, based on some of the comments here, but I want to confirm.

1: No matter what, the VM always shows an old driver (from like 2016?) instead of the actual Nvidia driver?

2: NVIDIA Control Panel and GeForce Experience don’t work in the VM?

3: GPU-Z and most of dxdiag shows nothing?

4: The drivers in use on my host were nv_dispsi.inf_amd64 and NOT nv_dispi.inf_amd64 (note the extra S). I am using the Studio driver, though. NVIDIA Control Panel on the host reports the new driver I installed. Is that difference OK? (Both folders exist on the host, but when I look in Device Manager, the one with the extra S, dispsi, is the one the card is using, so that’s the one I copied to the VM.) No Code 43 error, and it seems to be accelerated properly.

Has anybody tried to use GPU-P with Proxmox or Unraid? I need Linux as the base, but maybe GPU-P would work in a Windows VM.

I tried the search but didn’t find anything.

If you’re looking to partition cards in Proxmox, Craft Computing did a video on that. I believe Jeff followed up on it later with some Tesla GPUs as well.

That may work better than trying to partition a GPU for a VM inside another VM, which I can see causing more issues.

I’d look at this first to see if this would fit your needs.

Hi,

Hoping to confirm some things:

- The VM driver for the card will, for some reason, show a different year no matter what. I’ve gotten a 2005-2006 date myself on a 2070 non-Super.

- Someone did get GeForce Experience to install, but it still seems to be a broken mess in the VM, unless someone has figured out how to fix it.

- GPU-Z I cannot explain. It’s Hyper-V, and I wonder whether it’s looking at the Hyper-V viewer driver instead of the GPU, which is possible.

- I never caught on to the driver folder “nv_dispi.inf_amd64” being changed to “nv_dispsi.inf_amd64”. But yes, looking up the driver file location in Device Manager can help you find the correct folder. The photo I posted in my earlier comment lists my 2070 driver without an S, but I’m running the Game Ready drivers, so it may be a Studio driver thing.

I may have gotten some of this wrong, and anyone can correct me; this is just from my experience with Hyper-V and GPU-P.
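Looking the folder up in Device Manager can be partly automated: the Driver File Details dialog lists full file paths, and the DriverStore folder is the path component right after FileRepository. Here is a minimal Python sketch, assuming the path was copied from that dialog; `driver_store_folder` and the sample path are hypothetical.

```python
from pathlib import PureWindowsPath
from typing import Optional


def driver_store_folder(file_path: str) -> Optional[str]:
    """Return the FileRepository sub-folder holding a driver file, or None.

    file_path is assumed to be a full path copied from Device Manager's
    Driver File Details dialog. PureWindowsPath parses it on any OS.
    """
    parts = PureWindowsPath(file_path).parts
    lowered = [p.lower() for p in parts]
    if "filerepository" not in lowered:
        return None  # the file does not live in the DriverStore
    idx = lowered.index("filerepository")
    # Need at least one folder after FileRepository plus the file name itself.
    if idx + 2 >= len(parts):
        return None
    return parts[idx + 1]


if __name__ == "__main__":
    # Hypothetical path; the suffix after "amd64_" differs on every machine.
    path = r"C:\Windows\System32\DriverStore\FileRepository\nv_dispsi.inf_amd64_0123abcd\nvldumdx.dll"
    print(driver_store_folder(path))  # nv_dispsi.inf_amd64_0123abcd
```

Reading the folder from the path that Device Manager reports, instead of guessing from the name, sidesteps the dispi/dispsi difference entirely.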

I have tried absolutely everything to achieve my goal, but at this point I have to accept defeat, at least without further assistance. I blame MS and NV for making this such an arduous, improbable task. Everything with them is like getting blood from a stone.

My goal: 2 GPUs, 1 PC

The main GPU would be a (slightly weaker) GTX 1080 that I already have and that otherwise works great. It would run OBS, capturing a VM and streaming to Twitch.

The secondary GPU, which I also already happen to have, would actually be the main GPU inside the VM, used to play games.

The problem is that I cannot realistically upgrade right now, so when buggy games or bottlenecks take down my entire PC with BSODs, my stream goes with it. These kinds of issues kill any momentum my stream had and I lose viewers (that I mostly didn’t have anyway, which is why when I get even one and lose them to a crash, it makes me pretty upset).

The solution I’ve come up with, at least, is to run all of the games on their own in one VM with nothing else running, whilst capturing the VM with OBS on the main desktop and sending the feed to Twitch.

The idea is that one GPU, in a very controlled environment, runs games with zero interference, whilst another GPU that the game never touches is used by OBS. This way, a crash takes down only the VM and I can just boot it up again.

Now, I’m unsure whether OBS/Hyper-V/Windows will support this or whether it will work at all, but as it stands I can’t get past the “Code 43” error to get the graphics card running in the VM.

What I’ve done:

I followed the guide and copied all the driver files over, then customised the script and ran it. It took some work to get rid of all the errors, but the script works perfectly now. Note: I searched high and low, trying every trick to specify which GPU to partition, but in the end I can only conclude that (in the most Microsoft way possible) it just doesn’t work on Windows 10. I still get Code 43 no matter what I try and no matter which GPU it picks.

And in case you’re wondering, I remove the GPU partition before and after each test.

Next, I connected both GPUs to my main desktop, identified them properly for the long term (renamed them in devmgmt), disabled the main GPU, ran the script so that it used the secondary GPU, then re-enabled the main GPU and disabled the secondary GPU. Back into the VM, and still Code 43.

I’m now at a loss as to what else I can do to achieve my goal. If my setup clearly isn’t supporting the passthrough and I’ve done everything imaginable, I can only blame NVIDIA’s antics.

Is there anyone who can assist?

Has anyone got the AMD encoder working inside the VM?

I am able to use the encoder on my host machine but not the VM.

Hardware:

CPU: 2700X

Mem: 16 GB

GPU: 6700XT

That script is awesome. Thank you!

For some reason it didn’t work with my existing VM, but that VM wasn’t working to begin with.

How can I allocate only 512 MB of my 4 GB NVIDIA GTX 1050 Ti?

Every time I try, it shows error 43!
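The 512 MB request is simple arithmetic: 512 MB of a 4096 MB card is 12.5%. The Python sketch below turns that into a partition value, assuming the partition values (MinPartitionVRAM, MaxPartitionVRAM, OptimalPartitionVRAM) use a linear scale on which 1,000,000,000 stands for the whole resource. That scale is an assumption, not documented behaviour; the helper names are hypothetical, and nothing here says whether a smaller slice avoids error 43.

```python
# Assumption: 1,000,000,000 represents 100% of the partitionable resource.
FULL_PARTITION_VALUE = 1_000_000_000


def allocation_percentage(wanted_mb: float, total_mb: float) -> float:
    """Percentage of the card's VRAM that wanted_mb represents."""
    if not 0 < wanted_mb <= total_mb:
        raise ValueError("wanted_mb must be greater than 0 and at most total_mb")
    return wanted_mb / total_mb * 100


def partition_value(percentage: float) -> int:
    """Scale a percentage onto the assumed 0..FULL_PARTITION_VALUE range."""
    return round(FULL_PARTITION_VALUE * percentage / 100)


if __name__ == "__main__":
    pct = allocation_percentage(512, 4096)  # GTX 1050 Ti with 4 GB
    print(pct, partition_value(pct))  # 12.5 125000000
```

If your copy of the script takes a percentage-style allocation parameter, 12.5 is the value to try; check how it scales that number before relying on the figure above.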

Hi,

First: @jamesstringerparsec thanks for the script!

I tried it on W10 and it worked perfectly; the only remaining issue is below.

Has anyone found a way to make the encoder/decoder work in Parsec with AMD cards?

I’m using an RX 570. GPU-P is working and performance is pretty decent, but RDP is laggy.

Parsec throws a 15000 error when trying to connect on the host. If I try to connect from the VM to another Parsec host, I get a -14 error. It seems like the encoder/decoder is not working in the VM.

Thanks

I can see why this would be a use case, but I don’t think it’s a good idea here. Sorry to say it, but I’d suggest looking into the Proxmox GPU setup for something like that, or possibly using Unraid to fully dedicate a GPU to a machine more reliably. Doing this, though, means you would have to look into something like the 2 Gamers 1 CPU guide from Linus Tech Tips, so that you have two VMs on the computer, each working with specific hardware. Assigning USB will need a PCIe USB card with individual lane support so that each VM gets its own dedicated USB.

At the moment I don’t feel Hyper-V is stable enough to use for streaming. If you are using Windows 10, assigning a specific GPU does not work; Windows 11 has this feature.

I don’t want to kill anyone’s dream of doing this, because I can see it being a good use case for many people. I’d look into the 2 Gamers 1 CPU idea with Unraid, which might be much more reliable.

Like “Newbie1”, I have no such folders; in fact, even searching the entire C: drive for “nv_dispi.inf_amd64” shows no results…

Could it be that NV updated their drivers and changed the name of their folders?

I do see a folder called “nvmdi.inf_amd64_”, referenced in the driver file details, that shows up as “Display.NvContainer\NVDisplay.container.exe”.

Yeah, it appears NV renamed the folder. If you use the “nvmdi.inf_amd64_” folder in place of the missing “nv_dispi.inf_amd64” folder, it works.
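Since the folder name can differ between driver packages (nv_dispi, nv_dispsi and nvmdi have all come up in this thread), a lookup that tries each known prefix avoids hard-coding one. Here is a minimal Python sketch; the prefix list comes only from the names mentioned in this thread, and `find_nvidia_driver_folders` is a hypothetical name.

```python
from typing import Iterable, List

# Folder-name prefixes mentioned in this thread, in the order to try them.
CANDIDATE_PREFIXES = ("nv_dispi.inf_amd64", "nv_dispsi.inf_amd64", "nvmdi.inf_amd64")


def find_nvidia_driver_folders(folder_names: Iterable[str]) -> List[str]:
    """Return FileRepository folder names that match a known NVIDIA prefix.

    folder_names is assumed to be a plain listing of the DriverStore
    FileRepository directory. Matches are grouped by CANDIDATE_PREFIXES
    order, then sorted alphabetically within each group.
    """
    names = sorted(folder_names)
    matches: List[str] = []
    for prefix in CANDIDATE_PREFIXES:
        matches.extend(n for n in names if n.lower().startswith(prefix))
    return matches


if __name__ == "__main__":
    listing = ["nvmdi.inf_amd64_1234", "usbport.inf_amd64_aa"]
    print(find_nvidia_driver_folders(listing))  # ['nvmdi.inf_amd64_1234']
```

On a Windows host you would pass `os.listdir(r"C:\Windows\System32\DriverStore\FileRepository")`. More than one match is possible (both the dispi and dispsi folders can exist side by side), so it is still worth confirming in Device Manager which one the card actually uses.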