TL;DR

QCOW2 (and raw) volumes on top of a Gen4 NVMe ZFS pool are much slower than ZVOLs (and QCOW2 on ext4) for backing a Windows 10 VM, which I did not expect.

Background

I’ve got 4x 1 TB NVMe drives (WD SN850, Gen4) for backing VMs and /home on my Linux workstation. I’m trying out and benchmarking different storage models for the VMs, and I’m seeing (for me) unexpected differences in performance between ZVOLs on the one hand and QCOW2/raw files on the other. So I’m asking the experts here whether I’m doing something wrong, or whether conventional wisdom has simply changed with new hardware.

In the past I’ve mostly used ZVOLs for VMs, on both HDD- and SSD-based pools. However, ever since reading about Jim Salter’s tests of ZVOL vs. QCOW2 for VMs, where he found the performance loss with QCOW2 to be tiny, I’ve been considering QCOW2-on-ZFS for my next machine (this one). The performance hit with QCOW2 for my Windows 10 VM, though, is much larger than what the linked blog post observed (with Linux VMs). I’m going to present representative tests here and see what L1Techs has to say.

Test setup

System: EPYC 7252 (8c 3.1-3.2 GHz), 64 GB RAM in 4 channels, Supermicro H12SSL-I

Host: Linux 5.13.0-28-generic #31~20.04.1-Ubuntu SMP (Kubuntu 20.04.3)

Storage: 4x WD SN850 1 TB (4k format, striped mirrors zpool, ashift = 12)

ZFS version: zfs-0.8.3-1ubuntu12.13, zfs-kmod-2.0.6-1ubuntu2

KVM Guest (via libvirt): Windows 10 Pro, virtio-scsi storage driver (0.1.190)

NTFS cluster size: 4k (default)

Libvirt details:

Device model = virtio-scsi

Cache mode = none

IO mode = native

Discard = unmap

Detect Zeroes = off
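The settings above correspond to attributes on the `<driver>` element of a libvirt disk definition. As a rough sketch only (the image file name, the `sda` target, and the `build_disk_xml` helper are illustrative assumptions, not copied from the actual domain XML), the file-backed disk could be generated like this:

```python
import xml.etree.ElementTree as ET


def build_disk_xml(source_file: str, fmt: str = "qcow2") -> str:
    """Build a libvirt <disk> element using the options listed above."""
    disk = ET.Element("disk", {"type": "file", "device": "disk"})
    # Cache mode, IO mode, discard and zero detection live on <driver>.
    ET.SubElement(disk, "driver", {
        "name": "qemu",
        "type": fmt,               # "qcow2" or "raw"
        "cache": "none",
        "io": "native",
        "discard": "unmap",
        "detect_zeroes": "off",
    })
    ET.SubElement(disk, "source", {"file": source_file})
    # bus="scsi" attaches the disk to the (virtio-scsi) SCSI controller.
    ET.SubElement(disk, "target", {"dev": "sda", "bus": "scsi"})
    return ET.tostring(disk, encoding="unicode")


# Hypothetical image path under the dataset mountpoint used in the tests.
print(build_disk_xml("/nvme/testbed64k/win10.qcow2"))
```

For a ZVOL the disk would instead be of type `block` with a `dev` source pointing at the volume's device node; the driver attributes stay the same.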

Test software (in guest): CrystalDiskMark 8, "nvme ssd" preset (for exact tests see results below)

Zdb info

nvme:

version: 5000

name: 'nvme'

state: 0

txg: 258406

pool_guid: 1480964832145129513

errata: 0

hostid: 255178082

hostname: 'snucifer'

com.delphix:has_per_vdev_zaps

vdev_children: 2

vdev_tree:

type: 'root'

id: 0

guid: 1480964832145129513

create_txg: 4

children[0]:

type: 'mirror'

id: 0

guid: 9182964367811329375

metaslab_array: 146

metaslab_shift: 33

ashift: 12

asize: 1000118681600

is_log: 0

create_txg: 4

com.delphix:vdev_zap_top: 129

children[0]:

type: 'disk'

id: 0

guid: 7877337056442423979

path: '/dev/disk/by-id/nvme-WDS100T1X0E-00AFY0_2139DK441905-part1'

devid: 'nvme-WDS100T1X0E-00AFY0_2139DK441905-part1'

phys_path: 'pci-0000:81:00.0-nvme-1'

whole_disk: 1

create_txg: 4

com.delphix:vdev_zap_leaf: 130

children[1]:

type: 'disk'

id: 1

guid: 8728541461798927995

path: '/dev/disk/by-id/nvme-WDS100T1X0E-00AFY0_21413J443312-part1'

devid: 'nvme-WDS100T1X0E-00AFY0_21413J443312-part1'

phys_path: 'pci-0000:84:00.0-nvme-1'

whole_disk: 1

create_txg: 4

com.delphix:vdev_zap_leaf: 131

children[1]:

type: 'mirror'

id: 1

guid: 6136475571192416831

metaslab_array: 135

metaslab_shift: 33

ashift: 12

asize: 1000118681600

is_log: 0

create_txg: 4

com.delphix:vdev_zap_top: 132

children[0]:

type: 'disk'

id: 0

guid: 15888088810331018339

path: '/dev/disk/by-id/nvme-WDS100T1X0E-00AFY0_2139DK445807-part1'

devid: 'nvme-WDS100T1X0E-00AFY0_2139DK445807-part1'

phys_path: 'pci-0000:82:00.0-nvme-1'

whole_disk: 1

create_txg: 4

com.delphix:vdev_zap_leaf: 133

children[1]:

type: 'disk'

id: 1

guid: 7038756282300661913

path: '/dev/disk/by-id/nvme-WDS100T1X0E-00AFY0_21413J448714-part1'

devid: 'nvme-WDS100T1X0E-00AFY0_21413J448714-part1'

phys_path: 'pci-0000:83:00.0-nvme-1'

whole_disk: 1

create_txg: 4

com.delphix:vdev_zap_leaf: 134

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

The tests

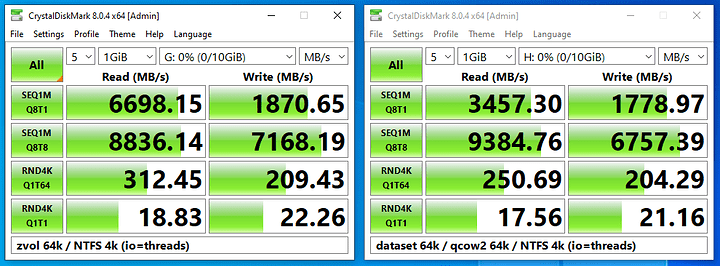

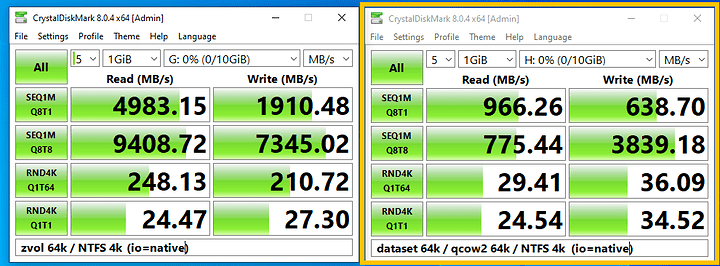

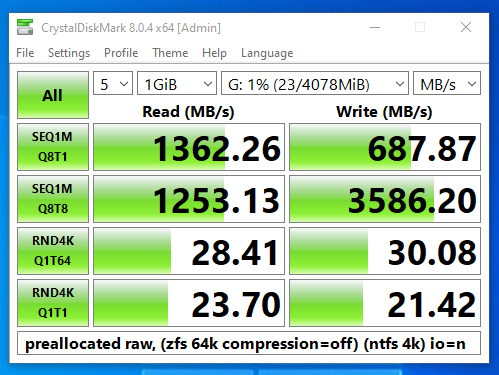

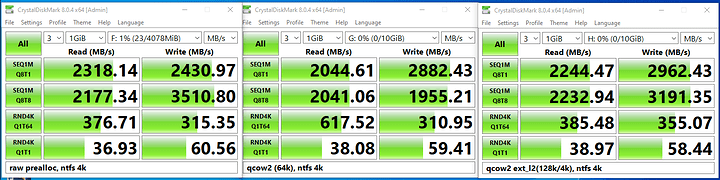

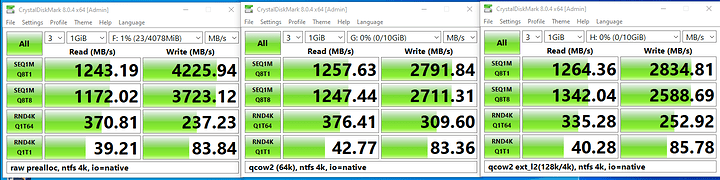

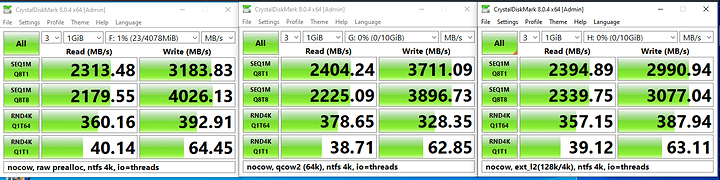

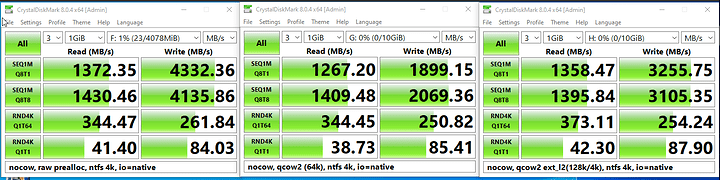

I tried a lot of different options, and I won’t review them all here. The results below are representative. ZVOLs are much faster than QCOW2, except for 4k IO at tiny queue depths. I tested with the ARC caching data and with it caching metadata only (primarycache=all vs. primarycache=metadata).

NB: In my limited testing with RAW files, they seem to perform pretty much the same as QCOW2. So these tests can generally be read as ZVOL vs. file-backed IO, rather than ZVOL vs. QCOW2 specifically.

The ZVOL and QCOW2 result sets below can be compared against each other.

ZVOL Tests

ZVOL properties

| NAME | PROPERTY | VALUE | SOURCE |
|---|---|---|---|
| nvme/testvol64k | type | volume | - |
| nvme/testvol64k | creation | ons dec 15 21:45 2021 | - |
| nvme/testvol64k | used | 4,90M | - |
| nvme/testvol64k | available | 1,74T | - |
| nvme/testvol64k | referenced | 4,90M | - |
| nvme/testvol64k | compressratio | 4.81x | - |
| nvme/testvol64k | reservation | none | default |
| nvme/testvol64k | volsize | 10G | local |
| nvme/testvol64k | volblocksize | 64K | - |
| nvme/testvol64k | checksum | on | default |
| nvme/testvol64k | compression | lz4 | inherited from nvme |
| nvme/testvol64k | readonly | off | default |
| nvme/testvol64k | createtxg | 42056 | - |
| nvme/testvol64k | copies | 1 | default |
| nvme/testvol64k | refreservation | none | default |
| nvme/testvol64k | guid | 9876788460306791810 | - |
| nvme/testvol64k | primarycache | (varies, see below) | |
| nvme/testvol64k | secondarycache | all | default |
| nvme/testvol64k | usedbysnapshots | 0B | - |
| nvme/testvol64k | usedbydataset | 4,90M | - |
| nvme/testvol64k | usedbychildren | 0B | - |
| nvme/testvol64k | usedbyrefreservation | 0B | - |
| nvme/testvol64k | logbias | latency | default |
| nvme/testvol64k | objsetid | 174 | - |
| nvme/testvol64k | dedup | off | default |
| nvme/testvol64k | mlslabel | none | default |
| nvme/testvol64k | sync | standard | default |
| nvme/testvol64k | refcompressratio | 4.81x | - |
| nvme/testvol64k | written | 4,90M | - |
| nvme/testvol64k | logicalused | 23,3M | - |
| nvme/testvol64k | logicalreferenced | 23,3M | - |
| nvme/testvol64k | volmode | default | default |
| nvme/testvol64k | snapshot_limit | none | default |
| nvme/testvol64k | snapshot_count | none | default |
| nvme/testvol64k | snapdev | hidden | default |
| nvme/testvol64k | context | none | default |
| nvme/testvol64k | fscontext | none | default |
| nvme/testvol64k | defcontext | none | default |
| nvme/testvol64k | rootcontext | none | default |
| nvme/testvol64k | redundant_metadata | all | default |
| nvme/testvol64k | encryption | off | default |
| nvme/testvol64k | keylocation | none | default |
| nvme/testvol64k | keyformat | none | default |
| nvme/testvol64k | pbkdf2iters | 0 | default |

Test results (primarycache=all)

[Read]

SEQ 1MiB (Q= 8, T= 1): 8780.844 MB/s [ 8374.1 IOPS] < 945.28 us>

SEQ 128KiB (Q= 32, T= 1): 5294.938 MB/s [ 40397.2 IOPS] < 787.53 us>

RND 4KiB (Q= 32, T=16): 217.263 MB/s [ 53042.7 IOPS] < 4945.57 us>

RND 4KiB (Q= 1, T= 1): 47.461 MB/s [ 11587.2 IOPS] < 85.98 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 3399.262 MB/s [ 3241.8 IOPS] < 2431.31 us>

SEQ 128KiB (Q= 32, T= 1): 2871.813 MB/s [ 21910.2 IOPS] < 1455.00 us>

RND 4KiB (Q= 32, T=16): 162.814 MB/s [ 39749.5 IOPS] < 10739.13 us>

RND 4KiB (Q= 1, T= 1): 41.492 MB/s [ 10129.9 IOPS] < 98.27 us>

...

OS: Windows 10 Professional N [10.0 Build 19043] (x64)

Comment: vioscsi zvol64k 4k

Test results (primarycache=metadata)

[Read]

SEQ 1MiB (Q= 8, T= 1): 4657.319 MB/s [ 4441.6 IOPS] < 1793.65 us>

SEQ 128KiB (Q= 32, T= 1): 3700.376 MB/s [ 28231.6 IOPS] < 1131.19 us>

RND 4KiB (Q= 32, T=16): 178.914 MB/s [ 43680.2 IOPS] < 8398.90 us>

RND 4KiB (Q= 1, T= 1): 15.363 MB/s [ 3750.7 IOPS] < 265.91 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 2981.145 MB/s [ 2843.0 IOPS] < 2783.38 us>

SEQ 128KiB (Q= 32, T= 1): 2202.196 MB/s [ 16801.4 IOPS] < 1895.05 us>

RND 4KiB (Q= 32, T=16): 131.413 MB/s [ 32083.3 IOPS] < 13802.99 us>

RND 4KiB (Q= 1, T= 1): 14.597 MB/s [ 3563.7 IOPS] < 279.71 us>

...

OS: Windows 10 Professional N [10.0 Build 19043] (x64)

Comment: vioscsi zvol64k 4k
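For anyone who wants to compare these reports programmatically, here is a minimal sketch of a parser for the CrystalDiskMark text lines shown above. The regular expression and the `parse_cdm_line` helper are my own illustration, written against the line format as it appears in this post; other CrystalDiskMark versions or presets may format lines differently.

```python
import re

# Matches one result line, e.g.
# "SEQ 1MiB (Q= 8, T= 1): 8780.844 MB/s [ 8374.1 IOPS] < 945.28 us>"
CDM_LINE = re.compile(
    r"(?P<pattern>SEQ|RND)\s+(?P<size>\d+)(?P<unit>KiB|MiB)\s+"
    r"\(Q=\s*(?P<q>\d+),\s*T=\s*(?P<t>\d+)\):\s+"
    r"(?P<mbps>[\d.]+)\s+MB/s\s+"
    r"\[\s*(?P<iops>[\d.]+)\s+IOPS\]\s+"
    r"<\s*(?P<lat_us>[\d.]+)\s+us>"
)

UNIT_BYTES = {"KiB": 1024, "MiB": 1024 ** 2}


def parse_cdm_line(line):
    """Return a dict for a result line, or None for any other line."""
    m = CDM_LINE.search(line)
    if m is None:
        return None
    block_bytes = int(m["size"]) * UNIT_BYTES[m["unit"]]
    mbps = float(m["mbps"])
    return {
        "pattern": m["pattern"],
        "block_bytes": block_bytes,
        "queue_depth": int(m["q"]),
        "threads": int(m["t"]),
        "mbps": mbps,
        "iops": float(m["iops"]),
        "latency_us": float(m["lat_us"]),
        # Throughput is in decimal MB (10**6 bytes), block size is binary,
        # so this should land very close to the reported IOPS.
        "implied_iops": mbps * 1e6 / block_bytes,
    }


row = parse_cdm_line("RND 4KiB (Q= 1, T= 1): 47.461 MB/s [ 11587.2 IOPS] < 85.98 us>")
print(row["iops"], round(row["implied_iops"], 1))
```

The `implied_iops` field is just a consistency check: the MB/s and IOPS columns describe the same measurement, so either can be used for comparisons.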

ZVOL Comments

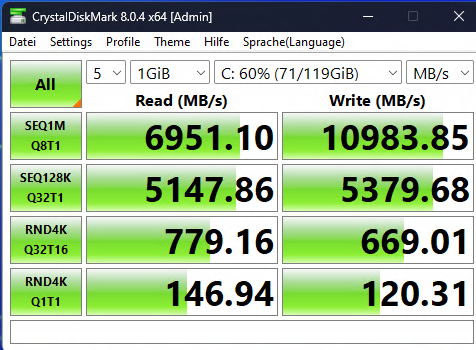

Here performance is around what I’d expect for zvols. I used a 64k volblocksize in these tests, but the default 16k did not make much difference. (I generally tried lots of other options that I don’t show here).
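For context on why block size is usually expected to matter for small IO: ZFS reads and checksums whole blocks, so an uncached 4 KiB read from a 64 KiB block has to fetch the full block. The sketch below computes that worst-case amplification. It assumes no ARC hits and no prefetch, and it ignores compression; the function names are made up for illustration.

```python
def blocks_touched(offset: int, length: int, block_size: int) -> int:
    """Number of fixed-size blocks covered by an IO at [offset, offset+length)."""
    first = offset // block_size
    last = (offset + length - 1) // block_size
    return last - first + 1


def amplification(offset: int, length: int, block_size: int) -> float:
    """Bytes that must be read from the pool per byte the guest asked for."""
    return blocks_touched(offset, length, block_size) * block_size / length


KIB = 1024
for block in (16 * KIB, 64 * KIB):
    for io in (4 * KIB, 128 * KIB, 1024 * KIB):
        factor = amplification(0, io, block)
        print(f"block={block // KIB}K io={io // KIB}K amplification={factor:.1f}x")
```

Under these assumptions a 4 KiB read costs 16x on 64K blocks and 4x on 16K blocks, while block-aligned sequential IO is unaffected. That the measured difference between the two block sizes was small suggests the real workload is not dominated by this worst case.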

QCOW2 Tests

ZFS properties

| NAME | PROPERTY | VALUE | SOURCE |
|---|---|---|---|
| nvme/testbed64k | type | filesystem | - |
| nvme/testbed64k | creation | tis dec 14 20:35 2021 | - |
| nvme/testbed64k | used | 8,79M | - |
| nvme/testbed64k | available | 1,74T | - |
| nvme/testbed64k | referenced | 8,79M | - |
| nvme/testbed64k | compressratio | 4.07x | - |
| nvme/testbed64k | mounted | yes | - |
| nvme/testbed64k | quota | none | default |
| nvme/testbed64k | reservation | none | default |
| nvme/testbed64k | recordsize | 64K | local |
| nvme/testbed64k | mountpoint | /nvme/testbed64k | default |
| nvme/testbed64k | sharenfs | off | default |
| nvme/testbed64k | checksum | on | default |
| nvme/testbed64k | compression | lz4 | inherited from nvme |
| nvme/testbed64k | atime | off | inherited from nvme |
| nvme/testbed64k | devices | on | default |
| nvme/testbed64k | exec | on | default |
| nvme/testbed64k | setuid | on | default |
| nvme/testbed64k | readonly | off | default |
| nvme/testbed64k | zoned | off | default |
| nvme/testbed64k | snapdir | hidden | default |
| nvme/testbed64k | aclinherit | restricted | default |
| nvme/testbed64k | createtxg | 39146 | - |
| nvme/testbed64k | canmount | on | default |
| nvme/testbed64k | xattr | sa | inherited from nvme |
| nvme/testbed64k | copies | 1 | default |
| nvme/testbed64k | version | 5 | - |
| nvme/testbed64k | utf8only | off | - |
| nvme/testbed64k | normalization | none | - |
| nvme/testbed64k | casesensitivity | sensitive | - |
| nvme/testbed64k | vscan | off | default |
| nvme/testbed64k | nbmand | off | default |
| nvme/testbed64k | sharesmb | off | default |
| nvme/testbed64k | refquota | none | default |
| nvme/testbed64k | refreservation | none | default |
| nvme/testbed64k | guid | 16607139284652682178 | - |
| nvme/testbed64k | primarycache | (varies, see below) | |
| nvme/testbed64k | secondarycache | all | default |
| nvme/testbed64k | usedbysnapshots | 0B | - |
| nvme/testbed64k | usedbydataset | 8,79M | - |
| nvme/testbed64k | usedbychildren | 0B | - |
| nvme/testbed64k | usedbyrefreservation | 0B | - |
| nvme/testbed64k | logbias | latency | default |
| nvme/testbed64k | objsetid | 168 | - |
| nvme/testbed64k | dedup | off | default |
| nvme/testbed64k | mlslabel | none | default |
| nvme/testbed64k | sync | standard | default |
| nvme/testbed64k | dnodesize | legacy | default |
| nvme/testbed64k | refcompressratio | 4.07x | - |
| nvme/testbed64k | written | 8,79M | - |
| nvme/testbed64k | logicalused | 35,3M | - |
| nvme/testbed64k | logicalreferenced | 35,3M | - |
| nvme/testbed64k | volmode | default | default |
| nvme/testbed64k | filesystem_limit | none | default |
| nvme/testbed64k | snapshot_limit | none | default |
| nvme/testbed64k | filesystem_count | none | default |
| nvme/testbed64k | snapshot_count | none | default |
| nvme/testbed64k | snapdev | hidden | default |
| nvme/testbed64k | acltype | off | default |
| nvme/testbed64k | context | none | default |
| nvme/testbed64k | fscontext | none | default |
| nvme/testbed64k | defcontext | none | default |
| nvme/testbed64k | rootcontext | none | default |
| nvme/testbed64k | relatime | off | default |
| nvme/testbed64k | redundant_metadata | all | default |
| nvme/testbed64k | overlay | off | default |
| nvme/testbed64k | encryption | off | default |
| nvme/testbed64k | keylocation | none | default |
| nvme/testbed64k | keyformat | none | default |
| nvme/testbed64k | pbkdf2iters | 0 | default |
| nvme/testbed64k | special_small_blocks | 0 | default |

Test results (primarycache=all)

[Read]

SEQ 1MiB (Q= 8, T= 1): 2692.726 MB/s [ 2568.0 IOPS] < 3099.41 us>

SEQ 128KiB (Q= 32, T= 1): 2545.741 MB/s [ 19422.5 IOPS] < 1643.67 us>

RND 4KiB (Q= 32, T=16): 165.651 MB/s [ 40442.1 IOPS] < 10757.95 us>

RND 4KiB (Q= 1, T= 1): 77.860 MB/s [ 19008.8 IOPS] < 52.32 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 1507.677 MB/s [ 1437.8 IOPS] < 5528.01 us>

SEQ 128KiB (Q= 32, T= 1): 1269.009 MB/s [ 9681.8 IOPS] < 3295.35 us>

RND 4KiB (Q= 32, T=16): 117.596 MB/s [ 28710.0 IOPS] < 16458.89 us>

RND 4KiB (Q= 1, T= 1): 59.874 MB/s [ 14617.7 IOPS] < 68.01 us>

...

OS: Windows 10 Professional N [10.0 Build 19043] (x64)

Comment: vioscsi fs64k qcow2 4k

Test results (primarycache=metadata)

[Read]

SEQ 1MiB (Q= 8, T= 1): 513.418 MB/s [ 489.6 IOPS] < 16144.88 us>

SEQ 128KiB (Q= 32, T= 1): 377.859 MB/s [ 2882.8 IOPS] < 11042.59 us>

RND 4KiB (Q= 32, T=16): 21.714 MB/s [ 5301.3 IOPS] < 93358.63 us>

RND 4KiB (Q= 1, T= 1): 16.399 MB/s [ 4003.7 IOPS] < 249.20 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 466.400 MB/s [ 444.8 IOPS] < 17852.84 us>

SEQ 128KiB (Q= 32, T= 1): 326.851 MB/s [ 2493.7 IOPS] < 12753.79 us>

RND 4KiB (Q= 32, T=16): 25.728 MB/s [ 6281.2 IOPS] < 79446.86 us>

RND 4KiB (Q= 1, T= 1): 15.033 MB/s [ 3670.2 IOPS] < 271.44 us>

...

OS: Windows 10 Professional N [10.0 Build 19043] (x64)

Comment: vioscsi fs64k qcow2 4k

QCOW2 Comments

Here is where it gets much slower than expected, except for Q1T1 4k random IO. In the primarycache=all results above, QCOW2-backed volumes reach less than 50% of ZVOL throughput for sequential IO, roughly 70-75% for high queue-depth 4k random IO, and about 150% for low queue-depth 4k random IO.
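To make the comparison concrete, this sketch recomputes QCOW2 throughput as a fraction of ZVOL throughput from the primarycache=all MB/s figures reported above. The dictionary layout and the `relative_throughput` helper are illustrative choices; only the numbers come from the test results.

```python
# MB/s from the primarycache=all runs above, keyed by (direction, test).
ZVOL = {
    ("read", "SEQ 1MiB Q8T1"): 8780.844,
    ("read", "SEQ 128KiB Q32T1"): 5294.938,
    ("read", "RND 4KiB Q32T16"): 217.263,
    ("read", "RND 4KiB Q1T1"): 47.461,
    ("write", "SEQ 1MiB Q8T1"): 3399.262,
    ("write", "SEQ 128KiB Q32T1"): 2871.813,
    ("write", "RND 4KiB Q32T16"): 162.814,
    ("write", "RND 4KiB Q1T1"): 41.492,
}
QCOW2 = {
    ("read", "SEQ 1MiB Q8T1"): 2692.726,
    ("read", "SEQ 128KiB Q32T1"): 2545.741,
    ("read", "RND 4KiB Q32T16"): 165.651,
    ("read", "RND 4KiB Q1T1"): 77.860,
    ("write", "SEQ 1MiB Q8T1"): 1507.677,
    ("write", "SEQ 128KiB Q32T1"): 1269.009,
    ("write", "RND 4KiB Q32T16"): 117.596,
    ("write", "RND 4KiB Q1T1"): 59.874,
}


def relative_throughput(candidate, baseline):
    """Candidate MB/s divided by baseline MB/s for every shared test."""
    return {key: candidate[key] / baseline[key] for key in baseline}


for (direction, test), ratio in relative_throughput(QCOW2, ZVOL).items():
    print(f"{direction:5s} {test:18s} QCOW2 = {ratio:6.1%} of ZVOL")
```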

The performance hit from primarycache=metadata (vs. all) is much larger on QCOW2 than on the ZVOL. This suggests to me that qemu’s IO subsystem really struggles unless it can pool operations together and access larger chunks of data. I used a recordsize of 64k (rather than the default 128k) in order to align with the 64k QCOW2 cluster size.
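The size of that cache penalty can be read straight off the numbers. The sketch below computes, for each backend, how much of the primarycache=all throughput survives when switching to primarycache=metadata. The tuple layout and the `retained` helper are illustrative; the MB/s values are the ones reported above.

```python
# (test, MB/s with primarycache=all, MB/s with primarycache=metadata)
ZVOL = [
    ("read  SEQ 1MiB Q8T1", 8780.844, 4657.319),
    ("read  SEQ 128KiB Q32T1", 5294.938, 3700.376),
    ("read  RND 4KiB Q32T16", 217.263, 178.914),
    ("read  RND 4KiB Q1T1", 47.461, 15.363),
    ("write SEQ 1MiB Q8T1", 3399.262, 2981.145),
    ("write SEQ 128KiB Q32T1", 2871.813, 2202.196),
    ("write RND 4KiB Q32T16", 162.814, 131.413),
    ("write RND 4KiB Q1T1", 41.492, 14.597),
]
QCOW2 = [
    ("read  SEQ 1MiB Q8T1", 2692.726, 513.418),
    ("read  SEQ 128KiB Q32T1", 2545.741, 377.859),
    ("read  RND 4KiB Q32T16", 165.651, 21.714),
    ("read  RND 4KiB Q1T1", 77.860, 16.399),
    ("write SEQ 1MiB Q8T1", 1507.677, 466.400),
    ("write SEQ 128KiB Q32T1", 1269.009, 326.851),
    ("write RND 4KiB Q32T16", 117.596, 25.728),
    ("write RND 4KiB Q1T1", 59.874, 15.033),
]


def retained(rows):
    """Fraction of primarycache=all throughput left with primarycache=metadata."""
    return {test: meta / full for test, full, meta in rows}


zvol_kept, qcow2_kept = retained(ZVOL), retained(QCOW2)
for test in zvol_kept:
    print(f"{test:24s} ZVOL keeps {zvol_kept[test]:5.1%}, QCOW2 keeps {qcow2_kept[test]:5.1%}")
```

In every one of the eight tests the QCOW2 run retains a smaller share of its cached throughput than the ZVOL run does.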

Other observations

CPU Utilization

I did not fully monitor CPU utilization during every test. However, my impression was that the CPU had to work harder with the ZVOL than with QCOW2, which argues against the CPU being the bottleneck.

Adjustments I tried, that did not change the pattern

compression=off (vs. lz4)

recordsize=8k,128k (vs. 64k)

volblocksize=8k,128k (vs. 64k)

NTFS cluster size = 8k,64k (vs. 4k)

virtio disk model (vs virtio-scsi)

All disks performed as expected on their own

QCOW2 on Ext4 performs well

I’m pretty sure I tried mounting the QCOW2 file on the host and testing it with fio, and that it performed well, but I can’t find the results right now

Last but not least, RAW files gave the same result as QCOW2 as far as I investigated

Adjustments not tried

ashift = 13 (vs. 12)

Linux guest (vs. Windows 10)

Changing libvirt options except device model (IO mode, cache mode, …)

raidz (vs. striped mirrors)

The question

So what’s going on here? The performance loss for file-backed IO is surprisingly large, especially compared with Jim Salter’s results from 2018. The fact that RAW files give similarly bad results suggests to me that it could be an issue with how libvirt interacts with ZFS.

Thanks for reading this far! I’d appreciate any input on whether my expectations are reasonable and, if so, what I could try next.