OK, I tested btrfs as a control condition. I hope I did the device creation right!

Preparations and setup

```
root@(redacted):/dev/disk/by-id# mkfs.btrfs -d raid10 -m raid10 -f nvme-WDS100T1X0E-00AFY0_(redacted) nvme-WDS100T1X0E-00AFY0_(redacted) nvme-WDS100T1X0E-00AFY0_(redacted) nvme-WDS100T1X0E-00AFY0_(redacted)
btrfs-progs v5.16.2
See http://btrfs.wiki.kernel.org for more information.

Performing full device TRIM nvme-WDS100T1X0E-00AFY0_(redacted) (931.51GiB) ...
NOTE: several default settings have changed in version 5.15, please make sure
      this does not affect your deployments:
      - DUP for metadata (-m dup)
      - enabled no-holes (-O no-holes)
      - enabled free-space-tree (-R free-space-tree)

Performing full device TRIM nvme-WDS100T1X0E-00AFY0_(redacted) (931.51GiB) ...
Performing full device TRIM nvme-WDS100T1X0E-00AFY0_(redacted) (931.51GiB) ...
Performing full device TRIM nvme-WDS100T1X0E-00AFY0_(redacted) (931.51GiB) ...
Label: (null)
UUID: 0c784342-7400-4868-9ea1-cc006c36190f
Node size: 16384
Sector size: 4096
Filesystem size: 3.64TiB
Block group profiles:
  Data: RAID10 2.00GiB
  Metadata: RAID10 512.00MiB
  System: RAID10 16.00MiB
SSD detected: yes
Zoned device: no
Incompat features: extref, skinny-metadata, no-holes
Runtime features: free-space-tree
Checksum: crc32c
Number of devices: 4
Devices:
  ID SIZE PATH
  1 931.51GiB nvme-WDS100T1X0E-00AFY0_(redacted)
  2 931.51GiB nvme-WDS100T1X0E-00AFY0_(redacted)
  3 931.51GiB nvme-WDS100T1X0E-00AFY0_(redacted)
  4 931.51GiB nvme-WDS100T1X0E-00AFY0_(redacted)
```

```
root@(redacted):/mnt# mount /dev/disk/by-uuid/0c784342-7400-4868-9ea1-cc006c36190f /mnt
root@(redacted):/mnt# dd if=/dev/zero of=non-sparse.img bs=1M count=4096
root@(redacted):/mnt# qemu-img create -f qcow2 -o preallocation=metadata,lazy_refcounts=on testdisk.qcow2 10G
Formatting 'testdisk.qcow2', fmt=qcow2 cluster_size=65536 extended_l2=off preallocation=metadata compression_type=zlib size=10737418240 lazy_refcounts=on refcount_bits=16
root@(redacted):/mnt# qemu-img create -f qcow2 -o preallocation=metadata,lazy_refcounts=on,extended_l2=on,cluster_size=128k testdisk_el2.qcow2 10G
Formatting 'testdisk_el2.qcow2', fmt=qcow2 cluster_size=131072 extended_l2=on preallocation=metadata compression_type=zlib size=10737418240 lazy_refcounts=on refcount_bits=16
root@(redacted):/mnt# ls -lsh
total 4.1G
4.0G -rw-r--r-- 1 libvirt-qemu kvm 4.0G Oct 4 21:44 non-sparse.img
1.8M -rw-r--r-- 1 libvirt-qemu kvm 11G Oct 4 21:44 testdisk_el2.qcow2
1.8M -rw-r--r-- 1 libvirt-qemu kvm 11G Oct 4 21:44 testdisk.qcow2
```
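In the `ls -lsh` listing, the leading number on each line is the space actually allocated on disk, and the size after `libvirt-qemu kvm` is the apparent size. That is why the metadata-preallocated qcow2 files show 1.8M against 11G while the dd image is fully allocated. The small sketch below reproduces that distinction with one written file and one sparse file. It assumes GNU coreutils (`stat -c`, `truncate`, `dd status=none`) and uses a throwaway directory from `mktemp -d`. The file names are hypothetical, and the sketch is only an illustration, not part of the benchmark setup.

```shell
#!/bin/sh
# Sketch: compare a file's apparent size (ls -l) with its allocated size (ls -s).
# Assumes GNU coreutils: dd status=none, truncate, stat -c.
set -eu

dir=$(mktemp -d)
trap 'rm -rf "$dir"' EXIT

# Written file: dd really writes 4 MiB of zeros, so data blocks get allocated
# (unless the filesystem compresses or eliminates zero blocks).
dd if=/dev/zero of="$dir/full.img" bs=1M count=4 status=none

# Sparse file: only the apparent size is set; no data blocks are written.
truncate -s 4M "$dir/sparse.img"

# Print "<name> apparent=<bytes> allocated=<bytes>" for one file.
# %s = apparent size, %b = allocated blocks, %B = bytes per block counted by %b.
report() {
  # The stat output is word-split on purpose into $2, $3 and $4.
  set -- "$1" $(stat -c '%s %b %B' "$1")
  echo "$(basename "$1") apparent=$2 allocated=$(($3 * $4))"
}

report "$dir/full.img"
report "$dir/sparse.img"
```

On a filesystem that drops or compresses zero blocks (for example zfs with compression enabled), the dd-written file may report much less allocated space than its apparent size.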

Is this a correct setup for a raid10 volume? The reported “Filesystem size: 3.64TiB” is not what I expected for four 1 TB drives in raid10. Either way, the raid topology should not affect the issue we are investigating!
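The 3.64TiB figure appears to be the raw capacity summed across all four devices rather than the usable space. Meanwhile, the “Block group profiles” lines in the mkfs output do report RAID10 for data, metadata and system. Assuming RAID10 keeps two copies of every block, the arithmetic works out as follows:

$$
\begin{aligned}
4 \times 931.51\ \text{GiB} &= 3726.04\ \text{GiB} \approx 3.64\ \text{TiB} \quad \text{(raw total)} \\
\tfrac{1}{2} \times 3.64\ \text{TiB} &\approx 1.82\ \text{TiB} \quad \text{(usable with two copies)}
\end{aligned}
$$

A nominal 1 TB drive holds about $10^{12}$ bytes, roughly 931.5 GiB, which is why each device shows up as 931.51GiB. After mounting, `btrfs filesystem usage /mnt` should report the data ratio and an estimated free space that account for the RAID10 profile.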

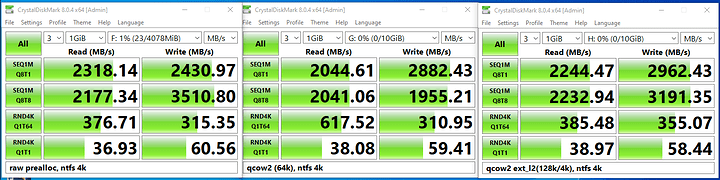

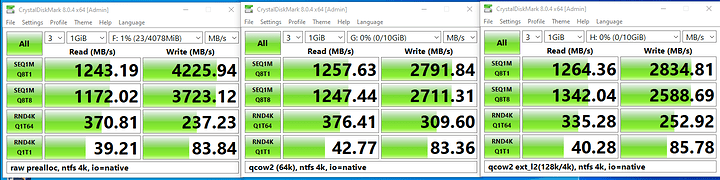

I tried three types of backing files: a preallocated raw image (written with dd), qcow2 with 64k clusters, and qcow2 with 128k clusters and 4k subclusters (extended_l2=on).
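For reference, with extended_l2=on qcow2 splits each cluster into 32 subclusters, which is where the 4k figure comes from. Without extended L2 entries, the allocation unit is the whole cluster:

$$
\text{extended\_l2=off: } 64\ \text{KiB per cluster} \qquad
\text{extended\_l2=on: } \frac{128\ \text{KiB}}{32\ \text{subclusters}} = 4\ \text{KiB per subcluster}
$$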

Results

Interestingly, read speeds are lower with io=native, but write speeds are generally the same or faster. However, the baseline speed (with io=threads) at 1M block size is generally lower than on zfs. Looking only at 1M reads, the same problem seems to appear with btrfs, but the other tests do not show it. I don’t know what to conclude; it is harder to judge when the baseline speeds are so much lower. (Why? Did I in fact fail to create a raid10-style volume?)

For 4k it’s the opposite: btrfs seems faster than zfs. The 4k advantage for btrfs could be because my btrfs ended up with a 4k “Sector size” by default, whereas my zfs setups always had a recordsize of at least 64k (I’d rather not go lower, as that would hurt compression). There are surely options to play around with here.
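A rough back-of-the-envelope estimate for that hypothesis follows. It assumes that a 4k random write into an existing zfs record forces the whole record to be rewritten (copy-on-write at record granularity), while btrfs can copy-on-write roughly just the 4k sector that changed. It ignores compression, caching and metadata:

$$
\text{zfs: } \frac{64\ \text{KiB record}}{4\ \text{KiB write}} = 16\times \qquad
\text{btrfs: } \frac{4\ \text{KiB sector}}{4\ \text{KiB write}} = 1\times
$$

This is an upper-bound style estimate, not a measurement. The real overhead depends on whether the record is cached, the compression ratio, and how the guest's writes line up with records and qcow2 clusters.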