Build “day 1”: literally 8 hours of work (with short breaks).

Basically, “day 1” is why I decided to post about this build.

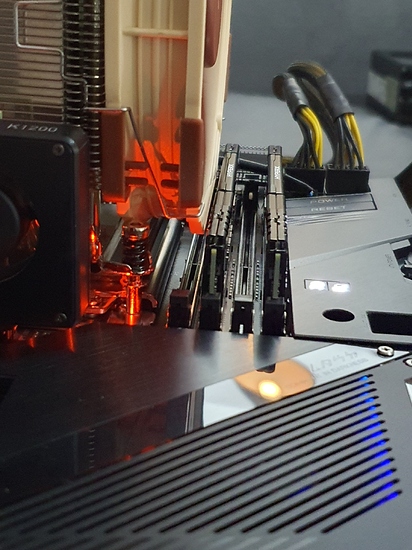

In the beginning everything looked normal: you put the CPU into the socket, install the RAM modules and connect the PSU cables, all on the table. The board has a built-in power button. It also has a dual BIOS with two switches: one to control automatic BIOS switching and one to select the BIOS manually.

Most importantly, it is a new motherboard built just for a new CPU line that has only recently expanded to three CPUs in total.

Well, 4 hours later, still no POST.

Fun fact #3: If the motherboard has a POST-code display, do not test it while looking at it upside down: you will most likely mistake code b0 for 60.

Happily enough, I only lost an hour looking up what code 60 means (the manual does list it, but for most of those codes they might as well have printed the hash of the Git commit that introduced the code into the firmware). The internet helps, but for new hardware, not so much.

The most commonly known solution to everything is to flash a newer BIOS.

But before I finally got to flashing, I ran through the following trial-and-error attempts:

- just one new RAM module

- just one old RAM module from the AM4 200GE build I have

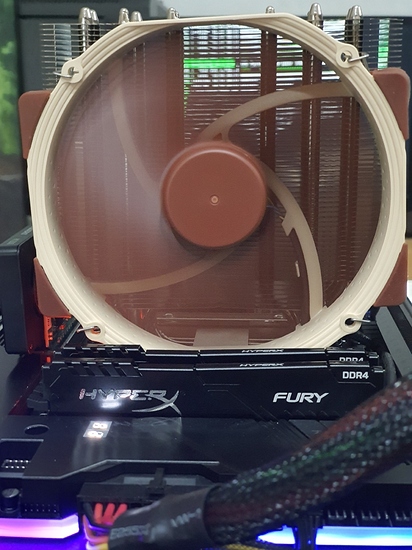

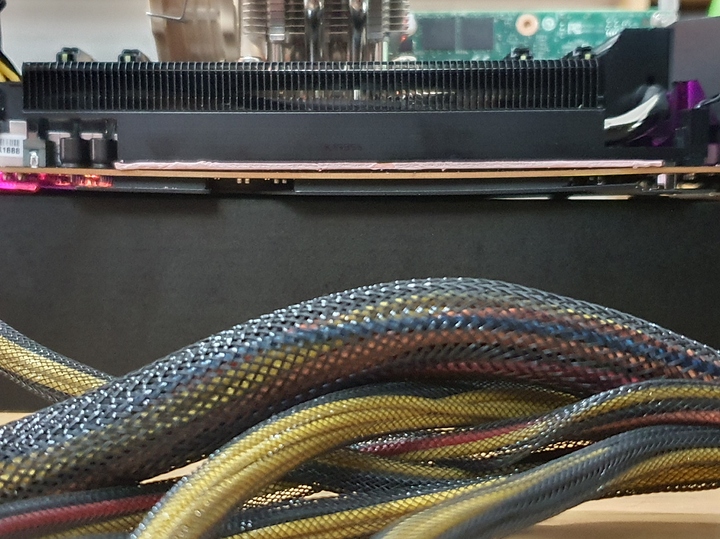

- three different GPUs (no video output from any of them): Quadro 1200K, some GTX 530, GTX Titan Xp

- just to see whether anything different would happen:

  - no RAM modules at all

  - no CPU

  - no GPU

- both the new and the old PSU

Fun fact #4: The Titan Xp is waiting for the PSU testers I ordered just today (yes, two separate testers). It worked (the GeForce logo lit up) for about 30 seconds, and then, with a very short but distinct sound, the logo went dark. It could have been the Titan on its own, as it is a post-AIO-cooling card (I reassembled it with the stock cooler after moving the AIO to the 1080 Ti). But I think it still worked for a few months after I put the stock cooling back, though I'm not sure.

Fun fact #5: When it powers on, the old PSU I took from my other computer emits a very faint smell of the oil/paste used inside it to preserve some components (that computer is not used much: collectively maybe a few weeks of runtime since it was built 3 months ago). I am simply stating what my heightened senses detected after the Titan's probable failure.

Build “day 1” finale (literally 4 hours).

How do you update the BIOS, in the year 2020, on a motherboard dedicated to only 2-3 CPUs that it does not work with anyway? OK, to be fair, there could have been many reasons why there was no POST and no sound from the speaker I had connected specially for this.

Fun fact #6: Probably all manuals and/or official websites contain copy-paste instructions like “here is the ‘flash BIOS’ button, it flashes the BIOS”.

Here are the facts omitted from either the official website or the manual for this board (Gigabyte TRX40 Aorus Xtreme). Sometimes the official site mentions something that the manual does not, or vice versa.

To use Q-Flash Plus on this board, you must:

- prepare a USB pen drive:

  - FAT32

  - USB 2.0. YES! I managed to find one USB 2.0 pen drive that I still have (I backed up its contents first, as it holds an ESXi installation I still use). And YES, the USB 3.0 pen drive I used to update the BIOS on AM1, X99 and AM4 platforms did not work. It might have been a fluke of that specific 3.0 pen drive, but only one of the two sources (manual or website) actually mentions that it needs to be a USB 2.0 drive. And YES, the LED on the 3.0 pen drive flashed not just once but several times, as if something was reading its directory tree, which consisted of a single file.

- and here is something I think every instruction omitted: Q-Flash Plus does not merely “maybe/possibly” work without a CPU and/or RAM (that was the phrasing of the more informative versions of the instructions); it **only works** when there is no CPU and no RAM installed. Sometimes the “only” is missing, sometimes RAM is not mentioned at all.

So now I have a TRX40 motherboard that POSTs. It correctly detects the CPU and 128 GB of RAM, and at least one GPU is working.

Tomorrow I will need to:

- put thermal paste on the CPU cooler (after the first application, expecting many re-seatings, I stopped using it)

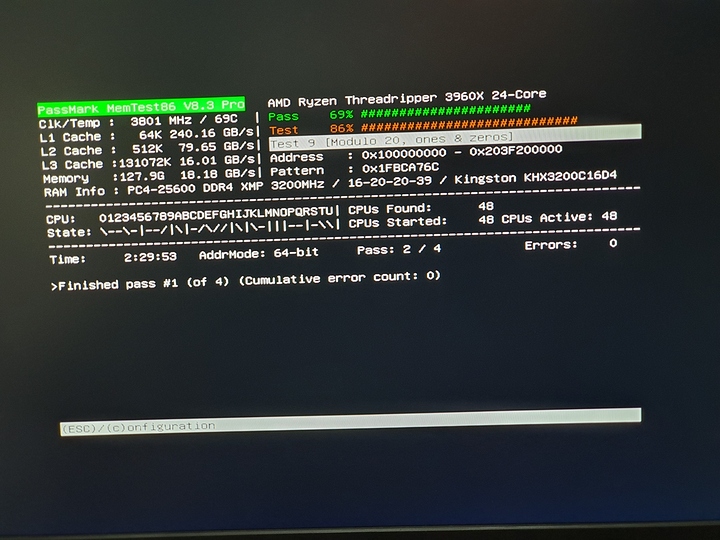

- run memory tests

Without the PSU testers, I'm not moving this build forward any further than that.

, connecting in the evening with bad light). Well, no worries: I generally use a 16-port USB hub connected to the back anyway.
