So folks, despite trying a hack-around, I'm back to square one.

If it makes me feel any better, I ended up exactly where @wendell left off: forcing routes to route correctly.

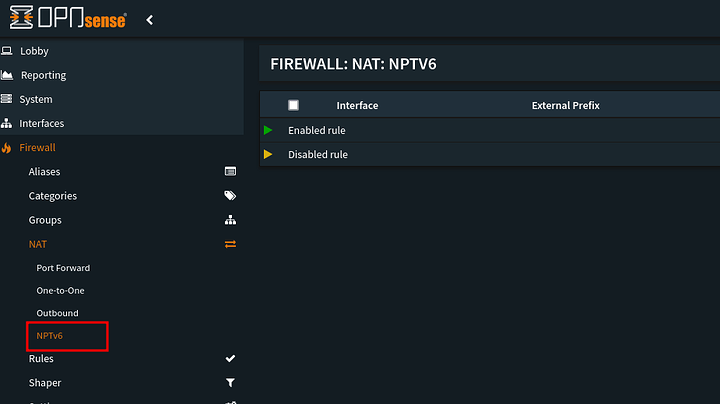

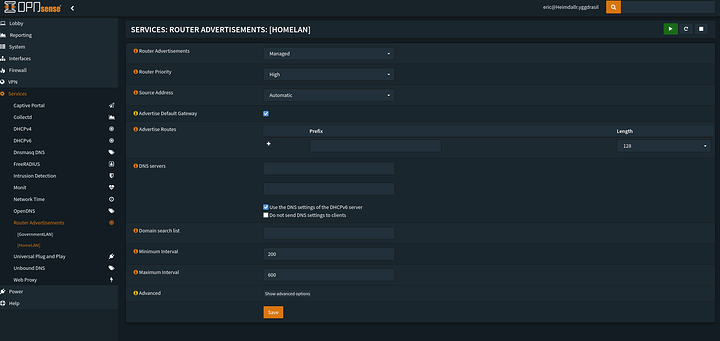

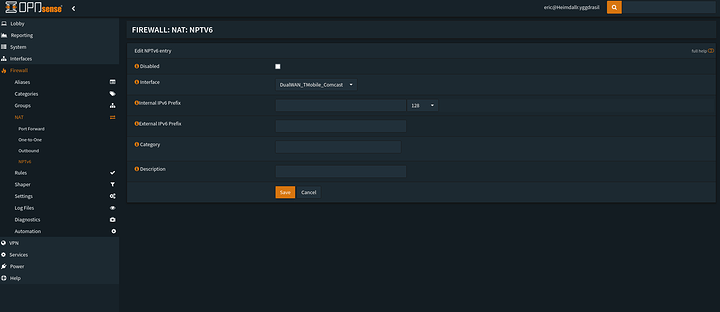

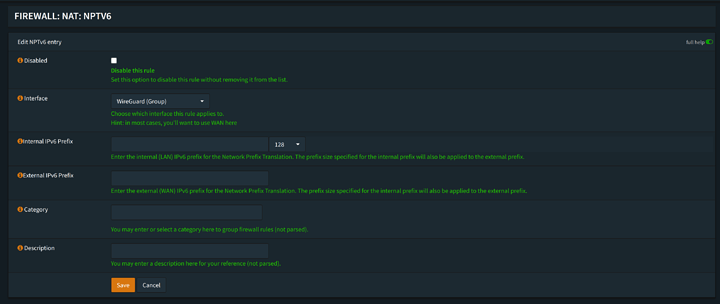

Now there is one option I have yet to try: using NPTv6 and Managed mode in my RA to advertise the routes to take.

I'm already in stateful DHCPv6 mode because of this:

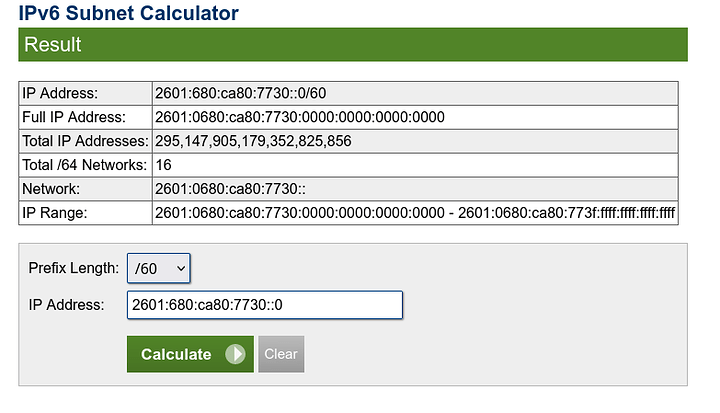

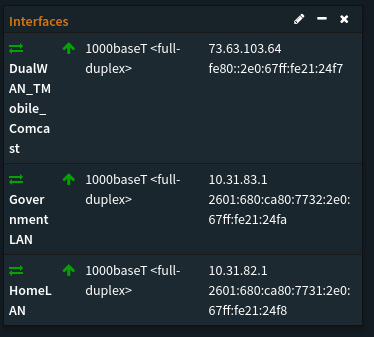

Comcast actually gives me 16 networks to play with at home, which is an absolutely absurd amount of addresses. Thanks for the two hundred ninety-five quintillion, one hundred forty-seven quadrillion, nine hundred five trillion, one hundred seventy-nine billion, three hundred fifty-two million, eight hundred twenty-five thousand, eight hundred fifty-six addresses.
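For anyone who wants to check that number, here is a quick sketch. It assumes the 16 networks are sixteen /64s, i.e. one /60 delegation, and uses the documentation prefix `2001:db8::/60` as a stand-in, since the actual delegated prefix isn't shown here.

```python
import ipaddress

# Assumption: 16 networks == sixteen /64 subnets == one /60 delegation.
# 2001:db8::/60 is a documentation placeholder, not the real prefix.
delegation = ipaddress.ip_network("2001:db8::/60")

# Split the delegation into its /64 LAN-sized subnets.
subnets = list(delegation.subnets(new_prefix=64))
total = delegation.num_addresses

print(len(subnets))   # 16
print(f"{total:,}")   # 295,147,905,179,352,825,856
```

That is 16 × 2^64 = 2^68 addresses.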

What both novasty and I tried, much to the complete and total breakage of our own networks:

Adding the following allowed IPs to dance around accidentally looping back around the LAN address of the gateway peer.

# 2601:680:ca80:7731::/71, 2601:680:ca80:7731:200::/73, 2601:680:ca80:7731:280::/74, 2601:680:ca80:7731:2c0::/75, 2601:680:ca80:7731:2e0::/82, 2601:680:ca80:7731:2e0:4000::/83, 2601:680:ca80:7731:2e0:6000::/86, 2601:680:ca80:7731:2e0:6400::/87, 2601:680:ca80:7731:2e0:6600::/88, 2601:680:ca80:7731:2e0:6700::/89, 2601:680:ca80:7731:2e0:6780::/90, 2601:680:ca80:7731:2e0:67c0::/91, 2601:680:ca80:7731:2e0:67e0::/92, 2601:680:ca80:7731:2e0:67f0::/93, 2601:680:ca80:7731:2e0:67f8::/94, 2601:680:ca80:7731:2e0:67fc::/95, 2601:680:ca80:7731:2e0:67fe::/96, 2601:680:ca80:7731:2e0:67ff::/97, 2601:680:ca80:7731:2e0:67ff:8000:0/98, 2601:680:ca80:7731:2e0:67ff:c000:0/99, 2601:680:ca80:7731:2e0:67ff:e000:0/100, 2601:680:ca80:7731:2e0:67ff:f000:0/101, 2601:680:ca80:7731:2e0:67ff:f800:0/102, 2601:680:ca80:7731:2e0:67ff:fc00:0/103, 2601:680:ca80:7731:2e0:67ff:fe00:0/107, 2601:680:ca80:7731:2e0:67ff:fe20:0/112, 2601:680:ca80:7731:2e0:67ff:fe21:0/115, 2601:680:ca80:7731:2e0:67ff:fe21:2000/118, 2601:680:ca80:7731:2e0:67ff:fe21:2400/121, 2601:680:ca80:7731:2e0:67ff:fe21:2480/122, 2601:680:ca80:7731:2e0:67ff:fe21:24c0/123, 2601:680:ca80:7731:2e0:67ff:fe21:24e0/124, 2601:680:ca80:7731:2e0:67ff:fe21:24f0/125, 2601:680:ca80:7731:2e0:67ff:fe21:24f9/128, 2601:680:ca80:7731:2e0:67ff:fe21:24fa/127, 2601:680:ca80:7731:2e0:67ff:fe21:24fc/126, 2601:680:ca80:7731:2e0:67ff:fe21:2500/120, 2601:680:ca80:7731:2e0:67ff:fe21:2600/119, 2601:680:ca80:7731:2e0:67ff:fe21:2800/117, 2601:680:ca80:7731:2e0:67ff:fe21:3000/116, 2601:680:ca80:7731:2e0:67ff:fe21:4000/114, 2601:680:ca80:7731:2e0:67ff:fe21:8000/113, 2601:680:ca80:7731:2e0:67ff:fe22:0/111, 2601:680:ca80:7731:2e0:67ff:fe24:0/110, 2601:680:ca80:7731:2e0:67ff:fe28:0/109, 2601:680:ca80:7731:2e0:67ff:fe30:0/108, 2601:680:ca80:7731:2e0:67ff:fe40:0/106, 2601:680:ca80:7731:2e0:67ff:fe80:0/105, 2601:680:ca80:7731:2e0:67ff:ff00:0/104, 2601:680:ca80:7731:2e0:6800::/85, 2601:680:ca80:7731:2e0:7000::/84, 2601:680:ca80:7731:2e0:8000::/81, 2601:680:ca80:7731:2e1::/80, 
2601:680:ca80:7731:2e2::/79, 2601:680:ca80:7731:2e4::/78, 2601:680:ca80:7731:2e8::/77, 2601:680:ca80:7731:2f0::/76, 2601:680:ca80:7731:300::/72, 2601:680:ca80:7731:400::/70, 2601:680:ca80:7731:800::/69, 2601:680:ca80:7731:1000::/68, 2601:680:ca80:7731:2000::/67, 2601:680:ca80:7731:4000::/66, 2601:680:ca80:7731:8000::/65
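A list like the one above doesn't have to be built by hand. A minimal sketch, assuming the goal is "the whole `2601:680:ca80:7731::/64` minus the single endpoint address" (the /64 boundary is my reading of the list, not something stated explicitly), using Python's standard `ipaddress` module:

```python
import ipaddress

# Assumption: the LAN is the /64 that the list above carves up.
lan = ipaddress.ip_network("2601:680:ca80:7731::/64")

# The one address to leave out: the gateway peer's endpoint, as a /128.
endpoint = ipaddress.ip_network("2601:680:ca80:7731:2e0:67ff:fe21:24f8/128")

# address_exclude() yields the networks that cover `lan` except `endpoint`:
# one network per prefix length from /65 through /128.
allowed = sorted(lan.address_exclude(endpoint))

print(len(allowed))
print(", ".join(str(net) for net in allowed))
```

Removing a single /128 from a /64 always leaves 64 networks, which is why the list is so long.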

IP settings were (to begin with):

AllowedIPs = 10.31.84.5/32, fd31:ea5a:3182::5/128, 10.31.82.0/24

Endpoint = [2601:680:ca80:7731:2e0:67ff:fe21:24f8]:51820

Status: Routable, but routing gets locked in strange loops. Reverted the settings due to failures.
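A small sanity check for this kind of setup, as a sketch only: it tests whether a peer's endpoint address falls inside any of its own AllowedIPs, which is the overlap the long exclusion list was trying to avoid. `covers_endpoint` is a hypothetical helper name, not part of WireGuard or any tool.

```python
import ipaddress

def covers_endpoint(allowed_ips: str, endpoint: str) -> bool:
    """Return True if any AllowedIPs entry contains the endpoint address."""
    addr = ipaddress.ip_address(endpoint)
    for entry in allowed_ips.split(","):
        net = ipaddress.ip_network(entry.strip(), strict=False)
        # Only compare networks and addresses of the same IP version.
        if net.version == addr.version and addr in net:
            return True
    return False

original = "10.31.84.5/32, fd31:ea5a:3182::5/128, 10.31.82.0/24"
endpoint = "2601:680:ca80:7731:2e0:67ff:fe21:24f8"

print(covers_endpoint(original, endpoint))                                # False
print(covers_endpoint(original + ", 2601:680:ca80:7731::/64", endpoint))  # True
```

The starting settings don't cover the endpoint; adding the whole /64 would, so any attempt to route the LAN prefix through the tunnel has to carve that one address out.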