My build is documented here:

- Asrock Rack ROMED6U-2L2T

- EPYC 7402P 24-Core Processor

- Noctua NH-U9 TR4-SP3 Air Cooler

- 128GB ECC unbranded DRAM (2x32GB, 4x16GB; the ROMED6U has 6 DIMM slots)

- Proxmox VE as main OS

- NVidia 3070TI passed through to my main Windows VM (for gaming)

- Sapphire GPRO e9260 8 GB passed through to my main OSX VM (for working)

- 2x Fresco Logic FL1100 USB 3.0 Host Controllers, passed through to either of my main VMs, connected using two of the ROMED6U Slimline connectors to external PCIe 8x slots

I have gone full tilt down the route of 2 PCs on 1 host: each VM has a dedicated GPU -> DisplayPort -> input on a 43" display (used to be a Philips BCMxx, now an LG)

The server is in my attic; video and USB are transmitted using HDBaseT extenders

I am also using 2 keyboards, 2 mice and 2 audio interfaces, so these are two fully separated machines

That was my setup until 3 months ago, when I finally gave up on OSX-Hackintosh and had my company buy me a Mac mini

I then removed the Sapphire GPU and the USB card, swapped in a 4x NVMe card, and shuffled my other stuff around to promote the Epyc machine to forbidden router duty as well, using VyOS

The reason for moving away from OSX on KVM had nothing to do with KVM and a lot to do with the poor efficiency of OSX running on an Epyc machine and a legacy GPU …

I am still using the Windows VM. To get decent performance out of it I had to shield the VM cores and pin the vCPUs to them, and I went the full monty and pinned the interrupts for the GPU and USB controller to the VM cores as well
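For anyone wondering what pinning interrupts looks like in practice, here is a minimal sketch in Python. It is not taken from my scripts: the core list and the IRQ number are made-up placeholders, and `affinity_mask` and `pin_irq` are hypothetical helper names. It only relies on the Linux `/proc/irq/<n>/smp_affinity` file, which takes a hex CPU bitmask written as comma-separated 32-bit groups.

```python
from pathlib import Path


def affinity_mask(cores):
    """Build the hex bitmask format used by /proc/irq/<n>/smp_affinity.

    Bit N set means CPU N may handle the interrupt. Masks wider than
    32 bits are written as comma-separated 32-bit groups, most
    significant group first.
    """
    bits = 0
    for core in cores:
        if core < 0:
            raise ValueError(f"invalid CPU number: {core}")
        bits |= 1 << core

    groups = []
    while True:
        groups.append(f"{bits & 0xFFFFFFFF:08x}")
        bits >>= 32
        if bits == 0:
            break
    return ",".join(reversed(groups))


def pin_irq(irq, cores, proc_root="/proc"):
    """Write the mask for `cores` to the affinity file of `irq` (needs root)."""
    target = Path(proc_root) / "irq" / str(irq) / "smp_affinity"
    target.write_text(affinity_mask(cores))


if __name__ == "__main__":
    # Placeholder values: cores assumed to be reserved for the VM.
    vm_cores = [4, 5, 6, 7, 28, 29, 30, 31]
    print(affinity_mask(vm_cores))  # f00000f0
    # pin_irq(123, vm_cores)  # 123 is a placeholder IRQ; run as root on the host
```

The IRQ numbers for a passed-through device depend on the machine, so they have to be looked up on the host (for example in `/proc/interrupts`) once the VM is running.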

I adapted an existing set of Proxmox helper scripts to implement pinning, interrupt masking and a custom governor, and made them available on GitHub:

I can start and stop my VMs from my Stream Deck, which is remotely attached to a Raspberry Pi. The Stream Deck also has commands to start/stop the server using IPMI commands, and I can do the same with custom keypresses on a 4x4 mini pad under my desk …
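As a rough idea of what sits behind those buttons, here is a sketch of the command lines involved. This is an illustration, not my actual Stream Deck config: the VM ID, BMC address, user name and password file are placeholders, and `vm_command` / `ipmi_power_command` are hypothetical helper names. It assumes `qm` is run on the Proxmox host (for example over SSH from the Pi) and that `ipmitool` talks to the board's BMC over the LAN.

```python
import subprocess

VM_ACTIONS = {"start", "shutdown", "stop"}
POWER_ACTIONS = {"on", "soft", "off", "status"}


def vm_command(vmid, action):
    """Return the Proxmox `qm` command line for a VM action."""
    if action not in VM_ACTIONS:
        raise ValueError(f"unsupported VM action: {action}")
    return ["qm", action, str(vmid)]


def ipmi_power_command(host, user, password_file, action):
    """Return the `ipmitool` command line for a chassis power action."""
    if action not in POWER_ACTIONS:
        raise ValueError(f"unsupported power action: {action}")
    return [
        "ipmitool", "-I", "lanplus",
        "-H", host,            # BMC address (placeholder)
        "-U", user,            # BMC user (placeholder)
        "-f", password_file,   # file holding the BMC password
        "chassis", "power", action,
    ]


def run(cmd):
    """Execute a command and raise if it exits with a non-zero status."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Only print the commands here; call run(...) to actually execute them.
    print(vm_command(100, "start"))
    print(ipmi_power_command("bmc.example.lan", "admin", "/root/.ipmipass", "soft"))
```

Building the argument list separately from running it makes it easy to bind one function to each button and to check what would be executed before wiring it up.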

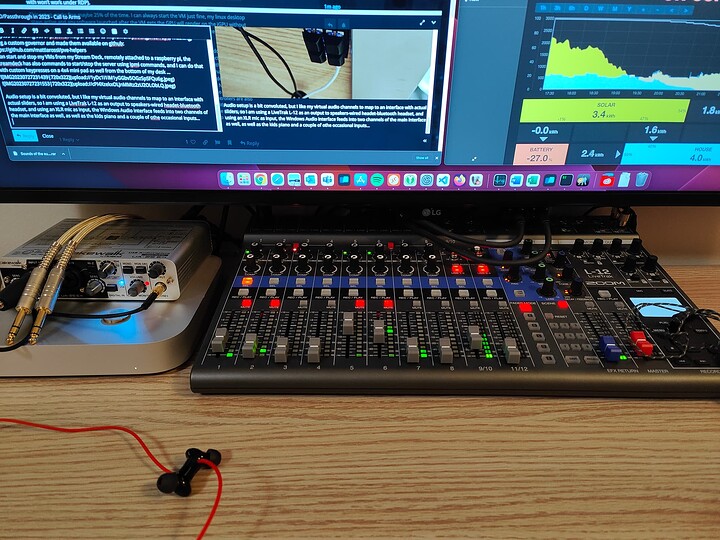

Audio setup is a bit convoluted, but I like my virtual audio channels to map to an interface with actual sliders. I am using a LiveTrak L-12 as the output to speakers, a wired headset and a Bluetooth headset, with an XLR mic as input. The Windows audio interface feeds into two channels of the main interface, as do the kids' piano and a couple of other occasional inputs…