
I am working on updated guides for VFIO in 2023 and I want to know what has and has not worked for you in your build.

The documentation out there on the internet has become a bit dated, and I am going to try to organize it and connect the dots. The reddit VFIO community is very active, but I am confident that what gets posted here has more permanence on the internet than reddit and the other VFIO communities that have sprung up.

The VFIO 2023 Project

The first thing is going to be a giveaway and build video, thanks to AMD and Corsair.

It turns out that Radeon 7000-series GPUs mostly DO support VFIO, but there may be some rough edges with certain motherboard/BIOS/AGESA combos and 7000-series GPUs (whereas 6000-series cards can work fine).

This is obscured a bit because you can get the card into a state it cannot be reset out of when Resizable BAR is off (there are two BAR windows, and it seems important that the two are kept in sync).
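If you want to check what the BAR windows actually look like after flipping Resizable BAR in firmware, the kernel exposes each PCI device's BARs in its sysfs `resource` file: one line per resource, three hex fields (start, end, flags), with all-zero lines for unused entries. Below is a minimal sketch that parses that text and reports each populated BAR's size. The device address in the usage note is a placeholder, and which two BARs are "the two windows" isn't specified above, so this just reports every populated BAR for you to compare.

```python
from pathlib import Path


def bar_sizes(resource_text: str, max_bars: int = 6) -> dict[int, int]:
    """Return {bar_index: size_in_bytes} for populated BARs in a sysfs `resource` file.

    Only the first `max_bars` lines are standard BARs 0-5; later lines
    (expansion ROM, bridge windows) are ignored here.
    """
    sizes = {}
    for index, line in enumerate(resource_text.splitlines()[:max_bars]):
        start, end, _flags = (int(field, 16) for field in line.split())
        # Unused BARs (and the upper half of a 64-bit BAR) are all zeros.
        if end != 0:
            sizes[index] = end - start + 1
    return sizes


def read_bar_sizes(bdf: str) -> dict[int, int]:
    """Read BAR sizes for a device like '0000:03:00.0' (placeholder address)."""
    return bar_sizes(Path(f"/sys/bus/pci/devices/{bdf}/resource").read_text())


if __name__ == "__main__":
    sample = (
        "0x000000c000000000 0x000000c7ffffffff 0x000000000014220c\n"
        "0x0000000000000000 0x0000000000000000 0x0000000000000000\n"
        "0x000000c800000000 0x000000c8001fffff 0x000000000014220c\n"
    )
    for bar, size in bar_sizes(sample).items():
        print(f"BAR{bar}: {size // (1024 * 1024)} MiB")
```

The sample text is made up for illustration; on a real host you would call `read_bar_sizes()` with your GPU's address (from `lspci -D`) before and after changing the firmware setting.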

I have been using my Threadripper Pro system FOR YEARS (with un-upgraded GPUs) and I still love it. I see a lot of enthusiasm here, and I'd like to get back to doing quick guides, like I used to, that really cut through.

VFIO Quality of Life - Looking Glass and more

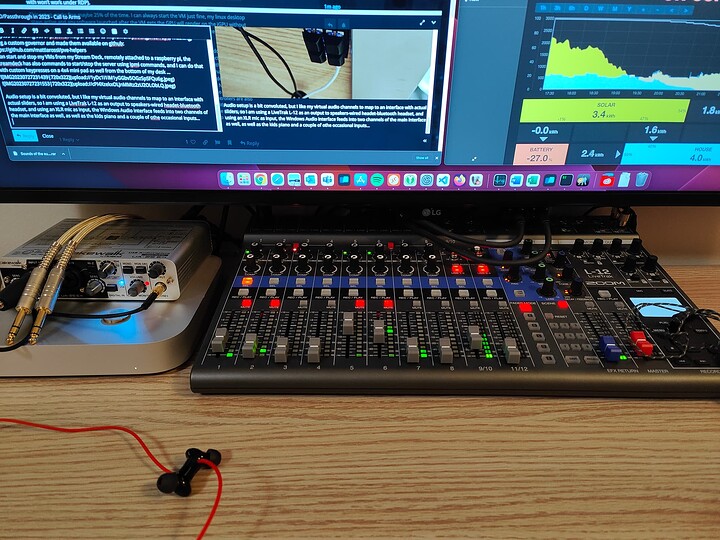

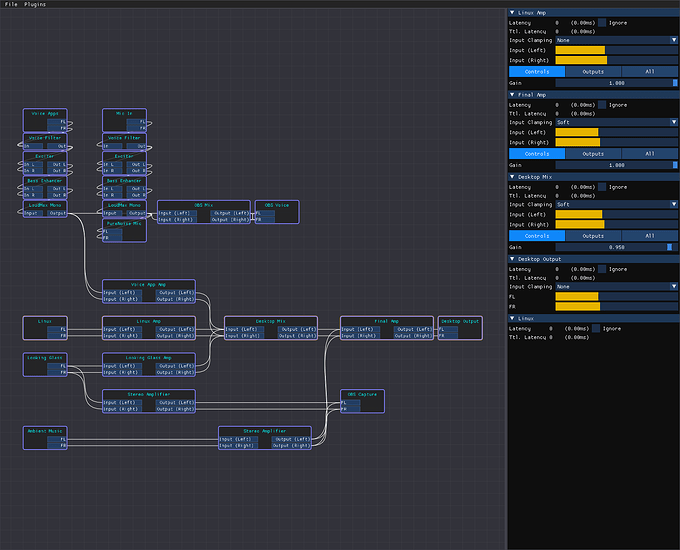

Secondarily, there is a whole software ecosystem to support this use case: PipeWire, some exciting new stuff from @gnif, and good old standbys like gnif's Looking Glass. These also need a whole suite of how-tos.
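Looking Glass passes frames through an IVSHMEM shared-memory region, and sizing that region is a common first stumbling block. The sketch below follows the sizing rule as I recall it from the Looking Glass documentation: width × height × 4 bytes per pixel × 2 frames, plus 10 MiB of overhead, rounded up to the next power of two. It assumes 32-bit SDR color; double-check the current Looking Glass docs, especially for HDR or newer releases.

```python
def ivshmem_size_mib(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Suggested IVSHMEM size in MiB for a given guest resolution.

    Assumes two frame buffers plus 10 MiB of overhead, rounded up to a
    power of two (the rule as recalled from the Looking Glass docs).
    """
    frame_bytes = width * height * bytes_per_pixel * 2
    needed_mib = frame_bytes / (1024 * 1024) + 10
    size = 1
    while size < needed_mib:
        size *= 2
    return size


if __name__ == "__main__":
    for w, h in [(1920, 1080), (2560, 1440), (3840, 2160)]:
        print(f"{w}x{h}: {ivshmem_size_mib(w, h)} MiB")
```

The power-of-two rounding matters because the shared memory device size has to be a power of two; the extra headroom leaves room for the cursor and other metadata rather than just raw frames.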

Building curated VFIO community resources

I’m either going to write them or link to them.

So, as succinctly as you can, share your VFIO success or failure story. Be aware that I may split your replies about your build off into separate threads as this thread evolves, but your stories will be linked from here.

Finally, SR-IOV on the Intel Arc A770, whether official or unofficial, is on the horizon. I am thinking I can lay out what needs to be done and set up a bounty to connect the dots here. This is already a thing in China with the A770… more details on that soon (in a separate thread).
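For context on what "connecting the dots" ends with: on Linux, a device whose driver supports SR-IOV exposes `sriov_totalvfs` and `sriov_numvfs` in its sysfs directory, and virtual functions are created by writing a count to `sriov_numvfs`. The sketch below shows only that generic kernel interface; it assumes the card and driver expose SR-IOV at all, which for the A770 is exactly the open question above. The device path in the usage note is a placeholder, and the directory is a parameter so it can be exercised against a scratch folder.

```python
from pathlib import Path


def enable_vfs(device_dir: Path, requested: int) -> int:
    """Create `requested` virtual functions via the generic sysfs SR-IOV interface.

    Raises ValueError if the device advertises fewer VFs than requested.
    """
    total = int((device_dir / "sriov_totalvfs").read_text().strip())
    if requested > total:
        raise ValueError(f"device supports at most {total} VFs, asked for {requested}")
    numvfs = device_dir / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    # The kernel refuses to change a non-zero VF count directly (-EBUSY),
    # so drop back to 0 before setting a new non-zero value.
    if current not in (0, requested):
        numvfs.write_text("0")
    if current != requested:
        numvfs.write_text(str(requested))
    return requested
```

On a real host this needs root, e.g. `enable_vfs(Path("/sys/bus/pci/devices/0000:03:00.0"), 2)` with your own device address; each resulting VF then shows up as its own PCI function that can be bound to `vfio-pci` and passed to a guest.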

More Soon ™