I try it with every BIOS update, but the result is always the same: the system hangs shortly after GRUB while booting.

Just asking out of curiosity. I don't have a problem, but my system always gives this error message when starting a VM. Does anyone else see this on AM5?

```
[11885.106086] ------------[ cut here ]------------
[11885.106086] Trying to free already-free IRQ 44
[11885.106087] WARNING: CPU: 29 PID: 17092 at kernel/irq/manage.c:1893 free_irq+0x226/0x3b0
[11885.106090] Modules linked in: iscsi_tcp libiscsi_tcp bridge stp llc qrtr rpcrdma sunrpc rdma_ucm ib_iser libiscsi scsi_transport_iscsi ib_umad rdma_cm ib_ipoib iw_cm ib_cm mlx5_ib ib_uverbs ib_core intel_rapl_msr intel_rapl_common edac_mce_amd kvm_amd vfat snd_hda_codec_hdmi snd_usb_audio fat kvm snd_hda_intel joydev amdgpu snd_intel_dspcfg snd_usbmidi_lib crct10dif_pclmul snd_intel_sdw_acpi mousedev crc32_pclmul snd_ump amdxcp mlx5_core drm_buddy polyval_clmulni snd_hda_codec snd_rawmidi polyval_generic gpu_sched eeepc_wmi asus_nb_wmi snd_seq_device gf128mul snd_hda_core i2c_algo_bit asus_wmi ghash_clmulni_intel mc drm_suballoc_helper snd_hwdep ledtrig_audio drm_ttm_helper sha512_ssse3 i8042 sparse_keymap snd_pcm ttm aesni_intel serio platform_profile rfkill wmi_bmof usbhid drm_display_helper crypto_simd snd_timer r8169 cryptd mlxfw cec snd psample rapl ccp tls realtek video soundcore pcspkr k10temp sp5100_tco ucsi_acpi mdio_devres i2c_piix4 libphy typec_ucsi pci_hyperv_intf typec wmi gpio_amdpt roles gpio_generic
[11885.106111] mac_hid zfs(POE) zunicode(POE) zzstd(OE) zlua(OE) zavl(POE) icp(POE) zcommon(POE) znvpair(POE) spl(OE) dm_multipath tcp_dctcp crypto_user fuse dm_mod loop bpf_preload ip_tables x_tables ext4 crc32c_generic crc16 mbcache jbd2 nvme nvme_core crc32c_intel xhci_pci xhci_pci_renesas nvme_common vfio_pci vfio_pci_core irqbypass vfio_iommu_type1 vfio iommufd
[11885.106119] CPU: 29 PID: 17092 Comm: rpc-libvirtd Tainted: P W OE 6.5.5-1-MANJARO #1 e9399f16590e7769efcdcd9f039e557ef90af6c1
[11885.106120] Hardware name: ASUS System Product Name/ProArt B650-CREATOR, BIOS 1710 10/05/2023
[11885.106121] RIP: 0010:free_irq+0x226/0x3b0
[11885.106123] Code: 95 02 00 49 8b 7f 30 e8 c8 54 1d 00 4c 89 ff 49 8b 5f 50 e8 bc 54 1d 00 eb 3b 8b 74 24 04 48 c7 c7 c8 dc e5 ac e8 2a f8 f5 ff <0f> 0b 48 89 ee 4c 89 ef e8 3d 5d c5 00 49 8b 86 80 00 00 00 48 8b
[11885.106124] RSP: 0018:ffffb76d8c1ebc58 EFLAGS: 00010086
[11885.106125] RAX: 0000000000000000 RBX: ffff9441c1fff828 RCX: 0000000000000027
[11885.106125] RDX: ffff94585ff616c8 RSI: 0000000000000001 RDI: ffff94585ff616c0
[11885.106126] RBP: 0000000000000246 R08: 0000000000000000 R09: ffffb76d8c1ebae8
[11885.106126] R10: 0000000000000003 R11: ffff94585f7fffe8 R12: ffff9441c8f6e1c0
[11885.106127] R13: ffff9441c8f6e0e4 R14: ffff9441c8f6e000 R15: ffff944e67acb9a0
[11885.106127] FS: 00007f27c37fe6c0(0000) GS:ffff94585ff40000(0000) knlGS:0000000000000000
[11885.106128] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
[11885.106129] CR2: 00007f27b805fa40 CR3: 0000000dcab68000 CR4: 0000000000750ee0
[11885.106130] PKRU: 55555554
[11885.106130] Call Trace:
[11885.106131] <TASK>
[11885.106131] ? free_irq+0x226/0x3b0
[11885.106133] ? __warn+0x81/0x130
[11885.106134] ? free_irq+0x226/0x3b0
[11885.106136] ? report_bug+0x171/0x1a0
[11885.106137] ? prb_read_valid+0x1b/0x30
[11885.106139] ? handle_bug+0x3c/0x80
[11885.106140] ? exc_invalid_op+0x17/0x70
[11885.106141] ? asm_exc_invalid_op+0x1a/0x20
[11885.106143] ? free_irq+0x226/0x3b0
[11885.106145] ? free_irq+0x226/0x3b0
[11885.106147] devm_free_irq+0x58/0x80
[11885.106148] i2c_dw_pci_remove+0x59/0x70
[11885.106149] pci_device_remove+0x37/0xa0
[11885.106151] device_release_driver_internal+0x19f/0x200
[11885.106153] unbind_store+0xa1/0xb0
[11885.106154] kernfs_fop_write_iter+0x133/0x1d0
[11885.106155] vfs_write+0x23b/0x420
[11885.106157] ksys_write+0x6f/0xf0
[11885.106159] do_syscall_64+0x5d/0x90
[11885.106161] ? syscall_exit_to_user_mode+0x2b/0x40
[11885.106162] ? do_syscall_64+0x6c/0x90
[11885.106164] ? syscall_exit_to_user_mode+0x2b/0x40
[11885.106164] ? do_syscall_64+0x6c/0x90
[11885.106166] ? syscall_exit_to_user_mode+0x2b/0x40
[11885.106167] ? do_syscall_64+0x6c/0x90
[11885.106168] ? exc_page_fault+0x7f/0x180
[11885.106169] entry_SYSCALL_64_after_hwframe+0x6e/0xd8
[11885.106171] RIP: 0033:0x7f27cd3fd06f
[11885.106173] Code: 89 54 24 18 48 89 74 24 10 89 7c 24 08 e8 19 4d f8 ff 48 8b 54 24 18 48 8b 74 24 10 41 89 c0 8b 7c 24 08 b8 01 00 00 00 0f 05 <48> 3d 00 f0 ff ff 77 31 44 89 c7 48 89 44 24 08 e8 6c 4d f8 ff 48
[11885.106174] RSP: 002b:00007f27c37fd460 EFLAGS: 00000293 ORIG_RAX: 0000000000000001
[11885.106175] RAX: ffffffffffffffda RBX: 000000000000001d RCX: 00007f27cd3fd06f
[11885.106175] RDX: 000000000000000c RSI: 00007f27b80725c0 RDI: 000000000000001d
[11885.106176] RBP: 000000000000000c R08: 0000000000000000 R09: 0000000000000001
[11885.106176] R10: 0000000000000000 R11: 0000000000000293 R12: 00007f27b80725c0
[11885.106177] R13: 000000000000001d R14: 0000000000000000 R15: 00007f27c07792d1
[11885.106178] </TASK>
[11885.106178] ---[ end trace 0000000000000000 ]---
```
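Reading the trace bottom-up and ignoring the frames marked `?` (ones the kernel unwinder could not confirm), it looks like a write to a sysfs `unbind` file by `rpc-libvirtd` (`ksys_write` → `kernfs_fop_write_iter` → `unbind_store`) removed a device driven by `i2c_designware_pci` (`i2c_dw_pci_remove`), whose cleanup then tried to free IRQ 44 a second time. A minimal Python sketch for pulling that out of a pasted trace, assuming the `[timestamp] text` line format shown above; `summarize_trace` is a hypothetical helper, not part of any tool:

```python
import re

# Kernel frame lines look like "[11885.106147] devm_free_irq+0x58/0x80";
# a leading "?" marks a frame the unwinder could not confirm.
FRAME_RE = re.compile(
    r"^\[\s*[\d.]+\]\s+(\?\s+)?([\w.]+)\+0x[0-9a-f]+/0x[0-9a-f]+$")
IRQ_RE = re.compile(r"Trying to free already-free IRQ (\d+)")


def summarize_trace(log: str):
    """Return (IRQ number or None, reliable frames from innermost to outermost)."""
    irq = None
    frames = []
    in_task = False
    for raw in log.splitlines():
        line = raw.strip()
        match = IRQ_RE.search(line)
        if match:
            irq = int(match.group(1))
        if line.endswith("<TASK>"):
            in_task = True
        elif line.endswith("</TASK>"):
            break
        elif in_task:
            frame = FRAME_RE.match(line)
            # Keep only frames without the "?" marker.
            if frame and frame.group(1) is None:
                frames.append(frame.group(2))
    return irq, frames
```

Register dumps and the user-space `RIP: 0033:` line inside `<TASK>` don't match the frame pattern, so only kernel symbols end up in the list.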

I can check if you tell me where to look.

`dmesg --level=err,warn`

Not sure what you mean.

You probably should just create a separate post.

I can’t remember much from the video, but was that one of the things Wendell was working on solving?

Nope, the only line written after I launch my VM is this:

```
[ 4530.216145] sched: RT throttling activated
```

Just wanted to mention that the “static ReBAR” patch for QEMU I talked about in this post was merged into master in commit b2896c1b on May 10th. I’m not sure which exact release it first shipped in, but it’s definitely in as of v8.1.0. That means anyone using a recent QEMU who boots their 7900XTX-based VM while the graphics card has the increased BAR size should see SAM recognized and available in the Radeon software (it can also be confirmed in Device Manager).
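To check whether an installed QEMU is new enough, a small Python sketch that compares the reported version against the v8.1.0 mentioned above. It assumes the usual `QEMU emulator version X.Y.Z` first line from `qemu-system-x86_64 --version`; the helper names are hypothetical. Distribution packages sometimes backport fixes, so the version number is only a rough guide:

```python
from __future__ import annotations

import re

# Per the post above, the static ReBAR patch is in QEMU as of v8.1.0.
MIN_VERSION = (8, 1, 0)


def parse_qemu_version(text: str) -> tuple[int, ...]:
    """Extract (major, minor, micro) from `qemu-system-x86_64 --version` output."""
    match = re.search(r"QEMU emulator version (\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("no QEMU version string found")
    return tuple(int(part) for part in match.groups())


def has_static_rebar(text: str) -> bool:
    """True if the reported version is at least MIN_VERSION (tuple comparison)."""
    return parse_qemu_version(text) >= MIN_VERSION
```

Feed it the captured output of `qemu-system-x86_64 --version`; comparing tuples avoids the classic string-comparison trap where "10.0" sorts before "8.1".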

I have passthrough working on the ASRock B650M PG Riptide with a Fedora/Nobara host and a Windows guest. Setup was quick; I can post more details on what options were needed.

My 5700G/6900 XT ITX build is still going strong. I finally enabled Resizable BAR in November, and things went well.

Only complaint is I wish AM4 had more memory bandwidth.

Looking Glass has been super reliable for me, and the greatest surprise to me was when audio support landed. <3

It’s as much a call to arms as a call to pass. We do need more PCIe lanes… I’m jelly of Threadripper.

I’m a bit late to the game (just watched the call to arms video yesterday). I’ve been doing VFIO in one manner or another at work for years now. Given the anti-consumer, anti-privacy direction Microsoft has been heading in, I’ve decided to make Linux my main OS at home and run Windows in a VM for any games that warrant it.

I’m mostly settled on:

- AMD Ryzen 7 7800X3D 4.2 GHz 8-Core Processor
- MSI MAG X670E TOMAHAWK WIFI ATX AM5 Motherboard
- G.Skill Trident Z5 Neo 64 GB (2 x 32 GB) DDR5-6000 CL30 Memory

and reusing the rest of what I have in my old machine.

I’ve been seriously looking at the 7950X3D because it’d be nice to have the 8 lower-performing cores for the host while allocating the 8 higher-performing cores to the VM. However, there is a lot of conflicting information about the host using core 0, which sits on the 3D cache CCD. If I’m going to be stuck with 7 cores on the VM, I might as well just roll with a 7800X3D and save the $200.

I don’t know if AMD is still talking to Level1Techs about VFIO, but this sort of thing has definitely muddied the waters when figuring out which part to purchase. The reports I’ve read on IOMMU grouping (network and misc. I/O) for X670 are also a bit aggravating.
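The 8-versus-7-core trade-off boils down to which host CPUs get pinned to the guest. A Python sketch that builds libvirt `<vcpupin>` entries for one CCD, optionally leaving core 0 to the host. The CPU numbering here is an ASSUMPTION (logical CPU n for n = 0..15 is the first thread of core n, n + 16 is its SMT sibling, cores 0-7 are on CCD0, the V-cache die on a 7950X3D); verify your own layout with `lscpu -e` first. The helper names are hypothetical:

```python
from __future__ import annotations

# ASSUMPTION: 16 cores / 32 threads, logical CPU n (0-15) is the first thread of
# core n and n + 16 its SMT sibling; cores 0-7 on CCD0, 8-15 on CCD1.
PHYSICAL_CORES = 16
CORES_PER_CCD = 8


def ccd_threads(ccd: int, skip_core0: bool = False) -> list[int]:
    """Host CPUs for one CCD, ordered so sibling threads stay adjacent."""
    first = ccd * CORES_PER_CCD
    cores = [c for c in range(first, first + CORES_PER_CCD)
             if not (skip_core0 and c == 0)]
    threads: list[int] = []
    for core in cores:
        threads.extend([core, core + PHYSICAL_CORES])
    return threads


def vcpupin_lines(host_cpus: list[int]) -> list[str]:
    """<vcpupin> entries (for <cputune>) pinning vCPU i to host_cpus[i]."""
    return [f'<vcpupin vcpu="{v}" cpuset="{cpu}"/>'
            for v, cpu in enumerate(host_cpus)]


if __name__ == "__main__":
    # 7 V-cache cores (14 threads) for the guest; core 0 and its sibling stay with the host.
    for line in vcpupin_lines(ccd_threads(0, skip_core0=True)):
        print(line)
```

Keeping sibling threads next to each other is meant to line up with a guest topology of `threads="2"`, where consecutive vCPUs are generally presented as two threads of one core.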

Been using 7950X3D for months, with zero issues.

Although I set my UEFI to “Prefer Frequency cores”, Linux uses all of them no matter what (kernel 6.6.3).

I was afraid of the Core0 issue, but with or without core isolation* I don’t have any issues with either OS while using both.

My “Individual Core Usage” widget even shows 100% usage on all 3D cores while playing, and the host doesn’t seem to be bothered.

*I am using vfio-isolate with the “move” parameter, but even without it I didn’t see any difference.

My first post here, but I’m a pretty long-time VFIO user. I built a Team Red system early this year but hadn’t gotten VFIO working until recently. I was previously using an Intel Skylake and an RX 580, which is where most of my VFIO experience comes from.

It might also be worth mentioning that I mostly use VFIO to mess around with other Linux distributions, so it’s possible I’m missing some Windows nuances. Of particular note, my interest in getting VFIO working again was renewed by the Plasma 6 beta, and managing 4+ distros in a multi-boot setup is annoying.

I built the following system:

- AMD 7950X
- ASUS B650 PRIME PLUS
- 32GB memory
- PowerColor RX 7900XT
- Fresco Logic FL1100 USB 3.0 Host Controller (PCIe USB expansion card)
- Gentoo Linux as the host OS, 6.6.5-gentoo-dist (sys-kernel/gentoo-kernel), OpenRC

I haven’t tried to get Resizable BAR working yet, so I have Above 4G Decoding enabled and Resizable BAR disabled in my BIOS. Otherwise my BIOS setup was pretty standard (SVM, IOMMU, etc.). My 7950X has an iGPU, so I switch that to my primary display when I want to boot in VFIO mode.

These are the key adjustments that seemed important to getting it working and maybe weren’t exactly straightforward:

- I’ve never had luck with the q35 chipset on the old Skylake system. That seems to be the case on this system as well. I tried using q35 initially for a long time, but I didn’t have any results until I switched to i440FX. The guest would initialize but the hardware wouldn’t.

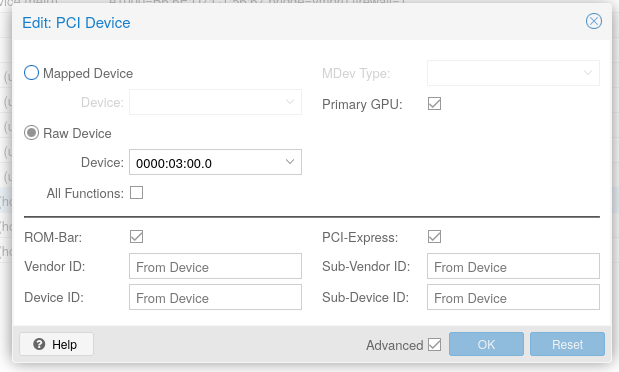

- Disable ROM BAR on the Graphics/VGA portion. libvirt threw an error when starting the VM saying it couldn’t read the ROM for 03:00.0.

- My 7900XT has four “components” (PCI functions), and I couldn’t get things working until all of them were bound to vfio-pci. The graphics and audio functions were easy to bind, but the other two were getting bound to i2c_designware_pci and xhci_pci. I changed my kernel config so those drivers were built as modules instead of built-in, so vfio-pci could bind to those functions without having to resort to shell-scripting shenanigans in my initramfs.

- Some guests seem to be sensitive to the defined CPU topology. I was having horrible micro-freezes in an Arch Linux guest that went away after defining the topology more accurately.
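For anyone who would rather not rebuild the kernel, a runtime alternative (not what was done above) is the kernel's `driver_override` sysfs mechanism: tell the kernel only `vfio-pci` may claim each function, unbind the current driver, then re-probe. A minimal Python sketch, assuming the GPU sits at `0000:03:00` and has four functions like the card described above (check yours with `lspci -s 03:00`); `vfio_bind_plan` and `apply_plan` are hypothetical helpers and `apply_plan` needs root:

```python
from __future__ import annotations

import os

PCI_SYSFS = "/sys/bus/pci"


def vfio_bind_plan(slot: str, functions: int = 4,
                   sysfs: str = PCI_SYSFS) -> list[tuple[str, str]]:
    """Sysfs writes that hand every function of `slot` (e.g. "0000:03:00") to vfio-pci."""
    plan: list[tuple[str, str]] = []
    for fn in range(functions):
        addr = f"{slot}.{fn}"
        dev = os.path.join(sysfs, "devices", addr)
        # 1. Only a driver named vfio-pci may bind this function from now on.
        plan.append((os.path.join(dev, "driver_override"), "vfio-pci"))
        # 2. Detach the current driver (e.g. i2c_designware_pci or xhci_pci).
        plan.append((os.path.join(dev, "driver", "unbind"), addr))
        # 3. Re-probe so the override takes effect.
        plan.append((os.path.join(sysfs, "drivers_probe"), addr))
    return plan


def apply_plan(plan: list[tuple[str, str]]) -> None:
    """Perform the writes; skips the unbind step when no driver is bound."""
    unbind_suffix = os.path.join("driver", "unbind")
    for path, value in plan:
        if path.endswith(unbind_suffix) and not os.path.exists(path):
            continue
        with open(path, "w") as f:
            f.write(value)
```

Building the plan separately from applying it makes it easy to print and eyeball the writes before touching a live system.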

Related to the ROM BAR error, this was what I saw in the libvirt logs when I had that option enabled:

```
2023-12-07T18:28:33.359928Z qemu-system-x86_64: vfio-pci: Cannot read device rom at 0000:03:00.0
Device option ROM contents are probably invalid (check dmesg).
Skip option ROM probe with rombar=0, or load from file with romfile=
```
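QEMU's hint mentions `rombar=0`; in libvirt domain XML the corresponding setting is, as far as I know, `<rom bar="off"/>` inside the matching `<hostdev>`. A Python sketch that adds it to the hostdev at a given bus/slot/function using the standard library's ElementTree; `disable_rombar` is a hypothetical helper:

```python
import xml.etree.ElementTree as ET


def disable_rombar(domain_xml: str, bus: int, slot: int, function: int) -> str:
    """Add <rom bar="off"/> to the PCI <hostdev> at bus:slot.function."""
    root = ET.fromstring(domain_xml)
    for hostdev in root.iter("hostdev"):
        addr = hostdev.find("source/address")
        if addr is None:  # e.g. USB hostdevs identified by vendor/product
            continue
        # libvirt writes these as hex strings such as "0x03"; int(..., 16) accepts the prefix.
        found = tuple(int(addr.get(k, "0"), 16) for k in ("bus", "slot", "function"))
        if found == (bus, slot, function):
            rom = hostdev.find("rom")
            if rom is None:
                rom = ET.SubElement(hostdev, "rom")
            rom.set("bar", "off")
    return ET.tostring(root, encoding="unicode")
```

For 03:00.0 that is `disable_rombar(xml, 0x03, 0x00, 0x0)`, run over the output of `virsh dumpxml` and loaded back with `virsh define`. ElementTree drops XML comments on the way through, so `virsh edit` may be simpler for a one-off change.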

Related to the CPU topology thing, this is what I added to my XML that seems to have been the trick:

```xml
<cpu mode="host-passthrough" check="none" migratable="on">
  <topology sockets="1" dies="1" cores="10" threads="2"/>
  <feature policy="require" name="topoext"/>
</cpu>
```

I’m using 10c/20t of my 16c/32t which is how I got those numbers.

Not sure if it was the topoext feature or the topology definition itself. I think by default libvirt was treating each “thread” as its own CPU, like I had 20x 1c/1t CPUs.
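The numbers have to multiply out: sockets × dies × cores × threads = 1 × 1 × 10 × 2 = 20, matching the 20 vCPUs (as far as I know libvirt rejects a topology that doesn't match the vCPU count). A Python sketch that builds the same `<cpu>` block with ElementTree and checks that arithmetic up front; `cpu_element` is a hypothetical helper and assumes one socket and one die:

```python
import xml.etree.ElementTree as ET


def cpu_element(vcpus: int, cores: int, threads: int = 2) -> ET.Element:
    """Build the <cpu> block above for a single-socket, single-die guest."""
    if cores * threads != vcpus:
        raise ValueError(f"{cores} cores x {threads} threads != {vcpus} vCPUs")
    cpu = ET.Element("cpu", mode="host-passthrough", check="none", migratable="on")
    ET.SubElement(cpu, "topology", sockets="1", dies="1",
                  cores=str(cores), threads=str(threads))
    # topoext lets the guest see SMT siblings on AMD CPUs.
    ET.SubElement(cpu, "feature", policy="require", name="topoext")
    return cpu
```

`ET.tostring(cpu_element(20, 10), encoding="unicode")` gives an equivalent of the block above, ready to paste into the domain XML.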

Related to the components bullet, I found that libvirt logs at least a portion of the output when it’s starting a VM to /var/log/libvirt/qemu/[VM name].log. Sometimes the errors that are displayed in virt-manager aren’t particularly useful, but for example I saw this error in one of those log files:

```
2023-02-27T22:18:41.369723Z qemu-system-x86_64: vfio: Cannot reset device 0000:03:00.0, depends on group 15 which is not owned.
```

That’s what helped me determine that I actually needed to worry about the functions getting bound to i2c_designware_pci and xhci_pci, even though they were in separate IOMMU groups from my graphics and audio. Of course, “group 15” refers to the IOMMU group.
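To go from that log line to the devices involved, a Python sketch that extracts the device and group number from a `/var/log/libvirt/qemu/[VM name].log` excerpt and lists the group's members from `/sys/kernel/iommu_groups/<n>/devices`. The regex is written against the single message quoted above, and both helper names are hypothetical:

```python
from __future__ import annotations

import os
import re

# Matches the QEMU error quoted above, e.g.
# "vfio: Cannot reset device 0000:03:00.0, depends on group 15 which is not owned."
NOT_OWNED_RE = re.compile(
    r"Cannot reset device ([0-9a-fA-F:.]+), depends on group (\d+) which is not owned")


def unowned_groups(log_text: str) -> list[tuple[str, int]]:
    """(device, IOMMU group) pairs found in a libvirt QEMU log excerpt."""
    return [(m.group(1), int(m.group(2))) for m in NOT_OWNED_RE.finditer(log_text)]


def group_members(group: int, root: str = "/sys/kernel/iommu_groups") -> list[str]:
    """PCI addresses listed under an IOMMU group in sysfs."""
    return sorted(os.listdir(os.path.join(root, str(group), "devices")))
```

Checking which driver each listed address is bound to (for example with `lspci -k`) then shows what still needs to move to vfio-pci.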

Finished my ASRock B650 Live Mixer build with an AMD 7950X3D, 96GB RAM, an AMD RX 6600 and a GeForce 3080 Ti, running Proxmox. Initial impression is a bit mixed.

Unfortunately, I couldn’t pass through any onboard USB controller other than group 24 (one row of USB 2.0 and another row of 3.0 ports, plenty for me); anything else instantly reboots the host. It doesn’t help that 80% of the internal devices have no device descriptor at all.
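When hunting for a passable USB controller, it helps to see every IOMMU group and which controllers sit alone in theirs. A Python sketch that walks `/sys/kernel/iommu_groups` and reads each device's PCI `class` file, flagging USB host controllers (class 0x0c, subclass 0x03); the helper names are hypothetical. Being alone in a group is only a starting point: as described above, some onboard controllers still reset the host when passed through.

```python
from __future__ import annotations

import os
from typing import Iterator

# PCI class 0x0c (serial bus), subclass 0x03 (USB controller).
USB_CLASS_PREFIX = "0x0c03"


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the PCI addresses it contains."""
    groups = {int(name): sorted(os.listdir(os.path.join(root, name, "devices")))
              for name in os.listdir(root)}
    return dict(sorted(groups.items()))


def usb_controllers(groups: dict[int, list[str]],
                    pci_root: str = "/sys/bus/pci/devices") -> Iterator[tuple[int, str, bool]]:
    """Yield (group, address, alone_in_group) for every USB host controller."""
    for group, addrs in groups.items():
        for addr in addrs:
            with open(os.path.join(pci_root, addr, "class")) as f:
                if f.read().strip().startswith(USB_CLASS_PREFIX):
                    yield group, addr, len(addrs) == 1
```

On the host, `list(usb_controllers(iommu_groups()))` gives the candidates; `lspci -nn -s <address>` then helps put a name to devices that show up without a descriptor.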

Devices in other ports can still be accessed and grabbed via USB device passthrough. So I had to forgo the 10Gb NIC and added a Fresco USB 3.0 card instead. The mechanical HDDs of my storage PC limit the transfer speed anyway, so 2.5Gb is still plenty. Boot-up takes a long time, 1 to 2 minutes in total after enabling IOMMU and the second GPU. A lot of that time is spent booting the host OS; I’ll dig through dmesg to find out if anything is stalling. It’s a fresh install with nearly no modifications.

The UEFI display is a bit buggy: set to the iGPU, it shows a blinking cursor unless the screen is switched off and on again, but the GRUB bootloader and login prompt show normally without intervention.

In the VM, latency is reduced and the system feels less congested during data transfers. This is especially noticeable in VR.

Proxmox Build

I opted for a Proxmox build as I found it easier to pass my single GPU. I found most guides for KVM/QEMU hooks to be Nvidia-only or dated.

Specs

| Category | Part |
|---|---|
| GPU | XFX Speedster SWFT 210 RX 6600 |
| CPU | R9 7950X |
| Mobo | X670E Taichi |
| RAM | G.Skill Trident Z5 Neo RGB (2x32GB) DDR5-6000 CL30 |
| PSU | EVGA SuperNOVA 850 G7 |

Software

- Proxmox 8.1.3

Using Proxmox as the host, I passed the GPU to a Windows 11 virtual machine. I also had success passing the GPU to a macOS Sonoma virtual machine.

Motherboard

- Disable Resizable BAR

- Use internal GPU

- Running the RAM speed on auto, which sets it to 4800; I have not experimented with changing this.

What Does Not Work

iGPU passthrough at the moment causes the system to crash.

Resources

- Windows 11 VM for gaming setup guide (Proxmox Forum)

  A recent and useful guide. A lot of tutorials out there for a Windows VM have you install virtio tools, which no longer seem to be necessary.

Subbed! Can you share them?

I’m getting really annoying crashes passing through my new 4090 to a Windows gaming VM. No reset bug though, which is a big upgrade from my Radeon card.

B650I Aorus Ultra, 96GB RAM@6400 (stable for 48 hours of memtest86), 7950X, running NixOS. Guest receives CCD1 and 64GB memory, and of course the GPU.

What happens is that after anywhere between fifteen minutes and 24 hours, the system just resets. The screen blanks, the fans max out, then the computer POSTs normally. journalctl is empty, and `dmesg --follow` and `journalctl --follow` over SSH also report no errors within the last couple of minutes. Nothing particularly odd appears to happen in either host or guest before a reset, nor does there seem to be a pattern in what I’m doing (it’s happened overnight while the computer was just idling, mid-game in Starfield and Baldur’s Gate 3 a few times, while watching video streams, and in SolidWorks).

I’d read that booting with pci=noaer could help in situations such as these, but it hasn’t. The system is otherwise stable for weeks at a time if I don’t run the VM, including activities like gaming (on Linux). But if I boot the Windows guest, the computer eventually crashes. The computer won’t reset if the guest isn’t running; if the guest shuts down, it seems to be just as stable as if it had never been started in the first place.

Is this the only RAM stability test you have done? If so, the first thing I’d try is stock RAM settings, because:

- Memtest is not the most stressful test. The RAM OC people seem to use Karhu, HCI MemTest, etc.

- RAM that runs stable on its own may not be stable anymore when a 4090 is dumping 500W of heat into the case.

- I myself experienced worse stability in VMs than in native Linux (including while gaming) on AM5, which I could solve by going back to stock settings.

I have tried running the memory at stock speed, it still crashes.