So at this point, I’m not sure if I’m going to be using my personal blog or this thread, but I figured that if the community wants to get involved, this will be a good place to have discussion, since I don’t have any comment features on my blog yet.

Without further ado, I present my pledge:

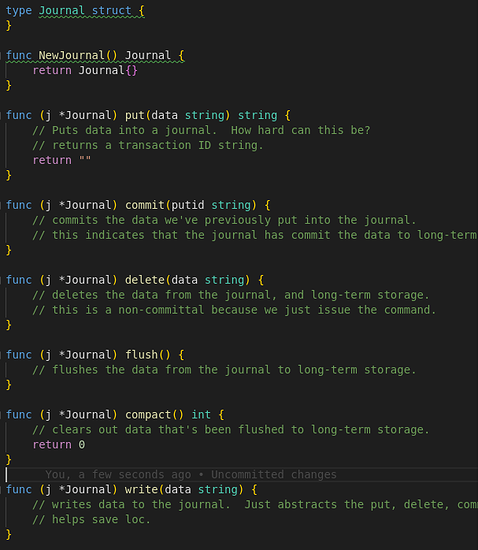

I, @sgtawesomesauce, will participate in the next Devember. My Devember will be writing backup software in Go. I promise I will program for my Devember for at least an hour, every day of the next December. I will also write a daily public devlog and will make the produced code publicly available on the internet. No matter what, I will keep my promise.

With that, it’s probably good to describe the purpose of this project at a high level. The project has a number of goals, outlined below. I’m going to expound upon them as the month goes on, but frankly, I’m not a great writer, so this will probably look a bit more like ABI docs and frustration rants than anything else.

Versions will be:

Deduplicated

Versions will be, first and foremost, deduplicated. We’re not looking for anything all that special here; we just want some basic deduplication so the compression algorithms don’t have to work so hard in the secondary stage.
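To make "basic deduplication" a bit more concrete, here is a minimal sketch of one way it could work, assuming fixed-size chunks identified by their SHA-256 digest. None of this is the actual Versions design: `ChunkID`, `Dedup`, `Restore`, and the in-memory map are hypothetical names picked for illustration.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// ChunkID is the SHA-256 digest of a chunk's contents (an assumed scheme).
type ChunkID [sha256.Size]byte

// Dedup splits data into fixed-size chunks and stores each distinct chunk once.
// It returns the chunk store plus the ordered list of IDs ("recipe") needed to
// rebuild the original data. chunkSize must be greater than zero.
func Dedup(data []byte, chunkSize int) (map[ChunkID][]byte, []ChunkID) {
	store := make(map[ChunkID][]byte)
	var recipe []ChunkID
	for start := 0; start < len(data); start += chunkSize {
		end := start + chunkSize
		if end > len(data) {
			end = len(data)
		}
		chunk := data[start:end]
		id := ChunkID(sha256.Sum256(chunk))
		if _, seen := store[id]; !seen {
			// Copy the bytes so the store does not alias the caller's buffer.
			store[id] = append([]byte(nil), chunk...)
		}
		recipe = append(recipe, id)
	}
	return store, recipe
}

// Restore rebuilds the original bytes by following the recipe in order.
func Restore(store map[ChunkID][]byte, recipe []ChunkID) []byte {
	var out []byte
	for _, id := range recipe {
		out = append(out, store[id]...)
	}
	return out
}

func main() {
	store, recipe := Dedup([]byte("aaaabbbbaaaabbbb"), 4)
	fmt.Println(len(recipe), "chunks referenced,", len(store), "chunks stored")
	fmt.Println(string(Restore(store, recipe)))
}
```

Fixed-size chunks are the simplest option, but inserting a single byte near the start of a file shifts every later chunk boundary. Content-defined chunking avoids that at the cost of more complexity.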

Compressed

Versions needs to compress its data. There are plenty of file types that cannot be deduplicated, but can be compressed, so we’re going to do that. At the end of the day, Versions is all about efficiency: we want to store data compactly and get it back quickly.
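For the compression stage, a rough sketch could look like the following. It assumes gzip from the Go standard library purely because it needs no dependencies; the post doesn't settle on an algorithm, and `Compress` and `Decompress` are hypothetical helper names.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"fmt"
	"io"
)

// Compress gzips data in memory and returns the compressed bytes.
func Compress(data []byte) ([]byte, error) {
	var buf bytes.Buffer
	zw := gzip.NewWriter(&buf)
	if _, err := zw.Write(data); err != nil {
		return nil, err
	}
	// Close flushes any buffered data and writes the gzip footer.
	if err := zw.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// Decompress reverses Compress.
func Decompress(packed []byte) ([]byte, error) {
	zr, err := gzip.NewReader(bytes.NewReader(packed))
	if err != nil {
		return nil, err
	}
	defer zr.Close()
	return io.ReadAll(zr)
}

func main() {
	original := bytes.Repeat([]byte("backup "), 1000)
	packed, err := Compress(original)
	if err != nil {
		panic(err)
	}
	restored, err := Decompress(packed)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%d bytes -> %d bytes\n", len(original), len(packed))
	fmt.Println("round trip ok:", bytes.Equal(original, restored))
}
```

Already-compressed formats (JPEG, most video, zip files) won't shrink much, so a real implementation would probably want to detect those and skip the work.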

Checksummed

Data is no good if it’s not accurate. This goes for anything, and backups are no different. We will checksum the data, so we can always know the data is good without even testing the restore!
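As a sketch of the checksum idea: record a digest when the data is written, then recompute and compare during verification. SHA-256 is an assumption on my part here, and `Checksum` and `Verify` are hypothetical names.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// Checksum returns the hex-encoded SHA-256 digest of data.
func Checksum(data []byte) string {
	sum := sha256.Sum256(data)
	return hex.EncodeToString(sum[:])
}

// Verify reports whether data still matches the digest recorded at backup time.
func Verify(data []byte, recorded string) bool {
	return Checksum(data) == recorded
}

func main() {
	chunk := []byte("important data")
	recorded := Checksum(chunk)
	fmt.Println("intact:", Verify(chunk, recorded))

	chunk[0] ^= 0x01 // simulate a single flipped bit on disk
	fmt.Println("after bit flip:", Verify(chunk, recorded))
}
```

If chunks are already identified by a content hash for deduplication, the same digest can double as the checksum, so verification comes almost for free.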

Mirrored

Eventually, once we have the network aspect of Versions working (not a priority for Devember), we’re going to have a solution to mirror data to multiple targets.

Self-Healing

When backing up to multiple targets, Versions will have the ability to self-heal a damaged archive during archive verification by finding a good copy of the damaged data (or Chunk) in a sister target and copying it over.
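Here is a minimal sketch of that self-healing pass, under the assumption that each Chunk is stored under its own SHA-256 digest so damage can be detected by rehashing. `Target` is a hypothetical in-memory stand-in for a real backup destination, and `Put` and `Heal` are illustrative names, not the Versions API.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// Target is a hypothetical in-memory backup destination: it maps a chunk's
// hex SHA-256 digest to the stored bytes.
type Target map[string][]byte

func digest(data []byte) string {
	sum := sha256.Sum256(data)
	return hex.EncodeToString(sum[:])
}

// Put stores a copy of data under its own digest and returns that digest.
func (t Target) Put(data []byte) string {
	id := digest(data)
	t[id] = append([]byte(nil), data...)
	return id
}

// Heal rehashes every chunk in primary. A chunk whose bytes no longer match
// its ID is replaced by the sister's copy, but only if that copy verifies.
// It returns the number of repaired chunks and the IDs that could not be fixed.
func Heal(primary, sister Target) (repaired int, lost []string) {
	for id, data := range primary {
		if digest(data) == id {
			continue // chunk is intact
		}
		good, ok := sister[id]
		if !ok || digest(good) != id {
			lost = append(lost, id) // no healthy copy available
			continue
		}
		primary[id] = append([]byte(nil), good...)
		repaired++
	}
	return repaired, lost
}

func main() {
	a, b := Target{}, Target{}
	id := a.Put([]byte("chunk one"))
	b.Put([]byte("chunk one"))

	a[id][0] ^= 0xFF // corrupt the copy on target a

	repaired, lost := Heal(a, b)
	fmt.Println("repaired:", repaired, "lost:", len(lost))
	fmt.Println("healed contents:", string(a[id]))
}
```

This only covers chunks that are present but damaged. Chunks missing from the primary entirely would need a separate pass that compares chunk listings between targets.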

Open Source

Versions is to be released under the GPL v2, to better protect both the copyright holders’ and the users’ freedoms. GitHub repo to be announced.

Link to Versions GitHub repo:

Link to Versions blog tag, for those interested: