They say an image is worth a thousand words. So here are 2000 words’ worth in image form:

Preamble

I’ve never considered myself to be a programmer, or particularly aspired to be one. I did some BASIC programming as a child, but I quickly lost interest. What got me was design and production. I have professional experience as a graphics illustrator, a web designer and a video producer, and I have done 3D graphics, music production, web development and CAD work on the side.

Pretty soon, I began to recognize several nearly universal problems with software that increasingly got in the way of my goals. The more software I learned and used, the more I found it to be:

- too bloated, buggy and inefficient

- too expensive and inaccessible

- too needlessly fragmented

- too complicated and limited

It didn’t take long before my questions went from “how do I do this and that” to “why do I need to do this and that” and “why can’t I do this and that instead”. The answer has always been “If you don’t like it, make your own X”. I do realize that was just a polite way of telling me to go forth and self-multiply, yet since I did not find that possible or practically useful, I decided to take it literally instead.

So exactly 10 years ago, I set out on the seemingly ridiculous journey of getting into programming and creating better software than what the industry leaders produce with their hordes of programmers, mountains of money and decades of experience.

About two steps into that journey, I ran into exactly the same problems with programming. And it makes sense: programming tools are still software. I spent about 2 months just figuring out which language and framework to start learning. The easy ones were inefficient, and the efficient ones were hard. The general-purpose ones were slow to work with, and the purpose-specific ones were useless outside their scope.

Programmers appear to almost universally believe that this is not only inevitable but actually a good thing, and that a universal programming language is but a feeble dream, a sort of unattainable Utopia, a pink unicorn that simply cannot be. Maybe programmers just see things in a particular way, because even back when I was a complete newb, I could see why things are the way they are, and that there’s no intrinsic reason for them to be that way.

I’ve identified a few key reasons:

- The evolution of programming appears to always have been “adding more” to overcome problems and limitations, to the point that nowadays it is recommended to have 32 gigs of RAM to run a decent IDE on an average-sized project. Programmers keep building on top of the same paradigm rather than trying something radically different.

- The notion of “code” is at odds with clarity. Software shouldn’t be written in textual form in the hope that the compiler gets your intent as intended and that the runtime then produces the intended results. It is my understanding that software should not be written but designed, and that a good design can accomplish a given goal with but a fraction of the code that goes into a traditional workflow. So far this has been corroborated by my experience with the project.

- Math is not essential to programming, nor is it “the language of the universe” for that matter; that would be logic, of which math is just a subset. Math used to be essential back in the days when only scientists had computers and used them to quickly solve equations, but computers have come a long, long way since then. In fact, one can be a perfectly good programmer with, like… 3rd grade math. Even cutting-edge fields like AI, 3D or multimedia are not mathematically complex; it is all basic arithmetic, and the results arise not from mathematical complexity but from computational throughput. I think that what makes a good software designer is, above all, creativity. I don’t think math bolsters that, and I even suspect it may impede it. In short, math is for computers, and pushing a human to be better at a machine thing always comes at the expense of other human abilities. And no matter how much you push a human to be a computer, they will be vastly inferior to even the most basic computer. So Umium does its best to focus on the human side of productivity and sweep technical nonsense under the rug, without detrimental effects on the end result.

So what is Umium exactly?

I will start with what it is NOT: a programming language.

Umium is not a “language”; it is a graphical tool. It has no syntax and, by extension, no room for syntax errors.

Umium is not strictly about software development; that is just one of the things it is intended to do, albeit the one at its very core. Umium is about much more: it wouldn’t be universal if it were limited in what it can do.

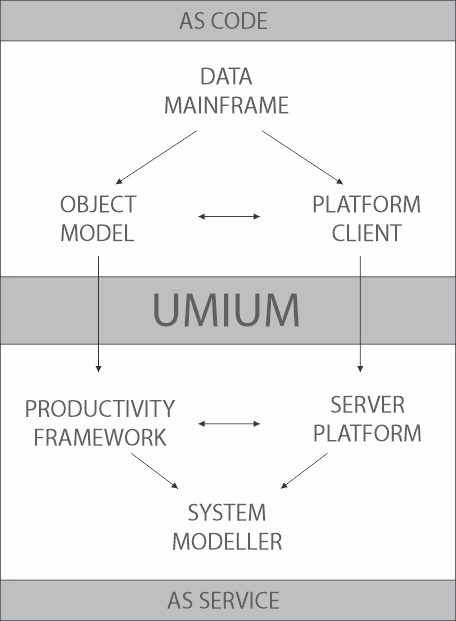

Umium is a high-level tool for low-level abstract logic design. It is a complete departure from the traditional way in which software development has been done:

Traditional programming: model > code > compilation > execution > result

Umium: model > result

Umium is a real-time modeller: a tool that directly manipulates raw computer memory, with no intermediary representations and only basic safeguards.

Umium is a runtime, compiler, IDE, platform, version control and ecosystem all in one.

Umium is not just for programmers, or just for creatives who use computers. The ultimate goal is for it to be useful to everyone. Kind of like how you don’t need to be a professional orator to benefit tremendously from being able to produce and understand speech, and you don’t need to be a professional writer to benefit tremendously from being able to read and write.

It is all about the use of information. Speech and writing have played a monumental role in human development, and now that computers are everywhere, the prospect of using, exchanging and processing information has increased exponentially. Yet I can’t shake the feeling that humanity is not making optimal use of computers, and is possibly even getting the short end of the deal.

I remember back when smartphones were becoming a thing. I was rather thrilled by the prospect of having a computer in my pocket; just imagine all the things we could do. But that never really materialized, entirely due to the lack of software to enable it. In fact, I dare say that on the grand scale, people’s personal use of such devices is totally eclipsed by the industry’s use of people through those devices.

Umium is not just about solving technical issues, but also creativity- and collaboration-related issues. Especially licensing: my goal is to make licensing effortless and more or less automatic, so users don’t have to waste time, money and worry on what they can or can’t do. I am still figuring this one out, meaning that my own licensing is not yet finalized, which is also why for the time being I abstain from publishing any code. But for all intents and purposes, all of Umium will eventually be open source.

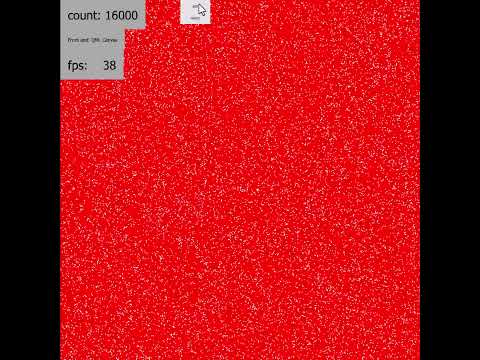

Umium is insanely scalable and efficient. As a universal tool, it is not optimized for any particular usage, but designed to work everywhere, from tiny MCUs to supercomputers. Here are some meaningless theoretical specs (all figures are “up to”):

- minimum object size: 1 byte

- maximum object size: 1 gigabyte

- maximum number of base types: ~ 4.3 billion

- maximum number of base objects: ~ 4.6 quintillion

- maximum addressable physical memory: 4 exabytes

- maximum addressable virtual memory: unlimited*

- maximum number of virtual objects: unlimited*

*unlimited: Umium employs a lightweight data compression mechanism that can trade off CPU time for a theoretically unlimited amount of memory. This makes it practically feasible for its allocated virtual memory to exceed the used physical memory by many orders of magnitude while maintaining useful performance levels. So while it scales beyond the foreseeable limits of physical hardware, it is also designed to be extremely efficient in memory-constrained scenarios.

Short term goals:

- complete the GUI

- finalize licensing

- release a native client for Windows, Linux and Android

- release a web client via WebAssembly

- add WebSockets support

- add cryptography facilities

- add file system facilities

- support basic multimedia - images, audio, video

Struggle, failures and lessons learned

When I started this endeavor, I didn’t have the slightest idea what I wanted to do, much less how, just the gut feeling that I must do it. I’d done some DOM scripting in JS and AS before, but when it came to writing a full-fledged program, I didn’t even know where to start. After learning the gist of several programming languages, I eventually settled on C++ for its power and efficiency, and adopted Qt as the most comprehensive application framework available for C++.

I spent the next 3 years learning programming and trying smaller, less ambitious things, and then made my first real attempt to write Umium. It was a very traditional, by-the-book approach to software development, the good old “let’s start writing some code and see where it goes”, without a carefully designed architecture and strategy. It was a slow and tedious process, the pace was demotivating, and it was clearly not suited to my task. It was an “outside in” approach that, as wasn’t hard to project, would stretch into hundreds of thousands of lines of code and many, many years. That is still feasible for a company with many developers, but totally inapplicable to a one-dude project.

So I gave myself 2 more years of learning before my second attempt, and I managed to take that one much further with far less effort. I had an almost complete version, one that made excessive use of Qt and QML facilities and relied on contrived schemes to dynamically describe, generate and execute QML code on the fly. By my own standards, it turned out extremely slow and inefficient. Most people in my place would have released it, since it would still have been usable in many scenarios and did represent a significant amount of work, and by doing so would have committed to that design indefinitely. But I didn’t; I knew that wasn’t it.

Two failures in a row and 5 “wasted” years felt a bit discouraging. But what took precedence was that the lessons I had learned in no way diminished the feasibility of my undertaking; they were just indications that I wasn’t putting my effort in the right direction. This was the first time in my life that I didn’t really know what I needed to do to achieve my goal, had no one I could ask, and had to figure it out by process of elimination and by wandering into uncharted territory.

I spent another 2 years learning, but this time I didn’t focus on programming. Clearly, more of the same wasn’t going to help: the problem wasn’t that I hadn’t done enough, but that I wasn’t doing it the right way. Instead I took my time to learn lower-level concepts, how the actual hardware works and how operating systems work at the kernel level. This eventually led me to commit to my current and, from the looks of it, final design, and I went straight for raw memory access, the very thing programming has progressively been moving away from.

Needless to say, it still wasn’t a clear-cut path to getting it done. I had found the level at which to do it, and had figured out that it must be an “inside out” approach, but there was still quite a lot left to figure out about what exactly that meant. It took another 2 years and 3 implementations rewritten from scratch to finalize the fundamental design so I could focus my attention on the front end.

I entered last year’s Devember competition under the name UM. Since then I decided that a name that sounds like “hmm” is not all that original, so it was promoted to Umium, which does actually sound like a thing.

Last year I couldn’t bring my work to completion; I kind of got lost in case studies and micro-optimizations. Programmers say that premature optimization is a bad practice, and that’s true if you are on the clock and need to deliver results. But I do not consider my previous efforts wasted, because those premature optimizations revealed vital information that ultimately helped me commit to a fundamental design. Trust me, changing a fundamental design later on is about the worst idea one can possibly have.

Another lesson learned: while relying on other people’s code is a huge time saver, when you want to accomplish something no one ever has, relying on code written under a different paradigm turns out to be an extremely bad idea.

I put a non-trivial amount of effort into making my data model work with Qt’s own fixed-role abstract model interface, with the intent to leverage its existing model/view facilities. I figured it would save me some time, and I could always do my own GUI later on. A HUGE mistake on my part: it turns out my requirement to present any data in any way is fundamentally at odds with Qt’s internal design. Different types of views were incompatible with one another, and you could not arbitrarily nest or mix and match things like I wanted to. In the months I struggled to make Qt do what I needed, I discovered and reported dozens upon dozens of bugs arising from “outside the box” use its developers had not foreseen. And with no solution to most of them in sight, I ultimately decided to scrap this effort and develop my own model/view architecture from scratch (the Blackjack and hookers are implied).

That’s what I spent the last couple of weeks doing: the model part is already more or less finalized, while the view work is still in its infancy. In addition, I took some extra steps to facilitate the eventual complete removal of Qt as a dependency. My current binary is about 25 MB, some 95% of which is just Qt, so in the medium term Umium is set for a massive reduction in size.

My “almost production ready” design from 5 years ago never felt right to release and commit to long term, and I dare say the extra 5 years I put into the effort were worth it, yielding a roughly 50x reduction in code size and an even more impressive reduction in memory overhead, in excess of 500x. I can’t give any number for the performance improvement at this stage, but I expect it to be massive as well.

Looking back at this journey, what I appear to have done is spend 5 years learning “how it’s done” and another 5 years shaking almost all of it off, until I was left with the few key components I actually needed all along. This may also explain why nobody has done this thing, rather trivial in hindsight, in the couple of decades at least that it has been technically feasible. It is a huge d’oh moment, and a real shame it took this long to realize a rather obvious thing: one simply cannot solve the issues of legacy software development by doing more legacy software development.

Disclaimer

This post does not, in any capacity, endorse the acts of gambling and prostitution.