Hi @wendell and #everyone, I’ve just finished setting up a VM. When I launch it, I land in the UEFI shell rather than Windows, so I assume I’m doing something wrong with the drive:

This is the output:

UEFI Interactive Shell v2.2

EDK II

UEFI v2.70 (EDK II, 0x00010000)

Mapping table

BLK0: Alias(s):

PciRoot(0x0)/Pci(0x1,0x0)/Floppy(0x0)

BLK1: Alias(s):

PciRoot(0x0)/Pci(0x1,0x0)/Floppy(0x1)

BLK2: Alias(s):

PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)

BLK3: Alias(s):

PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)/HD(1,MBR,0x073C329B,0x800,0xFA000)

BLK4: Alias(s):

PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)/HD(2,MBR,0x073C329B,0xFA800,0x19000000)

BLK5: Alias(s):

PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)/HD(3,MBR,0x073C329B,0x190FAFC1,0x2119A83F)

BLK7: Alias(s):

PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)/HD(4,MBR,0x073C329B,0x3A296000,0xEF000)

BLK6: Alias(s):

PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)/HD(3,MBR,0x073C329B,0x190FAFC1,0x2119A83F)/HD(1,MBR,0x00000000,0x190FB000,0x2119A800)

Press ESC in 1 seconds to skip startup.nsh or any other key to continue.

Shell>

I’m currently trying to set the VM to boot from an SSD I’ve got plugged into SATA port x on the motherboard. Linux sees it as /dev/sdd and detects a Windows install on that device. My VM config for the drive looks like this:

<disk type='block' device='disk'>

<driver name='qemu' type='raw' cache='none' io='native'/>

<source dev='/dev/sdd'/>

<target dev='hda' bus='sata'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

Device info

Device Boot Start End Sectors Size Id Type

/dev/sdd1 * 2048 1026047 1024000 500M 7 HPFS/NTFS/exFAT

/dev/sdd2 1026048 420456447 419430400 200G 7 HPFS/NTFS/exFAT

/dev/sdd3 420458433 975788031 555329599 264.8G f W95 Ext'd (LBA)

/dev/sdd4 975790080 976769023 978944 478M 27 Hidden NTFS WinRE

/dev/sdd5 420458496 975788031 555329536 264.8G 7 HPFS/NTFS/exFAT
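As a cross-check, the HD(...) nodes in the mapping table above can be decoded and compared with this partition table. The following is a minimal Python sketch, not part of my setup: the function name `decode_hd_node` is made up for illustration, and the size calculation assumes 512-byte logical sectors. It reads each node as HD(partition, scheme, signature, start LBA, size in sectors), with start and size given in hex.

```python
import re

# One HD(...) node: HD(partition, scheme, signature, start LBA, size in sectors).
HD_NODE = re.compile(
    r"HD\((\d+),(\w+),([^,]+),(0x[0-9A-Fa-f]+),(0x[0-9A-Fa-f]+)\)"
)

SECTOR_BYTES = 512  # assumption: 512-byte logical sectors


def decode_hd_node(device_path):
    """Decode the last HD(...) node of a UEFI device path string.

    Returns None when the path has no HD(...) node (e.g. a floppy or whole disk).
    """
    nodes = HD_NODE.findall(device_path)
    if not nodes:
        return None
    number, scheme, _signature, start, size = nodes[-1]
    start_lba = int(start, 16)
    sectors = int(size, 16)
    return {
        "partition": int(number),
        "scheme": scheme,
        "start": start_lba,
        "end": start_lba + sectors - 1,
        "sectors": sectors,
        "size_mib": sectors * SECTOR_BYTES / 2**20,
    }


if __name__ == "__main__":
    # BLK3 from the mapping table above.
    blk3 = (
        "PciRoot(0x0)/Pci(0x9,0x0)/Sata(0x0,0xFFFF,0x0)"
        "/HD(1,MBR,0x073C329B,0x800,0xFA000)"
    )
    print(decode_hd_node(blk3))
```

Decoded this way, BLK3 lines up with /dev/sdd1 (start 2048, end 1026047, 1024000 sectors), and every HD node in the mapping table reports MBR as its partition scheme.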

I would like to pass through an NVMe drive, but it’s hard to determine my NVMe drives’ IOMMU groups and PCI addresses: they are WD Blacks, and they don’t appear under that name. There is no mention of them in the lspci output or the IOMMU script output when grepping.

Here is the output from the IOMMU list script:

IOMMU Group 0 00:01.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 10 00:08.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B [1022:1454]

IOMMU Group 11 00:14.0 SMBus [0c05]: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller [1022:790b] (rev 59)

IOMMU Group 11 00:14.3 ISA bridge [0601]: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge [1022:790e] (rev 51)

IOMMU Group 12 00:18.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 0 [1022:1460]

IOMMU Group 12 00:18.1 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 1 [1022:1461]

IOMMU Group 12 00:18.2 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 2 [1022:1462]

IOMMU Group 12 00:18.3 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 3 [1022:1463]

IOMMU Group 12 00:18.4 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 4 [1022:1464]

IOMMU Group 12 00:18.5 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 5 [1022:1465]

IOMMU Group 12 00:18.6 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric Device 18h Function 6 [1022:1466]

IOMMU Group 12 00:18.7 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 7 [1022:1467]

IOMMU Group 13 00:19.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 0 [1022:1460]

IOMMU Group 13 00:19.1 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 1 [1022:1461]

IOMMU Group 13 00:19.2 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 2 [1022:1462]

IOMMU Group 13 00:19.3 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 3 [1022:1463]

IOMMU Group 13 00:19.4 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 4 [1022:1464]

IOMMU Group 13 00:19.5 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 5 [1022:1465]

IOMMU Group 13 00:19.6 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric Device 18h Function 6 [1022:1466]

IOMMU Group 13 00:19.7 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Data Fabric: Device 18h; Function 7 [1022:1467]

IOMMU Group 14 01:00.0 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Device [1022:43ba] (rev 02)

IOMMU Group 14 01:00.1 SATA controller [0106]: Advanced Micro Devices, Inc. [AMD] Device [1022:43b6] (rev 02)

IOMMU Group 14 01:00.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Device [1022:43b1] (rev 02)

IOMMU Group 14 02:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port [1022:43b4] (rev 02)

IOMMU Group 14 02:04.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port [1022:43b4] (rev 02)

IOMMU Group 14 02:05.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port [1022:43b4] (rev 02)

IOMMU Group 14 02:06.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port [1022:43b4] (rev 02)

IOMMU Group 14 02:07.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 300 Series Chipset PCIe Port [1022:43b4] (rev 02)

IOMMU Group 14 04:00.0 Ethernet controller [0200]: Intel Corporation I211 Gigabit Network Connection [8086:1539] (rev 03)

IOMMU Group 14 05:00.0 Network controller [0280]: Intel Corporation Device [8086:24fb] (rev 10)

IOMMU Group 14 06:00.0 Ethernet controller [0200]: Intel Corporation I211 Gigabit Network Connection [8086:1539] (rev 03)

IOMMU Group 15 08:00.0 Non-Volatile memory controller [0108]: Sandisk Corp Device [15b7:5002]

IOMMU Group 16 09:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1070] [10de:1b81] (rev a1)

IOMMU Group 16 09:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)

IOMMU Group 17 0a:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Device [1022:145a]

IOMMU Group 18 0a:00.2 Encryption controller [1080]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Platform Security Processor [1022:1456]

IOMMU Group 19 0a:00.3 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) USB 3.0 Host Controller [1022:145c]

IOMMU Group 1 00:01.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge [1022:1453]

IOMMU Group 20 0b:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Device [1022:1455]

IOMMU Group 21 0b:00.2 SATA controller [0106]: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] [1022:7901] (rev 51)

IOMMU Group 22 0b:00.3 Audio device [0403]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) HD Audio Controller [1022:1457]

IOMMU Group 23 40:01.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 24 40:01.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge [1022:1453]

IOMMU Group 25 40:02.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 26 40:03.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 27 40:03.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge [1022:1453]

IOMMU Group 28 40:04.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 29 40:07.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 2 00:01.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge [1022:1453]

IOMMU Group 30 40:07.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B [1022:1454]

IOMMU Group 31 40:08.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 32 40:08.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B [1022:1454]

IOMMU Group 33 41:00.0 Non-Volatile memory controller [0108]: Sandisk Corp Device [15b7:5002]

IOMMU Group 34 42:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Ellesmere [Radeon RX 470/480] [1002:67df] (rev c7)

IOMMU Group 34 42:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Ellesmere [Radeon RX 580] [1002:aaf0]

IOMMU Group 35 43:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Device [1022:145a]

IOMMU Group 36 43:00.2 Encryption controller [1080]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Platform Security Processor [1022:1456]

IOMMU Group 37 43:00.3 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) USB 3.0 Host Controller [1022:145c]

IOMMU Group 38 44:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Device [1022:1455]

IOMMU Group 39 44:00.2 SATA controller [0106]: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] [1022:7901] (rev 51)

IOMMU Group 3 00:02.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 4 00:03.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 5 00:03.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe GPP Bridge [1022:1453]

IOMMU Group 6 00:04.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 7 00:07.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]

IOMMU Group 8 00:07.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) Internal PCIe GPP Bridge 0 to Bus B [1022:1454]

IOMMU Group 9 00:08.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 00h-0fh) PCIe Dummy Host Bridge [1022:1452]
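One way to search a listing like the one above without relying on the drive's brand name is to filter on the PCI class code instead, since NVMe controllers are listed as class [0108]. The following is a rough Python sketch under that assumption. The helper names (`parse_iommu_line`, `devices_by_class`, `group_members`) are made up for illustration, and the regular expression only targets the line format shown above.

```python
import re

# Matches lines such as:
# IOMMU Group 15 08:00.0 Non-Volatile memory controller [0108]: Sandisk Corp Device [15b7:5002]
IOMMU_LINE = re.compile(
    r"^IOMMU Group (\d+) "
    r"([0-9a-f]{2}:[0-9a-f]{2}\.[0-7]) "
    r"(.+?) \[([0-9a-f]{4})\]: "
    r"(.+) \[([0-9a-f]{4}):([0-9a-f]{4})\]"
    r"(?: \(rev [0-9a-f]+\))?$"
)

NVME_CLASS = "0108"  # PCI class code shown for "Non-Volatile memory controller"


def parse_iommu_line(line):
    """Split one listing line into its fields; return None if it does not match."""
    match = IOMMU_LINE.match(line.strip())
    if match is None:
        return None
    group, address, class_name, class_code, name, vendor_id, device_id = match.groups()
    return {
        "group": int(group),
        "address": address,
        "class_name": class_name,
        "class_code": class_code,
        "name": name,
        "vendor_id": vendor_id,
        "device_id": device_id,
    }


def devices_by_class(lines, class_code):
    """Return every parsed device whose PCI class code equals class_code."""
    parsed = (parse_iommu_line(line) for line in lines)
    return [dev for dev in parsed if dev and dev["class_code"] == class_code]


def group_members(lines, group):
    """Return every parsed device in the given IOMMU group."""
    parsed = (parse_iommu_line(line) for line in lines)
    return [dev for dev in parsed if dev and dev["group"] == group]


if __name__ == "__main__":
    sample = [
        "IOMMU Group 15 08:00.0 Non-Volatile memory controller [0108]: Sandisk Corp Device [15b7:5002]",
        "IOMMU Group 33 41:00.0 Non-Volatile memory controller [0108]: Sandisk Corp Device [15b7:5002]",
    ]
    for dev in devices_by_class(sample, NVME_CLASS):
        print(dev["group"], dev["address"], dev["name"])
```

In the listing above, the only class [0108] entries are the two Sandisk Corp devices at 08:00.0 (group 15) and 41:00.0 (group 33), each alone in its group. Those may well be the two WD Black drives, but that is an inference from the class code. The listing itself does not say so, and it does not show which of the two holds the Linux install.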

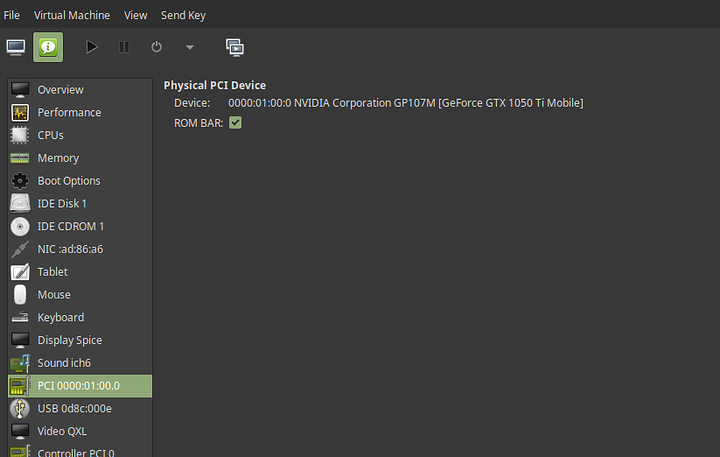

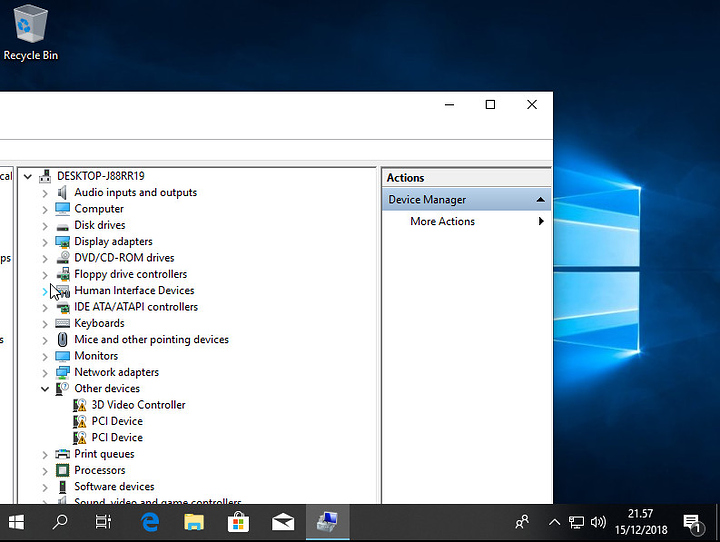

I’m getting successful output from the GPU on a secondary monitor when launching the VM, which leads me to believe the GPU passthrough itself is fine.

Specs listed below:

Ryzen Threadripper 1920X - ASRock X399 Taichi (full size)

8 GB RAM (I’m getting more; I’m just using this for setup)

RX 480 8 GB (host)

GTX 1070 (passthrough) - *I’ve done the Code 43 workaround

2 x WD Black 500 GB NVMe drives: Linux runs off one, and I want the other for Windows

2 x 4 TB Drives (Raid 1) - irrelevant

PSU - irrelevant

I have no other devices connected to the MB.

Apologies for the long post, first-time poster here!

If you need config files etc. please ask.

Sad news.
