I’ve been dealing with containers lately, as can be seen above. Out of curiosity, I wanted to see whether GitLab could run in LXD. It seems Redis has some limitation when it runs inside a container: GitLab’s systemd service depends on redis-gitlab, which never starts.

I’ve tried both NixOS and Debian 12 to run GitLab in LXD, with no luck: literally the exact same issue. That led me to try LXD VMs, which are supposedly full QEMU VMs but with a lot less of the usual libvirt cruft. Well, obviously LXD won’t run as it should on unsupported systems. In my case, it refuses to launch VMs because some QEMU check fails, despite me running QEMU 7.1, LXD 5.9 and LXC 5.0.1.

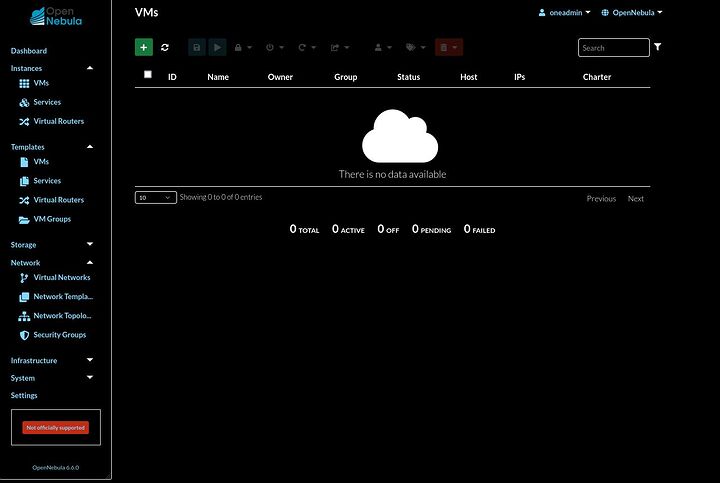

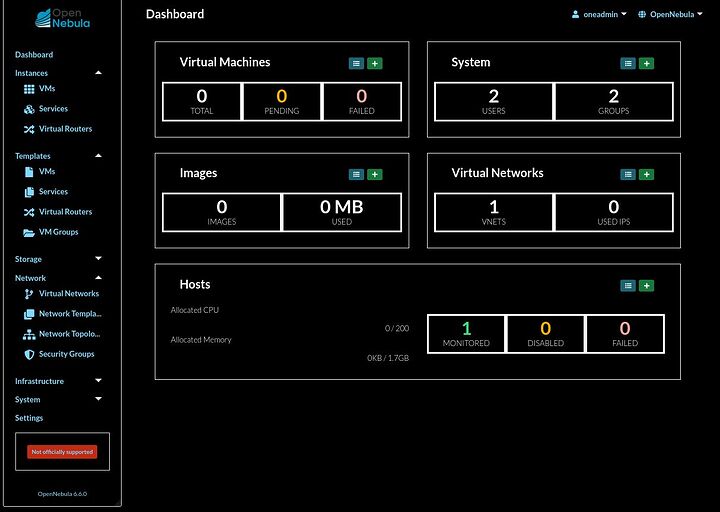

This is part of the reason I wanted a Firecracker hypervisor, but the only ways I knew to get one were AWS or OpenNebula, which is why I was meddling with OpenNebula a few weeks back. But now I’ve just discovered microvm.nix. It can run everything from full QEMU to Firecracker and crosvm, along with other hypervisors I’d never heard of. crosvm is interesting; I’d need to read more about how it compares to Firecracker. It seems Firecracker started as a fork of crosvm, which I wasn’t aware of.

Unfortunately, it seems the only ways to live-migrate whole OSes right now are QEMU’s own tooling and, for Linux and OCI containers, CRIU. In theory CRIU should be able to migrate any kind of in-memory state, but I haven’t yet found anyone trying to migrate Firecracker. The idea behind “serverless” workloads is that they’re ephemeral and short-lived: you spin up microVMs on demand and kill them when demand drops.

But that’s lame. My goal is to use existing technologies to build redundant, highly available self-hosting infrastructure at home without breaking the bank, using as few resources as possible.

If the hypervisor can’t live-migrate the programs you want to run, the job has to move to the guest OSes, clustered together so the service becomes a cluster resource. Pacemaker and corosync are certainly good tools for this, but I’m not convinced they react to disruptions quickly enough. The idea is: a service goes down and immediately gets relaunched on another host.
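To make that idea concrete, here’s a toy model of guest-level failover. It’s a sketch under my own assumptions, not how Pacemaker or corosync actually work: each host reports a heartbeat timestamp, a host that misses the (hypothetical) `timeout` counts as dead, and each of its services is restarted on whichever live host currently runs the fewest services. A real cluster also has to fence the dead node first, so two copies of a service never run at once.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    # Hypothetical model: a host and the services it currently runs.
    name: str
    last_heartbeat: float  # seconds on some shared monitoring clock
    services: set[str] = field(default_factory=set)


def is_alive(host: Host, now: float, timeout: float) -> bool:
    # A host counts as alive if its last heartbeat is recent enough.
    return now - host.last_heartbeat <= timeout


def failover(hosts: list[Host], now: float, timeout: float) -> dict[str, str]:
    """Relaunch every service from dead hosts on the least-loaded live host.

    Returns a mapping of service name -> host it was moved to.
    """
    live = [h for h in hosts if is_alive(h, now, timeout)]
    if not live:
        raise RuntimeError("no live host left to take over")
    live_names = {h.name for h in live}
    moved: dict[str, str] = {}
    for host in hosts:
        if host.name in live_names:
            continue
        # Sorted so placement is deterministic between runs.
        for svc in sorted(host.services):
            # Pick the live host with the fewest services (ties broken by name).
            target = min(live, key=lambda h: (len(h.services), h.name))
            target.services.add(svc)
            moved[svc] = target.name
        host.services.clear()
    return moved


hosts = [
    Host("a", last_heartbeat=0.0, services={"nginx", "postgres"}),
    Host("b", last_heartbeat=9.0, services={"mail"}),
    Host("c", last_heartbeat=10.0),
]
moved = failover(hosts, now=10.0, timeout=3.0)
print(moved)  # {'nginx': 'c', 'postgres': 'b'}
```

Host `a` stops heartbeating, so `nginx` lands on the idle host `c` and `postgres` on `b`. For something stateless like a webserver that’s the whole story; a database restarted this way still has to recover its own state.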

With HA at the hypervisor level, when a host dies you already have the same data copied in memory on another system, and you just resume activity against the same disk resources. In an OS cluster, your program dies and an identical instance is launched immediately to take over the load. But your service needs to be able to tolerate disruptions. A webserver, for example, dying and being relaunched on another host won’t have much impact beyond maybe a user’s current session; it’s not going to be very disruptive.

But with something like a database, say PostgreSQL, when you launch the service on another host, it sees that it wasn’t shut down cleanly and replays its write-ahead log (WAL) to recover. That can cause a big disruption while it works out what happened and resumes activity. A PostgreSQL cluster with synchronous streaming replication helps, but if your service doesn’t have built-in support for something like that, you’re kinda screwed without live migration when a host dies.
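Roughly, that crash recovery is a redo pass: the data files are only guaranteed current as of the last checkpoint, so everything logged after it gets replayed. The toy below is my own simplification with a made-up record format (PostgreSQL’s real WAL works on pages and has far more record types); the point is just that recovery work grows with how much was written since the last checkpoint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogRecord:
    # Made-up record format: "set key to value", tagged with a sequence number.
    lsn: int
    key: str
    value: str


def recover(
    data_at_checkpoint: dict[str, str],
    wal: list[LogRecord],
    checkpoint_lsn: int,
) -> tuple[dict[str, str], int]:
    """Redo every logged change newer than the last checkpoint.

    Returns the recovered data and the number of records replayed.
    """
    recovered = dict(data_at_checkpoint)
    replayed = 0
    for rec in sorted(wal, key=lambda r: r.lsn):
        if rec.lsn > checkpoint_lsn:  # older changes are already on disk
            recovered[rec.key] = rec.value
            replayed += 1
    return recovered, replayed


wal = [
    LogRecord(1, "balance", "100"),  # covered by the checkpoint
    LogRecord(2, "balance", "80"),
    LogRecord(3, "owner", "alice"),
]
data, replayed = recover({"balance": "100"}, wal, checkpoint_lsn=1)
print(data, replayed)  # {'balance': '80', 'owner': 'alice'} 2
```

The more that was written since the last checkpoint, the longer that loop runs before the database is usable again, which is the window of disruption I mean.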

Hosts dying usually isn’t as big a problem as people make it out to be, but it does happen, and I suspect it happens more often in a home environment. Many people with homelabs skip HA entirely because of the cost, even when their services are important, like self-hosted email.

Well, for that matter, many VPS providers don’t offer HA either: if the host goes down, their SLA only promises your VM will be back up within at most n hours. And if they miss their SLA, you don’t really get compensated; they just offer a discount as an apology, but your users won’t be happy while the service they rely on is unavailable. I emphasize VM because if your service doesn’t come back after a crash, fixing it is still up to you.
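Some rough arithmetic shows why “at most n hours” isn’t very comforting. This sketch assumes a 730-hour month (8760 hours / 12) and a worst case where every outage takes the full SLA window to restore; the outage count, the 4-hour window and the availability target below are made-up examples, not figures from any provider.

```python
HOURS_PER_MONTH = 365 * 24 / 12  # 730 hours, a common approximation


def monthly_availability(outages: int, restore_hours: float) -> float:
    """Worst-case availability when each outage takes the full SLA window."""
    downtime = outages * restore_hours
    return max(0.0, 1.0 - downtime / HOURS_PER_MONTH)


def allowed_downtime_hours(target: float) -> float:
    """Monthly downtime budget for an availability target such as 0.999."""
    return (1.0 - target) * HOURS_PER_MONTH


# One host failure, restored at the very end of a hypothetical 4-hour window:
print(f"{monthly_availability(1, 4):.2%}")  # 99.45%
# "Three nines" only leaves about 44 minutes per month:
print(f"{allowed_downtime_hours(0.999) * 60:.1f} min")  # 43.8 min
```

A single host failure handled at the edge of the SLA already blows through a three-nines budget several times over.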

Anyway, this rant wandered off in too many directions. I mostly wanted to talk about Linux containers and lightweight virtualization. NixOS certainly does a lot of interesting things, and I’m now undecided whether I want to run a NixOS hypervisor or keep pursuing OpenNebula. OpenNebula is cloud infrastructure software, and managing clouds isn’t exactly my thing. It can work as datacenter software too, but that’s using a sledgehammer to drive small nails: it works, but it’s overkill.

Now that I’ve found microvm.nix, I could realistically run everything I need on a single host. OpenNebula would need multiple hosts (or at least VMs) for the different contexts: one hypervisor for VMs, one for microVMs and another for containers, when microvm.nix and containers.nix would do just fine for what I need.

Don’t get me started on containers.nix. It uses systemd-nspawn, but I haven’t looked into how to make it work with bridges instead of the default veth / NAT setup. I prefer everything to sit on the main network I assign it to rather than follow Docker’s hidden, inaccessible network model. I can’t be bothered to deal with port-forwarding, and I’d rather use default ports with a different IP and hostname per container than map different ports to each container.

The only problem I see with containers.nix is that the containers share the host’s Nix store. That saves space, but might cause conflicts on access. If microvm.nix proves lightweight enough compared to containers, it’d probably be the way forward: a kernel image shouldn’t be too large, and there should be no need to emulate a lot of legacy cruft.

Thinking about it, I feel I’m very biased towards Nix now. Seems I’ve dug myself into a hole I can’t climb out of. I’m also interested in learning more about Nix flakes and NixOps. From what I can tell, some people have already done the work on terraform-nixos, since Terraform is more of a standard than NixOps. There’s a lot to learn, but I feel NixOS would be easier and technically more advantageous than Ansible: you don’t have to write extra code to remove previously installed things, because Nix takes care of that and you can garbage-collect later.
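Here’s a rough sketch of the difference I mean, modeling a system as nothing more than a set of package names. It’s a simplification: Nix doesn’t diff package lists, it builds a whole new system generation from the config, and Ansible’s package modules do support `state: absent`, but you have to write those removal tasks yourself. The function names are made up for illustration.

```python
def apply_imperative(installed: set[str], tasks: list[tuple[str, str]]) -> set[str]:
    """Ansible-style run: only the listed tasks happen, everything else stays."""
    state = set(installed)
    for pkg, wanted in tasks:
        if wanted == "present":
            state.add(pkg)
        elif wanted == "absent":
            state.discard(pkg)
    return state


def apply_declarative(declared: set[str]) -> set[str]:
    """Nix-style rebuild: the new generation holds exactly what is declared."""
    return set(declared)


def collect_garbage(generations: list[set[str]], keep: int) -> set[str]:
    """Packages only referenced by generations older than the newest `keep`."""
    if keep < 1:
        raise ValueError("keep at least the current generation")
    live = set().union(*generations[-keep:])
    return set().union(*generations) - live


old = {"nginx", "redis"}
# The new config drops redis and adds postgresql.
tasks = [("nginx", "present"), ("postgresql", "present")]
print(sorted(apply_imperative(old, tasks)))  # ['nginx', 'postgresql', 'redis']
new = apply_declarative({"nginx", "postgresql"})
print(sorted(new))  # ['nginx', 'postgresql']
print(collect_garbage([old, new], keep=1))  # {'redis'}
```

With the imperative run, `redis` lingers until someone writes the removal task; with the declarative one it simply isn’t in the new generation, and the old copy only leaves the disk once the older generation is garbage-collected.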

In the pursuit of efficiency, let’s say we use Linux containers (or containers.nix) for most services. MicroVMs still let us work around the containers’ limitations, so at the very least they should be a complementary tool.