Print pays my salary (or at least a lot of it), but none of that has anything to do with CUPS…

Hey, don’t attribute that to me! I had the same thought though…

My issue was that a scrub was preventing SMART tests from completing.

WD Greens… kill them with fire…

Err, why the hate? They’re not “enterprise” hardware (but neither are WD Reds…), but I’m guessing the poster mostly meant they were now using ECC memory etc. when referring to “enterprise hardware”, as that is likely what one would skimp on when building a NAS on a tight budget (eg. by repurposing one’s old desktop).

Wouldn’t use them for a corporation, but for private use if on a budget? Why not? Have a few that have over 6 years of uptime and if doing what the poster suggested, namely tuning the idle timer, they’ll likely last even longer. I feel the need for more storage would be the more likely reason to replace them over disk failure.

Obviously buying drives for the correct application is preferable since it means less fighting the firmware to get it to do something it wasn’t designed for, so I wouldn’t call it a great idea, necessarily, and I definitely wouldn’t recommend it to the unknowledgeable, but it’s not like they’ll fail within 10 minutes and eat all your data, or anything along those lines…

That is, unless more recent Greens have additional undesirable properties I’m not aware of, of course. Mine are, after all, quite old…

I figured that literally burning them was likely not on the agenda

If sarcasm was intended then it totally passed by this non-native English speaker, as it still came across as saying that using WD Greens was considered rather a bad idea.

It wasn’t sarcasm, just an overstatement. I guess you can make greens work but i wouldn’t say it’s a good idea. A good idea would be to use drives that don’t need firmware tweaks to function reliably for your use-case.

closes HxD

Yes, it was hyperbole but “why the hate”?

Because greens are a very poor choice for a NAS, and they aren’t even that much cheaper than something that, as mentioned, doesn’t have deliberately RAID-Crippled firmware in it from the factory.

Does WD even make green drives anymore? I’ve seen a green SSD recently but no spinning.

Hopefully not.

It’s not just greens either.

I had a number of WD blacks when i ran hard drives (they were fast, reasonably quiet, etc. - used to be my “go to” drive), until WD decided to cripple the firmware in them via a change that caused them to continually drop out of RAID. I.e., they had drives that originally worked in RAID, which they then crippled with a firmware update as a running model change.

I bought new drives because i thought i was having hard drive failure in my RAID1, and they did the same thing. Then later i discovered WD had deliberately crippled the firmware so they could charge more for reds with the crippling removed.

Fuck WD. They are blacklisted as a company from anything i build.

That sort of gets at the heart of it. You may be able to get something to work in an environment outside of its intended use, but down the line, something may change and break that functionality. And when that happens, you won’t have any recourse. It’s not guaranteed to bite you in the ass, but it is relatively high risk.

Yeah, and that’s fine.

Thing is, these were “performance” desktop drives running with intel’s desktop RAID software. And WD made no announcement that they were going to do this.

So yeah, pretty pissed at WD about that.

As you say though, this is the risk you run using consumer gear for server-ish stuff.

Hmm, this has me thinking then, do either Seagate or Samsung do this to their spinning rust HDDs?

I have some Software RAID arrays I use in my gaming rig under Windows 10 Pro and they have been working fine for a couple of years now.

I’m not sure, but the specific feature that WD disabled was TLER (time limited error recovery).

I think seagate and others call the same feature something else.

Essentially as i understand it (it was 10 years ago when it started or so and i’m not educated on the specifics), the drives without TLER will not “wait” long enough for “recovery” (or spin up or whatever) in a RAID set, and the OS/PC (or hardware RAID controller) assumes that the drive has dropped out of the array. Thus necessitating a RAID rebuild - on a regular basis. In a RAID0, maybe it looks like your RAID is toast.

The drive is fine… and a new drive will do the same damn thing.

ZFS is unaffected by this as

- ZFS will not require a full disk rebuild if a drive goes away and comes back, just a differential re-silver (which will be instantaneous if the drive takes a second or two to “recover” for example).

- ZFS on Linux and FreeBSD will wait a lot longer due to OS design before assuming the drive is dead in the first place

edit:

wiki

IIRC drives without TLER will wait indefinitely on a sector. TLER waits (usually 7 seconds) and then reports a bad sector and moves on.

Apparently you can enable TLER in the firmware on the green drives, but you have to do it in Windows or FreeDOS and there was some mention of it possibly voiding the warranty. I think the same goes for the blacks.

Ah that would explain why the OS thinks the drive dropped - if it hangs indefinitely.

Linux (and FreeBSD?) software raid/ZFS times out after 30 seconds irrespective of what the drive says (if i understand).
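To make the timeout race above a bit more concrete, here is a rough Python sketch, not a definitive tool. It assumes a Linux box where the per-device command timeout is exposed at `/sys/block/<dev>/device/timeout`, and a drive that accepts `smartctl -l scterc` (SCT Error Recovery Control, the generic name for what WD calls TLER). The function names and the default of 70 (units of 100 ms, so 7 seconds) are my own choices for illustration.

```python
from pathlib import Path
from typing import List, Optional


def kernel_timeout_seconds(device: str) -> int:
    """Read the Linux command timeout for a block device such as 'sda'.

    Assumes the usual sysfs layout for SCSI/SATA disks.
    """
    return int(Path(f"/sys/block/{device}/device/timeout").read_text().strip())


def scterc_command(device_path: str, deciseconds: int = 70) -> List[str]:
    """Build (but do not run) a smartctl call that sets read/write ERC.

    smartctl expects the limits in units of 100 ms, so 70 means 7.0 seconds.
    """
    return ["smartctl", "-l", f"scterc,{deciseconds},{deciseconds}", device_path]


def drive_may_be_dropped(erc_deciseconds: Optional[int], host_timeout_s: float) -> bool:
    """Return True if the drive could still be retrying when the host gives up.

    erc_deciseconds is None when the drive has no recovery time limit
    (TLER/ERC disabled), in which case any finite host timeout can be exceeded.
    """
    if erc_deciseconds is None:
        return True
    return erc_deciseconds / 10 >= host_timeout_s
```

With a 7 second limit on the drive and a 30 second timeout on the host, `drive_may_be_dropped(70, 30)` is false, which matches the point that the drive reports the bad sector and moves on before anything gives up on it. With no limit at all, the answer depends entirely on how patient the host or RAID controller is.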

Either way; my RAID array went from perfectly working, before WD disabled TLER in the blacks, to continually broken and i spent hours and a number of new drive purchases before figuring it out (and putting them in a FreeNAS box, where they lasted for about 6 years more).

Well, wouldn’t say the hate is all that hyperbolic if you blacklisted them as a vendor. Given what happened I’d likely do the same as well, and I expected something like this to be the basis of such a reply, so this is exactly the discussion (and information) I hoped to get.


Most of my drives are ancient (going over 7 years of uptime), so they might have dodged the firmware stuff. Just curious how they managed to do the firmware update though, Windows Update? Or was it an unlisted change? Kinda don’t see how they’d force firmware updates through on *nix.

My experience with Greens is mostly that their firmware sleep behaviour was harmful, especially in appliances. My parents bought them for their Synology and they’d spin up and down all day (quite literally), which killed them in short order.
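For anyone wanting to put a number on that kind of wear, a back-of-the-envelope sketch in Python follows. It assumes you have already read a cycle counter and the power-on hours from the drive's SMART data yourself; the function names are made up for illustration, and the rated cycle figure has to come from the drive's datasheet, since I am not asserting one here.

```python
def cycles_per_hour(cycle_count: int, power_on_hours: int) -> float:
    """Average number of spin-down/park cycles per powered-on hour."""
    if power_on_hours <= 0:
        raise ValueError("power_on_hours must be positive")
    return cycle_count / power_on_hours


def hours_until_rating(cycle_count: int, power_on_hours: int, rated_cycles: int) -> float:
    """Estimate powered-on hours left before the counter reaches rated_cycles.

    Assumes the drive keeps cycling at its historical average rate;
    rated_cycles is whatever the datasheet states for the model.
    """
    rate = cycles_per_hour(cycle_count, power_on_hours)
    if rate == 0:
        return float("inf")
    remaining = max(rated_cycles - cycle_count, 0)
    return remaining / rate
```

A drive that cycles constantly in an appliance reaches any rating far sooner than one whose idle timer has been tuned, which is the practical argument for that tweak. Exceeding a rating does not mean the drive dies on that day, so treat the result as a rough indicator only.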

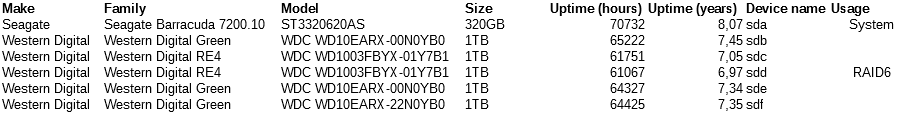

As for desktop drives, I’ve simply started avoiding WD ones as they’ve been falling like flies even with regular desktop usage, so putting them in something more demanding isn’t even on the radar. The enterprise drives (RE4) otoh have been doing great.

FWIW this is the current hdd setup of my server:

The reason for the gula build is to get data off of that ancient array (and also moving to ZFS, which wasn’t even an option when I built this system)

Actually, I have some really, really old drives (think ~1 GB IDE drives) lying around that I tested for fun a little while ago (as in: will they even spin up?). Maybe those results would be interesting, or at least entertaining, to see.

The potential issue would be if a drive died, you replaced it and they had changed something in a hardware revision that causes it to behave differently. You’d have no way of knowing, and they’d have no obligation to document it since you’re using the drive for something it’s not intended for.

Anyway, like I said before, it’s not that it’s guaranteed to fail, just higher risk.

i might have asked this here before, but is the “Register client hostname from DHCP requests in USG DNS forwarder” option in Unifi unreliable for anyone else? It works fine for some of my hosts, but not at all for others. I don’t get it. There used to be an explicit switch to use dnsmasq, but they removed it at some point. Not sure what’s going on under the hood now. /etc/hosts is basically empty, so that’s not what they’re using…

On the bright side, Ubiquiti has finally implemented sane update notifications.