@CHESSTUR I haven’t finalised all the details yet, just worrying about the CPU right now, one thing at a time. I’ll definitely be getting some nice PSU, not trying to cheap out at all but don’t want to get some overpowered beast. Don’t want to waste money. Basically, my use is quite simple:

My mobo can support 12 SATA drives out of the box which is what I’ll be getting to start out with.

If or when I expand, I’ll add another 12 drives as I’ll be using ZFS. So I’ll need 2 more HBAs for that.

I’ve got a Quadro K4000 graphics card which I want to use for compute. According to spec sheets, it consumes 80W max. [/quote]

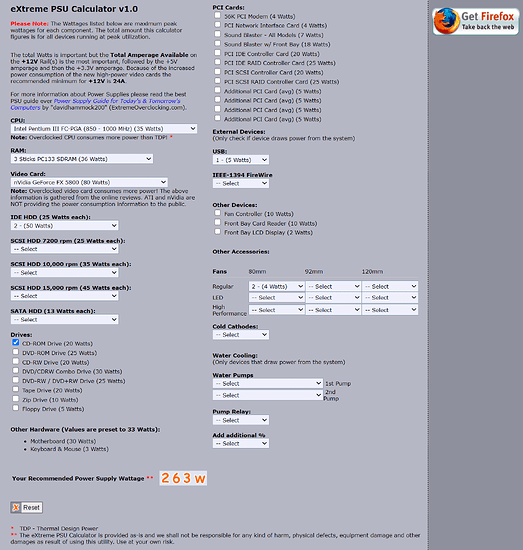

Okay, so tack another 100 Watts onto that 450 figure and start adding the wattage per hard drive and the rest of the peripherals you’ll be using. It’ll be easy to find yourself in the ballpark of, say, 750 to 800 Watts nominal. This is why I recommend erring on the side of caution. You may be happy with only 24 drives for the present, but what about future proofing? With the smart PSUs they use today, it’s not like your system will always be eating that much power anyway unless you’re running everything full bore 24/7, including your CPU. What would you call an overpowered beast? A modest Threadripper would tear my beast to shreds.
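If it helps to see that arithmetic laid out, here is a rough sketch in Python. The 450 W base and the extra 100 W come from the figures above; the 8 W per drive is purely my assumption for a spinning 3.5" drive under load, and spin-up draw can be noticeably higher, so check the datasheets for the drives you actually buy. The function name is made up for illustration.

```python
# Rough PSU budget sketch. Every number here is an assumption or a
# figure quoted in the thread, not a measurement of any real system.

BASE_SYSTEM_W = 450   # the "450 figure" discussed above
EXTRA_W = 100         # the additional 100 W suggested above
DRIVE_COUNT = 24      # 12 drives now plus 12 after the planned expansion
PER_DRIVE_W = 8       # ASSUMED active draw per 3.5" drive; varies by model


def estimate_psu_watts(base_w, extra_w, drive_count, per_drive_w):
    """Return a nominal wattage estimate: base + extra + all drives."""
    return base_w + extra_w + drive_count * per_drive_w


total_w = estimate_psu_watts(BASE_SYSTEM_W, EXTRA_W, DRIVE_COUNT, PER_DRIVE_W)
print(f"Estimated nominal load: {total_w} W")
```

With those assumed inputs the estimate lands just under 750 W before you add fans, HBAs, or any other peripherals, which is why a bit of headroom is worth paying for.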

I run 34 drives on one PC. 4 of those drives are external. I use them for backups. So, 30 inside the case. The case cost me around $300 Canadian. (Admittedly, that was a good deal back then.) Here’s the case:

So now, the math: 12 TB EXOS under sled #5 (1). 10 TB HGST in sled #5 (2). Sleds 1-4 hold Toshiba CMR drives, 2x3TB and 2x4TB, both pairs in RAID 1. That’s a total of 6. A small RAID cage in the front for RAID 0 (8). Another RAID cage in the front connected to an LSI HBA card in IR mode, sporting 4 Velociraptors and 4 SSDs in RAID 10 (that makes another 8), so now we’re at 16 drives. Open the door on the case and there’s another cage inside supporting an additional 6 drives. That’s 22. In addition to this I have another 8 drives natively connected to SATA, all of them being SSDs because they’re slim and can sit nice and neatly on top of my Blu-ray CD/DVD-ROM drive, which, in itself, is yet another drive. So, 22 + 9 = 31. Then there are my two NVMe drives, so that’s 33. Now, I just recalled that I unplugged an SSD from my HBA IT card because port 6 kept hijacking the port 0 position in my interface for some mysterious reason, so I’m sitting at 33 drives (not counting the 4 external hard drives I use for backup). So all 33 drives are inside the case with one more port to spare and plenty of extra SATA power handy. All my drives are hot plugged and a good many of them are in sleds.
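For anyone who wants to check the running tally, here it is as a small Python sketch. The group labels are my own shorthand and the counts are simply the ones given in the paragraph above; nothing here is read from real hardware.

```python
# Running tally of the internal drives described above.
# Labels are shorthand; counts are taken from the description.

drive_groups = [
    ("EXOS + HGST at sled #5", 2),
    ("Toshiba CMR drives in sleds 1-4", 4),
    ("Front RAID 0 cage", 2),
    ("Front cage on LSI HBA (4 Velociraptors + 4 SSDs)", 8),
    ("Inner cage behind the door", 6),
    ("Native SATA (8 SSDs + 1 optical drive)", 9),
    ("NVMe drives", 2),
]


def running_tally(groups):
    """Return (label, count, cumulative_total) for each group in order."""
    total = 0
    rows = []
    for label, count in groups:
        total += count
        rows.append((label, count, total))
    return rows


for label, count, total in running_tally(drive_groups):
    print(f"{label:50s} +{count:2d} = {total:2d}")
```

The cumulative column should pass through the same checkpoints as the text: 6, 8, 16, 22, 31, and 33.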

Sure. It’s not a server case. It’s an EATX case and it serves my purposes. My drives are surprisingly cool except for the NVMe, which I have little control over. (I suppose I could invest in some thermal pads.) I made several modifications in the way of air cooling, but the whole thing runs amazingly cool and quiet compared to a server case. I never experience thermal throttling and my CPU is OC’d to a modest 4.2 GHz, which isn’t too shabby for an old 6900K chip.

So I recommend getting an old EATX case regardless of the size of your mobo and a CPU that can give you 40 lanes of bandwidth (or more). You don’t have to go with the latest, greatest, bleeding-edge, state-of-the-art hardware to do this. In fact, if you shop around you can get a used CPU that can give you this sort of spread for a song. Some folks would call my PC an old relic. Others would call it an overpowered beast. I don’t care what they call it as long as it serves my needs.
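To show why the lane count matters, here is a quick lane budget sketch in Python for the build described in the quoted post (a Quadro K4000 plus two HBAs). The link widths are my assumptions: x16 for the graphics card, x8 per HBA, and x4 for an NVMe drive I added as an example. How your slots are actually wired depends on the motherboard, so treat this as a back-of-the-envelope check only.

```python
# Back-of-the-envelope PCIe lane budget. Link widths are ASSUMED typical
# values, not taken from any specific motherboard manual.

CPU_LANES = 40  # the 40-lane class of CPU recommended above

planned_devices = {
    "Quadro K4000": 16,   # assumed x16 link
    "HBA #1": 8,          # assumed x8 link
    "HBA #2": 8,          # assumed x8 link
    "NVMe SSD": 4,        # hypothetical extra device, assumed x4 link
}


def lanes_remaining(cpu_lanes, devices):
    """Return the lanes left after every device gets its full link width."""
    return cpu_lanes - sum(devices.values())


spare = lanes_remaining(CPU_LANES, planned_devices)
print(f"Lanes used: {CPU_LANES - spare}, lanes spare: {spare}")
```

Under these assumptions everything fits with a few lanes to spare, whereas a CPU with far fewer lanes would force some of those cards to run at reduced width.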

There are plenty of people here that can help you with building a server. I’m just offering a little in the way of advice where I have direct experience and knowledge. I’ve had a lot of experience with hardware but when it comes to setting up servers I’ll defer to the experts here. I hope this helps.