If you shop around you could prob find a used one online. Never regretted buying it. The thing has so much storage capacity and those huge, slow-moving fans do a swell job keeping it cool. I realize it does look a bit militant and perhaps slightly vintage but it suits my needs and that’s what matters. I’ve made many mods to it but it takes a kickin’ and keeps on tickin’.

IMO ZFS shouldn’t be used without a UPS regardless of the media; the majority of unrecoverable failures with ZFS I’ve seen are from unexpected power losses. I say unrecoverable, but on a few of those we were able to use a data recovery service to recover most of the dataset.

Noted.

Getting back to my OP. I just wanted to document what I’ve dug up over the weekend.

1.) I found:

Which basically answers my question:

What is the maximum power consumption for my processor?

Under a steady workload at published frequency, it is TDP. However, during turbo or certain workload types such as Intel® Advanced Vector Extensions (Intel® AVX) it can exceed the maximum TDP but only for a limited time, or

- Until the processor hits a thermal throttle temperature, or

- Until the processor hits a power delivery limit.

So TDP is the power consumption under a steady full load at the published frequency. For some CPUs that I saw on the Intel website, exact idle and turbo power consumption figures are shown:

Not sure why Intel isn’t consistent in its published figures but basically, that’s that.

2.) I was obsessed with finding the ultimate power supply for my build i.e. so that it lived in the efficiency sweet spot for most of its life, thereby minimising power consumption. I found this book (which is free btw):

Pages of interest are 133-135. Basically, you can see that oversizing a PSU at system idle doesn’t significantly increase power consumption due to inefficiencies. Similarly operating at the limits of PSU capabilities (i.e. “optimally sized PSU”) vs running an overpowered PSU in the efficient range, saved perhaps 10W. Basically, don’t worry too much about this unless you’re running hundreds of servers and have no choice but to optimise PSUs for your workload and system setup.
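To put rough numbers on that: wall draw is simply DC load divided by PSU efficiency at that load. A minimal Python sketch follows. The efficiency values are placeholder assumptions for illustration only, not figures from the book or from any real PSU.

```python
def wall_power(dc_load_w: float, efficiency: float) -> float:
    """Return AC power drawn at the wall for a DC load and a PSU efficiency in (0, 1]."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_load_w / efficiency


# Hypothetical example: the same 100 W DC load on two PSUs.
# Both efficiency values are assumptions, not measurements.
oversized = wall_power(100, 0.88)    # large PSU running at a low load fraction
right_sized = wall_power(100, 0.92)  # smaller PSU nearer its efficiency sweet spot

print(f"oversized: {oversized:.1f} W, right-sized: {right_sized:.1f} W, "
      f"difference: {oversized - right_sized:.1f} W")
```

Swap in the efficiency curve from your candidate PSU's test report to see how much the sizing choice actually costs at your idle and load points.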

For a few years now, TDP has been more of a suggestion than a limit. It’s still a good number to plan around, as long as you understand its limitations and don’t rely on it.

It’s not an official standard, so both Intel and AMD have been quietly playing around with its definition to get better marketing numbers.

Intel has done most of the rule bending, due to lithography issues and general desperation to match AMD’s performance edge.

In short, there are no hard and fast rules linking actual power consumption to TDP. If you are overclocking, you can throw the number in the bin wholesale.

If you are building around an SPR CPU, just use the top-of-the-line number, 350W, and be done with it. If there are actual guidelines, they are usually proprietary know-how held by system integrators.

Supermicro board documentation might help; they are reasonably open with customers.

EDIT → actual PSU sizing notes.

After rereading the thread, I’ve noticed potentially dangerous omissions in the discussion.

- Power supply calculators take a trivial approach and make silent assumptions about the final use. They are not good for specialized use cases like an actual server.

No power calculator is going to warn you or check whether your PSU can actually handle powering 24 HDDs simultaneously.

The listed PSU wattage is no guarantee that the internal design provisions enough power on the SATA power rails. That’s something you have to check in the manual against the low-level technical specs.

- Perform detailed planning per PSU power rail. Ideally, take the worst-case load of each component and add it all up, per rail. Then compare it to the design specs of the candidate PSU. If such information is not available for the target PSU, remove it from consideration.

- Select from known-good PSU suppliers and target at least 80 Plus Gold, ideally 80 Plus Platinum PSUs. The price difference is small and this is the worst area to be penny-pinching, especially considering the value of the rest of the platform.
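The per-rail planning step can be sketched in a few lines of Python. Everything numeric here is a hypothetical placeholder (component currents and PSU rail limits alike), and the names `rail_totals` and `check_psu` are made up for this example; substitute worst-case values from your own datasheets.

```python
from collections import defaultdict


def rail_totals(components):
    """Sum worst-case current (amps) per rail.

    components: iterable of (name, count, {rail: amps_per_unit}).
    """
    totals = defaultdict(float)
    for _name, count, draw in components:
        for rail, amps in draw.items():
            totals[rail] += count * amps
    return dict(totals)


def check_psu(totals, psu_limits):
    """Return {rail: (needed, limit, ok)}; a rail missing from the PSU spec fails."""
    report = {}
    for rail, needed in totals.items():
        limit = psu_limits.get(rail)
        report[rail] = (needed, limit, limit is not None and needed <= limit)
    return report


# Hypothetical figures; replace with datasheet worst-case values.
build = [
    ("HDD (spin-up)", 24, {"12V": 2.0, "5V": 0.7}),
    ("motherboard + CPU", 1, {"12V": 30.0, "5V": 3.0}),
]
psu = {"12V": 83.0, "5V": 20.0}  # hypothetical per-rail limits in amps

for rail, (needed, limit, ok) in check_psu(rail_totals(build), psu).items():
    print(f"{rail}: need {needed:.1f} A, limit {limit} A -> {'OK' if ok else 'OVER'}")
```

Treating a rail that is absent from the PSU spec as a failure mirrors the advice above: if the information isn't published, drop that PSU from consideration.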

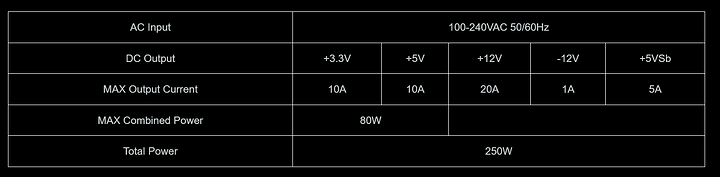

E.g. operating specs for the HDPLEX 250W GaN adapter:

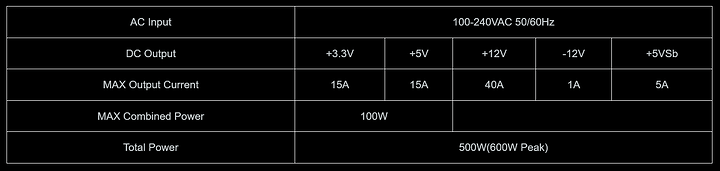

500W variant:

Now notice the +5V rail power and amperage limits. Guess what uses it? SATA power.

@greatnull Thanks, researching PSU specs right now. This has always been a mystery to me. Time to change that.

@twin_savage Just watched this video:

And just wanted to spread the word. ATX 3.0 is unlikely to take off. Refer to 8:07. Basically, there’s going to be a new connector i.e. 3.1.

Does having a ZIL mitigate this problem?

ATX 3.0

That’s a very wrong conclusion to take from the video. ATX 3.0 is an extension of the older standard and has been shipping since the 4000-series GPU launch, where it was needed.

The ATX12VO standard hasn’t made any headway in the consumer sector because it requires both new PSUs and new motherboards, and the motherboard redesign will be radical.

Outside the consumer sector, it has been present for almost a decade in the OEM sector. Guess what was inside those Lenovo, Dell and HP workstations with proprietary motherboards and PSUs?

12VO, because it was both cheaper to build and more power efficient. While extensibility is then limited to what the OEM offers, with some leeway here and there, it’s dead easy to get a desktop PC with 10-15W idle power.

It does not. ZFS makes some fundamental assumptions about how the write cache in hardware works that do not match up with reality on modern hardware.

What I hear you say is that ZFS urgently needs a full rewrite, addressing this issue and probably others too, in order to reflect progress made in hardware design since ZFS was originally conceived.

If I was king I’d either:

- Force the storage hardware manufacturers to honor cache flush commands; then ZFS wouldn’t require any extra work. This would likely result in performance drops for many applications, though, because we’ve become so used to relying on storage hardware controllers to opaquely cache so much.

- A slightly more pragmatic solution would be to add new commands to NVMe/SATA/SAS to flag when and what the controller is caching, so that logic to account for the volatile data could be written into ZFS.

I suppose the most pragmatic approach right now is to just use a UPS.

Is this the sort of problem that PLP in enterprise SSDs helps with? E.g. a write is cached to a drive, there’s a sudden power loss, but the PLP enables the drive to flush the cache before it fully loses power?

correct

So I’ve been researching PSUs and I’ve got a decent idea about them now. As good an idea as you can get after 2-3 days of research. Anyway, I’m stuck on estimating the power needed by the HDDs. I’m seeing conflicting information, as well as a lot that is incomplete or nonexistent.

As I said before, I’m going to be starting out with 12 drives, and ideally I would like a case that houses a total of 24 drives.

Anyway, from:

There are two graphs shown; let’s look first at the current on the 12V line, which powers the motors that spin the platters.

We see that 45 HDDs peak @50A. The drives in question are:

Seagate 4TB (Model# ST4000DM000)

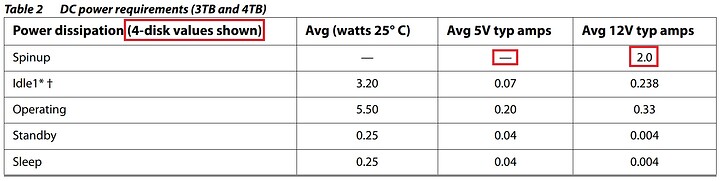

Refer to:

https://www.seagate.com/www-content/product-content/desktop-hdd-fam/en-us/docs/100799391e.pdf#page12

However, on page 11 we see this:

I don’t know what “4 disk values shown” means and if it’s important but 45 * 2A = 90A, not 50A.

And then the 5V rail apparently is crossed out for the consumer drives yet 45 drives reported 17A!

Basically, I can’t marry up the 45 drive results to the spec sheet of those drives.

The spec sheet for those drives doesn’t look right; I’ve never run across a SATA or SAS HDD that doesn’t pull from the 5V rail during startup.

I’d stick with the numbers 45 drives got; they at least look realistic.
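As a rough way to apply those measured numbers to a 12- or 24-drive build, the peaks can be scaled per drive. This is only a sketch under two assumptions that may not hold for your hardware: all drives spin up at the same time (no staggered spin-up), and peak current scales linearly with drive count. The 50A and 17A figures are the 45-drive peaks quoted above.

```python
MEASURED_DRIVES = 45
MEASURED_PEAK_A = {"12V": 50.0, "5V": 17.0}  # 45-drive peaks quoted in this thread


def scaled_peak(n_drives: int) -> dict:
    """Linearly scale the 45-drive peak currents to n_drives (an assumption)."""
    return {rail: amps * n_drives / MEASURED_DRIVES
            for rail, amps in MEASURED_PEAK_A.items()}


for n in (12, 24):
    peak = scaled_peak(n)
    # Summing both rails assumes the two peaks coincide, so this is an upper bound.
    watts = peak["12V"] * 12 + peak["5V"] * 5
    print(f"{n} drives: {peak['12V']:.1f} A @ 12V, {peak['5V']:.1f} A @ 5V, "
          f"up to ~{watts:.0f} W")
```

Different drive models will have different spin-up profiles, so treat the result as a sanity check against the PSU's per-rail limits rather than a spec.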

Is it possible to max out everything on your computer at the same time? Run some kind of memtest to max out memory, CPU benchmark, GPU benchmark, use HDDs, run a dummy load on your NIC, you name it, all at the same time?

IIRC, I’ve seen CPU and GPU running at 100% at the same time, but I’ve never seen everything running at the same time.

Many of the problems with server builds come from the fact that people want them in the same room as their workstations.

This poses problems because servers are generally packed with cooling fans and they are often loud.

Commercial-grade standalone servers especially may contain up to a dozen fans, and usually a redundant power supply.

It’s best to place them in a dedicated soundproofed room.

Rack mount servers do not have many fans but the rack itself makes up for it with high volume airflow.

Plus the enclosures muffle the sound a bit.

A single supply? Well it can never be too large depending on how important the data is.

In some instances slaved power supplies can serve here to take up the extra load.

All you would need is fused relays to turn the other power supplies on.

You need an engineering degree in electronics to make that work, safely. And deep pockets to fund it all.

Nope, that’s (one of) the unsafe way(s).

Never, EVER, connect 2 or more PSUs to the same power rail w/o significant modifications to the control loop in said PSUs. The alternative is assured destruction of the PSUs and all connected hardware inside that machine. And your insurance isn’t gonna pay for your burnt-down house either.