I recently upgraded my server. I was a bit nervous about buying old parts for the core of the system (mainboard, CPUs, memory), but everything works great. Although the core hardware is old, it’s still a huge upgrade from my dual-Opteron server built around 2010. That was a totally reliable machine, but its performance was quite poor even when it was new.


In addition to giving a summary of the hardware & software, I hope to save somebody some time by explaining a few things that took me some hours to figure out.

My “new” server:

Hardware

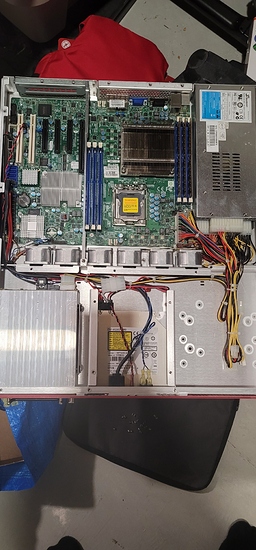

Case: Fractal Design Define 7 XL

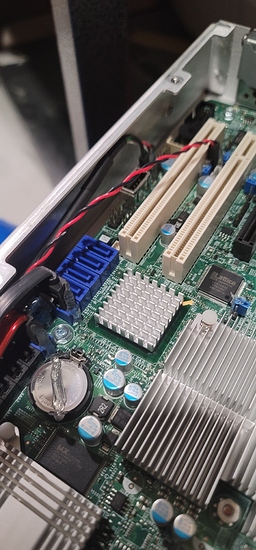

Mainboard: Supermicro MBD-X9DRD-iF

CPUs: Xeon E5-2650 v2 (x2) (total 16c/32t)

Memory: 128GB (8 x 16GB) DDR3-1600 REG ECC

Onboard NIC: Intel I350 (2 ports)

PCI-E NIC #1: Intel I350-T2 (for OPNsense)

PCI-E NIC #2: Intel X520-DA1 (10 Gbps connection to my PC w/ cheap DAC cable)

PCI-E SAS/SATA: LSI SAS 9201-16e (connection to external drive tower)

SSD #1: WD Blue 500GB

SSD #2: Crucial MX500 1TB

HDDs: 6x 6TB WD Red in RAID 6 (Linux MD; I’ll switch to ZFS next time I buy drives)

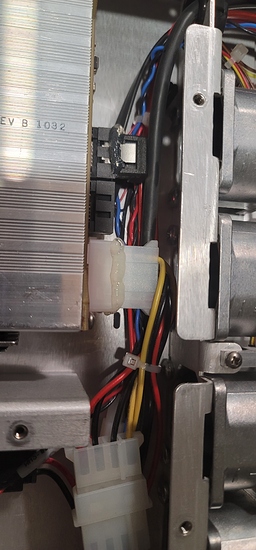

Fans: 140mm (x2) + 120mm in the top (Noctuas), 140mm (x2) in front + 140mm in back (factory installed)
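Here is a quick sanity check on the array's size. RAID 6 uses two drives' worth of space for parity, so usable capacity is (number of drives - 2) x drive size. The sketch below shows that arithmetic. The function name raid6_usable is made up for illustration, and it ignores filesystem overhead and the TB vs TiB difference:

```sh
#!/bin/sh
# raid6_usable <drive_count> <drive_size_tb>   (hypothetical helper)
# RAID 6 keeps two parity blocks per stripe, so two drives' worth of
# space goes to parity. Filesystem overhead and TB vs TiB are ignored.
raid6_usable()
{
	if [ "$1" -lt 4 ]; then
		echo "RAID 6 needs at least 4 drives" >&2
		return 1
	fi
	echo $(( ($1 - 2) * $2 ))
}

raid6_usable 6 6   # the 6x 6TB array above: prints 24 (TB)
```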

Software

Host OS: Proxmox VE

Guest-1: (KVM) OPNsense, using the passed-through Intel I350-T2

Guest-2: (LXC) Debian Linux: Bitwarden, Plex, Pi-hole, Unifi controller, etc.

Guest-3: (KVM) Debian Linux: development & regular use

Guest-4: (KVM) Windows 10 Pro: no big plans for this yet

CPU Allocation

CPU0: Host OS (PVE), OPNsense, LXC containers (just 1 now)

CPU1: all other VMs
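Splitting work by socket like this depends on knowing which logical CPUs belong to which NUMA node. That numbering isn't necessarily contiguous, because hyperthread siblings often come later in the list. The minimal sketch below prints each node's CPU list as the kernel reports it in sysfs. show_node_cpus is a hypothetical helper name. The optional directory argument exists only so the function can be tested against a fake tree:

```sh
#!/bin/sh
# show_node_cpus [nodes_dir]   (hypothetical helper)
# Print the logical CPUs that belong to each NUMA node, as reported by
# the kernel in /sys/devices/system/node/nodeN/cpulist.
show_node_cpus()
{
	nodes_dir=${1:-/sys/devices/system/node}
	for node in "$nodes_dir"/node[0-9]*; do
		# skip if the glob matched nothing or the file is missing
		[ -r "$node/cpulist" ] || continue
		echo "${node##*/}: $(cat "$node/cpulist")"
	done
}

show_node_cpus
```

The ranges it prints use the same list format that taskset -c accepts. So node1's list is what you'd use to keep a process on CPU1.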

1G Hugepages

To use 1G hugepages efficiently in my config, I reserve only 2GB from CPU0’s memory for OPNsense and 56GB from CPU1’s memory for the other VMs. Since I didn’t want the hugepages split evenly between the 2 NUMA nodes, I couldn’t reserve them on the Linux kernel’s command line (though my command line does set 1G as the hugepage size). Each hugepage must be backed by a physically contiguous block of memory (1GB here), and such blocks get harder to find as memory fragments. So they should be reserved as early in bootup as possible, and apart from the kernel command line, the best way to do that is from the initial ramdisk. It’s easy to do:

/etc/initramfs-tools/scripts/init-top/hugepages_reserve.sh:

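```sh
#!/bin/sh
# Reserve 1G hugepages per NUMA node as early as possible in boot.

# standard initramfs-tools header: update-initramfs runs each boot script
# with the argument "prereqs" to work out their order, see initramfs-tools(7)
PREREQ=""
prereqs() { echo "$PREREQ"; }
case "$1" in prereqs) prereqs; exit 0;; esac

nodes_path=/sys/devices/system/node

if [ ! -d "$nodes_path" ]; then
	echo "ERROR: $nodes_path does not exist"
	exit 1
fi

# reserve_pages <node> <hugepage size dir suffix> <number of pages>
reserve_pages()
{
	echo "$3" > "$nodes_path/$1/hugepages/hugepages-$2/nr_hugepages"
}

reserve_pages node0 1048576kB 2    # 2 x 1G on CPU0's node for OPNsense
reserve_pages node1 1048576kB 56   # 56 x 1G on CPU1's node for the other VMs
```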

Then make the script executable and update the initrd:

```sh
chmod 755 /etc/initramfs-tools/scripts/init-top/hugepages_reserve.sh
update-initramfs -u -k all
```
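After rebooting, check that each node actually got its pages. The kernel may reserve fewer pages than requested if it can't find enough contiguous memory, so it's worth reading the counts back. The minimal sketch below reads the same per-node sysfs directory the boot script writes to. show_hugepages is a hypothetical helper name, and the optional arguments only exist for testing against a fake tree:

```sh
#!/bin/sh
# show_hugepages [nodes_dir] [page_dir]   (hypothetical helper)
# Print total and free hugepages of one size for every NUMA node.
show_hugepages()
{
	nodes_dir=${1:-/sys/devices/system/node}
	page_dir=${2:-hugepages-1048576kB}
	for node in "$nodes_dir"/node[0-9]*; do
		hp="$node/hugepages/$page_dir"
		# skip nodes (or glob non-matches) without this page size
		[ -d "$hp" ] || continue
		echo "${node##*/}: total=$(cat "$hp/nr_hugepages") free=$(cat "$hp/free_hugepages")"
	done
}

show_hugepages
```

With the reservations in hugepages_reserve.sh, total should read 2 on node0 and 56 on node1. The free count drops as VMs using hugepages start.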

After some investigation, I found out that when hugepages aren’t reserved on the kernel command line, Proxmox by default doesn’t play nice with the hugepages you’ve reserved yourself. The fix turns out to be simple: add keephugepages: 1 to the .conf of each VM. I don’t know who benefits from the default behavior, but ok.
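With more than a couple of VMs, it's easy to forget the setting on one of them. The minimal sketch below lists VM configs that contain a hugepages: line but no keephugepages: 1. It assumes the usual Proxmox location /etc/pve/qemu-server/<vmid>.conf, and the function name is hypothetical. It only inspects the current config, stopping at the first [section] header, which is where Proxmox keeps snapshot settings:

```sh
#!/bin/sh
# check_keephugepages [conf_dir]   (hypothetical helper)
# List VM configs that set "hugepages:" but not "keephugepages: 1".
check_keephugepages()
{
	conf_dir=${1:-/etc/pve/qemu-server}
	for conf in "$conf_dir"/*.conf; do
		[ -f "$conf" ] || continue
		# awk exits 0 only if hugepages is set and keephugepages: 1 is not
		awk '
			/^\[/ { exit }
			/^hugepages:/ { hp = 1 }
			/^keephugepages:[ \t]*1[ \t]*$/ { keep = 1 }
			END { exit !(hp && !keep) }
		' "$conf" && echo "missing keephugepages: 1 in $conf"
	done
}

check_keephugepages
```

You can add the missing line by hand. Running qm set <vmid> --keephugepages 1 should do the same through Proxmox's CLI.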

PCI Passthrough of I350-T2 for OPNsense

This was a little tricky, since the Supermicro X9DRD-iF has an onboard I350 that I don’t want to pass through. It has the same PCI vendor/device ID as the I350-T2, so binding vfio-pci by device ID would grab both. As above, the solution is to act early in bootup. I set driver_override to vfio-pci for the 2 devices (one per port) in the I350-T2’s IOMMU group, before the “actual” driver (igb) has a chance to claim them.

/etc/initramfs-tools/scripts/init-top/bind_vfio.sh:

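```sh
#!/bin/sh
# standard initramfs-tools header: answer the "prereqs" query that
# update-initramfs uses to order boot scripts, see initramfs-tools(7)
PREREQ=""
prereqs() { echo "$PREREQ"; }
case "$1" in prereqs) prereqs; exit 0;; esac

# you have to find the path(s) for your own adapter's IOMMU group, obviously
echo "vfio-pci" > /sys/devices/pci0000:80/0000:80:02.0/0000:83:00.0/driver_override
echo "vfio-pci" > /sys/devices/pci0000:80/0000:80:02.0/0000:83:00.1/driver_override
```

As with hugepages_reserve.sh, make it executable (chmod 755) and run update-initramfs -u -k all afterwards.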
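To find the right paths for your own adapter, one common approach is to walk /sys/kernel/iommu_groups. For every device, the minimal sketch below prints three things: its IOMMU group, its resolved /sys/devices/... path (the form used in bind_vfio.sh) and the driver currently bound to it. list_iommu_groups is a hypothetical name, and the optional argument only exists for testing against a fake tree:

```sh
#!/bin/sh
# list_iommu_groups [groups_dir]   (hypothetical helper)
# For every device in every IOMMU group, print the group number, the
# resolved /sys/devices/... path and the bound driver (if any).
list_iommu_groups()
{
	groups_dir=${1:-/sys/kernel/iommu_groups}
	for dev in "$groups_dir"/*/devices/*; do
		[ -e "$dev" ] || continue
		group=${dev%/devices/*}   # strip "/devices/<addr>"
		group=${group##*/}        # keep just the group number
		dev_path=$(readlink -f "$dev")
		driver="(none)"
		if [ -e "$dev_path/driver" ]; then
			driver=$(basename "$(readlink -f "$dev_path/driver")")
		fi
		echo "group $group: $dev_path driver=$driver"
	done
}

list_iommu_groups
```

After a reboot, the two I350-T2 functions should no longer show igb, while the onboard I350's ports still do. For a single device, lspci -nnk -s 83:00.0 shows the bound driver too, plus the vendor/device ID.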

Kernel Command-Line

Here’s a piece of my /etc/default/grub that may be of interest:

```sh
# the rootdelay is to prevent an error importing the root ZFS pool at bootup
GRUB_CMDLINE_LINUX_DEFAULT="rootdelay=10 quiet"
GRUB_CMDLINE_LINUX="root=ZFS=rpool/ROOT/pve-1 boot=zfs"
GRUB_CMDLINE_LINUX="$GRUB_CMDLINE_LINUX intel_iommu=on iommu=pt"
GRUB_CMDLINE_LINUX="$GRUB_CMDLINE_LINUX intel_pstate=disable"
GRUB_CMDLINE_LINUX="$GRUB_CMDLINE_LINUX kvm-intel.ept=y kvm-intel.nested=y"
GRUB_CMDLINE_LINUX="$GRUB_CMDLINE_LINUX default_hugepagesz=1G hugepagesz=1G"
```
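/proc/cmdline holds the command line the running kernel was actually booted with, so it's an easy place to confirm these settings took effect. The minimal sketch below reports which of the IOMMU and hugepage parameters above are missing. check_cmdline is a hypothetical helper, and its parameter list is just a subset of the ones above, so adjust it to taste:

```sh
#!/bin/sh
# check_cmdline [cmdline_file]   (hypothetical helper)
# Report expected parameters missing from the kernel command line;
# returns non-zero if any are missing.
check_cmdline()
{
	cmdline=$(cat "${1:-/proc/cmdline}")
	missing=0
	for param in intel_iommu=on iommu=pt default_hugepagesz=1G hugepagesz=1G; do
		# pad with spaces so e.g. hugepagesz=1G can't match default_hugepagesz=1G
		case " $cmdline " in
		*" $param "*) ;;
		*) echo "missing: $param"; missing=1 ;;
		esac
	done
	return $missing
}

check_cmdline && echo "all expected parameters present"
```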

FIN

There are a bunch more details I didn’t cover (e.g. getting Docker to run efficiently in an LXC container, launching SPICE to a VM from a desktop shortcut), since I wanted to keep this short(ish). I hope the notes I’ve provided will help someone.
