Glad to help any way I can.

OK good, so most of that checks out and looks fine. But there are a few small things to try first.

- First, try getting huge pages set up. It looks like they are enabled, but no RAM was reserved.

That’s the value of the reserved hugepages.

If you were reserving 20 GB, that value would be 10240 (not including extra for safety).

To calculate: take the RAM being passed into the VM in GB and multiply it by 1024 to get MB, then divide by 2 MB (the hugepage size). For safety, add 1-10% extra.

16 GB example:

16 x 1024MB = 16384MB

16384MB / 2MB = 8192 huge pages

8192 * 10% = 819.2, 8192 + 819.2 = 9011.2, rounded to 9010

8192 * 2% = 163.84, 8192 + 163.84 = 8355.84, rounded to 8355 (some recommend that only 2% is needed for safety)

Anywhere from 8355 to 9010 hugepages would be a good value for 16 GB of RAM.
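The arithmetic above can be sketched as a small helper. This is only an illustration: the function name `hugepages_needed` and its parameters are made up for this example, and it assumes the default 2 MB hugepage size. It rounds down, so the 10% case comes out as 9011 rather than a rounder figure.

```python
HUGEPAGE_MB = 2  # assumption: default 2 MB huge pages


def hugepages_needed(guest_ram_gb: int, margin_percent: int = 2) -> int:
    """Return how many 2 MB huge pages to reserve for a guest.

    guest_ram_gb   -- RAM passed into the VM, in GB
    margin_percent -- extra safety margin (the post suggests 1-10%)
    """
    base_pages = guest_ram_gb * 1024 // HUGEPAGE_MB
    # Integer math avoids floating point surprises; the result is rounded down.
    return base_pages * (100 + margin_percent) // 100


if __name__ == "__main__":
    for ram_gb, margin in [(16, 2), (16, 10), (25, 2)]:
        pages = hugepages_needed(ram_gb, margin)
        print(f"{ram_gb} GB + {margin}%: vm.nr_hugepages = {pages}")
```

For 25 GB with a 2% margin this gives 13056, which matches the value used in the sysctl line in this post.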

Now, making the system reserve those hugepages can be a bit different depending on the setup. For my GPU passthrough setup I just edited /etc/sysctl.conf and added this line at the end of the file:

vm.nr_hugepages = 13056

That is what I had for 25 GB being passed through. After a system reboot it showed with cat:

cat /proc/meminfo | grep Huge

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

FileHugePages: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 13056 kB

Hugetlb: 0 kB
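If you want to check the reservation without eyeballing the output, here is a rough sketch that parses the hugepage fields from /proc/meminfo. The function names `parse_hugepage_info` and `reserved_mb` are hypothetical, and it assumes the usual `Name: value [kB]` layout of that file.

```python
def parse_hugepage_info(meminfo_text: str) -> dict:
    """Collect the hugepage-related fields from /proc/meminfo text."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, sep, value = line.partition(":")
        if not sep or "huge" not in name.lower():
            continue  # skip lines that are not hugepage counters
        parts = value.split()
        if parts:
            fields[name.strip()] = int(parts[0])  # drop the "kB" unit if present
    return fields


def reserved_mb(fields: dict) -> int:
    """Memory reserved for huge pages in MB (page count times page size)."""
    return fields.get("HugePages_Total", 0) * fields.get("Hugepagesize", 0) // 1024


if __name__ == "__main__":
    with open("/proc/meminfo") as handle:
        info = parse_hugepage_info(handle.read())
    print(info)
    print(f"Reserved for huge pages: {reserved_mb(info)} MB")
```

If `HugePages_Total` comes back as 0, nothing has been reserved, no matter what the page size line says.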

Keep in mind that is the “old way”, and there is a possibility of conflicts with NUMA enabled. With NUMA you may use an echo command that directly manipulates the hugepage files for each node:

echo <COUNT> > /sys/devices/system/node/node<NODEID>/hugepages/hugepages-<SIZE>kB/nr_hugepages
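As a rough sketch of the per-node approach, the snippet below builds the sysfs path for each node and prints the matching echo command. It assumes the page count is written to the `nr_hugepages` file inside that directory, and that the pages should be split evenly across nodes. Both are my assumptions; if the VM is pinned to a single node you would probably want all of the pages on that node instead. The function names are made up for illustration.

```python
from pathlib import Path

NODE_ROOT = Path("/sys/devices/system/node")


def node_hugepages_file(node_id: int, page_size_kb: int = 2048) -> Path:
    """Path of the per-node hugepage counter (assumes 2 MB pages by default)."""
    return (NODE_ROOT / f"node{node_id}" / "hugepages"
            / f"hugepages-{page_size_kb}kB" / "nr_hugepages")


def split_pages(total_pages: int, node_count: int) -> list:
    """Split the total evenly; the first nodes absorb any remainder."""
    base, extra = divmod(total_pages, node_count)
    return [base + (1 if i < extra else 0) for i in range(node_count)]


def reserve_per_node(total_pages: int, node_count: int, dry_run: bool = True) -> None:
    """Print (dry run) or write the per-node reservation. Writing needs root."""
    for node_id, pages in enumerate(split_pages(total_pages, node_count)):
        target = node_hugepages_file(node_id)
        if dry_run:
            print(f"echo {pages} > {target}")
        else:
            target.write_text(str(pages))


if __name__ == "__main__":
    # Dual-CPU example: 13056 pages over 2 nodes, printed only, nothing written.
    reserve_per_node(13056, 2)
```

The dry run is the default on purpose, so you can look at the commands before anything touches the system.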

or use the utility hugeadm (note: I have not personally used it, but it may be required with the dual-CPU system).

Here's a page I found that may be useful:

https://help.ubuntu.com/community/KVM%20-%20Using%20Hugepages

Huge pages do reserve system memory, so they may cause annoying problems. If this doesn’t work, you can just remove the hugepages entries (or set them back to the defaults) and reboot again.

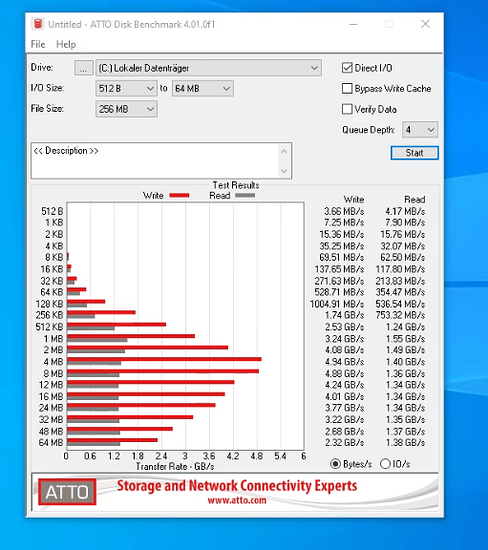

I will note, this is from my Ryzen PCI passthrough setup with DDR4. Without hugepages set up, the PassMark benchmark tool showed a RAM score of around 450, while people on bare metal were getting 3332 on average. With huge pages enabled it jumped up to something like 1800-2200. Still not even close to bare metal for my memory, but a lot better. (I’m sorry I can’t provide exact statistics, that’s going off of memory, heh, pun not intended.)

Please note: this was using Ubuntu QEMU/KVM with libvirt. Things may be different for Proxmox (I'm waiting on RAM from eBay to get more into Proxmox).

Now to change gears, specifically because you said the host CPU was pegged while doing tests on the guest.

Hugepages are supposed to help with IO responsiveness on Windows, because Windows can be a trash fire at dealing with IO.

The main thing about a VM is that it's supposed to separate the host from the guest. So if the guest is at 100% load, the host shouldn’t slow down at all, apart from the resources the guest is allowed to use.

I found a bunch of people complaining specifically about Windows 1803 causing tons of problems on the HOST machine.

A few Examples:

- 100% Pegged CPU on host, while the guest was idle with low cpu usage.

- Under even small load on the guest windows VM, 100% usage on the host

- Under small load would make the host unresponsive to keyboard and mouse input, with 30+ second delays with host.

- 100% CPU usage on all windows guests and 100% cpu usage on host

The one fix I could find was removing all unused devices from the VM: network adapters, virtio keyboards, mice, serial adapters/devices, virtual hubs, USB devices, etc. (One example was a network adapter passed into the VM that was constantly trying to configure itself, glitching out the host. Another was a USB/serial device in the guest that kept trying to poll a service on the guest, causing the host to lag out.)

Some of the other fixes I could find involve setting certain CPU flags on startup that could correct the issue, e.g. the hv_synic and hv_stimer enlightenments.

A possible thing to check in that vein is this bug report for RHEL: https://bugzilla.redhat.com/show_bug.cgi?id=1610461

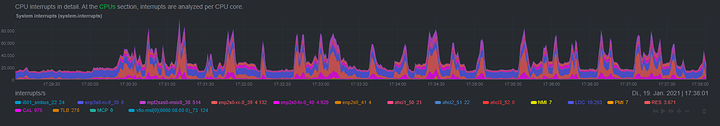

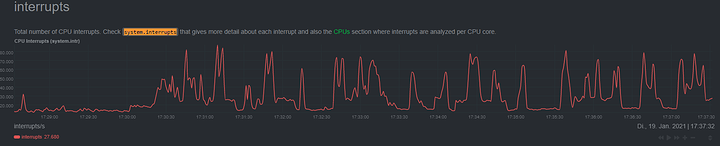

It reported that Windows 1803 would have more than 2000 interrupts per second, while Windows 1709 was getting only 140-200 interrupts per second. It says:

“Adding the flags ‘hv_synic,hv_stimer’ resolve the problem.”

I’m not sure, but you might be able to run this command on the host:

perf kvm --host stat live --event=ioport

to see how many interrupts you have while trying to run the guest or run something on the guest.

Sadly I am also somewhat new to the whole virtualization thing, but I do feel the host CPU being maxed out is a very big hint about the problem that is happening.

However, it does still feel like throwing a wrench at a car that won’t start and hoping it will fix the problem.

I really do hope one of these things helps. Good luck!

At least the context is understandable, lol.