Could a link to the adapter shown in this video be added?

The video showed the product page for the cable, but what is the PCIe redriver?

To the best of my knowledge:

M.2-to-Gen-Z adapter: M.2 M-key PCIe Gen4 with ReDriver to Gen-Z 1C(EDSFF) Adapter

Gen-Z-to-SFF-8639 Cable: PCIe Gen4 Gen-Z 1C Male to U.2 (SFF-8639) Cable

I think the m.2 insanity will only continue, and a couple of generations from now we will see eight m.2 slots + 2 PCIe x16 slots on most mainstream desktops. Not making those x16 slots support x8/x8 bifurcation is just laziness, though…

My prediction for AM6 / Gen 18 boards: 40 PCIe lanes, 32 of them PCIe 5.0 lanes from the CPU. These are divided with clever bifurcation to power 2 PCIe slots and up to 6 m.2 slots. The chipset powers the remaining 2-5 m.2 slots.
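To make the lane arithmetic in that prediction concrete, here is a rough Python sketch. It assumes every CPU-attached m.2 slot is x4 and treats the 32 CPU lanes as one pool that bifurcation can split freely; the slot widths in the example layouts are hypothetical, not taken from any real board.

```python
# Hypothetical CPU lane budget for the predicted "AM6 / Gen 18" board.
# Assumptions (not real specs): 32 CPU lanes, every CPU m.2 slot is x4.
CPU_LANES = 32
M2_WIDTH = 4


def lanes_used(slot_widths: list[int], m2_slots: int) -> int:
    """CPU lanes consumed by the PCIe slots plus the CPU-attached m.2 slots."""
    return sum(slot_widths) + m2_slots * M2_WIDTH


def fits(slot_widths: list[int], m2_slots: int, budget: int = CPU_LANES) -> bool:
    """True if this bifurcation layout stays within the CPU lane budget."""
    return lanes_used(slot_widths, m2_slots) <= budget


# Example layouts: (widths of the two PCIe slots, number of CPU m.2 slots).
LAYOUTS = {
    "x16 + x16, no m.2": ([16, 16], 0),
    "x16 + x8, 2x m.2": ([16, 8], 2),
    "x8 + x8, 4x m.2": ([8, 8], 4),
    "x4 + x4, 6x m.2": ([4, 4], 6),
}

if __name__ == "__main__":
    for name, (slots, m2) in LAYOUTS.items():
        print(f"{name}: {lanes_used(slots, m2)}/{CPU_LANES} lanes, fits={fits(slots, m2)}")
```

Every example layout lands on exactly 32 lanes, which is the point: bifurcation only moves the same lanes between slots, it doesn't create new ones.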

So get used to your m.2 adapters; you’re going to need them! Oh, and in an effort to save money, SATA ports will disappear from the motherboard, as will all extra fan headers except one for the case and one for the CPU. All the extra stuff comes on m.2 cards from now on.

As for your question, seems to be this one: M.2 M-key PCIe Gen4 with ReDriver to Gen-Z 1C(EDSFF) Adapter

I hate that development. I hope something like the non-PRO Threadrippers returns, giving more easily usable PCIe lanes to users who want more than AM4/5 but don’t like the outdated TR Pro release cycle or the high prices AMD wants for the latter or for their EPYCs.

Hooray for Intel’s Sapphire Rapids product lines, competition is always needed.

To be fair though, how many PCIe cards do you realistically need these days besides a GPU? Networking is perhaps the only one you really need; pretty much everything else can already be powered by m.2 adapters. And even networking can use an NVMe slot as a x4 slot.

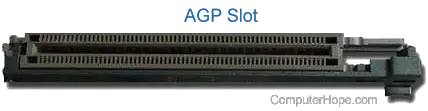

Or to put it another way - how many of these do you use today?

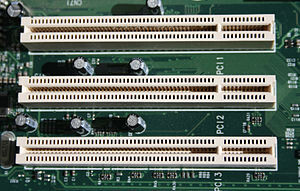

What about these?

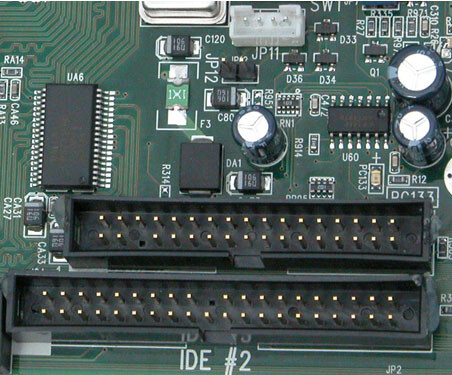

Or heck, even these babies?

Not to mention these…

Computers did get better. m.2 has a lot of advantages (like requiring fewer PCIe lanes). They just suck if you happen to have a bunch of cards already invested for PCIe slots. But then again PCIe sucks if all you have is a bunch of PCI cards, so… ¯\_(ツ)_/¯

Not trying to say PCIe is outdated or obsolete just yet, just that this seems to be where the consumer market is heading. Part of the reason is that PC manufacturers love to have a distinction between pro (PCIe) and consumer (m.2). Personally, I think segmenting like that is a big mistake, but greed has probably guaranteed this split by now.

The wide variant of m.2 isn’t a terrible idea as an alternative PCIe form factor. The half-length, Gen-Z-ish ruler form factor is also not awful.

Not long ago I recall seeing NVMe-like/sized SSDs on AliExpress that plug directly into a PCIe slot. No examples at the ready, unfortunately.

While I understand that my personal use cases are a little above mainstream consumer needs, I lament that HEDT has become so extremely expensive.

I’d be more open to using M.2 (adapters) if there were a reliable solution to get the M.2 lanes out to other devices, but I think the only way that would work is optical transceivers tunneling PCIe. Basically what Thunderbolt was during its development phase (hence the codename “Light Peak”).

I find it absurd that it’s cheaper to get multiple separate full-size consumer systems to get more PCIe lanes compared to a single HEDT platform.

Ironically, the Thunderbolt controllers have all been named after something a little less peaky: Light Ridge, Eagle Ridge, Port Ridge, Cactus Ridge, Redwood Ridge, Falcon Ridge, Alpine Ridge, Titan Ridge, Maple Ridge, Goshen Ridge, etc.

Whereas peak conjures up the image of gleaming sunlight peeking over a snow-topped mountain, ridges, for me, are darkened hills and mountains sunken into the abyss of the ocean.

It’s a plague. I don’t get rear I/O like I do with a PCIe card, and I don’t get 75W power delivery. I can’t hot-plug anything, and if there are eight M.2 slots, that’s 16 (!!!) additional cables from all over the PCB if I want to use them for e.g. U.2/3, plus even more cables, risers and adapters if I need 8 lanes. Why not use 2x PCIe cards? Let the user decide what to put in there.

OCuLink or MiniSAS 8i connectors are just great (they have some technical flaws; it’s not perfect). They sit on the edge of the board and you can plug breakout cables in there. Easy. Like SATA, but it scales with PCIe. And my NVMe doesn’t have to sit right below my 80°C GPU (I actually have to unplug the GPU to access the NVMe). I could actually cool it properly and allow higher capacities and power limits in a 2.5"/3.5" bay. People are buying SATA SSDs in 2023(!!) because M.2 sucks and can’t deliver proper capacity for storage.

M.2 is great for laptops and other mobile stuff. We need PCIe slots and OCuLink/MiniSAS or some consumer equivalent. People are already soldering NVMe onto PCBs. It only gets worse over time. I fear PCs becoming de facto embedded-like systems.

If I have a full x16 chipset PCIe slot, I don’t need four chipset M.2 slots. They just take up space, leave small form factor boards without more valuable features, and limit options.

And if some million PCs run on SFF connectors, prices for cables will fall to SATA cable levels, not some boutique enterprise prices.

Yeah Exard, but people don’t like higher prices… well, they buy $800 consumer boards, and manufacturers got away with an exponential increase in board prices. So don’t tell me consumers are scared off 16TB NVMe SSDs because the board would cost $20 more. People want more storage, not more (chipset) M.2 slots.

And that’s why we need M.2-to-U.2 adapters today. There are enterprise M.2 drives out there; just check the Micron catalogue. But performance and capacity are limited by the small form factor that is M.2.

How do you configure this weird thing? Output swing, flat gain, equalization… is anything hurt if I leave it all on high?

Good question. It would be great if somebody could do a few tests on this. Wendell’s video about it did leave me wondering… There are enough permutations to make a brute-force test rather ugly, though (64, I think: swing (2) × flat gain (4) × EQ (8)).
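A minimal Python sketch of that test matrix, just to show where the 64 comes from. The setting labels are placeholders (the post only gives the number of positions per setting), and the minutes-per-run figure is a hypothetical assumption for estimating the total test time.

```python
import itertools

# Placeholder labels: only the counts (2, 4, 8) come from the post above;
# the real redriver settings may be named or numbered differently.
SWING = ["low", "high"]        # 2 output-swing settings
FLAT_GAIN = [0, 1, 2, 3]       # 4 flat-gain settings (indices)
EQ = list(range(8))            # 8 equalization settings (indices)
MINUTES_PER_RUN = 10           # hypothetical: reconfigure, reboot, benchmark


def test_matrix() -> list[tuple[str, int, int]]:
    """Every (swing, flat_gain, eq) combination a brute-force test covers."""
    return list(itertools.product(SWING, FLAT_GAIN, EQ))


if __name__ == "__main__":
    combos = test_matrix()
    hours = len(combos) * MINUTES_PER_RUN / 60
    print(f"{len(combos)} combinations, roughly {hours:.1f} hours of testing")
```

Even at a modest per-run time, a full sweep is most of a working day, which is why spot-checking the default (all high) first seems sensible.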

Best guess: all on high is probably OK (that’s the default after all).

I used this until my ASRock Rack board arrived, and it worked just fine:

NFHK NGFF M-Key NVME to SFF-8654 Slimline SAS card adapter and U.2 U2 SFF-8639 NVME PCIe SSD cable for mainboard SSD https://amzn.eu/d/5Aoc00H

Anyone have recent benchmarks with a decent U.2 drive vs 990 Pro? As we know the numbers listed on the product labels aren’t the same as real world performance. File copying, video editing, searching, just the basics…

How important is cable length with a redriver? The 0.5 m Gen-Z cable is out of stock and I can only find the 1 m version. Will I be okay, or will that cause problems?

It seems like there are a bunch of cheaper versions of the cable but I’m not sure how much that will affect the signal.

You don’t want to gamble on that. There are enough PCIe 4.0 certified cables which don’t actually competently carry PCIe 4.0 signals. You should not take a chance on a cable that doesn’t even claim to do it, and definitely not one that is even half a meter.

Additionally, check and validate that your system and the PCIe interface you want to use the cable with support PCIe Advanced Error Reporting (AER), so you actually know whether the cable’s connection is electrically okay.

Unfortunately, NVMe SSDs don’t have any SMART values for that. SATA SSDs, for example, have the C7 (UDMA CRC error count) attribute, which logs errors caused by a bad connection between the SATA controller and the drive.

With NVMe, the motherboard, together with the operating system’s log, has to do this job via said PCIe AER.
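On Linux, one way to read those AER counters is through sysfs. The sketch below assumes a kernel recent enough to expose the per-device `aer_dev_correctable`, `aer_dev_nonfatal` and `aer_dev_fatal` files, which only show up for devices where the kernel has AER set up; the helper names (`parse_aer_stats`, `read_aer`, `nonzero`) are my own, not from any existing tool. Correctable errors such as receiver errors that keep climbing under load hint that the link (for example, a marginal cable) is struggling. Errors may be logged against the upstream root port rather than the drive itself, so it is worth checking both.

```python
from pathlib import Path

# Per-device AER counter files exposed by Linux sysfs. Each line is
# "<error name> <count>"; the name itself may contain spaces.
AER_FILES = ("aer_dev_correctable", "aer_dev_nonfatal", "aer_dev_fatal")
PCI_DEVICES = Path("/sys/bus/pci/devices")


def parse_aer_stats(text: str) -> dict[str, int]:
    """Turn the contents of one aer_dev_* file into {error_name: count}."""
    counts: dict[str, int] = {}
    for line in text.splitlines():
        # Split on the last space so multi-word names stay intact.
        name, _, value = line.strip().rpartition(" ")
        if name and value.isdigit():
            counts[name] = int(value)
    return counts


def read_aer(device_dir: Path) -> dict[str, dict[str, int]]:
    """Read whichever aer_dev_* files exist for one PCI device directory."""
    stats: dict[str, dict[str, int]] = {}
    for filename in AER_FILES:
        try:
            stats[filename] = parse_aer_stats((device_dir / filename).read_text())
        except OSError:
            # File absent (no AER stats for this device) or not readable.
            continue
    return stats


def nonzero(counts: dict[str, int]) -> dict[str, int]:
    """Keep only the error types that have actually been seen."""
    return {name: n for name, n in counts.items() if n > 0}


if __name__ == "__main__":
    # Print every device (drives, root ports, switches) that has logged errors.
    for dev in sorted(PCI_DEVICES.glob("*")):
        for filename, counts in read_aer(dev).items():
            seen = nonzero(counts)
            if seen:
                print(dev.name, filename, seen)
```

Running it once before and once after a long copy or benchmark makes it easier to see whether the counters are moving while the drive is actually under load.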

So no cable for me, then? The recommended cable isn’t available but a longer version is, and other brands have the cable in the recommended length. And your response is “don’t gamble”.

I’m not sure if it does, but I’ll take a look.