Hmm, well, they do have a security gateway. Do you NAT everything behind it and use it as the primary firewall, or do you use some other solution?

Unifi security gateway is a different ecosystem. I typically use a single-router Edgemax network, with a unifi AP and sometimes an OPNsense device as an additional layer at the edge for IDS and geoblocking.

I use l2tp/IPSec for transport vpn and openvpn for site-to-site tunnels.

Isn't that the high-end Dutch version of pfSense, or are they completely separate? I'm familiar with pfSense, just not OPNsense.

Curious why not just unify under openvpn?

Easier to use l2tp/IPSec in macOS which is what my people are using.

Also, for redundancy. If openvpn is my only avenue for logging into a remote site, it becomes very difficult to change the openvpn config because the risk of getting locked out would be very high.

Also, OPNsense is a fork of pfsense. It’s a little more hardened and less feature-rich. It can use libressl which is what initially caught my attention.

Got ya… well, that's interesting… what's the benefit of LibreSSL?

That was very informative. He talked so slow I ran it at 2.5x

That’s pretty old, but they have various updates over the years on YT if you want to see how it’s progressed. It’s a great example of the catastrophic consequences of poorly maintained code and a toxic level of legacy compatibility. While it would be very difficult to implement libressl everywhere (since it’s not really an option in Linux), I think it makes a lot of sense for reverse proxy or other instances where you can consolidate certificate workload onto a BSD server.

I haven’t actually implemented that yet other than just configuring opnsense to use libre, but it’s on the to-do list.

Lol. Unfriended

Neat

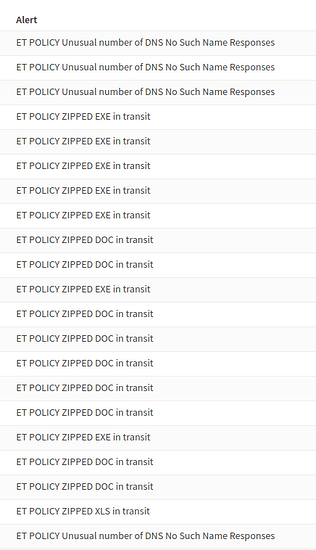

I wonder how I get it to email me when something serious happens…

(Suricata on OPNsense in a transparent filtering bridge configuration).

This is dumb.

# uncomp

[ -f "$1" ] &&

{ # gunzip

gzip --quiet --uncompress --stdout -- "$1" 2>/dev/null ||

# unzip

unzip -qq -p -- "$1" 2>/dev/null ||

# lzip

lzip --decompress --stdout -- "$1" 2>/dev/null ||

# xz

xz --decompress --stdout -- "$1" 2>/dev/null ||

# bunzip

bzip2 --decompress --stdout -- "$1" 2>/dev/null ||

# tar (gzip); tar needs --file to read the archive named in "$1"

tar --extract --gzip --to-stdout --file="$1" 2>/dev/null ||

# tar (bzip2)

tar --extract --bzip2 --to-stdout --file="$1" 2>/dev/null ||

# tar (xz)

tar --extract --xz --to-stdout --file="$1" 2>/dev/null

} ||

return 1

return 0

Where’d you get that script?

I’m trying to write a universal uncompress script. I just thought it was silly how many there are.

Quickly realized stdout isn’t going to work for directories.
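One way to avoid the try-everything chain might be to look at the file's magic bytes first and call only the matching tool. This is a rough sketch, not a finished script: `detect_format` is a made-up helper name, the signatures are the standard ones for gzip, bzip2, xz, lzip, and zip, and tar archives are left out since they need a directory rather than stdout anyway.

```shell
# detect_format FILE: print the compression format based on magic bytes.
# Hypothetical helper; returns 1 for missing files or unknown formats.
detect_format() {
    local file=$1 magic
    [ -f "$file" ] || return 1
    # First six bytes as one lowercase hex string, e.g. "1f8b08000000".
    magic=$(od -An -tx1 -N6 < "$file" | tr -d ' \n')
    case $magic in
        1f8b*)        echo gzip ;;
        425a68*)      echo bzip2 ;;
        fd377a585a00) echo xz ;;
        4c5a4950*)    echo lzip ;;
        504b0304*)    echo zip ;;
        *)            return 1 ;;
    esac
}

# uncomp FILE: decompress a single file to stdout with the matching tool.
uncomp() {
    case $(detect_format "$1") in
        gzip)  gzip  --decompress --stdout -- "$1" ;;
        bzip2) bzip2 --decompress --stdout -- "$1" ;;
        xz)    xz    --decompress --stdout -- "$1" ;;
        lzip)  lzip  --decompress --stdout -- "$1" ;;
        zip)   unzip -qq -p "$1" ;;
        *)     return 1 ;;
    esac
}
```

Something like `uncomp backup.gz > backup` would then only ever run one decompressor, and errors from that tool are no longer hidden behind `2>/dev/null`.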

So soon after I updated a FreeNAS box to 11.2u5, I got this alert:

Checking status of zfs pools:

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

freenas-boot 59.5G 4.46G 55.0G - - - 7% 1.00x ONLINE -

jbod1 43.5T 34.0T 9.52T - - 11% 78% 1.00x ONLINE /mnt

pool: freenas-boot

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: http://illumos.org/msg/ZFS-8000-9P

scan: scrub repaired 0 in 0 days 00:00:36 with 0 errors on Thu Jun 27 03:45:36 2019

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

da0p2 ONLINE 0 0 1

errors: No known data errors

-- End of daily output --

Everything seems fine… it’s just a single disk with the OS on it. I’ll have to dig and try to see if I can get a better record of what actually happened. No notifications from SMART.

One checksum error on da0p2 could be something as simple as a loose cable or a bit flipped in ram or a firmware bug. Seems to have not been too important whatever the data was, but this is why it’s a good idea to have redundancy. Boot drives are cheap.

It’s an x9 gen supermicro board, so only one satadom port unfortunately.

Is there any way to see what data was specifically affected and trace it to a specific file?

Might be able to dig something up with zdb but normally it would have shown you in zpool status if a file or other critical object were affected.
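For what it's worth, `zpool status -v` is the variant that lists affected files when there are any. To keep an eye on counters like the CKSUM 1 on da0p2 above, something along these lines could pull out just the devices with nonzero counts. It is only a sketch: `zpool_errors` is a made-up name, and it assumes the usual NAME/STATE/READ/WRITE/CKSUM layout shown in the alert.

```shell
# zpool_errors: read `zpool status` output on stdin and print every device
# line whose READ, WRITE, or CKSUM counter is not zero.
# Hypothetical helper; assumes the standard five-column config table.
zpool_errors() {
    awk '
        # The config table starts at its header row.
        $1 == "NAME" && $2 == "STATE" { in_config = 1; next }
        # The "errors:" line closes the table for this pool.
        /^errors:/                    { in_config = 0 }
        in_config && NF >= 5 && ($3 != "0" || $4 != "0" || $5 != "0") {
            print $1, "read=" $3, "write=" $4, "cksum=" $5
        }
    '
}
```

Usage would be `zpool status | zpool_errors`, which prints nothing when all counters are clean.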

I wonder what will come first, CentOS 8 release or my new Model F keyboard…

Working on automating onboarding processes. One hurdle is automatic credential generation. My clients use 1Password which has a cli utility. Here is how to add a login:

#!/usr/bin/env bash

# usage: add_login user password fqdn vault

declare -r username=$1

declare -r password=$2

declare -r domain_name=$3

declare -r vault=$4

declare -r template_json="$( op get template login )"

# --arg passes the value to jq as a string, so quotes or backslashes in it cannot break the filter

declare -r user_json="$( echo "${template_json}" |

jq --compact-output --arg value "${username}" '.fields[] | select(.designation=="username") | .value = $value' )"

declare -r password_json="$( echo "${template_json}" |

jq --compact-output --arg value "${password}" '.fields[] | select(.designation=="password") | .value = $value' )"

declare -r item_json="$( echo "${template_json}" |

jq --argjson user "${user_json}" --argjson pass "${password_json}" '.fields = [$user, $pass]' )"

declare -r item_encoded="$( echo "${item_json}" |

op encode )"

op create item Login "${item_encoded}" \

--title="${domain_name}" \

--url="${domain_name}" \

--vault="${vault}"

Never used the jq command before. Feels pretty awkward, but still decent when it comes to parsing data serialization formats in bash.
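The script above takes the password as an argument, so the "automatic credential generation" part still needs something to produce one. A minimal sketch, assuming `/dev/urandom` is available and that a hex string is acceptable as a password; `gen_password` is a made-up helper name, not part of the 1Password CLI.

```shell
# gen_password [BYTES]: print BYTES random bytes (default 16) as lowercase hex,
# i.e. a string of 2*BYTES characters. Hypothetical helper.
gen_password() {
    local bytes=${1:-16}
    # Reading a fixed number of bytes avoids an open-ended pipe from urandom.
    head -c "$bytes" /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}
```

It could then feed the script directly, for example `add_login alice "$(gen_password)" host.example.com SomeVault`, where the user, host, and vault names are placeholders.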

Additionally, I am working on some expect scripts for onboarding Ubiquiti Edgemax equipment. Here is the script for enabling SSH on an Edgeswitch via telnet (ssh is the default now, but many that you buy come with older firmware that only has telnet and http out of the box).

#!/usr/bin/env expect

set timeout 4

set host [lindex $argv 0]

set user ubnt

set password ubnt

spawn telnet "$host"

expect {

"'^]'." {

sleep .5;

expect "User:"

send "$user\r"

expect "Password:"

send "$password\r";

expect " >"

send "enable\r"

expect " #"

send "configure\r"

expect "(Config)#"

send "crypto key generate rsa\r"

expect "N] :" {

send "y\r"

}

expect "(Config)#"

send "crypto key generate dsa\r"

expect "N] :" {

send "y\r"

}

expect "(Config)#"

send "exit\r"

expect " #"

send "ip ssh protocol 2\r"

expect " #"

send "ip ssh server enable\r"

expect " #"

send "sshcon maxsessions 2\r"

expect " #"

send "sshcon timeout 5\r"

expect " #"

send "write memory\r"

expect "(y/n)"

send "y\r"

expect " #"

send "reload\r"

expect "(y/n)"

send "y\r"

# \035 is Ctrl-], the telnet escape character announced at connect time

send "\035"

expect "telnet>"

send "quit\r"

}

"Connection" {

sleep 1

exit 1

}

timeout {

exit 1

}

}

exit 0

Configuring Synology to FreeNAS backup wasn’t as easy as it should have been, but I was able to get it to work. In my case, this is going over 10GbE on a secure subnet, so I am opting to use an rsync module instead of ssh.

Synology

Control Panel > File Services > rsync


FreeNAS

1. Create a user account with the same name as the rsync account on the Synology

2. Create a file that contains the rsync password somewhere on the FreeNAS (rsync user’s home folder is a good choice)

3. Set the rsync user as the owner of the password file and change permissions to 600

4. Create an rsync task. The fields are as follows:

- Path: Wherever you want to back up to in FreeNAS

- User: Select the rsync user you just created

- Remote Host: IP or fqdn of the Synology

- Rsync Mode: Rsync module

- Remote Module Name: The name of the shared folder on the Synology

- Direction: Pull

- Check whichever rsync options you want to use.

- Extra options: --password-file=PATH_TO_PASSWORD_FILE --exclude \@* --exclude \#*

Basically, the tricky things are:

- No way I found to have Synology push to an rsync module on FreeNAS

- Have to use a password, which requires the --password-file parameter

- Synology automatically creates a module for every share when you enable rsync

Looks like the Hyper Backup package might be a more straightforward way to do this, but I guess it’s nice to know how to do it with stock Synology.
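For anyone who would rather test this from a shell on the FreeNAS side before creating the task, the setup above corresponds roughly to the following. Treat it as a sketch: both function names are made up, every user, host, module, and path is a placeholder, and `--archive` is just one reasonable choice for the "whichever rsync options you want" step.

```shell
# make_password_file PATH PASSWORD: write the rsync password to PATH with
# mode 600. Hypothetical helper; run it as the rsync user so ownership matches.
make_password_file() {
    ( umask 077 && printf '%s\n' "$2" > "$1" && chmod 600 "$1" )
}

# pull_from_synology USER HOST MODULE PASSWORD_FILE DEST
# Pull a Synology share (exposed as an rsync module) into DEST.
# host::module is rsync's daemon syntax; the excludes mirror the task's
# extra options and skip names starting with @ or #.
pull_from_synology() {
    rsync --archive \
        --password-file="$4" \
        --exclude '@*' --exclude '#*' \
        "$1@$2::$3/" "$5"
}
```

For example: `make_password_file ~/.rsync_pass 'secret'` followed by `pull_from_synology backup synology.example.lan SharedFolder ~/.rsync_pass /mnt/tank/backup/`.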