Intro

This thread is a build log of sorts, focused on the thermal issues I've been encountering with this rig and how I'm attempting to fix them.

I've got a few related threads open (links below), but given the tendency for thermal problems to be like squeezing a balloon, continuing my saga in those no longer feels on topic. I'm intending to direct the conversation from those threads here.

Specs

For some quick background, here’s the partial parts list for my build:

Chassis: Rosewill RSV-L4412U

Motherboard: Gigabyte MZ72-HB0

CPUs: Epyc Milan-X 7773X 64 core x 2

CPU coolers: Dynatron A39 3U

Memory: DDR4 3200 ECC 32GB x 16

Storage SSDs: Micron M1100 2TB SSDs x 4

Boot SSD: Kingston NV1 1TB NVMe

PSU: EVGA SuperNOVA 2000 G1+ 2000W

GPUs: Quadro A4000 x 5

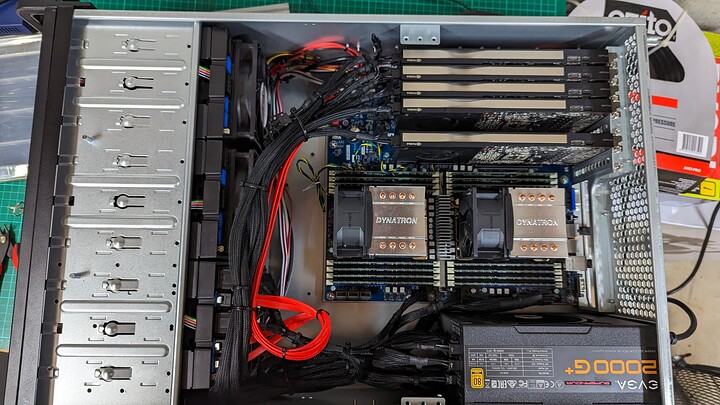

Build photo:

Workloads

This rig is used primarily for deep reinforcement learning (DRL) workloads, where one central learning process collects experience data from up to 256 worker processes.

Each worker runs a copy of the Bullet physics engine, known in DRL parlance as the learning environment. Workers relay experience data, along with the rewards calculated from that experience, back to the learner. Workers run both the environment and their neural network inference entirely on CPU. For the problems I focus on, the nets are rather small and the latency of GPU inference is high. I've experimented with batched GPU inference, but I get more experience throughput when I run inference on CPU.

The learner looks a lot like a supervised learning workload in that it aggregates the experience data into batches, computes losses, and runs backpropagation to compute updates to the neural network models that power the DRL agent.

Typically when this is running the CPUs are going at full tilt, and the learner is only using a single GPU. I occasionally run N experiments in parallel, in which case I’ll split my worker processes into N process pools, with a single learner for each pool, each utilizing one GPU.
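For anyone curious how the N-way split works in practice, here's a minimal sketch of the bookkeeping, assuming contiguous worker ranges and one learner per GPU. The `Pool` and `split_workers` names are hypothetical and not from my actual training code; this only plans the partition and leaves the RL loop itself out.

```python
from dataclasses import dataclass

@dataclass
class Pool:
    experiment: int
    gpu: int        # CUDA device index used by this pool's learner
    workers: range  # worker process indices feeding this learner

def split_workers(num_workers: int, num_experiments: int, num_gpus: int = 5) -> list[Pool]:
    """Partition workers into near-equal contiguous pools, one learner (and GPU) per pool."""
    if not 1 <= num_experiments <= num_gpus:
        raise ValueError("need between 1 and num_gpus experiments (one GPU per learner)")
    base, extra = divmod(num_workers, num_experiments)
    pools, start = [], 0
    for i in range(num_experiments):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first pools
        pools.append(Pool(experiment=i, gpu=i, workers=range(start, start + size)))
        start += size
    return pools

for pool in split_workers(256, 3):
    print(pool.experiment, pool.gpu, len(pool.workers))
```

With 256 workers and 3 experiments this gives pools of 86, 85 and 85 workers on GPUs 0, 1 and 2.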

The DRL workload is very taxing on the CPUs, pegging them at 100% for days at a time. However, it's fairly light on the GPUs: each GPU in use sits at around 25% utilization for the duration of the experiment.

I also occasionally run supervised learning workloads, including fine-tuning open source LLMs. During these runs I tend to max out all GPUs, while the CPUs sit mostly idle.

Thermal Issues

Overheating ethernet controller (resolved)

While burning in the GPUs with gpu_burn, I found that the onboard 10GbE controller was going into thermal shutdown. After mucking about with a blower fan and trying to replace the OEM heatsink on the part, I solved the issue by 3D printing an external fan shroud and fitting a screamer of a 120mm fan to it (Sanyo San Ace 120, 1.47 inH2O static pressure, 134.3 CFM, part number 9GA1212P4G001). For more details, see this thread.

Very limited fan control options (unresolved - no fix to trial)

I also bought three more of those San Ace 120 fans to replace the rather anemic Rosewill case fans; however, once installed, they proved far too loud. The server is racked in my basement/garage, but the droning/whining fan noise is clearly audible throughout the main living area of my house.

I went to adjust their curves, but I quickly discovered that the fan curve tooling in the MZ72-HB0’s UEFI settings is rather wanting. You’re only able to have one curve active at a time, with one group of sensor inputs determining one percentage output that’s applied to all fans that are controlled by the curve. From what I can tell, there’s no way to split out e.g. SYS_FAN1 to a curve that’s dictated by the motherboard temperature, while having another curve running for the CPU fans that’s based on CPU and/or VRM temp.
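For reference, this is roughly the behaviour I'm after, sketched in Python: each fan zone follows the hottest of its own sensors through its own piecewise-linear curve. The zone names, sensor names and curve points are all hypothetical placeholders, not anything the MZ72-HB0 actually exposes.

```python
from bisect import bisect_right

# A curve is a list of (temperature_C, duty_percent) points sorted by temperature.
Curve = list[tuple[float, float]]

def duty_from_curve(curve: Curve, temp_c: float) -> float:
    """Linearly interpolate fan duty for a temperature, clamping at both ends."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # index of first point strictly hotter than temp_c
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)

# Hypothetical zones: each has its own sensor group and its own curve.
ZONES = {
    "cpu_fans": {"sensors": ["CPU0_TEMP", "CPU1_TEMP", "VRM_TEMP"],
                 "curve": [(40, 30), (70, 50), (85, 80), (95, 100)]},
    "sys_fan1": {"sensors": ["MB_TEMP"],
                 "curve": [(30, 25), (50, 40), (65, 100)]},
}

def zone_duties(readings: dict[str, float]) -> dict[str, float]:
    """Drive each zone from the hottest of its own sensors."""
    return {
        name: duty_from_curve(zone["curve"], max(readings[s] for s in zone["sensors"]))
        for name, zone in ZONES.items()
    }

# CPU/VRM values from the run described below; MB_TEMP is a made-up value.
readings = {"CPU0_TEMP": 82, "CPU1_TEMP": 69, "VRM_TEMP": 92, "MB_TEMP": 45}
print(zone_duties(readings))
```

With those readings the CPU zone is driven by the 92°C VRM reading to 94% duty, while SYS_FAN1 sits at 36.25% off its own sensor, which is exactly the split the UEFI won't let me configure.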

I thought I might set a static curve with all fans maxed out at 100% and then take control from the host OS once it booted. However, I haven't been able to find any way to control the fans directly from within the host OS. I'm sure there must be some way to set fan speed via IPMI or SMBus, but I can't find anything documented, and lm-sensors and friends don't detect anything in their probes.

I opened a thread on this topic, however it hasn’t really gained any traction. In the meantime I’ll poke around the IPMI registers as I find time to see if I can’t suss out how to control them manually from the host OS.
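On the host side, reading sensors is at least possible: `ipmitool sdr type Temperature` lists the BMC's temperature sensors. Below is a minimal parser, assuming the usual pipe-separated `name | id | status | entity | reading` layout; the sample text and sensor names are made up, so check against your own BMC's output. It only reads temps and does nothing about setting fan speeds, which is still the unresolved part.

```python
import re
import subprocess

# Numeric reading in the last column, e.g. "82 degrees C"; rows showing "No Reading" won't match.
_READING = re.compile(r"(-?\d+(?:\.\d+)?)\s+degrees C")

def parse_sdr_temps(text: str) -> dict[str, float]:
    """Parse pipe-separated `name | id | status | entity | reading` rows."""
    temps: dict[str, float] = {}
    for line in text.splitlines():
        fields = [f.strip() for f in line.split("|")]
        if len(fields) < 5:
            continue  # not a sensor row
        match = _READING.search(fields[4])
        if match:
            temps[fields[0]] = float(match.group(1))
    return temps

def read_bmc_temps() -> dict[str, float]:
    """Query the local BMC; needs ipmitool installed and root (or /dev/ipmi0 access)."""
    out = subprocess.run(["ipmitool", "sdr", "type", "Temperature"],
                         capture_output=True, text=True, check=True).stdout
    return parse_sdr_temps(out)

# Made-up sample in the assumed layout; real sensor names and IDs will differ.
SAMPLE = """\
CPU0_TEMP        | 31h | ok  |  3.1 | 82 degrees C
CPU1_TEMP        | 32h | ok  |  3.2 | 69 degrees C
VRM_TEMP         | 40h | ok  |  7.1 | 92 degrees C
DIMM_TEMP        | 50h | ns  |  8.1 | No Reading
"""
print(parse_sdr_temps(SAMPLE))
```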

CPU temps (unresolved - trialing a potential fix)

As I noted in the workload section above, I typically train with a single learner, but occasionally I run multiple simultaneous experiments by splitting my worker processes into N process pools, and training with N learners.

When I last did this, I noticed critical temperature alarms in the IPMI event log, with CPU0 reaching as high as 101°C. I'm still a bit confused as to how this happened, however, as the CPUs are just as loaded with a single-learner workload. I've since tried to reproduce it, and things seem rather stable.

For example, I've been running an experiment for the past ~18 hours. My CPU load averages are 170.82, 164.16, and 162.95; CPU0 is at 82°C, CPU1 at 69°C, and the VRMs at 92°C. My hottest GPU is the one in use by the learner, sitting at around 45°C. According to my cheap plug-in power monitor, the rig is currently drawing 1005W.
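Since the 101°C event hasn't reproduced on demand, one option is to log sensor samples continuously and pull out excursions afterwards, so a spike can be matched against whatever was running at the time. A minimal sketch, assuming samples arrive as time-ordered `(timestamp, sensor, temp_c)` tuples from whatever logger you use; `find_excursions`, the threshold and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable

@dataclass
class Excursion:
    sensor: str
    start: float  # timestamp of the first sample at/above the threshold
    end: float    # timestamp of the last sample at/above the threshold
    peak: float   # hottest reading during the excursion

def find_excursions(samples: Iterable[tuple[float, str, float]],
                    threshold: float) -> list[Excursion]:
    """Group consecutive over-threshold samples per sensor into excursions.

    `samples` must be (timestamp, sensor, temp_c) tuples in time order.
    """
    open_: dict[str, Excursion] = {}
    done: list[Excursion] = []
    for ts, sensor, temp in samples:
        current = open_.get(sensor)
        if temp >= threshold:
            if current is None:
                open_[sensor] = Excursion(sensor, ts, ts, temp)
            else:
                current.end = ts
                current.peak = max(current.peak, temp)
        elif current is not None:
            done.append(open_.pop(sensor))  # sensor dropped back below threshold
    done.extend(open_.values())  # excursions still in progress at end of log
    return done

# Synthetic samples (not real data): CPU0 spends two samples above 95°C.
samples = [(0, "CPU0", 82), (0, "CPU1", 69), (60, "CPU0", 96),
           (60, "CPU1", 70), (120, "CPU0", 101), (180, "CPU0", 88)]
print(find_excursions(samples, threshold=95))
```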

Repro or no, I don't like the way the Dynatron A39 on CPU1 exhausts into the inlet of CPU0's fan, so I ordered a pair of Noctua's NH-U14S SP3 coolers. From my measurements, it looks like these should just barely fit in my Rosewill chassis, and Noctua's compatibility table lists them as having good headroom for the 7773X.

Chassis airflow and fan noise (trialing a fix)

While ordering the new CPU chillers mentioned above, I also grabbed four Noctua NF-F12 industrialPPC 3000RPM fans and a pair of NF-A8 80mm fans for the rear chassis fan mounts. I realize these fans are rather anemic compared to the Sanyo San Ace 120s mentioned above, but I haven't been able to run those as internal case fans due to the noise and my inability to configure them to run at a reasonable RPM. As a result, the Noctuas only need to outperform the shockingly weak 120mm fans that came installed in the Rosewill chassis to be an improvement in this setup. I'd honestly be somewhat surprised if this didn't improve temps across the board.
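To put rough numbers on why a throttled San Ace would be attractive if I could control it, the fan affinity laws say airflow scales linearly with speed and static pressure with speed squared, and a common rule of thumb puts the change in sound level at about 50·log10(speed ratio) dB. A quick sketch using the San Ace's rated figures from above; real fans won't follow these idealized laws exactly, and `scale_fan` is just an illustrative helper.

```python
import math

def scale_fan(cfm: float, pressure_inh2o: float, speed_ratio: float) -> tuple[float, float, float]:
    """Estimate airflow, static pressure and sound-level change at a new fan speed.

    Idealized fan affinity laws: airflow ~ speed, static pressure ~ speed**2.
    Sound: rule-of-thumb change of ~50*log10(speed ratio) dB.
    """
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    return (cfm * speed_ratio,
            pressure_inh2o * speed_ratio ** 2,
            50 * math.log10(speed_ratio))

# San Ace 120 rated figures from above, run at 60% speed.
flow, pressure, delta_db = scale_fan(134.3, 1.47, 0.6)
print(f"{flow:.1f} CFM, {pressure:.2f} inH2O, {delta_db:+.1f} dB")
```

This prints `80.6 CFM, 0.53 inH2O, -11.1 dB`: on paper, still a lot of fan at a noticeably lower noise level.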

VRM temperatures (potential future issue)

My remaining concern is VRM temps. They're not ideal with the Dynatron A39s and the weaksauce Rosewill chassis fans, but they are borderline acceptable, maxing out at ~97°C (the current run noted above sits at 92°C). However, I understand (based on @David_dP's experience in this thread) that VRM temps can become an issue with dual NH-U14S chillers on the Gigabyte MZ72-HB0.

I also saw that @wendell called attention to the potential for this to be an issue in his video on GKH’s build server (related: his thread about the same server). Unfortunately I only noticed these sources after I ordered the NH-U14S chillers, and since they’re already on their way to me, I’m just going to see how it goes.

@wendell - if you have more specifics on your experience with this issue, I'd be much obliged. If the problem repeats in my setup, chances are high that I'll send back one of the NH-U14S chillers and either go with the smaller unit you used in GKH's server or just chuck one of the surplus A39s in there instead.