Sorry, I’ll have to show my ignorance here. I’d love to get into Stable Diffusion and need to replace my old Fury X for that. How do these results stack up against a P40 or a lower-end consumer Nvidia card like a 3060? I heard the Tensor Cores make a massive difference?

Just a rough guesstimate of the performance difference would be nice, thank you!

TL;DR: WMMA / Tensor Core instructions take 1-4 cycles

It’s a longer read, but a good one, and you’ll get a full understanding of the accelerator.

A 3060 should wreck the P40 without any doubt. I had one, and it did even better than the P100 found on Google Colab.

I do have a small table comparing performance on TF benchmarks; hope it’s useful for you:

| GPU (images/s) | FP32 batch 64 | FP32 batch 128 | FP32 batch 256 | FP32 batch 384 | FP32 batch 512 | FP16 batch 64 | FP16 batch 128 | FP16 batch 256 | FP16 batch 384 | FP16 batch 512 | FP16 batch 1024 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2060 Super | 172 | NA | NA | NA | NA | 405 | 444 | NA | NA | NA | NA |
| 3060 | 220 | NA | NA | NA | NA | 475 | 500 | NA | NA | NA | NA |
| 3080 | 396 | NA | NA | NA | NA | 900 | 947 | NA | NA | NA | NA |
| 3090 | 435 | 449 | 460 | OOM | NA | 1163 | 1217 | 1282 | 1311 | 1324 | OOM |
| V100 | 369 | 394 | NA | NA | NA | 975 | 1117 | NA | NA | NA | NA |
| A100 | 766 | 837 | 873 | 865 | OOM | 1892 | 2148 | 2379 | 2324 | 2492 | 2362 |
| Radeon VII (ROCm) | 288 | 304 | NA | NA | NA | 393 | 426 | NA | NA | NA | NA |
| 6800XT (DirectML) | NA | 63 | NA | NA | NA | NA | 52 | NA | NA | NA | NA |
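To put a rough number on the "Tensor Cores make a massive difference" question, the batch-64 columns above can be turned into an FP16-over-FP32 speedup per card. This is only arithmetic on the posted table (the 6800XT is left out because it has no batch-64 result), and the gain isn't purely Tensor Cores, since FP16 also halves memory traffic:

```python
# Images/s at batch size 64 as (FP32, FP16), copied from the TF benchmark table.
BATCH64 = {
    "2060 Super": (172, 405),
    "3060": (220, 475),
    "3080": (396, 900),
    "3090": (435, 1163),
    "V100": (369, 975),
    "A100": (766, 1892),
    "Radeon VII (ROCm)": (288, 393),
}


def fp16_speedup(fp32: float, fp16: float) -> float:
    """How many times faster FP16 is than FP32 on the same GPU."""
    return fp16 / fp32


for gpu, (fp32, fp16) in BATCH64.items():
    print(f"{gpu:18} FP16/FP32 = {fp16_speedup(fp32, fp16):.2f}x")
```

With these numbers the Tensor Core cards land at roughly 2.2-2.7x, while the Radeon VII gets about 1.4x.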

Does this use the model locally if you have downloaded it, or does it go over the web?

Locally

You can set AUTOMATIC1111 to host over your local network if you want, but the work is done on your machine and doesn’t need internet access outside of the initial setup.

Interesting. That must be why my setup only took about 30 seconds, since I had downloaded everything beforehand… perhaps that’s it.

I had this in flight with huggingface/accelerate (GitHub: "A simple way to train and use PyTorch models with multi-GPU, TPU, mixed-precision") but never got around to finishing it.

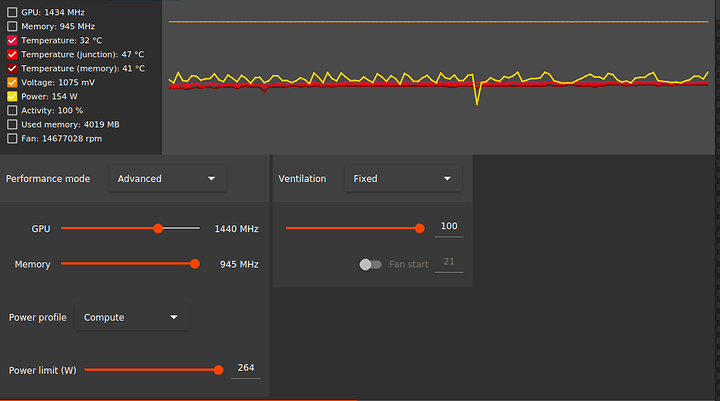

If you want to do Blender, you won’t need anything more than the WX9100 BIOS, since I haven’t seen it go beyond 155 W at 1440 MHz.

2560x1440, 512x512 tiles, 2048 samples: 34 minutes and 12 seconds
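For a feel of what that render time means, here is a quick back-of-the-envelope breakdown. It assumes "2048 samples" is the per-pixel sample count (as in Cycles) and that tiles are rendered one after another, so the per-tile figure is just an average:

```python
import math

# Render settings and time from the Blender run above.
WIDTH, HEIGHT = 2560, 1440
TILE = 512
SAMPLES = 2048  # assumed: samples per pixel
RENDER_SECONDS = 34 * 60 + 12  # 34 minutes 12 seconds

# Edge tiles are partial, so round up in each direction.
tiles = math.ceil(WIDTH / TILE) * math.ceil(HEIGHT / TILE)
samples_total = WIDTH * HEIGHT * SAMPLES

print(f"tiles: {tiles}")
print(f"average seconds per tile: {RENDER_SECONDS / tiles:.1f}")
print(f"pixel samples per second: {samples_total / RENDER_SECONDS / 1e6:.2f} M")
```

That works out to 15 tiles, about 137 seconds per tile, and roughly 3.68 million pixel samples per second.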

You may be able to use the Fury, but you’ll need incredibly old versions of Linux, ROCm, PyTorch and Stable Diffusion, and you’ll need the low-VRAM launch options to supplement with system RAM.

You need to check when they dropped support for it and get the last supported version.
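Once an old ROCm + PyTorch combo is installed, it's worth checking that the PyTorch build actually targets ROCm and can see the card before fighting with Stable Diffusion. Here is a minimal sketch that uses only standard PyTorch attributes: `torch.version.hip` is set on ROCm builds (it may be missing entirely on very old ones, hence the `getattr`), and ROCm builds still report GPUs through the `torch.cuda` namespace. In AUTOMATIC1111 the low-VRAM side is handled by the `--medvram` / `--lowvram` launch options, which keep parts of the model in system RAM. Whether an old release you end up on already has them is something you'd need to check.

```python
def describe_backend() -> str:
    """Report which GPU backend (if any) the installed PyTorch build targets."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    hip = getattr(torch.version, "hip", None)  # set on ROCm builds, else None
    cuda = torch.version.cuda  # set on CUDA builds, else None
    if hip:
        backend = f"ROCm/HIP {hip}"
    elif cuda:
        backend = f"CUDA {cuda}"
    else:
        backend = "CPU-only"
    # ROCm builds expose the GPU through torch.cuda as well.
    gpu_ok = torch.cuda.is_available()
    return f"PyTorch {torch.__version__}, {backend}, GPU visible: {gpu_ok}"


print(describe_backend())
```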

Oh interesting! I tried ROCm a few years back and gave up because I was running the Fury in my main machine under Windows. Since then I’ve started virtualizing things and have system RAM out the wazoo. I just need to figure out how to cram it into a Dell R620.

So maybe it’s time to try this again! Thanks for the info!

*All tested with a secondary GPU as the display output. With the tested card driving the display, it/s will be slightly lower and VRAM usage slightly higher.

Prompts used:

Positive: masterpiece, high quality, highres, dwarf, manly, shoulder armor, armored boots, armor, holding sword

Negative: lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name
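If you want to repeat these runs from a script instead of the UI, AUTOMATIC1111 exposes an HTTP API when launched with `--api` (add `--listen` to reach it from other machines on the LAN). The sketch below builds a `/sdapi/v1/txt2img` request from the prompts above. Some assumptions: the field names reflect recent webui versions and may differ on older builds, the host and port are assumed (7860 is the default), CFG scale and seed are left at the server defaults because they weren't posted, and the checkpoint (Anygen or Anything) is assumed to be selected in the UI.

```python
import json
import urllib.request

# Prompts and sampler settings from the tests in this thread.
PROMPT = ("masterpiece, high quality, highres, dwarf, manly, shoulder armor, "
          "armored boots, armor, holding sword")
NEGATIVE = ("lowres, bad anatomy, bad hands, text, error, missing fingers, "
            "extra digit, fewer digits, cropped, worst quality, low quality, "
            "normal quality, jpeg artifacts, signature, watermark, username, "
            "blurry, artist name")


def build_payload(size: int = 512, steps: int = 20) -> dict:
    """Build a txt2img request body for a square image."""
    return {
        "prompt": PROMPT,
        "negative_prompt": NEGATIVE,
        "sampler_name": "Euler a",
        "steps": steps,
        "width": size,
        "height": size,
    }


def txt2img(payload: dict, base_url: str = "http://127.0.0.1:7860") -> dict:
    """POST to a webui started with --api (assumed host/port; not called here)."""
    req = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


print(json.dumps(build_payload(768, 150), indent=2))
```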

MI25, WX9100 BIOS, 170 W: 1348 MHz set, 1170~1225 MHz observed

FP32

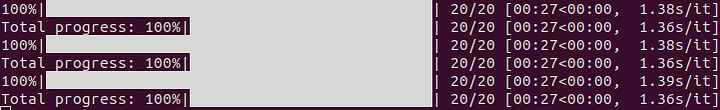

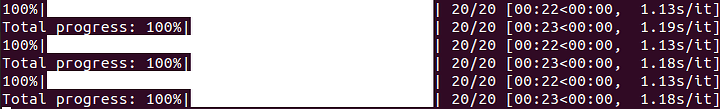

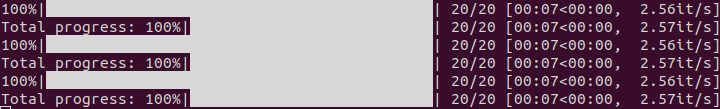

Anygen 3.7, FP32, Euler a, 20 steps

512x512: 7 seconds, 2.56 it/s, 8 GB

768x768: 27 seconds, 1.38 s/it, 12 GB

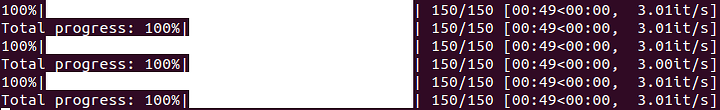

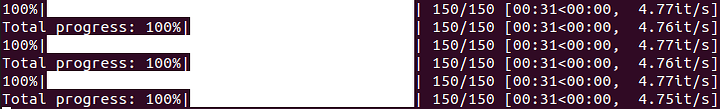

Anygen 3.7, FP32, Euler a, 150 steps

512x512: 58 seconds, 2.56 it/s, 8 GB

768x768: 3 minutes 27 seconds, 1.38 s/it, 12 GB
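These results mix it/s and s/it because progress bars like tqdm switch to s/it once a step takes longer than a second. A small helper that normalises both units lets you sanity-check each line as time ≈ steps × seconds per step. The 768x768 timings only add up if 1.38 is read as seconds per step:

```python
def seconds_per_step(rate: float, unit: str) -> float:
    """Normalise a progress-bar rate ("it/s" or "s/it") to seconds per step."""
    if unit == "it/s":
        return 1.0 / rate
    if unit == "s/it":
        return rate
    raise ValueError(f"unknown unit: {unit}")


def expected_seconds(steps: int, rate: float, unit: str) -> float:
    """Estimated sampling time, ignoring model load and VAE decode."""
    return steps * seconds_per_step(rate, unit)


# MI25 with WX9100 BIOS, FP32 results.
print(f"{expected_seconds(20, 2.56, 'it/s'):.1f}")   # 512x512, 20 steps
print(f"{expected_seconds(150, 2.56, 'it/s'):.1f}")  # 512x512, 150 steps
print(f"{expected_seconds(20, 1.38, 's/it'):.1f}")   # 768x768, 20 steps
print(f"{expected_seconds(150, 1.38, 's/it'):.1f}")  # 768x768, 150 steps
```

The estimates (about 7.8 s, 58.6 s, 27.6 s and 207 s, i.e. 3 minutes 27 seconds) line up with the posted timings.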

FP16

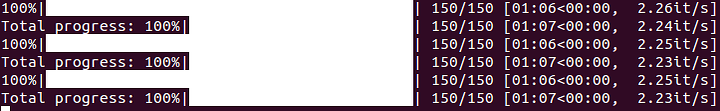

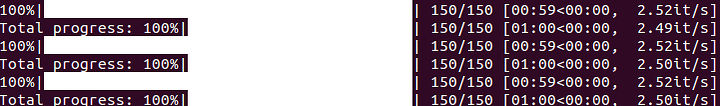

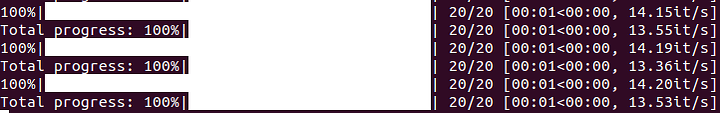

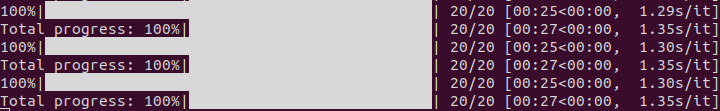

Anything V4.5 Pruned Euler a 20 step

512x512 9 seconds, 2.2 it/s 4.2GB

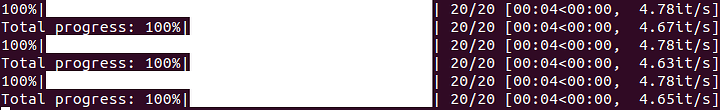

768x768 27 seconds, 1.33 s/it 11GB

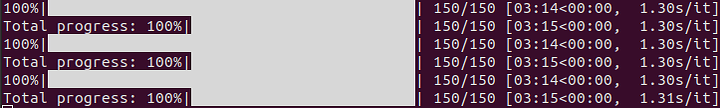

Anything V4.5 Pruned, Euler a, 150 steps

512x512: 1 minute 7 seconds, 2.24 it/s, 4.2 GB

768x768: 3 minutes 15 seconds, 1.31 s/it, 11 GB

MI25, Vega FE BIOS, 264 W: 1440 MHz set, 1360~1390 MHz observed

FP32

Anygen 3.7, FP32, Euler a, 20 steps

512x512: 6 seconds, 2.91 it/s, 8 GB

768x768: 24 seconds, 1.22 s/it, 12 GB

Anygen 3.7, FP32, Euler a, 150 steps

512x512: 51 seconds, 2.9 it/s, 8 GB

768x768: 3 minutes 6 seconds, 1.24 s/it, 12 GB

FP16

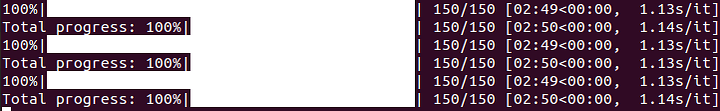

Anything V4.5 Pruned, Euler a, 20 steps

512x512: 8 seconds, 2.45 it/s, 4.2 GB

768x768: 23 seconds, 1.15 s/it, 11 GB

Anything V4.5 Pruned, Euler a, 150 steps

512x512: 1 minute, 2.5 it/s, 4.2 GB

768x768: 2 minutes 50 seconds, 1.13 s/it, 11 GB
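To see what the Vega FE BIOS buys over the WX9100 BIOS, you can compare seconds per step for the 20-step runs and set that against the clocks and BIOS power limits posted above. This is arithmetic on the thread's numbers only; it uses the midpoints of the observed ("GET") clock ranges, and the power figures are BIOS limits, not measured draw:

```python
# Seconds per step for the 20-step runs (it/s values inverted, s/it kept as is).
WX9100_BIOS = {  # 170 W BIOS
    ("FP32", 512): 1 / 2.56, ("FP32", 768): 1.38,
    ("FP16", 512): 1 / 2.2, ("FP16", 768): 1.33,
}
VEGAFE_BIOS = {  # 264 W BIOS
    ("FP32", 512): 1 / 2.91, ("FP32", 768): 1.22,
    ("FP16", 512): 1 / 2.45, ("FP16", 768): 1.15,
}


def speedup(baseline: dict, candidate: dict) -> dict:
    """Per-setting ratio; > 1 means the candidate takes less time per step."""
    return {k: baseline[k] / candidate[k] for k in baseline}


for (precision, size), ratio in speedup(WX9100_BIOS, VEGAFE_BIOS).items():
    print(f"{precision} {size}x{size}: {ratio:.2f}x")

# Midpoints of the observed clock ranges, and the BIOS power limits.
print(f"clock ratio: {((1360 + 1390) / 2) / ((1170 + 1225) / 2):.2f}x")
print(f"power limit ratio: {264 / 170:.2f}x")
```

That gives about 1.11-1.16x per step, close to the ~1.15x ratio of the observed clocks, for a ~1.55x higher power limit.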

MI210, 300 W

FP32

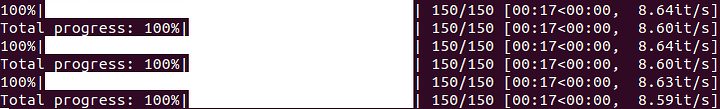

Anygen 3.7, FP32, Euler a, 20 steps

512x512: 2 seconds, 8.5 it/s, 8 GB

768x768: 6 seconds, 3 it/s, 20 GB

Anygen 3.7, FP32, Euler a, 150 steps

512x512: 17 seconds, 8.6 it/s, 8 GB

768x768: 49 seconds, 3 it/s, 20 GB

FP16

Anything V4.5 Pruned Euler a 20 step

512x512 1 second, 14 it/s 4.2GB

768x768 4 seconds, 4.6 it/s 11GB

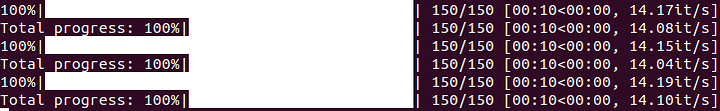

Anything V4.5 Pruned Euler a 150 step

512x512 10 seconds, 14.1 it/s 4.2GB

768x768 31 seconds, 4.7 it/s 11GB
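For a rough MI210 vs MI25 comparison, the 20-step results from both cards (MI25 on the Vega FE BIOS) can be converted to steps per second and divided. Only the posted numbers are used. Keep in mind that the FP16 gap will look inflated if the MI25's odd FP16 results mentioned later in the thread turn out to be a bug:

```python
# (value, unit) pairs from the 20-step runs.
MI25_VEGAFE = {
    ("FP32", 512): (2.91, "it/s"), ("FP32", 768): (1.22, "s/it"),
    ("FP16", 512): (2.45, "it/s"), ("FP16", 768): (1.15, "s/it"),
}
MI210 = {
    ("FP32", 512): (8.5, "it/s"), ("FP32", 768): (3.0, "it/s"),
    ("FP16", 512): (14.0, "it/s"), ("FP16", 768): (4.6, "it/s"),
}


def steps_per_second(value: float, unit: str) -> float:
    """Convert an it/s or s/it reading to steps per second."""
    return value if unit == "it/s" else 1.0 / value


for key in MI25_VEGAFE:
    ratio = steps_per_second(*MI210[key]) / steps_per_second(*MI25_VEGAFE[key])
    precision, size = key
    print(f"{precision} {size}x{size}: MI210 is {ratio:.2f}x faster")
```

With these numbers the MI210 is roughly 2.9-3.7x faster in FP32 and 5.3-5.7x faster in FP16.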

A6000

FP32

Anygen 3.7, FP32, Euler a, 20 steps

512x512

768x768

Anygen 3.7, FP32, Euler a, 150 steps

512x512

768x768

FP16

Anything V4.5 Pruned, Euler a, 20 steps

512x512

768x768

Anything V4.5 Pruned, Euler a, 150 steps

512x512

768x768

There might be a bug with the system I tested the MI25 with. I’m getting odd FP16/FP32 results and will retest.
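The oddity shows up clearly if you divide FP16 throughput by FP32 throughput for each card (20-step runs). Note that the FP32 and FP16 runs used different checkpoints (Anygen 3.7 FP32 vs Anything V4.5 Pruned), so this ratio mixes model and precision effects and is only indicative:

```python
# Steps per second for the 20-step runs as (512x512, 768x768);
# s/it results are inverted to steps per second.
RESULTS = {
    "MI25 (WX9100 BIOS)": {"FP32": (2.56, 1 / 1.38), "FP16": (2.2, 1 / 1.33)},
    "MI25 (Vega FE BIOS)": {"FP32": (2.91, 1 / 1.22), "FP16": (2.45, 1 / 1.15)},
    "MI210": {"FP32": (8.5, 3.0), "FP16": (14.0, 4.6)},
}


def fp16_gain(card: dict) -> tuple:
    """FP16 / FP32 throughput per resolution; below 1.0 means FP16 was slower."""
    return tuple(h / s for h, s in zip(card["FP16"], card["FP32"]))


for name, card in RESULTS.items():
    g512, g768 = fp16_gain(card)
    print(f"{name:20} 512: {g512:.2f}x  768: {g768:.2f}x")
```

The MI210 gets about 1.5-1.65x from FP16, while the MI25 is slower in FP16 at 512x512 (about 0.84-0.86x) and barely faster at 768x768 (about 1.04-1.06x). That is the kind of result that suggests a setup issue.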

In some teardown pictures, I noticed a 4-pin connector on the bottom right of the MI25 PCB. On the WX9100, that 4-pin connector is for the fan, and the components on the PCB are the same as on the MI25. Have you tested whether those pins work after flashing the WX9100 BIOS?

Not sure about the WX9100 BIOS, but they do work with the Vega FE BIOS.

In that case, I’ll get one and test it out!

This is pretty impressive performance, TBH: 16 GB of VRAM and about half the speed of an RTX 2060.

Can you tell me what the blower fan you used was? I want to attempt this myself.

I used one Wendell had around the office. I’m pretty sure it’s the same form factor as the ones Craft Computing recommends, just a little slower. I’ll try to get back to you with the exact video and SKU.

Hello, I am having this issue: the machine doesn’t even boot. It gives me error code 99 and the white GPU LED lights up. The computer tries to boot twice and then stays in that state. Any idea? I can’t even flash the card that way. Could you also share your motherboard model? Mine is an Asus B550-E with a Ryzen 3600 CPU. The seller sent me another MI25 card and it does exactly the same thing.

You need to disable CSM and enable Above 4G Decoding first, with the GPU not installed.

I wanted to play with these, but there are basically none in Europe, so shipping from the US, modding, flashing and all is kinda expensive, almost RTX 3060 territory. Is it worth getting the 12GB version of the RTX 3060?

Am I understanding correctly that more GPU memory means higher resolutions?

I think people do a second stage, upscaling the image afterwards. I’d say get the most Tensor Cores you can afford if speed’s the goal. Most of the SD models seem to be 3-4GB max, so they should run on the last 3 generations of cards.
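On the "more memory means higher resolutions" question: roughly, yes. Assuming an SD 1.x-style model (the VAE shrinks each side by 8x into a 4-channel latent), the highest-resolution self-attention layers see one token per latent cell. A naive attention implementation stores a tokens × tokens score matrix, so that part grows with the square of the pixel count. The sketch below only does that arithmetic. In practice, VRAM grows less steeply because the model weights are a fixed cost and memory-efficient attention avoids storing the full matrix:

```python
VAE_DOWNSAMPLE = 8  # assumed SD 1.x VAE: 512 px -> 64 latent cells per side
LATENT_CHANNELS = 4


def latent_shape(width: int, height: int) -> tuple:
    """(channels, height, width) of the latent the UNet denoises."""
    return (LATENT_CHANNELS, height // VAE_DOWNSAMPLE, width // VAE_DOWNSAMPLE)


def attention_tokens(width: int, height: int) -> int:
    """Tokens in the highest-resolution self-attention layers (one per latent cell)."""
    _, h, w = latent_shape(width, height)
    return h * w


base = attention_tokens(512, 512)
for size in (512, 768, 1024):
    t = attention_tokens(size, size)
    print(f"{size}x{size}: latent {latent_shape(size, size)}, {t} tokens, "
          f"attention matrix {(t / base) ** 2:.2f}x the 512x512 size")
```

Going from 512x512 to 768x768 is 2.25x the tokens but about 5x the naive attention matrix. Upscaling in a second pass sidesteps that cost.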

Nvidia does memory compression, so I think the 3060 would end up being less trouble and about the same perf.