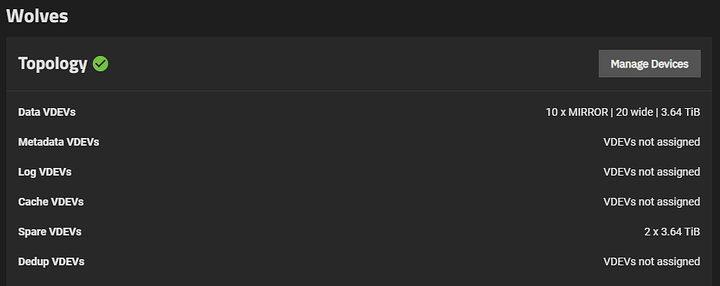

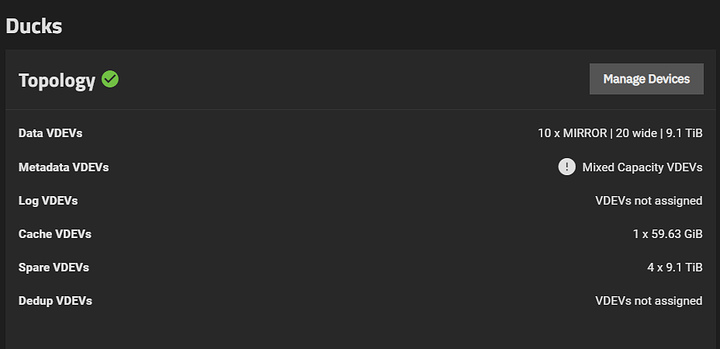

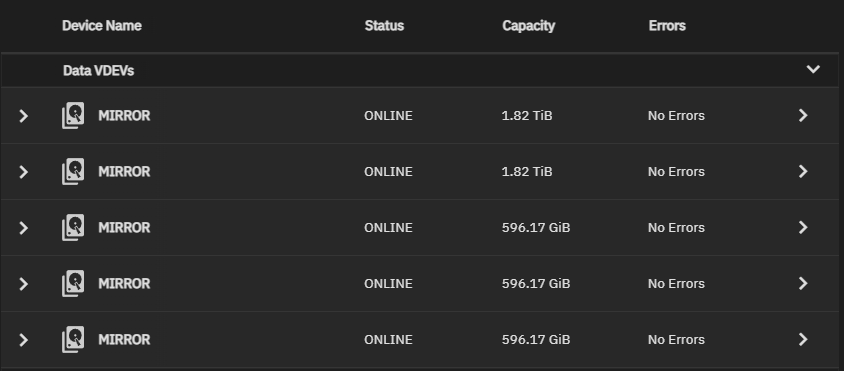

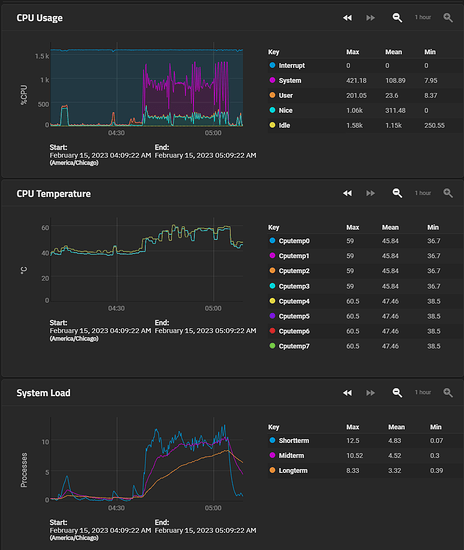

I added `--size=5GiB` and fio ran. I ran it on the zpool of 10x4TB mirrors rather than the other pool, because there are fewer variables that way:

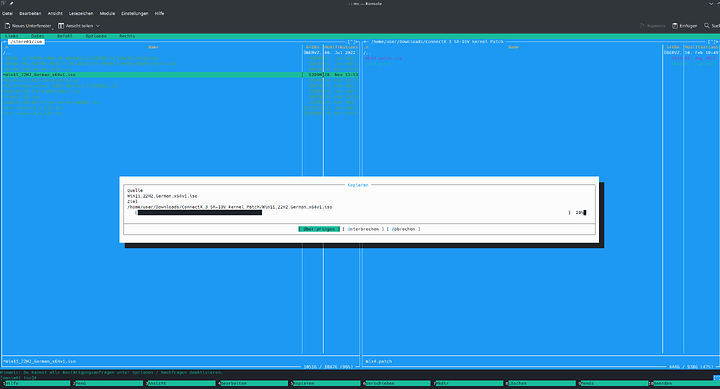

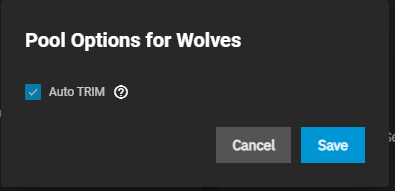

As another sanity check, I confirmed that Auto TRIM is enabled:

```
# fio --filename=/mnt/Wolves/Test/fio.tmp --direct=1 --rw=randrw --bs=8k --ioengine=libaio --iodepth=256 --runtime=120 --numjobs=4 --time_based --group_reporting --name=iops-test-job --eta-newline=1 --size=5GiB
iops-test-job: (g=0): rw=randrw, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=256
...
fio-3.25
Starting 4 processes
Jobs: 4 (f=4): [m(4)][2.5%][r=315MiB/s,w=315MiB/s][r=40.3k,w=40.4k IOPS][eta 01m:58s]
Jobs: 4 (f=4): [m(4)][4.1%][r=347MiB/s,w=347MiB/s][r=44.4k,w=44.4k IOPS][eta 01m:56s]
Jobs: 4 (f=4): [m(4)][5.0%][r=277MiB/s,w=273MiB/s][r=35.5k,w=34.0k IOPS][eta 01m:55s]
Jobs: 4 (f=4): [m(4)][5.8%][r=339MiB/s,w=342MiB/s][r=43.5k,w=43.8k IOPS][eta 01m:54s]
Jobs: 4 (f=4): [m(4)][6.6%][r=267MiB/s,w=265MiB/s][r=34.1k,w=33.9k IOPS][eta 01m:53s]
Jobs: 4 (f=4): [m(4)][7.4%][r=317MiB/s,w=318MiB/s][r=40.5k,w=40.7k IOPS][eta 01m:52s]
Jobs: 4 (f=4): [m(4)][8.3%][r=278MiB/s,w=278MiB/s][r=35.6k,w=35.6k IOPS][eta 01m:50s]
Jobs: 4 (f=4): [m(4)][9.2%][r=333MiB/s,w=333MiB/s][r=42.6k,w=42.6k IOPS][eta 01m:49s]
Jobs: 4 (f=4): [m(4)][10.0%][r=258MiB/s,w=258MiB/s][r=32.0k,w=32.0k IOPS][eta 01m:48s]
Jobs: 4 (f=4): [m(4)][11.7%][r=266MiB/s,w=269MiB/s][r=34.0k,w=34.4k IOPS][eta 01m:46s]
Jobs: 4 (f=4): [m(4)][12.5%][r=283MiB/s,w=284MiB/s][r=36.2k,w=36.3k IOPS][eta 01m:45s]
Jobs: 4 (f=4): [m(4)][13.3%][r=321MiB/s,w=321MiB/s][r=41.1k,w=41.1k IOPS][eta 01m:44s]
Jobs: 4 (f=4): [m(4)][14.2%][r=264MiB/s,w=268MiB/s][r=33.8k,w=34.3k IOPS][eta 01m:43s]
Jobs: 4 (f=4): [m(4)][15.0%][r=302MiB/s,w=302MiB/s][r=38.7k,w=38.7k IOPS][eta 01m:42s]
Jobs: 4 (f=4): [m(4)][15.8%][r=269MiB/s,w=271MiB/s][r=34.5k,w=34.6k IOPS][eta 01m:41s]
Jobs: 4 (f=4): [m(4)][17.5%][r=274MiB/s,w=275MiB/s][r=35.1k,w=35.2k IOPS][eta 01m:39s]
Jobs: 4 (f=4): [m(4)][18.3%][r=248MiB/s,w=249MiB/s][r=31.8k,w=31.9k IOPS][eta 01m:38s]
Jobs: 4 (f=4): [m(4)][20.0%][r=256MiB/s,w=254MiB/s][r=32.8k,w=32.5k IOPS][eta 01m:36s]
Jobs: 4 (f=4): [m(4)][21.8%][r=279MiB/s,w=279MiB/s][r=35.8k,w=35.7k IOPS][eta 01m:33s]
Jobs: 4 (f=4): [m(4)][22.5%][r=313MiB/s,w=314MiB/s][r=40.0k,w=40.2k IOPS][eta 01m:33s]
Jobs: 4 (f=4): [m(4)][23.3%][r=325MiB/s,w=329MiB/s][r=41.6k,w=42.1k IOPS][eta 01m:32s]
Jobs: 4 (f=4): [m(4)][24.2%][r=295MiB/s,w=294MiB/s][r=37.8k,w=37.6k IOPS][eta 01m:31s]
Jobs: 4 (f=4): [m(4)][26.1%][r=355MiB/s,w=351MiB/s][r=45.4k,w=44.0k IOPS][eta 01m:28s]
Jobs: 4 (f=4): [m(4)][26.7%][r=385MiB/s,w=383MiB/s][r=49.3k,w=48.0k IOPS][eta 01m:28s]
Jobs: 4 (f=4): [m(4)][27.5%][r=359MiB/s,w=357MiB/s][r=45.0k,w=45.7k IOPS][eta 01m:27s]
Jobs: 4 (f=4): [m(4)][28.3%][r=253MiB/s,w=255MiB/s][r=32.3k,w=32.6k IOPS][eta 01m:26s]
Jobs: 4 (f=4): [m(4)][29.2%][r=256MiB/s,w=254MiB/s][r=32.8k,w=32.5k IOPS][eta 01m:25s]
Jobs: 4 (f=4): [m(4)][30.0%][r=279MiB/s,w=280MiB/s][r=35.7k,w=35.8k IOPS][eta 01m:24s]
Jobs: 4 (f=4): [m(4)][31.1%][r=306MiB/s,w=302MiB/s][r=39.2k,w=38.6k IOPS][eta 01m:22s]
Jobs: 4 (f=4): [m(4)][31.7%][r=259MiB/s,w=262MiB/s][r=33.1k,w=33.6k IOPS][eta 01m:22s]
Jobs: 4 (f=4): [m(4)][33.3%][r=290MiB/s,w=288MiB/s][r=37.1k,w=36.8k IOPS][eta 01m:20s]
Jobs: 4 (f=4): [m(4)][34.2%][r=287MiB/s,w=285MiB/s][r=36.7k,w=36.5k IOPS][eta 01m:19s]
Jobs: 4 (f=4): [m(4)][35.0%][r=254MiB/s,w=254MiB/s][r=32.5k,w=32.6k IOPS][eta 01m:18s]
Jobs: 4 (f=4): [m(4)][36.7%][r=275MiB/s,w=275MiB/s][r=35.2k,w=35.2k IOPS][eta 01m:16s]
Jobs: 4 (f=4): [m(4)][38.3%][r=253MiB/s,w=250MiB/s][r=32.3k,w=32.0k IOPS][eta 01m:14s]
Jobs: 4 (f=4): [m(4)][39.2%][r=254MiB/s,w=255MiB/s][r=32.6k,w=32.7k IOPS][eta 01m:13s]
Jobs: 4 (f=4): [m(4)][40.0%][r=272MiB/s,w=273MiB/s][r=34.8k,w=34.9k IOPS][eta 01m:12s]
Jobs: 4 (f=4): [m(4)][40.8%][r=276MiB/s,w=273MiB/s][r=35.3k,w=34.0k IOPS][eta 01m:11s]
Jobs: 4 (f=4): [m(4)][42.5%][r=272MiB/s,w=273MiB/s][r=34.9k,w=34.0k IOPS][eta 01m:09s]
Jobs: 4 (f=4): [m(4)][43.7%][r=225MiB/s,w=225MiB/s][r=28.8k,w=28.8k IOPS][eta 01m:07s]
Jobs: 4 (f=4): [m(4)][44.2%][r=277MiB/s,w=276MiB/s][r=35.4k,w=35.3k IOPS][eta 01m:07s]
Jobs: 4 (f=4): [m(4)][45.0%][r=278MiB/s,w=278MiB/s][r=35.6k,w=35.6k IOPS][eta 01m:06s]
Jobs: 4 (f=4): [m(4)][45.8%][r=279MiB/s,w=278MiB/s][r=35.7k,w=35.6k IOPS][eta 01m:05s]
Jobs: 4 (f=4): [m(4)][47.5%][r=306MiB/s,w=304MiB/s][r=39.2k,w=38.9k IOPS][eta 01m:03s]
Jobs: 4 (f=4): [m(4)][48.3%][r=251MiB/s,w=254MiB/s][r=32.1k,w=32.5k IOPS][eta 01m:02s]
Jobs: 4 (f=4): [m(4)][49.2%][r=179MiB/s,w=181MiB/s][r=22.0k,w=23.1k IOPS][eta 01m:01s]
Jobs: 4 (f=4): [m(4)][50.8%][r=257MiB/s,w=259MiB/s][r=32.9k,w=33.2k IOPS][eta 00m:59s]
Jobs: 4 (f=4): [m(4)][52.1%][r=263MiB/s,w=258MiB/s][r=33.7k,w=33.0k IOPS][eta 00m:57s]
Jobs: 4 (f=4): [m(4)][52.9%][r=270MiB/s,w=271MiB/s][r=34.6k,w=34.7k IOPS][eta 00m:57s]
Jobs: 4 (f=4): [m(4)][53.7%][r=282MiB/s,w=284MiB/s][r=36.1k,w=36.4k IOPS][eta 00m:56s]
Jobs: 4 (f=4): [m(4)][54.5%][r=330MiB/s,w=331MiB/s][r=42.3k,w=42.3k IOPS][eta 00m:55s]
Jobs: 4 (f=4): [m(4)][56.2%][r=392MiB/s,w=394MiB/s][r=50.1k,w=50.5k IOPS][eta 00m:53s]
Jobs: 4 (f=4): [m(4)][57.9%][r=247MiB/s,w=248MiB/s][r=31.6k,w=31.8k IOPS][eta 00m:51s]
Jobs: 4 (f=4): [m(4)][59.5%][r=237MiB/s,w=238MiB/s][r=30.4k,w=30.5k IOPS][eta 00m:49s]
Jobs: 4 (f=4): [m(4)][61.2%][r=226MiB/s,w=224MiB/s][r=28.0k,w=28.7k IOPS][eta 00m:47s]
Jobs: 4 (f=4): [m(4)][62.5%][r=241MiB/s,w=243MiB/s][r=30.8k,w=31.0k IOPS][eta 00m:45s]
Jobs: 4 (f=4): [m(4)][62.8%][r=238MiB/s,w=238MiB/s][r=30.5k,w=30.5k IOPS][eta 00m:45s]
Jobs: 4 (f=4): [m(4)][63.6%][r=252MiB/s,w=250MiB/s][r=32.3k,w=32.0k IOPS][eta 00m:44s]
Jobs: 4 (f=4): [m(4)][65.0%][r=246MiB/s,w=245MiB/s][r=31.5k,w=31.4k IOPS][eta 00m:42s]
Jobs: 4 (f=4): [m(4)][66.1%][r=188MiB/s,w=188MiB/s][r=24.1k,w=24.1k IOPS][eta 00m:41s]
Jobs: 4 (f=4): [m(4)][67.8%][r=22.1MiB/s,w=22.7MiB/s][r=2824,w=2901 IOPS][eta 00m:39s]
Jobs: 4 (f=4): [m(4)][69.4%][r=139MiB/s,w=139MiB/s][r=17.8k,w=17.8k IOPS][eta 00m:37s]
Jobs: 4 (f=4): [m(4)][70.2%][r=93.9MiB/s,w=92.5MiB/s][r=12.0k,w=11.8k IOPS][eta 00m:36s]
Jobs: 4 (f=4): [m(4)][71.7%][r=264MiB/s,w=262MiB/s][r=33.8k,w=33.5k IOPS][eta 00m:34s]
Jobs: 4 (f=4): [m(4)][72.5%][r=262MiB/s,w=260MiB/s][r=33.5k,w=33.3k IOPS][eta 00m:33s]
Jobs: 4 (f=4): [m(4)][73.3%][r=245MiB/s,w=246MiB/s][r=31.3k,w=31.4k IOPS][eta 00m:32s]
Jobs: 4 (f=4): [m(4)][74.2%][r=248MiB/s,w=246MiB/s][r=31.8k,w=31.4k IOPS][eta 00m:31s]
Jobs: 4 (f=4): [m(4)][75.0%][r=276MiB/s,w=272MiB/s][r=35.3k,w=34.8k IOPS][eta 00m:30s]
Jobs: 4 (f=4): [m(4)][75.8%][r=238MiB/s,w=239MiB/s][r=30.5k,w=30.6k IOPS][eta 00m:29s]
Jobs: 4 (f=4): [m(4)][76.7%][r=250MiB/s,w=247MiB/s][r=32.0k,w=31.6k IOPS][eta 00m:28s]
Jobs: 4 (f=4): [m(4)][77.5%][r=245MiB/s,w=243MiB/s][r=31.3k,w=31.1k IOPS][eta 00m:27s]
Jobs: 4 (f=4): [m(4)][78.3%][r=245MiB/s,w=243MiB/s][r=31.4k,w=31.1k IOPS][eta 00m:26s]
Jobs: 4 (f=4): [m(4)][79.2%][r=242MiB/s,w=242MiB/s][r=30.0k,w=31.0k IOPS][eta 00m:25s]
Jobs: 4 (f=4): [m(4)][80.0%][r=255MiB/s,w=255MiB/s][r=32.6k,w=32.6k IOPS][eta 00m:24s]
Jobs: 4 (f=4): [m(4)][80.8%][r=255MiB/s,w=252MiB/s][r=32.6k,w=32.2k IOPS][eta 00m:23s]
Jobs: 4 (f=4): [m(4)][81.7%][r=261MiB/s,w=259MiB/s][r=33.4k,w=33.1k IOPS][eta 00m:22s]
Jobs: 4 (f=4): [m(4)][82.5%][r=231MiB/s,w=232MiB/s][r=29.6k,w=29.7k IOPS][eta 00m:21s]
Jobs: 4 (f=4): [m(4)][84.2%][r=270MiB/s,w=274MiB/s][r=34.6k,w=35.0k IOPS][eta 00m:19s]
Jobs: 4 (f=4): [m(4)][85.8%][r=272MiB/s,w=269MiB/s][r=34.8k,w=34.4k IOPS][eta 00m:17s]
Jobs: 4 (f=4): [m(4)][87.5%][r=79.5MiB/s,w=78.1MiB/s][r=10.2k,w=9997 IOPS][eta 00m:15s]
Jobs: 4 (f=4): [m(4)][89.9%][r=316MiB/s,w=319MiB/s][r=40.4k,w=40.8k IOPS][eta 00m:12s]
Jobs: 4 (f=4): [m(4)][90.8%][r=423MiB/s,w=423MiB/s][r=54.1k,w=54.2k IOPS][eta 00m:11s]
Jobs: 4 (f=4): [m(4)][91.7%][r=244MiB/s,w=245MiB/s][r=31.3k,w=31.4k IOPS][eta 00m:10s]
Jobs: 4 (f=4): [m(4)][93.3%][r=105MiB/s,w=105MiB/s][r=13.4k,w=13.4k IOPS][eta 00m:08s]
Jobs: 4 (f=4): [m(4)][95.0%][r=19.6MiB/s,w=20.2MiB/s][r=2508,w=2587 IOPS][eta 00m:06s]
Jobs: 4 (f=4): [m(4)][96.7%][r=209MiB/s,w=212MiB/s][r=26.8k,w=27.1k IOPS][eta 00m:04s]
Jobs: 4 (f=4): [m(4)][97.5%][r=242MiB/s,w=239MiB/s][r=30.9k,w=30.6k IOPS][eta 00m:03s]
Jobs: 4 (f=4): [m(4)][98.3%][r=228MiB/s,w=226MiB/s][r=29.2k,w=28.0k IOPS][eta 00m:02s]
Jobs: 4 (f=4): [m(4)][100.0%][r=244MiB/s,w=243MiB/s][r=31.3k,w=31.1k IOPS][eta 00m:00s]

iops-test-job: (groupid=0, jobs=4): err= 0: pid=3584699: Tue Feb 14 23:57:32 2023
  read: IOPS=32.8k, BW=256MiB/s (269MB/s)(30.0GiB/120001msec)
    slat (usec): min=2, max=174369, avg=26.36, stdev=359.56
    clat (usec): min=3, max=601144, avg=15563.34, stdev=17856.30
     lat (usec): min=41, max=601174, avg=15589.88, stdev=17864.14
    clat percentiles (msec):
     | 1.00th=[ 4], 5.00th=[ 4], 10.00th=[ 5], 20.00th=[ 6],
     | 30.00th=[ 7], 40.00th=[ 9], 50.00th=[ 12], 60.00th=[ 16],
     | 70.00th=[ 18], 80.00th=[ 22], 90.00th=[ 29], 95.00th=[ 36],
     | 99.00th=[ 87], 99.50th=[ 124], 99.90th=[ 218], 99.95th=[ 247],
     | 99.99th=[ 330]
   bw (  KiB/s): min=16320, max=579184, per=100.00%, avg=262231.26, stdev=25518.87, samples=948
   iops        : min= 2040, max=72398, avg=32778.16, stdev=3189.83, samples=948
  write: IOPS=32.8k, BW=256MiB/s (268MB/s)(29.0GiB/120001msec); 0 zone resets
    slat (usec): min=5, max=492910, avg=90.23, stdev=751.61
    clat (usec): min=3, max=601137, avg=15566.67, stdev=17838.24
     lat (usec): min=145, max=601289, avg=15657.13, stdev=17944.25
    clat percentiles (msec):
     | 1.00th=[ 4], 5.00th=[ 4], 10.00th=[ 5], 20.00th=[ 6],
     | 30.00th=[ 7], 40.00th=[ 9], 50.00th=[ 12], 60.00th=[ 16],
     | 70.00th=[ 18], 80.00th=[ 22], 90.00th=[ 29], 95.00th=[ 36],
     | 99.00th=[ 87], 99.50th=[ 124], 99.90th=[ 218], 99.95th=[ 247],
     | 99.99th=[ 330]
   bw (  KiB/s): min=15792, max=582944, per=99.99%, avg=262011.22, stdev=25442.28, samples=948
   iops        : min= 1974, max=72868, avg=32750.58, stdev=3180.25, samples=948
  lat (usec)   : 4=0.01%, 10=0.01%, 50=0.01%, 100=0.01%, 250=0.01%
  lat (usec)   : 500=0.01%, 750=0.01%, 1000=0.01%
  lat (msec)   : 2=0.01%, 4=7.66%, 10=36.45%, 20=32.00%, 50=21.50%
  lat (msec)   : 100=1.61%, 250=0.74%, 500=0.04%, 750=0.01%
  cpu          : usr=2.86%, sys=33.05%, ctx=1580534, majf=0, minf=78
  IO depths    : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit    : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete  : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=3933550,3930407,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency   : target=0, window=0, percentile=100.00%, depth=256

Run status group 0 (all jobs):
   READ: bw=256MiB/s (269MB/s), 256MiB/s-256MiB/s (269MB/s-269MB/s), io=30.0GiB (32.2GB), run=120001-120001msec
  WRITE: bw=256MiB/s (268MB/s), 256MiB/s-256MiB/s (268MB/s-268MB/s), io=29.0GiB (32.2GB), run=120001-120001msec
```
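One way to read the fio summary is to check that its numbers agree with each other. The minimal Python sketch below uses only values copied from the run (`--bs=8k`, `--numjobs=4`, `--iodepth=256`, and the reported IOPS); the helper names are hypothetical. Bandwidth should equal IOPS times block size, and by Little's law the mean latency should be roughly the number of I/Os in flight divided by the total IOPS. Little's law is only an approximation here and assumes the queues stay full, which the `IO depths : >=64=100.0%` line suggests.

```python
KIB = 1024
MIB = 1024 * KIB


def bandwidth_mib_s(iops: float, block_size_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a fixed block size."""
    return iops * block_size_bytes / MIB


def littles_law_latency_ms(outstanding_ios: int, total_iops: float) -> float:
    """Little's law: mean latency = requests in flight / completion rate."""
    return outstanding_ios / total_iops * 1000.0


# Values copied from the fio command line and summary.
block_size = 8 * KIB      # --bs=8k
outstanding = 4 * 256     # --numjobs=4 x --iodepth=256 = 1024 I/Os in flight
read_iops = 32.8e3        # read: IOPS=32.8k
write_iops = 32.8e3       # write: IOPS=32.8k

read_bw = bandwidth_mib_s(read_iops, block_size)
latency = littles_law_latency_ms(outstanding, read_iops + write_iops)

print(f"implied read bandwidth: {read_bw:.0f} MiB/s")  # fio reported BW=256MiB/s
print(f"implied mean latency:   {latency:.1f} ms")     # fio reported clat avg=15563.34 usec
```

Both results line up with what fio printed (256 MiB/s and a mean clat of about 15.6 ms), which suggests the 256 MiB/s figure is a consistent consequence of 8 KiB random I/O with 1,024 requests in flight rather than a reporting glitch.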

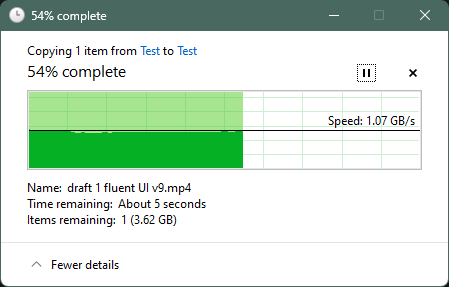

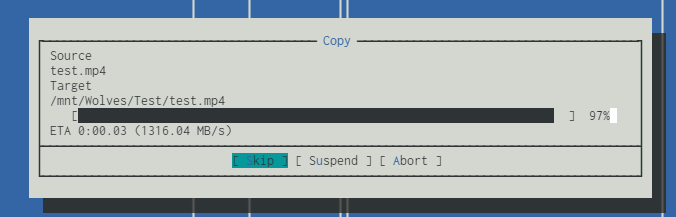

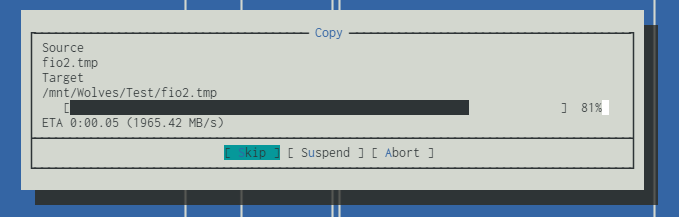

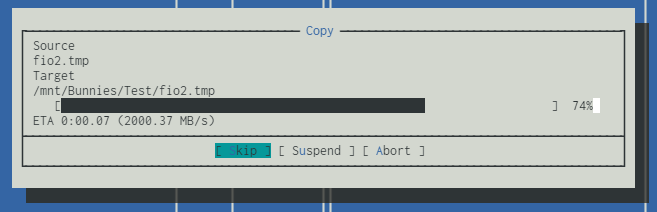

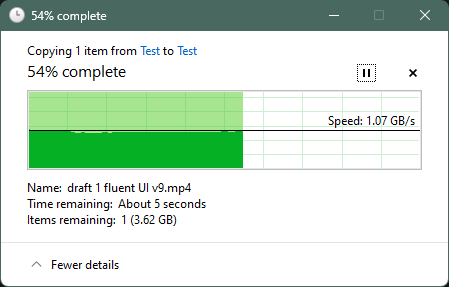

After that, I tried another file copy and got the maximum write speed over 10GbE copper:

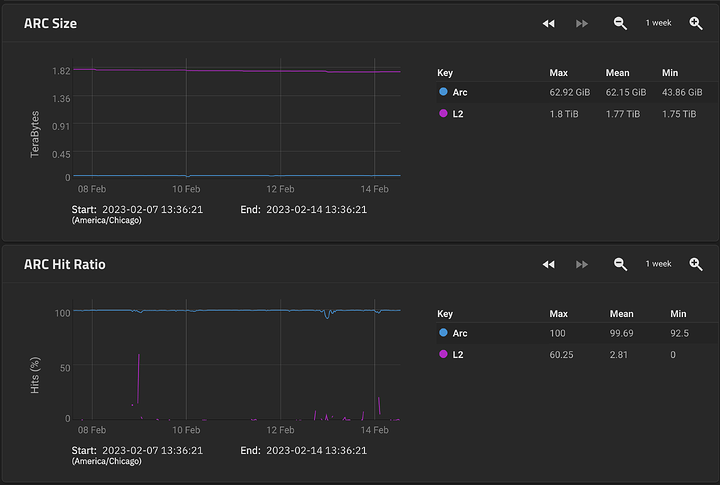

Does that mean the copy is probably all being written to ARC?

That is much higher than what fio showed, though, and I'm not sure how to read or interpret the fio stats.
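For comparison, here is a rough ceiling for a 10GbE link next to fio's write figure. This minimal sketch ignores Ethernet, TCP, and SMB overhead, and it assumes the file copy was a large sequential transfer. The fio job, by contrast, was 8 KiB random I/O with reads and writes mixed (`--rw=randrw`, which defaults to a 50/50 split), so the two numbers measure different workloads.

```python
MIB = 1024 * 1024


def link_ceiling_mib_s(bits_per_second: float) -> float:
    """Raw line rate in MiB/s; real payload rates will be somewhat lower."""
    return bits_per_second / 8 / MIB


ceiling = link_ceiling_mib_s(10e9)  # nominal 10GbE line rate
fio_write = 256                     # WRITE: bw=256MiB/s from the fio run
fio_combined = 2 * fio_write        # fio was also reading 256 MiB/s at the same time

print(f"10GbE ceiling:      ~{ceiling:.0f} MiB/s")
print(f"fio random writes:   {fio_write} MiB/s ({fio_write / ceiling:.0%} of the link)")
print(f"fio reads + writes:  {fio_combined} MiB/s")
```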

If you can make sense of them, here are the `zpool iostat` stats for the same pool:

```
# zpool iostat -v Wolves -l
                                           capacity     operations     bandwidth    total_wait     disk_wait    syncq_wait    asyncq_wait   scrub   trim
pool                                      alloc   free   read  write   read  write   read  write   read  write   read  write   read  write   wait   wait
----------------------------------------  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----
Wolves                                    15.4T  20.9T      0     38  10.6K  4.30M  611us   18ms  597us    1ms  878ns  671ns    4us   17ms   19ms      -
  mirror-0                                2.77T   872G      0      3    985   368K  620us   17ms  618us    1ms  835ns  673ns  983ns   16ms    2ms      -
    5dfaf5ee-a57f-44cb-acf6-b97130bc2ee1      -      -      0      1    492   184K  611us   17ms  608us    1ms  838ns  709ns  982ns   16ms    2ms      -
    2da54a3c-8725-478e-adaf-1a7a53c0890b      -      -      0      1    492   184K  630us   17ms  628us    1ms  831ns  637ns  984ns   16ms    2ms      -
  mirror-1                                2.84T   799G      0      2  1.01K   349K  564us   17ms  563us    1ms  830ns  672ns    1us   16ms      -      -
    2bbaffd9-ac67-4239-b3d7-81046dc4bd24      -      -      0      1    525   175K  578us   17ms  577us    1ms  854ns  715ns    1us   16ms      -      -
    e631eb85-1ab7-40b5-8161-727e8d1a9a3a      -      -      0      1    512   175K  550us   17ms  549us    1ms  807ns  630ns    1us   16ms      -      -
  mirror-2                                2.88T   768G      0      3   1020   350K  631us   17ms  629us    1ms  839ns  681ns    1us   16ms    2ms      -
    f287f68c-2293-4d4b-abde-e6e019faf73b      -      -      0      1    497   175K  643us   17ms  641us    1ms  827ns  728ns  962ns   16ms    2ms      -
    e471104b-c2ab-4e2b-9c7c-14aa187aef32      -      -      0      1    523   175K  619us   17ms  617us    1ms  853ns  634ns    1us   16ms    2ms      -
  mirror-3                                1.23T  2.39T      0      4  1.07K   462K  655us   19ms  632us    1ms    1us  672ns    3us   18ms   19ms      -
    33dcaafb-6eae-4931-8743-20f9b32d44fd      -      -      0      2    579   231K  716us   18ms  683us    1ms  888ns  718ns    3us   17ms   32ms      -
    4fa1a66c-effc-4531-b5c0-8c514293055d      -      -      0      2    516   231K  586us   19ms  576us    1ms    1us  627ns    4us   18ms    7ms      -
  mirror-4                                1.27T  2.36T      0      4  1.11K   477K  655us   17ms  579us    1ms  856ns  675ns    7us   16ms   42ms      -
    d2edc92c-4250-40c4-b382-0beb39ab8d6d      -      -      0      2    586   239K  589us   17ms  554us    1ms  864ns  715ns    5us   16ms   18ms      -
    0da118f2-7df8-42ee-8a94-fa02dcca1b96      -      -      0      2    546   239K  726us   17ms  605us    1ms  847ns  635ns    8us   16ms   68ms      -
  mirror-5                                1.26T  2.37T      0      4  1.09K   480K  553us   16ms  544us    1ms  857ns  667ns    3us   15ms    6ms      -
    11fcc837-6d7c-466e-b2c0-a0d5e8c82fa5      -      -      0      2    568   240K  529us   16ms  519us    1ms  848ns  705ns    4us   15ms    7ms      -
    435d8322-9143-42fe-803b-7b6abbe75baa      -      -      0      2    552   240K  580us   16ms  572us    1ms  866ns  629ns    3us   15ms    5ms      -
  mirror-6                                1.26T  2.36T      0      4  1.08K   478K  563us   17ms  560us    1ms  843ns  665ns    5us   16ms    2ms      -
    dc9cafbd-4c82-4ae0-a3be-b47ed18fa6ac      -      -      0      2    556   239K  562us   17ms  558us    1ms  844ns  708ns    8us   16ms    2ms      -
    3bb29f56-8158-4ac9-8d25-8aff78bd1f7f      -      -      0      2    545   239K  564us   17ms  562us    1ms  842ns  621ns    3us   16ms    2ms      -
  mirror-7                                1.25T  2.37T      0      3  1.09K   456K  580us   18ms  578us    1ms  862ns  663ns    3us   18ms      -      -
    6084d12e-e15c-4f89-8bd6-396bc723fb84      -      -      0      1    551   228K  562us   17ms  561us    1ms  877ns  707ns    2us   16ms      -      -
    72fa67ef-c81d-4b98-a7b7-aa192e1625ea      -      -      0      1    569   228K  597us   20ms  596us    1ms  847ns  618ns    3us   19ms      -      -
  mirror-8                                 320G  3.31T      0      4  1.06K   488K  633us   19ms  629us    1ms  926ns  667ns    6us   18ms    1ms      -
    e8bc4881-36f2-45f3-9795-597ce62e0e05      -      -      0      2    556   244K  601us   19ms  598us    1ms  934ns  706ns    3us   18ms  105us      -
    2b55a8d1-a442-45b8-8431-853ca7b10e9b      -      -      0      2    532   244K  668us   19ms  663us    1ms  918ns  627ns    9us   18ms    4ms      -
  mirror-9                                 310G  3.32T      0      4  1.07K   498K  672us   19ms  668us    1ms  917ns  671ns    8us   19ms      -      -
    0c8790ab-d731-4ae2-8b4d-f134b02929d2      -      -      0      2    559   249K  712us   19ms  709us    1ms  916ns  714ns    6us   19ms      -      -
    ec03bf0d-4168-418f-b83b-7e21e457ca83      -      -      0      2    538   249K  633us   19ms  627us    1ms  917ns  628ns   10us   19ms      -      -
----------------------------------------  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----  -----
```
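One way to read the `zpool iostat -v` output is that each row aggregates the rows beneath it. The minimal Python sketch below sums the write-bandwidth column of the ten mirror rows (values copied from the output) and compares the total with the `Wolves` row. It assumes zpool's K/M/G/T suffixes are powers of 1024, and `parse_size` is a hypothetical helper. Note also that without an interval argument, `zpool iostat` prints averages accumulated since boot rather than current activity, which is why the rates look so small.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text: str) -> float:
    """Convert a zpool-style value such as '368K' or '4.30M' to bytes (assumes base 1024)."""
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


# Write bandwidth of mirror-0 .. mirror-9, copied from the output.
mirror_writes = ["368K", "349K", "350K", "462K", "477K",
                 "480K", "478K", "456K", "488K", "498K"]

total = sum(parse_size(v) for v in mirror_writes)
pool_row = parse_size("4.30M")  # Wolves row, write bandwidth

print(f"sum of mirrors: {total / UNITS['M']:.2f}M vs pool row {pool_row / UNITS['M']:.2f}M")

# Each mirror row is itself the sum of its two disks (mirror-0: 184K + 184K = 368K),
# so the pool figure counts every mirrored block twice; logical writes are roughly half.
print(f"approx. logical write rate: {total / 2 / UNITS['K']:.0f}K")
```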