I’m having performance problems with my 25Gb SFP28 fiber modules: 10Gb copper Ethernet is faster.

SKIP TO: Link speed slower over fiber SFP28 vs cooper SFP+? - #121 by Sawtaytoes. The situation there is unchanged (the SAME EXACT ISSUE), but it’s presented better.

Hardware

I have a UniFi USW-Pro-Aggregation switch and a Mellanox ConnectX-6 NIC installed in a PCIe 4.0 x4 slot on my PC. My ConnectX-6 has two SFP28 ports.
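
A quick sanity check on the slot, as a back-of-the-envelope sketch: PCIe 4.0 runs at 16 GT/s per lane with 128b/130b encoding, so an x4 link should carry roughly 63 Gb/s per direction before protocol overhead. This assumes the card actually negotiates PCIe 4.0 x4 in this slot, which is worth confirming.

```python
# Back-of-the-envelope PCIe bandwidth check.
# Assumption: the NIC negotiates the full PCIe 4.0 x4 link. TLP/DLLP protocol
# overhead is ignored, so real-world throughput will be somewhat lower.

GT_PER_LANE = {"3.0": 8.0, "4.0": 16.0}  # gigatransfers per second, per lane
ENCODING = 128 / 130                     # PCIe 3.0+ line encoding efficiency


def pcie_gbps(gen: str, lanes: int) -> float:
    """Approximate per-direction PCIe bandwidth in Gb/s after line encoding."""
    return GT_PER_LANE[gen] * lanes * ENCODING


if __name__ == "__main__":
    slot = pcie_gbps("4.0", 4)
    nic = 2 * 25  # both SFP28 ports at full 25 Gb/s line rate
    print(f"PCIe 4.0 x4: ~{slot:.1f} Gb/s per direction")
    print(f"Both SFP28 ports at line rate: {nic} Gb/s")
    print("Slot is a bottleneck" if slot < nic else "Slot is not a bottleneck")
```

Even PCIe 3.0 x4 (~31.5 Gb/s) would cover a single 25Gb port, so on paper the slot shouldn’t explain speeds below 10Gb.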

Since I have no other 25Gb devices, I’m testing everything to my NAS with its 2x10Gb adapters in LACP mode (meaning I’m limited to a max of 10Gb per connection).
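
Why LACP caps a single connection at 10Gb: the switch (or the NAS bond) hashes each flow’s addresses and ports to pick one member link, so every packet of that flow goes over the same 10Gb port. The hash below is a hypothetical stand-in using CRC32; the real UniFi and TrueNAS hash policies differ, but the per-flow behavior is the same idea. The IP addresses and source ports are made up for illustration.

```python
import zlib


def pick_member(src_ip: str, dst_ip: str, src_port: int, dst_port: int, n_links: int) -> int:
    """Hypothetical layer3+4-style hash: map one flow to one LAG member link.

    Real switches and Linux bonding use their own hash functions; CRC32 here
    only illustrates that the choice depends on the flow, not on the packet.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links


if __name__ == "__main__":
    # Hypothetical addresses; 445 is the standard SMB port.
    pc, nas = "192.168.1.10", "192.168.1.20"
    # One SMB connection: every packet hashes to the same member link.
    print("single SMB flow ->", {pick_member(pc, nas, 50000, 445, 2) for _ in range(1000)})
    # Several connections with different source ports can land on different links.
    print("8 flows ->", [pick_member(pc, nas, p, 445, 2) for p in range(50000, 50008)])
```

Whether several connections actually spread across both NAS ports depends on the hash policy configured on the switch and the NAS.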

Testing

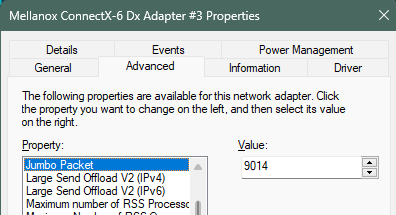

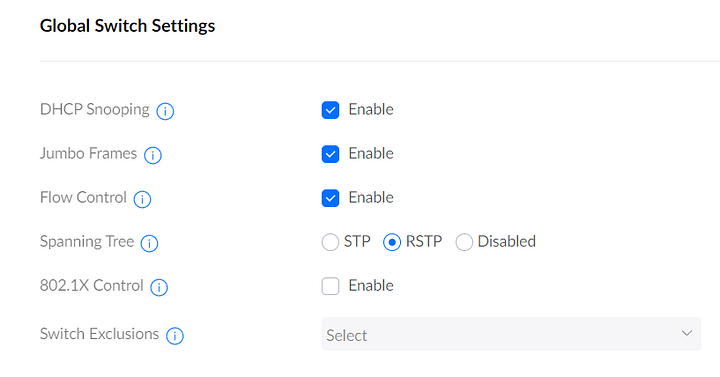

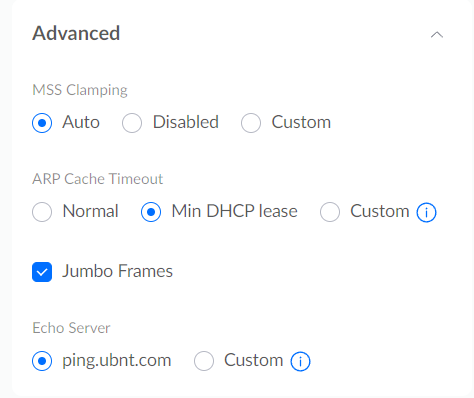

NOTE: I tried Jumbo Frames but had connection issues, so I might need to enable them at the switch level in UniFi.
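
On the Jumbo Frames question, the raw framing math is easy to sketch. Assuming IPv4 and TCP without options, every frame carries 40 bytes of IP/TCP headers plus 38 bytes of Ethernet overhead on the wire (header, FCS, preamble, inter-frame gap). That puts MTU 1500 at about 95% efficiency and MTU 9000 at about 99%.

```python
# Ethernet framing overhead per frame on the wire, in bytes:
# 14 header + 4 FCS + 8 preamble/SFD + 12 inter-frame gap.
ETH_OVERHEAD = 14 + 4 + 8 + 12
# Assumption: IPv4 + TCP with no options (TCP timestamps would add 12 more bytes).
IP_TCP_HEADERS = 20 + 20


def tcp_efficiency(mtu: int) -> float:
    """Fraction of wire time that carries TCP payload at a given MTU."""
    payload = mtu - IP_TCP_HEADERS
    return payload / (mtu + ETH_OVERHEAD)


if __name__ == "__main__":
    line_rate_mb = 10_000 / 8  # 10 Gb/s expressed in decimal MB/s
    for mtu in (1500, 9000):
        eff = tcp_efficiency(mtu)
        print(f"MTU {mtu}: {eff:.1%} efficient, ~{line_rate_mb * eff:.0f} MB/s max payload on 10GbE")
```

On paper that’s only a few percent, so Jumbo Frames alone probably wouldn’t explain a 600 vs 1100MB/s gap, though they can also cut per-packet CPU work. Every device in the path (NIC, switch, NAS) needs a matching MTU, which may be related to the connection issues in the note above.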

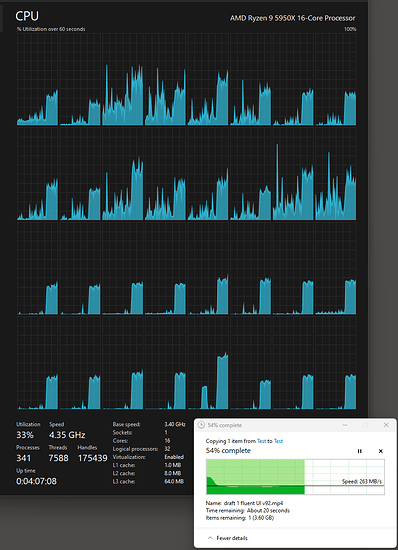

I tested my NIC with both an SFP28 fiber transceiver and an SFP+ 10Gb copper module.

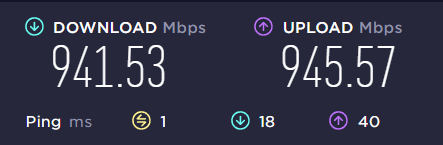

iperf3 showed the same ~10Gb maximum with both transceivers. This is good.
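
One thing worth double-checking: by default iperf3 measures client-to-server traffic (PC to NAS, the same direction as an SMB write). The -R flag reverses it (NAS to PC, like an SMB read), and -P runs parallel streams. This sketch runs a test and pulls the totals out of iperf3’s -J JSON output; the hostname in the example is hypothetical.

```python
import json
import subprocess


def parse_iperf3_json(text: str) -> tuple[float, float]:
    """Return (sent, received) throughput in Gb/s from `iperf3 -J` output."""
    end = json.loads(text)["end"]
    sent = end["sum_sent"]["bits_per_second"] / 1e9
    received = end["sum_received"]["bits_per_second"] / 1e9
    return sent, received


def run_iperf3(server: str, reverse: bool = False, streams: int = 1) -> tuple[float, float]:
    """Run an iperf3 client test against `server` and return (sent, received) Gb/s.

    reverse=True adds -R, so the server (NAS) sends to this PC.
    """
    cmd = ["iperf3", "-c", server, "-J", "-P", str(streams)]
    if reverse:
        cmd.append("-R")
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_iperf3_json(result.stdout)


# Example usage (hypothetical hostname; needs iperf3 on PATH and `iperf3 -s` on the NAS):
# for rev in (False, True):
#     sent, recv = run_iperf3("truenas.local", reverse=rev)
#     print("NAS -> PC" if rev else "PC -> NAS", f"{recv:.2f} Gb/s received")
```

If the reverse direction comes in well under 10Gb on one transceiver but not the other, that would line up with the slow SMB reads.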

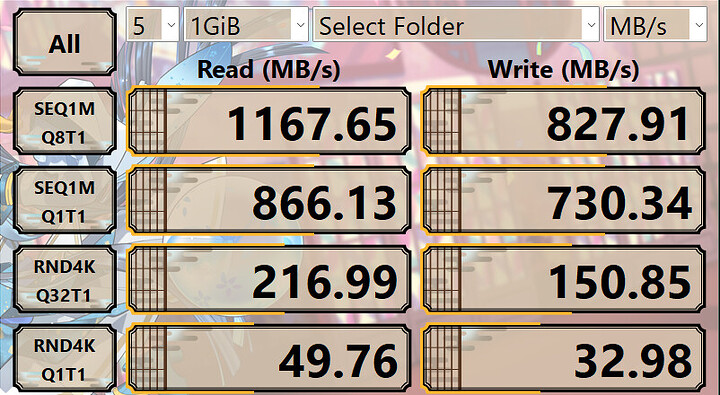

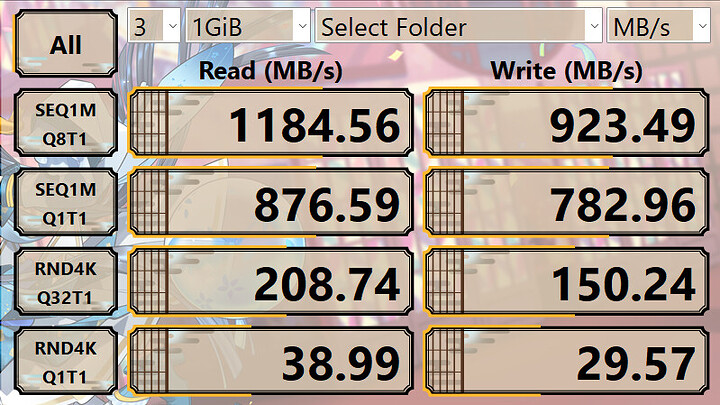

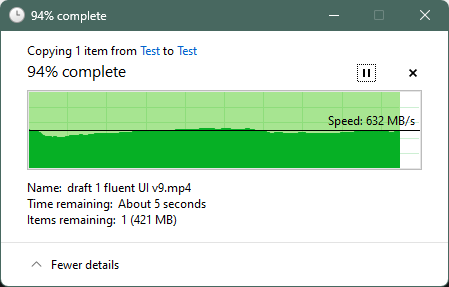

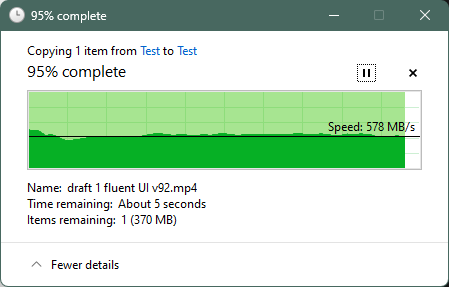

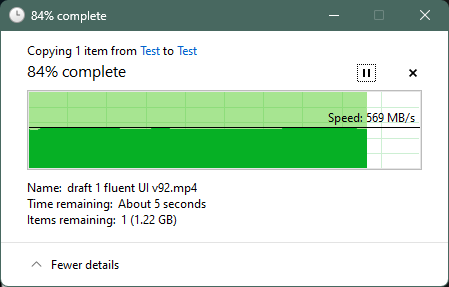

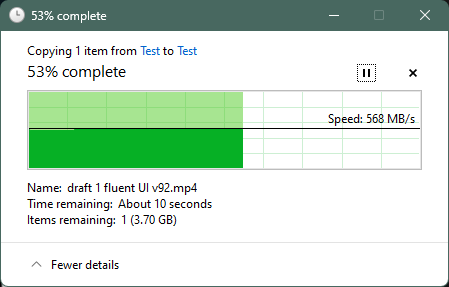

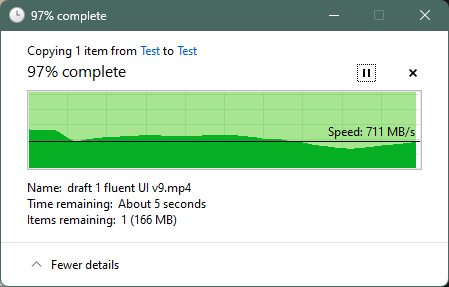

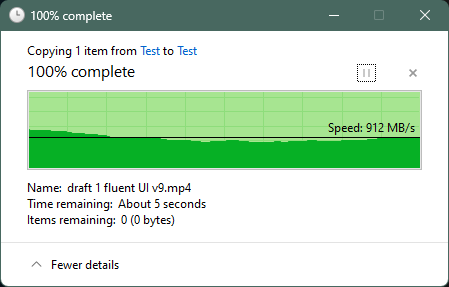

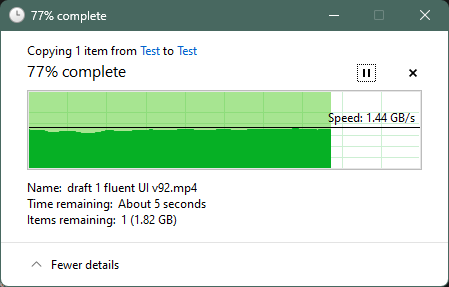

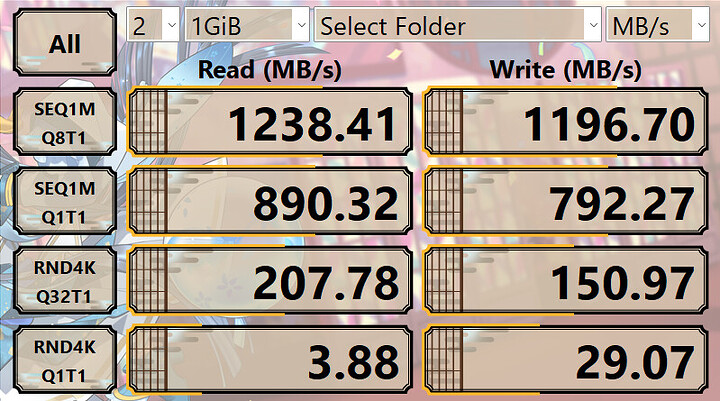

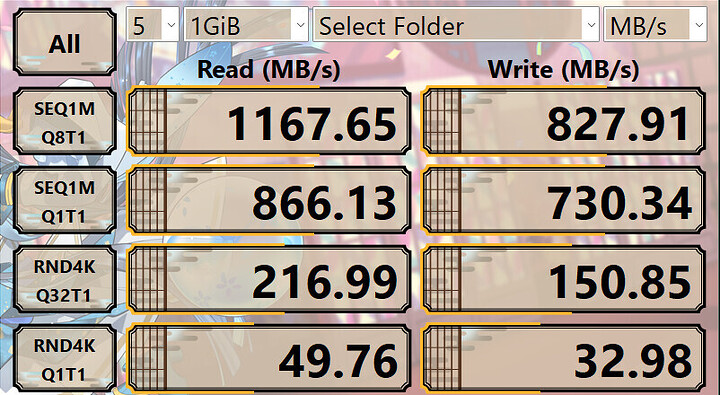

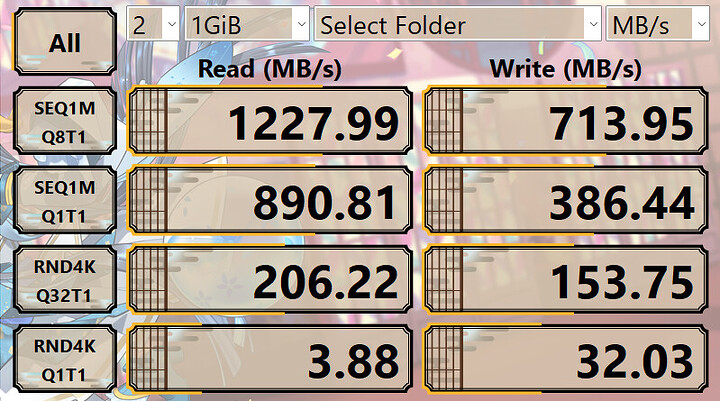

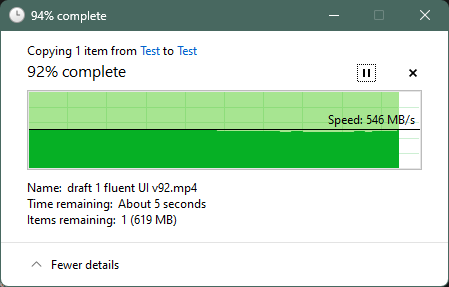

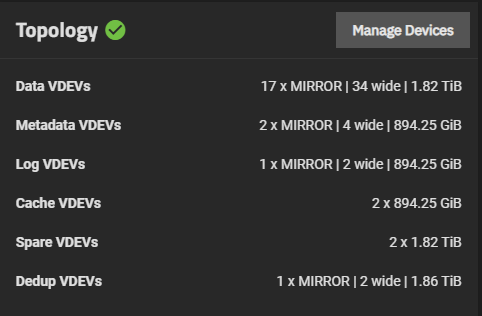

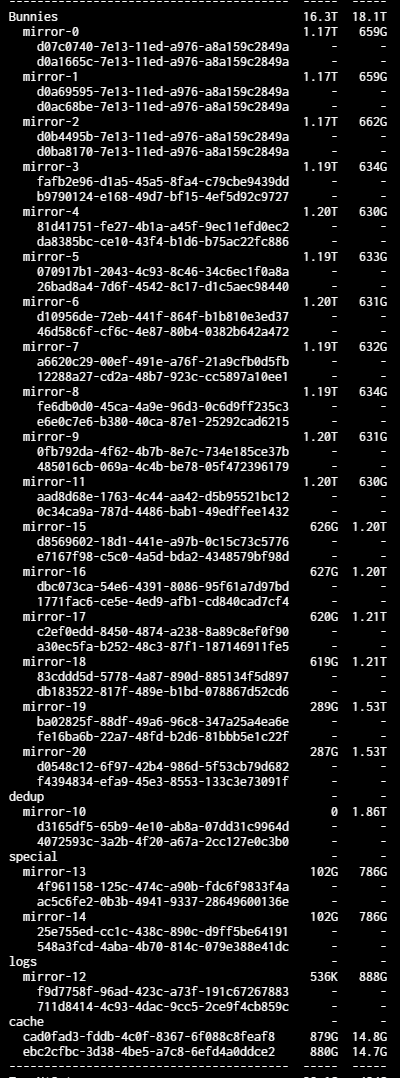

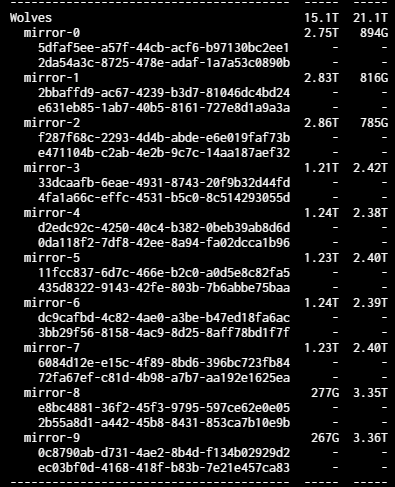

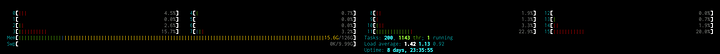

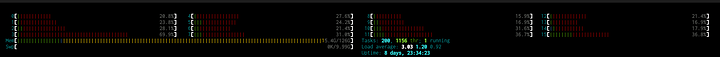

When using SMB, though, I noticed some awfully strange results. My NAS runs TrueNAS SCALE and is all-flash, with 60 SSDs spread across two zpools. I should theoretically be able to get some pretty high speeds, but that hasn’t been my experience device-to-device: transfers max out around 700-800MB/s.

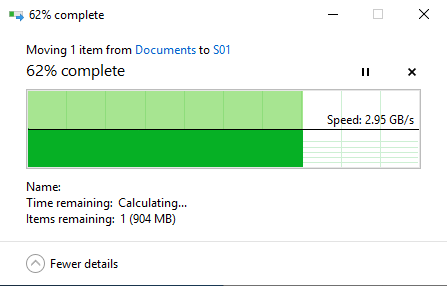

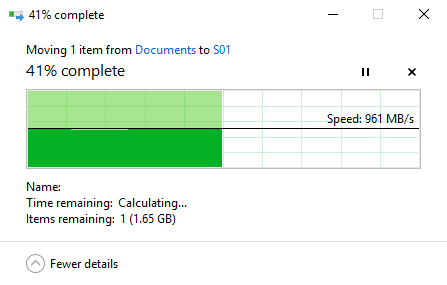

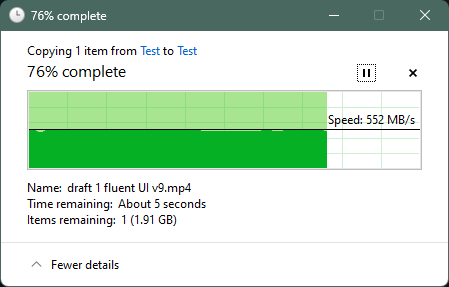

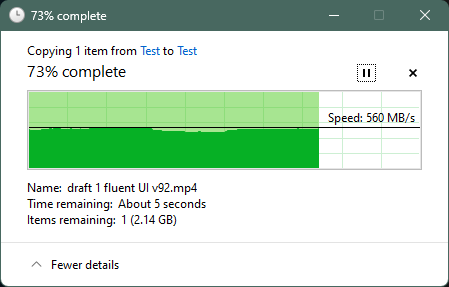

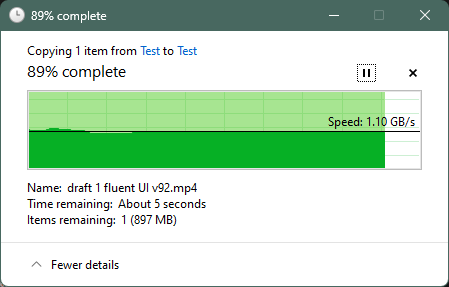

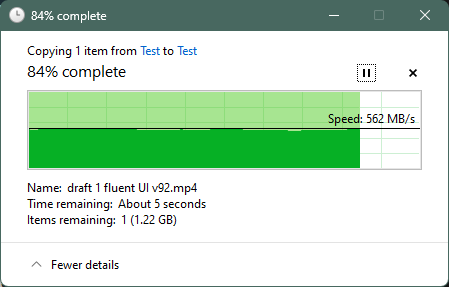

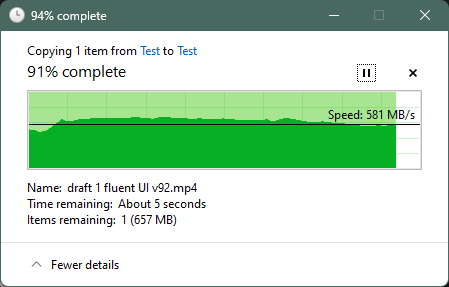

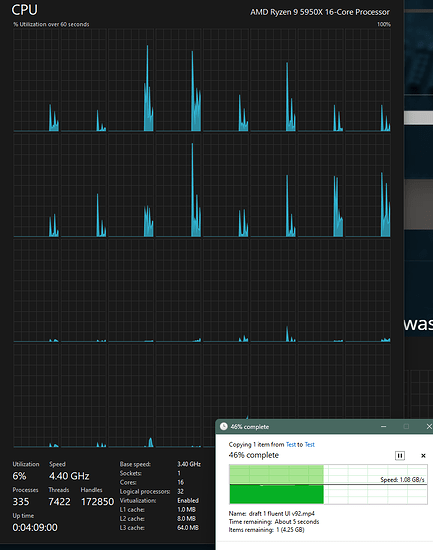

When transferring over 10Gb copper Ethernet, my speeds are ~600MB/s read and ~1100MB/s write. Why is the write speed so much faster? Beats me. Writes are typically the slower direction.

Using the 25Gb SFP28 module over the fiber I ran last night, I’m getting ~600MB/s read and ~600MB/s write.
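
To compare these numbers against link speed, here’s a small conversion helper. One assumption to check: Windows Explorer generally shows copy speeds in binary units (1 MB = 1,048,576 bytes), while link speeds are decimal gigabits, so the helper supports both.

```python
def mb_to_gbit(mb_per_s: float, binary: bool = False) -> float:
    """Convert a MB/s transfer rate to Gb/s.

    binary=True treats "MB" as MiB (1,048,576 bytes), as many Windows dialogs
    display it; otherwise 1 MB = 1,000,000 bytes.
    """
    bytes_per_mb = 1_048_576 if binary else 1_000_000
    return mb_per_s * bytes_per_mb * 8 / 1e9


if __name__ == "__main__":
    link_gbit = 10.0  # per-connection ceiling through the NAS's LACP bond
    for rate in (600, 800, 1100):  # MB/s figures quoted in this post
        g = mb_to_gbit(rate, binary=True)
        print(f"{rate} MB/s ~= {g:.2f} Gb/s ({g / link_gbit:.0%} of {link_gbit:.0f}Gb)")
```

By that math, 700-800MB/s is roughly 55-70% of a 10Gb link, while ~1100MB/s writes over copper are close to what 10Gb can carry once protocol overhead is counted.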

This makes me think something’s wrong:

- Is it the UniFi switch? If so, then why is iperf3 fine?

- Is it because Jumbo Frames aren’t enabled? If so, then why does 10Gb copper work fine? Or maybe it isn’t fine, given how slow the read speeds are?

- Is it some SMB configuration? I don’t have the multi-whatever feature (SMB Multichannel?) enabled that’s supposed to speed up transfers, but still, why is 10Gb faster?

- Is it some configuration in Windows? There might be some SMB config I’m missing. I remember LinusTechTips talking about it in their 100Gb site-to-site fiber video.

Possible Debugging

- Since I have two all-SSD zpools on the NAS, I can test transfer speeds from one to the other; someone just needs to give me a command to run.

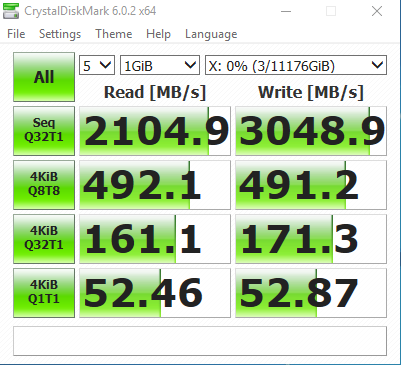

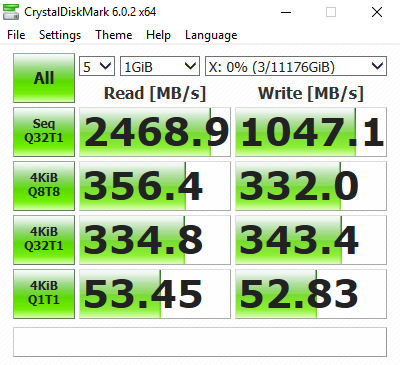

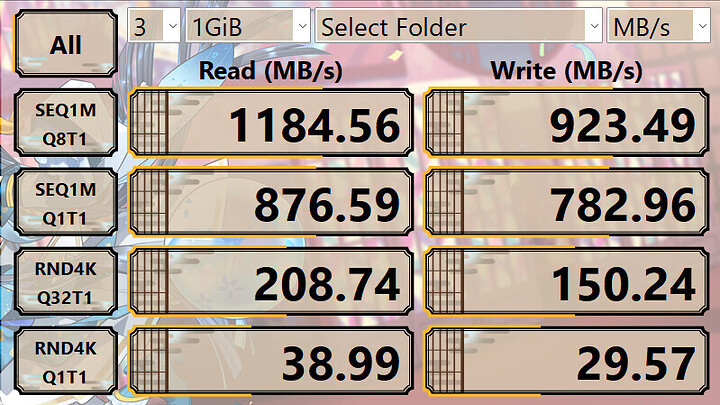

- Can I use CrystalDiskMark to test network drives? Would that help narrow down SMB issues?

- Is it worth it to enable Jumbo Frames?

- Is my fiber dirty? I don’t have tools to check other than what UniFi or Mellanox drivers show.
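
For the pool-to-pool test, here’s a rough Python sketch (Python 3 should be available on TrueNAS SCALE’s Debian base). It writes random data, because ZFS compression would make a zero-filled file look unrealistically fast, and it fsyncs the destination so the timing includes the actual write. The pool paths are hypothetical. Pointed at a file on a mapped network drive from Windows, the same function would also give a rough SMB number outside of Explorer.

```python
import os
import shutil
import time

CHUNK = 1_000_000  # 1 MB (decimal), so results line up with MB/s


def make_test_file(path: str, size_mb: int) -> None:
    """Write random (incompressible) data so ZFS compression can't inflate results."""
    with open(path, "wb") as f:
        for _ in range(size_mb):
            f.write(os.urandom(CHUNK))
        f.flush()
        os.fsync(f.fileno())


def timed_copy(src: str, dst: str) -> float:
    """Copy src to dst, flush dst to stable storage, and return average MB/s."""
    size = os.path.getsize(src)
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    # Flush so the timing includes the write to the destination pool,
    # not just data still sitting in memory.
    with open(dst, "rb+") as f:
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return size / CHUNK / elapsed


# Example (hypothetical pool paths). A freshly written file may still be cached
# in the ZFS ARC, so size it larger than the NAS's RAM for a fair read number:
# make_test_file("/mnt/pool1/speedtest.bin", 20_000)  # ~20 GB; adjust to exceed RAM
# print(f"{timed_copy('/mnt/pool1/speedtest.bin', '/mnt/pool2/speedtest.bin'):.0f} MB/s")
```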