I think the OP has to decide whether they want to YOLO it and just go with a flat setup (no local servers or caches, minimum-effort firewall/security config) and hope for the best, i.e. hope the attendees behave, or… use it as a learning experience…

The switches I linked (Brocade ICX 6610-48) are a godsend for the latter, because at 200 USD a pop you get:

- 48x 1Gbit ports for clients

- 8x 10Gbit SFP+ ports for servers

- 2x 40Gbit QSFP ports that can be used with breakout cables for another 8x 10Gbit ports

- 2x 40Gbit stacking ports

The switches are Layer 3 capable and, unlike the MikroTiks, they can route at line rate on every port!

Combine that with the dual 40Gbit stacking and you have an unbeatable price-to-performance setup.

The catch is that these switches draw 120 W at idle! They also generate a lot of heat and noise, so you don't really want them in your closet, room or house unless you have a dedicated rack in a basement and can afford the electricity bill.
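To put the 120 W idle figure in perspective, here is a back-of-the-envelope calculation: one switch idling 24/7 uses 120 W × 8,760 h ≈ 1,051 kWh a year. The price per kWh below is a hypothetical placeholder, so plug in your own tariff; real draw under load will be higher than the idle figure.

```python
IDLE_WATTS = 120           # idle draw per switch, as quoted above
HOURS_PER_YEAR = 24 * 365  # running 24/7


def annual_kwh(watts: float, switches: int = 1) -> float:
    """Energy in kWh used in one year by `switches` units drawing `watts` each."""
    return watts * switches * HOURS_PER_YEAR / 1000


def annual_cost(watts: float, price_per_kwh: float, switches: int = 1) -> float:
    """Yearly electricity cost, in whatever currency `price_per_kwh` uses."""
    return annual_kwh(watts, switches) * price_per_kwh


if __name__ == "__main__":
    price = 0.30  # hypothetical price per kWh; replace with your own tariff
    for n in (1, 3):
        kwh = annual_kwh(IDLE_WATTS, n)
        cost = annual_cost(IDLE_WATTS, price, n)
        print(f"{n} switch(es): {kwh:.0f} kWh/year, about {cost:.0f} per year")
```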

For a LAN party you would need:

- A lot of ports for clients

- 10Gbit ports for local cache and game servers

- 10Gbit ports to the firewall

- some sort of separation between gamers and game admins

- some sort of internal security filtering between the gamers and the servers, and between the gamers themselves

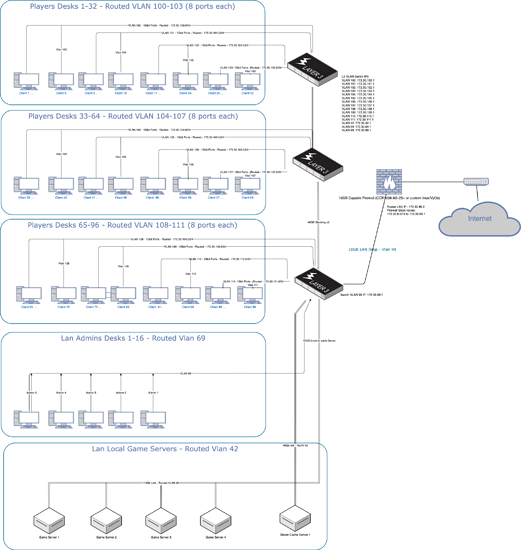

You could leverage the Layer3 capabilities of the switches (that at this point become more like routers) and create a setup like this:

Here you split the gamers into separate VLANs (this example has 8 gamers per VLAN; ideally one VLAN per desk), plus a dedicated VLAN for admins and a dedicated VLAN for servers.
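As a rough sketch of how such a layout could be numbered, the snippet below assigns one VLAN and one subnet per group of 8 gamers. The VLAN IDs (starting at 101), the 10.10.0.0/16 supernet and the /24 per VLAN are all hypothetical choices; the admin and server VLANs would simply get their own fixed IDs and subnets outside this range.

```python
import math
from ipaddress import ip_network


def plan_gamer_vlans(num_gamers: int, per_vlan: int = 8, first_vlan_id: int = 101,
                     supernet: str = "10.10.0.0/16", prefix: int = 24) -> list[dict]:
    """Assign one VLAN and one subnet per group of `per_vlan` gamers.

    VLAN IDs, supernet and prefix length are hypothetical defaults. The first
    usable address of each subnet is reserved for the switch's routed
    (Layer 3) interface on that VLAN, i.e. the gamers' default gateway.
    """
    vlan_count = math.ceil(num_gamers / per_vlan)
    net = ip_network(supernet)
    available = 2 ** (prefix - net.prefixlen)
    if vlan_count > available:
        raise ValueError(f"{supernet} only fits {available} /{prefix} subnets")

    plan = []
    for i, subnet in zip(range(vlan_count), net.subnets(new_prefix=prefix)):
        plan.append({
            "vlan": first_vlan_id + i,
            "subnet": str(subnet),
            "gateway": str(subnet.network_address + 1),
        })
    return plan


if __name__ == "__main__":
    for entry in plan_gamer_vlans(20):
        print(entry)
```

Giving each small group its own routed subnet is what lets the switch apply filters between groups, since traffic between VLANs has to pass through its Layer 3 interfaces.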

With this layout you could set up access lists on the Brocades to, say, allow only ports 80 and 443 (plus whatever ports the games use) into the game-server VLAN, block access to the admin VLAN, and restrict internet access (ideally to DNS and HTTPS only), all without burdening the firewall, which will already struggle to handle NAT on a 10Gbit link.
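The snippet below is not Brocade config syntax. It is a small Python model of the usual first-match, implicit-deny semantics of switch ACLs, showing why rule order matters for the policy described above. The subnets and the UDP game-port range are hypothetical, and the list is assumed to be applied inbound on a gamer VLAN's routed interface, so only the destination is matched.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network, ip_address, ip_network
from typing import Optional

# Hypothetical addressing plan
SERVERS = ip_network("10.20.0.0/24")
ADMINS = ip_network("10.30.0.0/24")
ANY = ip_network("0.0.0.0/0")


@dataclass(frozen=True)
class Rule:
    action: str              # "permit" or "deny"
    proto: Optional[str]     # "tcp", "udp", or None for any protocol
    dst: IPv4Network         # destination network
    ports: Optional[range]   # destination ports, or None for any port

    def matches(self, proto: str, dst: IPv4Address, port: int) -> bool:
        if self.proto is not None and self.proto != proto:
            return False
        if dst not in self.dst:
            return False
        return self.ports is None or port in self.ports


# Order matters: the admin deny must come before the broad HTTPS permit,
# otherwise gamers could reach admin hosts on port 443.
GAMER_INBOUND_ACL = [
    Rule("deny", None, ADMINS, None),
    Rule("permit", "tcp", SERVERS, range(80, 81)),
    Rule("permit", "tcp", SERVERS, range(443, 444)),
    Rule("permit", "udp", SERVERS, range(27015, 27031)),  # hypothetical game ports
    Rule("permit", "udp", ANY, range(53, 54)),            # DNS
    Rule("permit", "tcp", ANY, range(53, 54)),            # DNS over TCP
    Rule("permit", "tcp", ANY, range(443, 444)),          # HTTPS to the internet
]


def evaluate(acl: list[Rule], proto: str, dst: str, port: int) -> str:
    """Return the action of the first matching rule; deny if nothing matches."""
    addr = ip_address(dst)
    for rule in acl:
        if rule.matches(proto, addr, port):
            return rule.action
    return "deny"  # implicit deny at the end of the list


if __name__ == "__main__":
    print(evaluate(GAMER_INBOUND_ACL, "tcp", "10.30.0.5", 443))  # admin VLAN
    print(evaluate(GAMER_INBOUND_ACL, "tcp", "1.1.1.1", 443))    # internet HTTPS
```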

You would also have 24x 10Gbit ports for servers and 6x 40Gbit ports for cache servers, plus the capacity to push data between the servers and the clients at line rate.
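Working backwards from the per-switch port list above, the 24x 10Gbit and 6x 40Gbit figures correspond to a stack of three switches. The sketch below does that arithmetic, assuming the two dedicated stacking ports per switch are used for the stack itself and so are not counted. Breaking out the QSFP ports trades the 40Gbit ports for extra 10Gbit ones.

```python
from dataclasses import dataclass

# Per-switch port counts from the list above
GIG_PORTS = 48       # 1Gbit client ports
SFP_PLUS_PORTS = 8   # 10Gbit SFP+ ports
QSFP_PORTS = 2       # non-stacking 40Gbit QSFP ports
BREAKOUT_LANES = 4   # one 40Gbit QSFP breaks out into 4x 10Gbit


@dataclass
class PortBudget:
    gbit: int
    ten_gbit: int
    forty_gbit: int


def port_budget(switches: int, breakout: bool = False) -> PortBudget:
    """Total usable ports for a stack, optionally breaking out the QSFP ports."""
    if breakout:
        ten = (SFP_PLUS_PORTS + QSFP_PORTS * BREAKOUT_LANES) * switches
        return PortBudget(GIG_PORTS * switches, ten, 0)
    return PortBudget(GIG_PORTS * switches,
                      SFP_PLUS_PORTS * switches,
                      QSFP_PORTS * switches)


if __name__ == "__main__":
    print(port_budget(3))
    print(port_budget(3, breakout=True))
```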

The learning curve to set this up and secure it would be steep, but once you get it, this scales easily to hundreds, possibly thousands of machines.

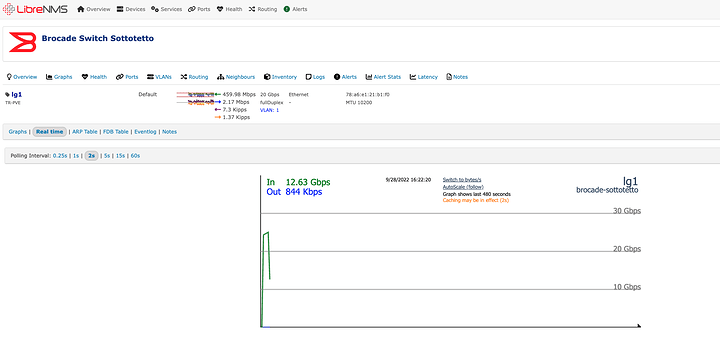

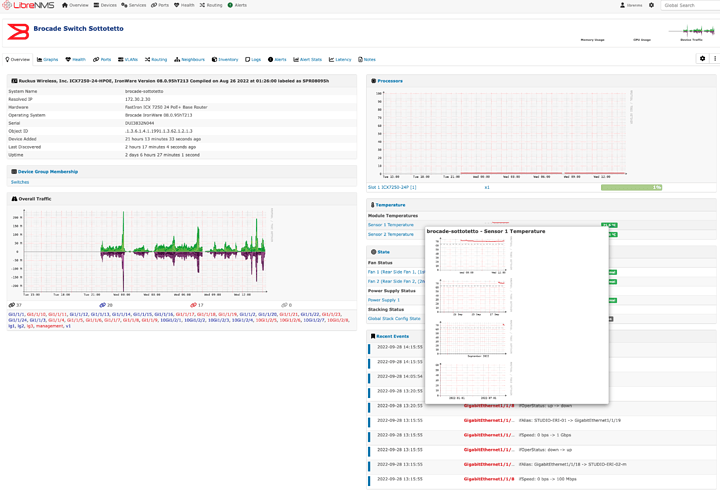

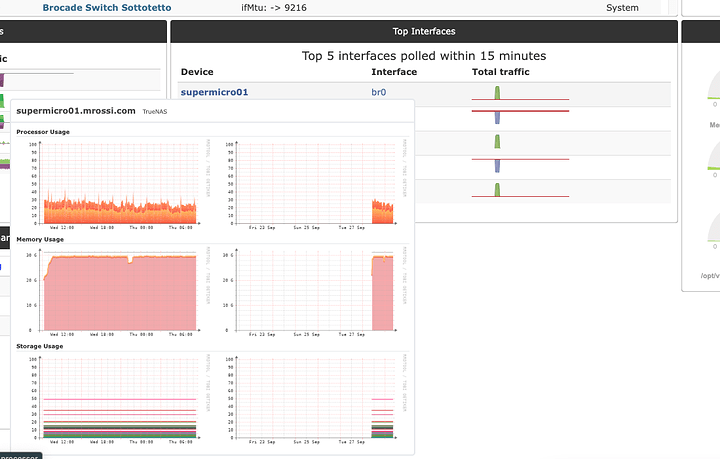

If you want the cherry on top, you could also set up monitoring on the switches so that the admins can pinpoint data hoarders.
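One way to do that, assuming you already poll per-port 64-bit octet counters from the switches (for example the standard IF-MIB ifHCOutOctets over SNMP), is to diff two snapshots and rank ports by rate. On a client-facing port, the octets the switch sends out are what that client downloads. The port names and counter values below are hypothetical; the polling itself is left out.

```python
COUNTER_MODULUS = 2 ** 64  # ifHCInOctets / ifHCOutOctets are 64-bit counters


def octet_delta(prev: int, curr: int) -> int:
    """Octets counted between two samples, tolerating one counter wrap.

    Note: a counter reset (e.g. a switch reboot) also looks like a wrap.
    """
    return (curr - prev) % COUNTER_MODULUS


def top_talkers(prev: dict[str, int], curr: dict[str, int],
                interval_s: float, n: int = 3) -> list[tuple[str, float]]:
    """Return the `n` busiest ports as (port, bits per second), busiest first."""
    rates = {}
    for port, octets in curr.items():
        if port in prev:  # skip ports with no earlier sample
            rates[port] = octet_delta(prev[port], octets) * 8 / interval_s
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__":
    # Hypothetical counter snapshots taken 10 seconds apart
    before = {"1/1/1": 0, "1/1/2": 5_000, "1/1/3": COUNTER_MODULUS - 100}
    after = {"1/1/1": 125_000_000, "1/1/2": 5_000, "1/1/3": 900}
    for port, bps in top_talkers(before, after, interval_s=10):
        print(f"{port}: {bps / 1e6:.3f} Mbit/s")
```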

Heck, you could even turn it into a source of income if you got really good at it.