I prefer not having to deal with HW RAID cards. If the card dies, you have to find the same or a similar model. Set the card to HBA mode and use ZFS. Just my $0.02.

Looks like IBM ST9900705SS. Pretty generic 900GB SAS drives. From what I can gather, the sector size should be 512 bytes.

My main gripe with the PERC 6/i is that if I want to access any of the drives individually, I have to create a RAID-0 array with just that disk. It’s dumb.

Oh, and it does not like these drives, that’s another good reason.

I agree, I would rather not deal with it. I plan on having the OS drives all be a RAID-1 pair, and I don’t mind letting hardware do that, but for everything else I would prefer direct access. To eBay I shall go!

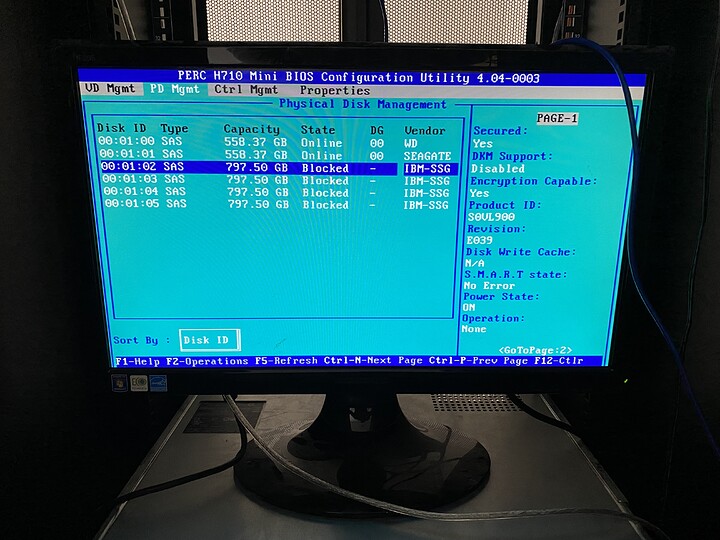

On another note, I spent some time this morning trying to get the drives imported into the R810 (it did not recognize the drives at all) and the R720 (using an H710, I believe). The R720 just says the drives are “blocked.”

According to a Spiceworks thread I should be able to fix this by updating the firmware. Will have to fuss with iDRAC and see what I can accomplish there.

I heard on the “Ask Noah” show about a guy who ended up running his own fiber for $8k and doing all the red tape with the city, etc. I love/hate hearing stories about ISPs…

I spent a good chunk of time fussing around with things. I mostly focused on the R720 since it’s the newest hardware I have.

First thing I did was update the device firmware. That didn’t seem to be the problem; no change. Next I flashed it to IT mode. In IT mode it recognized the drives the server came with but not the new ones, which didn’t register at all. I ended up flashing back to “regular” mode.

At this point I broke down and called the hotline for the vendor I purchased the server from. They explained that Dell servers are super picky about drives and the IBM ones wouldn’t work: the card would recognize them but simply refuse to use them. Apparently Dell has specific vendors that it “certifies” to work with its products, and drives from all other vendors, even if entirely compatible, simply won’t work. It is possible to re-flash firmware onto the drives to make them report a supported brand, but that is way beyond what I am comfortable with.

In other news, I got a flier from the ISP that initially screwed me over saying that fiber was now available at my address. I called their sales line to confirm, and they reported that my block now shows that it’s ready. I breathed a big sigh of relief and set up an install time.

The install was scheduled for this morning. No call, no show. I called them back; turns out there still is no fiber in my area. The rep gave the same line they did a few months back: since there were only three businesses on the block, they really didn’t think it was worth the effort to build out.

At this point I think they are just playing with me.

Current plan is to wait a payday or two, then buy supported drives. It will definitely cost more, but oh well. I will do some research and see if maybe HP servers support the IBM drives; if so, I’ll pick up something that can fit all the drives, like a DL380e, and make it a Ceph node.

If it turns out the HP servers also don’t support the IBM drives, then I suppose I have 24 hard drives I can use as paperweights or coasters.

Is the HBA based on an LSI controller? Can you not just flash it with generic firmware that won’t be as picky as Dell’s?

I could, yes. I know very little about device firmware though. I am not terribly comfortable digging around on the internet for cracked firmware to use on production systems either.

I could likely get a generic SAS controller off Newegg or wherever to use instead in a PCIe slot. I will think on it for sure.

Unsolicited food for thought

This might accidentally provide a starting point to think about redundancy?

If the current controller goes, regardless of the drives, you’d be a bit stuck.

Using SAS, you might be able to do multipath? Use two controllers?

Drives die all the time. Controllers less so.

Power supplies maybe a little more often.

RAM less so, and motherboards not so much.

But different parts fail at different rates, so it might be worth planning for that as well?

Obviously there are practical limits, else you would never start until you could sign a 5-year deal for 2-hour SLA on-site support for some Dell cabinets. But on a more practical level, you have a bunch of drives, with spares planned in already.

As you have a bit of time to decide which way to go, might be worth considering.

Also, backup strategy?

My current strategy was to consider everything disposable. I have a few components I bought new (UPS units for example) but am mostly using hardware ranging from “made the cables myself” to “certified refurbished” to “literally pulled it out of a dumpster” so I want to plan as if any part could fail at any time. Current plan is to have enough redundancy to lose any one host, or many drives spread through the cluster. Basically resiliency via fault tolerance instead of true redundancy. I am on a budget after all, and I don’t fancy eating ramen forever!
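To put rough numbers on “lose any one host,” here is a back-of-the-envelope sizing sketch. It assumes, hypothetically, that all 24 of the 900GB drives end up spread across the three servers, and it compares a Ceph replicated pool keeping three copies against an erasure-coded pool with k=2 data chunks and m=1 coding chunk, both with the failure domain set to host. It ignores filesystem overhead and Ceph’s full/near-full ratios, so real usable space will be lower.

```python
"""Back-of-the-envelope Ceph pool sizing (hypothetical numbers, no overhead)."""

DRIVES = 24      # assumption: all of the IBM 900 GB drives end up in the cluster
DRIVE_GB = 900
HOSTS = 3        # assumption: the R610, R720 and R810 all run OSDs

RAW_GB = DRIVES * DRIVE_GB


def replicated_usable_gb(raw_gb: float, size: int) -> float:
    """A replicated pool keeps `size` full copies of every object."""
    return raw_gb / size


def ec_usable_gb(raw_gb: float, k: int, m: int) -> float:
    """An erasure-coded pool stores k data chunks plus m coding chunks per object."""
    return raw_gb * k / (k + m)


def fits_host_failure_domain(hosts: int, pieces_per_object: int) -> bool:
    """With failure domain = host, every copy or chunk must land on a different host."""
    return hosts >= pieces_per_object


if __name__ == "__main__":
    print(f"raw: {RAW_GB} GB")
    print(f"replicated, size=3: {replicated_usable_gb(RAW_GB, 3):.0f} GB usable, "
          f"fits {HOSTS} hosts: {fits_host_failure_domain(HOSTS, 3)}")
    print(f"EC k=2 m=1: {ec_usable_gb(RAW_GB, 2, 1):.0f} GB usable, "
          f"fits {HOSTS} hosts: {fits_host_failure_domain(HOSTS, 2 + 1)}")
```

Both layouts spread each object over all three hosts, so neither has a spare host to rebuild onto while one is down. Also, as I understand Ceph’s defaults, an erasure-coded pool’s min_size is k+1, which would pause I/O on a 2+1 pool with a host missing; worth checking the pool docs before picking one.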

I had not considered much in terms of controller redundancy and using SAS multipath. I don’t know how well this will translate to Ceph, but it’s worth looking into. I will keep an eye on Craigslist for a disk shelf and some external controllers.

First tier would be to have lots of fault tolerance within the Ceph (or maybe Gluster, it depends) pool so I can safely store backups locally.

Second tier would be sending VM snapshots to a cloud service. I have not settled on any in particular yet, but most services support the S3 API, so they should be largely interchangeable.

Since everything will be running on shared storage stuff should be fairly tolerant to the loss of a host, but I want to cover my rear in case the building burns down.

No point looking too deep into stuff you are unfamiliar with; you will have to administer it, so you have to know how to use it.

And I guess just get a couple of machines set up for now, stable and running, and maybe add a couple of cheap Dell servers later. You might not even need disk shelves; it might be better to go for fewer, larger drives.

Oh, for future reference, and if anyone stumbles upon this in the future, this is the guide I used for flashing my controller.

Linux mdadm (software RAID) has been able to read and use LSI MegaRAID signatures for a number of years. Since LSI (now Avago) has almost completely taken over the hardware RAID market, you’re pretty well covered.

That’s completely untrue. It’s been a bunch of years since Dell stopped blocking “non-certified” drives. You’ll only get a warning in the DRAC and OpenManage status, and they work fine otherwise. I manage several dozen Dell servers and never buy Dell-branded drives. That said… I suggested an H700 for a reason… They handle a larger variety of drives better than the default PERC6/i.

You’ll need a non-RAID SAS controller to really test the drives, and use sg_format if needed.

I had a problem with IBM branded SAS SSDs (not HDDs) a while back, myself. They worked fine at first, but when you tried to write to a few sectors about 95% of the way through the drive, it would return an IO error. Some SAN thing where IBM stores specially protected something or other. I tried sg_format with no success on those. Did not try to flash another firmware, just returned them to the seller.

If I’m going to use software RAID, why use mdadm instead of ZFS? That’s not to say there’s no place for mdadm, just that in most server use cases, ZFS is a better bet IMO.

You’re not. It’s just a fallback option if your controller dies on you.

I appreciate the pointer, I’ll give it a shot. I got one off eBay, but it will be a while before it gets here with the holiday and all that. I had no luck with the H710, but I imagine a discrete card may do better than the “mini” card stuck to the motherboard. My only real concern is maybe needing to grab different cables: the 610 and 810 look to use the older-style connector, and the 720 cable is a bit short.

In the meantime, it looks like I might not be at a total loss for testing things out as a cluster. I realized that I had two “working” 600GB drives in RAID-1 in one server and a 64GB SSD in one of the others. I may not be great at math, but that’s three drives and I have three servers.

I’ll see about standing up a cluster and using Gluster as the test backend with one drive in each server since Gluster is file-based rather than device-based.
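One thing to keep in mind with mismatched drives: in a replicated Gluster volume every brick holds a full copy, so the smallest brick caps the usable space. The sketch below assumes a hypothetical layout of one 600GB drive in each of two servers and the 64GB SSD in the third (server names are placeholders) and ignores filesystem overhead.

```python
"""Rough usable-capacity check for a three-brick Gluster test volume."""

# Hypothetical layout: one of the 600 GB drives in each of two servers,
# the 64 GB SSD in the third. Server names are placeholders.
BRICKS_GB = {"server-a": 600, "server-b": 600, "server-c": 64}


def replica_usable_gb(brick_sizes):
    """Each brick in a replica set stores a full copy, so the smallest brick limits the set."""
    return min(brick_sizes)


def distributed_usable_gb(brick_sizes):
    """A plain distributed volume (no redundancy) can use roughly the sum of its bricks."""
    return sum(brick_sizes)


if __name__ == "__main__":
    sizes = list(BRICKS_GB.values())
    print(f"replica 3 volume: ~{replica_usable_gb(sizes)} GB usable")
    print(f"distributed volume: ~{distributed_usable_gb(sizes)} GB usable, no redundancy")
```

For a functional test of failover that limit hardly matters; it only becomes relevant if the test volume starts holding real data.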

A search turned up one hit, specifically saying this model (IBM 45W9609) is 520b sectored by default: https://picclick.com/Ibm-45W9609-900Gb-10K-Sas-Self-Encrypting-25-142704937308.html

NOTE: The H700 card won’t handle the 520b sectors any better than the PERC6/i.

You’ll need a non-RAID SAS card (or flash the H700 to IR firmware) to change that, like so: “Drives ‘Formatted with type 1 protection’ eventually lead to data loss” (TrueNAS Community).

If the drives are 520-byte formatted, just follow the guide and use the SCSI tools to rewrite them. It’s not hard, it just takes time.
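With 24 drives it may help to script the reformat. Below is a minimal sketch that only builds and, optionally, runs sg_format commands. It assumes sg3_utils is installed, that each drive shows up as a /dev/sgN generic device on a non-RAID HBA, and that `--format`, `--size=512` and `--fmtpinfo=0` (which, per my reading of the sg_format man page, formats without protection information) are the options you want. Device paths are placeholders. It defaults to a dry run that just prints the commands; a real FORMAT UNIT wipes the drive, so check the man page and the guide first.

```python
"""Build (and optionally run) sg_format commands to reformat SAS drives to 512-byte sectors."""
from __future__ import annotations

import shlex
import subprocess


def sg_format_cmd(sg_device: str, sector_size: int = 512, clear_protection: bool = True) -> list[str]:
    """Return the argv for a low-level format of one drive. This DESTROYS ALL DATA on it."""
    cmd = ["sg_format", "--format", f"--size={sector_size}"]
    if clear_protection:
        # Assumption: FMTPINFO=0 formats without protection information (no Type 1/2/3 PI).
        cmd.append("--fmtpinfo=0")
    cmd.append(sg_device)
    return cmd


def reformat_all(sg_devices: list[str], dry_run: bool = True) -> list[list[str]]:
    """Print (dry run) or run one sg_format per device, one after another."""
    commands = [sg_format_cmd(dev) for dev in sg_devices]
    for cmd in commands:
        if dry_run:
            print("would run:", shlex.join(cmd))
        else:
            # A full format can take a long time; check=True stops at the first failure.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Placeholder device names; confirm the real ones with `sg_scan` or `lsscsi -g` first.
    reformat_all(["/dev/sg2", "/dev/sg3"])
```

Running the drives one after another is the conservative choice; since each format runs on the drive itself, starting several in parallel should also work, at the cost of a messier script.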


Well done you, is all I can say

When I was looking at the drive info in iDRAC I think I remember it saying they were 512, but that might also be the controller not knowing what to do with it. It’s worth a shot at least!
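Since the iDRAC value may just be the controller guessing, one way to ask the drive directly (once it sits on a plain HBA) is `sg_readcap` from sg3_utils against the /dev/sgN device. The sketch below assumes its output contains a line of the form `Logical block length=520 bytes`, which is how sg_readcap usually reports it; treat the parsing as an assumption and eyeball the raw output first. A 520-byte sector is 512 bytes of data plus 8 extra bytes that some array vendors use for their own checksums.

```python
"""Ask a SAS drive for its logical block size via sg_readcap (sg3_utils)."""
from __future__ import annotations

import re
import subprocess

# Assumption: sg_readcap prints a line like "Logical block length=520 bytes".
BLOCK_LEN_RE = re.compile(r"Logical block length=(\d+) bytes")


def parse_block_length(readcap_output: str) -> int | None:
    """Extract the logical block length from sg_readcap output, or None if not found."""
    match = BLOCK_LEN_RE.search(readcap_output)
    return int(match.group(1)) if match else None


def read_block_length(sg_device: str) -> int | None:
    """Run sg_readcap on a generic SCSI device such as /dev/sg2 and parse the result."""
    result = subprocess.run(["sg_readcap", sg_device], capture_output=True, text=True, check=True)
    return parse_block_length(result.stdout)


def needs_512_reformat(block_length: int | None) -> bool:
    """520-byte sectors usually need reformatting to 512 before a regular OS will use them."""
    return block_length == 520


if __name__ == "__main__":
    import sys

    for dev in sys.argv[1:]:
        size = read_block_length(dev)
        print(f"{dev}: {size} bytes per block, reformat to 512: {needs_512_reformat(size)}")
```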

Would you have any recommendations on a budget SAS controller that would talk to these drives so I can reformat them? So far I have a variety of controllers, and although they all recognize the drives, none of them will expose them to the OS.