This seems… interesting. When you actually have things up and running, be sure to hit me up with links/pricing/quotes. I very much support independent and smaller hosting developments.

The higher voltage service will really help you when it comes to installing proper cooling. You can find rather powerful and inexpensive ductless mini-split heat-pumps that run on 208/240V, but not so much at 120V. Ground-source is interesting, but you’ll need a much bigger hole to handle the huge amount of continuous heat you’ll need to dump.

Cooling is the most difficult part of a data center. You will need the air conditioner running even when it’s below freezing outside. What happens when your condenser ices up overnight and your cooling shuts down while your servers keep dumping out lots of heat?

Every 5 or so heavily loaded servers (or perhaps 10 sitting idle) is like a 1500W electric space heater. Doesn’t take too many of those before your formerly chilly level turns into a sauna, and equipment drops like flies. At the very least, you need automatic environmental monitoring and alerting, so you get a late-night buzz when everything is overheating, water is leaking in, a circuit breaker has quit, or similar.
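To put the space-heater comparison into numbers: nearly every watt a server draws ends up as heat, 1 W is about 3.412 BTU/hr, and one “ton” of cooling is 12,000 BTU/hr. The sketch below converts a load into the cooling capacity an AC unit would need to match. The per-server wattages are only what the 1500 W / 5-server ratio above implies, not measurements.

```python
# Rough heat-load arithmetic: essentially all electrical power a server draws ends up as heat.
WATTS_TO_BTU_PER_HR = 3.412   # 1 W ≈ 3.412 BTU/hr
BTU_PER_HR_PER_TON = 12_000   # one "ton" of cooling capacity

def heat_load(total_watts: float) -> tuple[float, float]:
    """Return (BTU/hr, tons of cooling) needed to remove total_watts of heat."""
    btu_hr = total_watts * WATTS_TO_BTU_PER_HR
    return btu_hr, btu_hr / BTU_PER_HR_PER_TON

# Illustrative per-server draw implied by "5 loaded (or 10 idle) servers ≈ 1500 W".
LOADED_W = 1500 / 5   # 300 W each
IDLE_W = 1500 / 10    # 150 W each

if __name__ == "__main__":
    for label, watts in [("5 loaded", 5 * LOADED_W), ("10 idle", 10 * IDLE_W), ("20 loaded", 20 * LOADED_W)]:
        btu, tons = heat_load(watts)
        print(f"{label}: {watts:.0f} W -> {btu:.0f} BTU/hr, {tons:.2f} tons")
```

Mini-splits are commonly rated in BTU/hr, so that is the figure to compare against a unit’s rating.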

On another note, what are you planning to use? Something like Proxmox, oVirt, OpenStack or VMware? And for storage, Ceph?

I’m currently running OpenStack at home and would like to get into Ceph, which would complement it nicely.

I wish you the best of luck!

I plan on paying for a Proxmox subscription and using a Ceph backend for storage. I have been using Proxmox for years in my homelab and am more comfortable with it. I toyed with the idea of using VMware, but licensing is $$$.

If Ceph ends up not working out I’ll give Gluster a shot, and if all else fails I’ll likely stick with local ZFS. I am crossing my fingers for Ceph though; those HA features look like a ton of fun to play with.

Since you mentioned Ceph and Gluster…

Ceph is great, but it’s not really suitable for small deployments (e.g. <100 disks). It can work, but it’s just too complicated. Kubernetes/Rook make configuring Ceph easier… but then you have Kubernetes to deal with. (Basically you’d make one Ceph OSD per physical disk and host everything in Ceph, while keeping a teeny tiny boot drive in each server for Proxmox or Kubernetes.)

You might also want to try out MinIO once you have your Proxmox stuff set up. In my opinion it exists because Ceph is too complicated, so it might end up working for you.

That’s true, Gluster is great, especially when used with QEMU+KVM. It doesn’t really scale to a lot of files, but as a VM store for some qcow2 images it surely is great. I suppose oVirt has the best Gluster integration, but Proxmox does seem to integrate with it nicely as well! The good thing about oVirt is that it has a self-service portal, but the documentation might be a bit lacking for a newbie.

Edit: Just remembered the nice little write-up from servethehome about Gluster on Proxmox: Building a Proxmox VE Lab Part 2 Deploying | ServeTheHome

Until his density goes above a certain level, cooling may not be that critical. I ran a similar “datacenter” for a small hosting business for almost a decade with 3~4 low-end 2U servers in an office. Being near the equator meant my ambient temperature was always in the 25C to 30C range before anything even heated up the air. I never had a hardware failure while the servers were in use, and I only replaced them every 3~4 years.

There are also commercial experiments with room-temperature datacenters, if I’m not mistaken.

Quick networking update:

Since it’s going to be a bit before I can get a proper network hookup, I decided to call up Comcast and grab another block of IP addresses for my father’s business, with the goal of routing them upstairs to my setup.

Comcast said this would be no problem; they could get an additional block assigned. I told them I would like a /28, and they said it would take about 10 minutes, the modem would reboot, and we would have the additional block.

A few minutes later the modem reboots aaaaand no internet. I poke and prod at a few things, no internet at all. The modem works fine, says it has our old IP block configured fine, but 100% packet loss at the first hop. I call back and the new rep informs me that I cannot have two blocks of IP addresses under one account, that our old block had been replaced with a larger one instead.

Boy golly, would this have been nice to know beforehand, because the POS system is bound to a static IP address, and suddenly the static IP that the rest of the shop had services running on went poof and disappeared. They said there was no way to get the old address back since addresses are randomly assigned and can’t be deliberately chosen, so I spent most of the afternoon on the phone with the POS vendor and digging around in our router config trying to reconfigure the WAN interface.

Note to self: stay far away from Ubiquiti devices. They say the software is ready for production, but it’s beta at best. The only way for me to change the public IP address of the network was to:

- Restore from a configuration backup, because simply changing the public IP soft-locks it

- Add a new IP range to the WAN port (0.0.0.0/0, lol)

- Toggle to the “classic settings” menu and switch every internal network to the false IP

- Switch back to the new settings menu and change the “real” IP configuration to the newly assigned block

- Switch back to the classic settings menu and re-assign all the networks to use the new IP

- Reboot

Unfortunately, it looks like there is no way for the UDM Pro to assign the external IPs to internal hosts, so this whole thing may have been a non-starter. I did my due diligence and made sure the device supported multiple WAN IPs (sure enough, that is on the front page of the marketing literature), but there is no way to disable NAT, so you are stuck using exactly one address no matter how many you have added to the WAN port.

I’ll play with it more tomorrow. Maybe I’ll get lucky, maybe I’ll just have to deal with NAT.

I have been learning how frustrating Ubiquiti stuff is. It’s been a month-long process of troubleshooting and fucking around to get it to halfway do what I want.

I would not recommend it for production or even small businesses tbh.

Post-weekend update.

Networking

I spent most of Saturday fussing with the internet-facing network. The Ubiquiti gear just can’t handle this level of routing, so I put my MikroTik router internet-facing with the Ubiquiti router behind it. After many frustrating hours of tinkering, it looked like Proxy-ARP was the only way to really do what I wanted, given how Comcast assigns the IP blocks.

Unfortunately, out of the /28 we are assigned, the first /30 is taken by Comcast routing to us, and the last /30 overlaps the /28 broadcast address. That leaves the two middle /30s to route, and since each /30 loses its own network and broadcast addresses, I was down to just 4 usable IPs out of the 16 in the block.
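The address math above can be checked with Python’s standard `ipaddress` module. This sketch uses the documentation range `203.0.113.0/28` as a hypothetical stand-in for the real Comcast block, splits it into /30s, drops the first and last as described, and lists the host addresses left once each /30 gives up its network and broadcast address.

```python
import ipaddress

# Hypothetical block: 203.0.113.0/28 is a documentation range standing in for the real /28.
BLOCK = ipaddress.ip_network("203.0.113.0/28")

def routable_slash30s(block):
    """Split the block into /30s and drop the first and last, per the layout described above."""
    subnets = list(block.subnets(new_prefix=30))
    return subnets[1:-1]

def usable_hosts(subnets):
    # For a /30, hosts() excludes the network and broadcast addresses, leaving 2 per subnet.
    return [h for net in subnets for h in net.hosts()]

if __name__ == "__main__":
    middle = routable_slash30s(BLOCK)
    hosts = usable_hosts(middle)
    print("routable /30s:", [str(n) for n in middle])
    print(f"{len(hosts)} usable of {BLOCK.num_addresses} addresses:", [str(h) for h in hosts])
```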

I knew what I was supposed to do, but I couldn’t for the life of me figure out how to get Proxy-ARP working on the router. At this point I gave up, put the Ubiquiti router back internet-facing, and put the MikroTik router downstream of it on a private /30 link network.

Going forward, I don’t know that I’ll spend too much effort here. I am quite discouraged and disappointed by how Comcast wants to handle their routing, and their tech support is absolutely worthless.

Although I cannot disable NAT on the Ubiquiti device, I can port-forward from the additional WAN IP addresses. I wonder if I could simply forward all of the ports on those addresses to my machines internally, which might effectively act the same. I’ll have to science it if I have the energy.

Infrastructure

On Sunday I spent most of the day in the server room, rather than tinkering with the internet in the downstairs office.

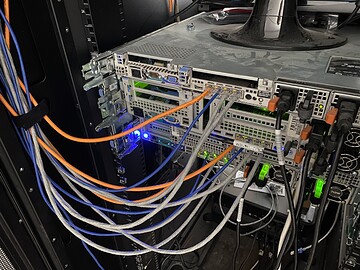

I got the rails for the last server (Dell R720) so I could finally rack it. It’s the bottom one here.

The cable management is looking a little less impressive and a bit messier, but at least I have the cables somewhat cut to length. I have seen some pretty ugly racks, and although service loops are really handy, I don’t think I can handle the mess.

You can also see in these pictures that I got my router plugged in. It almost looks like I might just know what I am doing. Almost.

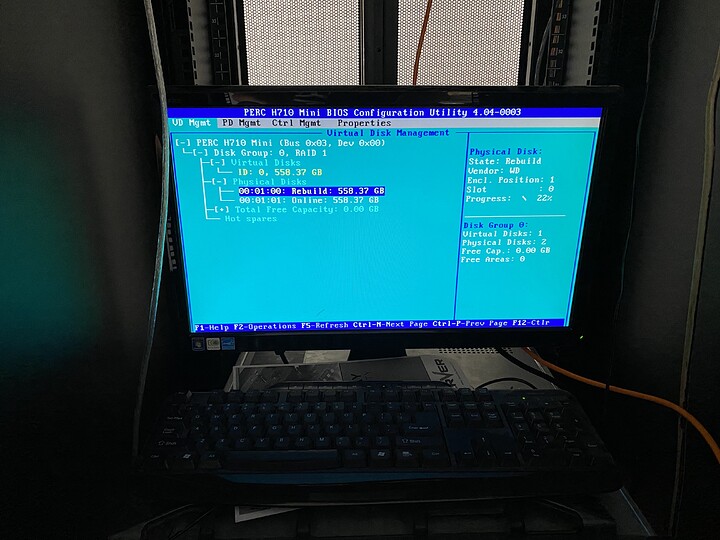

The only minor hitch is that one of the drives in the new server was reporting a “failed” state. I figured it had most likely just been bumped in transit, so I reseated it firmly and let it rebuild the array while I took lunch. I am a little sketched out, but I’ll keep a close eye on it.

In the afternoon I came back and wanted to start putting things into production. I finalized the network setup: the various VLANs for public/private/management traffic, etc. I still have to write firewall rules to prevent the public and private networks from talking to the management network, but I’ll get to that soon.
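As a sketch of the intended policy (not MikroTik or UniFi syntax), here is a first-match rule table modeled in Python. The VLAN names `public`, `private`, and `management` are hypothetical labels for the networks mentioned above, and the catch-all accept rule is an assumption, since the post only specifies what to block.

```python
from typing import NamedTuple

# Hypothetical VLAN names; the post does not list VLAN IDs or the router's rule syntax.
class Rule(NamedTuple):
    src: str     # source VLAN name, or "*" for any
    dst: str     # destination VLAN name, or "*" for any
    action: str  # "accept" or "drop"

# Order matters: the first matching rule wins.
RULES = [
    Rule("public", "management", "drop"),
    Rule("private", "management", "drop"),
    Rule("*", "*", "accept"),  # assumed catch-all; tighten as needed
]

def evaluate(src: str, dst: str, rules=RULES) -> str:
    """Return the action of the first rule matching (src, dst); drop if none match."""
    for r in rules:
        if r.src in ("*", src) and r.dst in ("*", dst):
            return r.action
    return "drop"  # default-deny when nothing matches

if __name__ == "__main__":
    for pair in [("public", "management"), ("private", "management"), ("management", "public")]:
        print(pair, "->", evaluate(*pair))
```

One caveat this toy model ignores: stateful firewalls usually need an accept rule for established/related connections placed ahead of the drops, otherwise replies to connections started from the management VLAN would be blocked too.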

I had Proxmox installed on the R610, so I used that to test the network configs. Everything seems to check out. The only question I have left is how the bonded network will perform for the storage backend, but that will be pretty difficult to test until I have the hard drives.

The last thing I wanted to do before calling it quits was to put an artificial load on everything to make sure it would all play nice together. I created a Debian VM on the R610, installed Debian bare metal on the R720, and loaded up the F@H client on both. I dedicated a combined 30 cores to the effort. Power draw looks to be about 350 W and the AC can keep up, so far at least. Everything is looking good.
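For budgeting, a steady 350 W load translates into monthly energy use and AC heat load roughly like this. The electricity rate is a hypothetical placeholder, and the month is assumed to be 30 days.

```python
# Monthly energy and heat for a steady load.
WATTS = 350                  # measured draw from the post
HOURS_PER_MONTH = 24 * 30    # assumes a 30-day month
PRICE_PER_KWH = 0.15         # hypothetical electricity rate in $/kWh; substitute your own
BTU_PER_HR_PER_WATT = 3.412  # 1 W of electrical load ≈ 3.412 BTU/hr of heat

kwh_per_month = WATTS * HOURS_PER_MONTH / 1000
cost_per_month = kwh_per_month * PRICE_PER_KWH
btu_per_hr = WATTS * BTU_PER_HR_PER_WATT  # heat the AC has to remove continuously

print(f"{kwh_per_month:.0f} kWh/month, about ${cost_per_month:.2f}/month, {btu_per_hr:.0f} BTU/hr of heat")
```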

Plus, it looks like I have completed two work units so far!

The next step is to grab a pile of hard drives. So, so many hard drives: 25, to be precise. Maybe a few SSDs for good measure. This will have to wait for payday, though.
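Since the plan upthread is a Ceph backend, it is worth sketching how much of those 25 drives would actually be usable. This assumes a replicated pool at Ceph’s default size of 3 and, as a rough rule, keeps usage under the default nearfull ratio of 0.85. The 4 TB drive size is purely hypothetical because the post doesn’t say which drives are being ordered.

```python
# Rough Ceph capacity planning for a replicated pool.
DRIVES = 25      # drive count from the post
DRIVE_TB = 4.0   # hypothetical per-drive size in TB (not stated in the post)
REPLICAS = 3     # Ceph's default replicated pool size
NEARFULL = 0.85  # Ceph's default nearfull warning ratio

def raw_tb(drives: int, drive_tb: float) -> float:
    return drives * drive_tb

def usable_tb(drives: int, drive_tb: float, replicas: int) -> float:
    """Every object is stored `replicas` times, so usable space is raw space divided by that."""
    return raw_tb(drives, drive_tb) / replicas

def comfortable_tb(drives: int, drive_tb: float, replicas: int, nearfull: float = NEARFULL) -> float:
    """Roughly how much can be stored before OSDs approach the nearfull warning threshold."""
    return usable_tb(drives, drive_tb, replicas) * nearfull

if __name__ == "__main__":
    print(f"raw: {raw_tb(DRIVES, DRIVE_TB):.1f} TB")
    print(f"usable at size={REPLICAS}: {usable_tb(DRIVES, DRIVE_TB, REPLICAS):.1f} TB")
    print(f"below nearfull: {comfortable_tb(DRIVES, DRIVE_TB, REPLICAS):.1f} TB")
```

The nearfull ratio is checked per OSD, so uneven data placement can trip it before the pool as a whole reaches that level.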

I have some friends and family who are looking to invest in this business to help get it up and running. I have been saying that the best way to show support is to buy my services so I can see that things are working, but I keep running into the problem that these folks are not tech-savvy enough to have any use for server space.

I don’t want to just accept their patronage with nothing in return; that doesn’t give me the proper motivation. It raises my bottom line, but it feels like a sugar rush: empty calories that will someday disappear.

So I had an idea. This whole Folding@home deal is pretty slick. These folks looking to invest could buy VPSes from me to dedicate to the project under their own name. That way they can see, at least somewhat tangibly, that the money they give me not only helps me out but also goes to a good cause, rather than just being handed to me. They can see pretty graphs showing they have X points and have completed Y work units.

What are the L1T thoughts on this?

Having someone invest in your business doesn’t mean it’s a money sink for them; that’s not investment we’re talking about, that’s charity.

If you have some kind of plan to pay them back with interest you could simply do that. That’s what investing is about.

Folding@home isn’t a bad thing, though. You can still do that if you have left-over capacity; you just don’t need to “reserve” it or “have other people rent hardware from you” for that…

Also, are you sure that you can only use 4 IPv4 addresses from the /28?

Keep in mind that you don’t necessarily need a contiguous network segment(?).

(again, my opinion based on nothing, I’m an idiot.)

You are right here. I kept trying to use contiguous network segments because that makes routing easy at L3, but Proxy-ARP would let me use 12 addresses at L2. The only problem is I can’t seem to figure out how to get it to work on my router, and troubleshooting this stuff is a royal PITA.
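With Proxy-ARP, the router answers ARP requests on the WAN side for addresses that actually live behind it, so the /28 can be treated as one flat L2 segment instead of being carved into /30s. One way the 12-address figure could come out, assuming the two reserved hosts are the ISP gateway and the router’s own WAN address (all addresses here are hypothetical):

```python
import ipaddress

BLOCK = ipaddress.ip_network("203.0.113.0/28")     # hypothetical stand-in for the real /28
GATEWAY = ipaddress.ip_address("203.0.113.1")      # assumed ISP gateway address
ROUTER_WAN = ipaddress.ip_address("203.0.113.2")   # assumed address kept on the local router's WAN port

def proxy_arp_candidates(block, reserved):
    """Host addresses left for downstream machines when the whole block is one L2 segment."""
    # hosts() already excludes the /28's network and broadcast addresses (14 remain).
    return [h for h in block.hosts() if h not in reserved]

if __name__ == "__main__":
    free = proxy_arp_candidates(BLOCK, {GATEWAY, ROUTER_WAN})
    print(len(free), "addresses:", free[0], "-", free[-1])
```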

I figure I won’t worry about it for now. I will soon have my own internet hooked up and can tinker with it without fussing with another business’s network.

I just need to somehow get past T2 support for Comcast to get things properly routed. I have a strong suspicion that their network techs have never actually taken any networking courses and just get to the “that’s not possible” part of the flowchart and go no further.

I dig it. It’s a service. Some people would love to contribute but do not have the skills to do so.

Companies can pay a carbon tax; IMO people should be able to pay an “I can’t set it up but I’d like to fund compute that cures disease” “tax”.

Isn’t folding@home moot tho?

FedEx says that my box of hard drives is most definitely, 100%, totally not lost at all, but they also don’t know where it is and have opened an investigation to find it.

So… first boot of everything as a cluster is delayed slightly. On the bright side I should have fiber hooked up this week, maybe, if they don’t screw it up again.

I like that you got some optimistic intern

I had the same issue with UPS, which lost 24 hard drives for my cluster; they ended up finding them pretty quickly once I informed the retailer (CDW) that I didn’t have my drives.

Drives came in. I made a post over in the Lounge, but the TLDR is that the shipping label was scuffed and wouldn’t scan but they found the box and got them delivered.

I spent a few hours this evening screwing the drives into their trays. Had my pup and beer to keep me company.

Unfortunately, I am having issues with the RAID card in the R610. I dropped a few drives in and told it to create a RAID-1 array. Everything checks out, no SMART errors, but once the array is created it marks every disk in the array as “failed” and will go no further. I suspect I’ll need a new RAID controller; I’m not terribly pleased with the PERC 6/i anyway. I will do more testing tomorrow and see if I can get an array working on another server.

What size/model drives? The old PERC 6/i tops out at 2TB drives, if I recall. You can buy an old, used H700 RAID card for under $20 and install it in place of the PERC 6/i. Neither supports anything other than 512-byte sectors (no 4Kn drives), which seems to become a huge problem above the 6TB mark. Drives destined for SAN equipment are often formatted with 520-byte sectors, but that’s usually fixable.
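The 2TB ceiling is consistent with a controller that uses 32-bit logical block addresses, which is the usual cause of that particular limit; treat that as an assumption about the PERC 6/i rather than something confirmed here. The arithmetic:

```python
# Maximum capacity addressable with a fixed-width LBA (logical block address).
# Assumption: the controller uses 32-bit LBAs, the usual cause of a ~2TB ceiling.
LBA_BITS = 32

def max_addressable_bytes(sector_bytes: int, lba_bits: int = LBA_BITS) -> int:
    """Number of addressable sectors (2**lba_bits) times the sector size."""
    return (2 ** lba_bits) * sector_bytes

if __name__ == "__main__":
    for sector in (512, 4096):
        b = max_addressable_bytes(sector)
        print(f"{sector}-byte sectors: {b} bytes = {b / 1e12:.2f} TB = {b / 2**40:.0f} TiB")
```

The 4096-byte row is only for comparison, since neither card supports 4Kn drives.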