Wall-of-text of managing a small data center (slightly ranty)

I have live migrated Oracle DB VMs in Proxmox without users ever noticing. Lots of times. And lots of VMs at one time (well, I never went over 12 parallel migrations and I think 40 VMs, so ok, maybe not “many,” but not few either). When there were OS updates for Proxmox requiring reboots, I would move VMs, reboot the systems, move them back alongside other VMs, update the other system, then re-balance the VMs on the hosts a little. My hosts never saw more than 9 months of uptime (on average, 3 to 4 months of uptime). I have been using 2x 1 Gbps ports in LACP (mode access) for the NAS connections and 2x 1 Gbps in LACP (mode trunk) for all other traffic including management (the network Proxmox uses to talk to other hosts, migrate VMs, receive commands etc.).

Before me and my colleagues standardized every host to Proxmox and got rid of of 10+ year old hardware that was still in production (eek), I had some hosts with libvirtd on CentOS 6 and others on CentOS 7. I live migrated using Virt-Manager, but you could migrate only on hosts running the same major version of CentOS. And everything was behind OpenNebula, but it wasn’t configured that great (and just like the old CentOS 6, it has barely seen any updates). We did the move to Proxmox before CentOS 6 got EOL, but some hosts and VMs didn’t see updates (they were like, CentOS 6.4 - 6.7 or something) and the move happened in the summer of 2019 (fun times). Still, both libvirtd and proxmox’s qm use KVM and QEMU underneath, so live migration will work flawlessly on both.

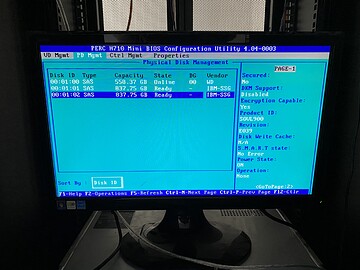

Our small data center was used mostly for development of a suite of programs, but we did have some services that were considered “production,” like our GitLab and Jenkins servers, Samba (albeit this one was a physical server), our VPNs and our public websites (the later which were in the DMZ) and a few others. Despite this, we managed to survive without HA. None of our VMs were highly available. And we only used NASes, each VM had its disk(s) on them. They were running NFS and all Proxmox hosts were connected to them, so we didn’t have to shuffle disks around when live migrating, just the RAM contents between the hosts. We did have backups for basically anything, even the test DBs. While we were in charge, no host or NAS decided to die on its own. And if we noticed issues like failed disks or other errors, we got to moving everything somewhere else. I heard however that there was one time when an old hardware raid card died, leaving all the data on that host unusable (however, backups were being done ok even before me and my team came - but I can only imagine the pain to restore all that back). There was no replacement for that card. The server had 12x SAS Cheetah 500 GB 15k RPM drives, so you can imagine how old that thing was (it was just laying around when I came).

We used Zabbix, then Centreon. I preferred Zabbix personally, it seemed more solid (and I prefer agent-based monitoring), but I did a lot of custom alerts in Centreon with nothing but bash and ssh, which was cool in itself. But then again, Centreon uses SNMP and I was using some SSH to monitor stuff, this wasn’t ideal. At home I’ve installed Prometheus and Grafana, but didn’t get around to configure alerting and other stuff (still haven’t made a mail server). So make sure you configure monitoring early. It’s not a fun chore when you have to manage 100s of VMs, but it should be more doable when you only have to manage the hosts themselves (we were only 3 people with both Linux and Networking knowledge, with no NOC).

Having things on NASes is not so bad, but it’s definitely not as resilient as Ceph / Gluster. However, that hasn’t been an issue at our small scale (9 chunky virt hosts and about 7 NAS boxes). We started with 5 (not full) racks and ended with almost 2 full racks after decommissioning the dinosaurs.

Also, having just an aggregated 2 Gbps pipe (give or take, more take than give) wasn’t ideal either. Frankly, we started experiencing some network issues when we moved from balance-alb on 1 switch (well, they were 3 switches, 1 for management and VM connection and 2 non-stacked switches for the storage network, the later 2 being connected by 1x 10G port on the back), to moving everything on 2 stacked switches and doing LACP (due to the switches getting very loaded by LACP, we had 12 aggregation groups on a 48x2 stacked switch, the maximum allowed). These issues led to the very last few DB backups to not complete in time and fail the backup jobs. Aside from the issues with nightly backups, we encountered some latency / slow responding VMs during the day. These issues weren’t apparent before the switch (pun intended).

Depending on how much resiliency you plan your data center to have, you can do some tricks to save some money now and upgrade later when you can afford it, like not having stacked switches and using balance-alb link aggregation. But that implies having some additional costs later, so it’s either an issue of cash flow, or an issue of total investment costs. Your choice. However, 1 thing I highly recommend is that if you don’t use 10G switches on every connection (which I highly recommend at least for storage), at the very least use minimum 2.5 Gbps on the storage and management interfaces. And split them into 3 different port groups: 1 group for storage access (and have a separate network for it), 1 group for management and 1 group for the VM connection to the internet, so at minimum you need 6 ports for the hosts and 4 for the NASes. Proxmox doesn’t necessarily recommend that the management interface / group have high bandwidth (so not necessarily 10G), but they recommend having low latency, because Corosync is latency sensitive. That said, it still worked for us without a hassle on a shared 2 G pipe between the hosts and the VMs connections (there wasn’t really much traffic going on between the VMs during the day, so YMMV).

My inner-nerd tells me that it’s bad to be an engineer (i.e. create a barely working infrastructure - for reference, it’s an old joke: “people create bridges that stand; engineers create bridges that barely stand,” which is related to being efficient with materials and budgeting and stuff). But nobody will blame you if you don’t go full-out when building your data center. I don’t remember seeing anything related to your LAN speed, but in the pictures, I only saw 2 switches, 1 with 24 port and another with probably 12.

I know people who worked at some VPSes and their company was acquired by a big conglomerate alongside other 4 VPSes. Apparently this is the norm for VPSes: none of them had HA for their VMs. If you bought even the best option they had, there was no HA option. They were the only one out of the 5 where you could order dedicated servers and request Proxmox or CentOS to be installed on them and make a HA cluster yourself. But 3 dedicated servers would be costly.

To improve on their density, VPSes don’t bother with HA, they just give you a VM, they make snapshots regularly and if something goes down, usually they have a SLA with at most 4h response time (so they can take at most 4h to just respond / notify you that something is wrong on their side) and when you agree to their services, they have a total downtime for your services of 2 weeks / year, so plenty of time to just restore VMs from backups if something went wrong. If you lose money as a customer during that time, it’s not their problem, it only becomes if over the course of 1 year, you get more than 2 weeks of total downtime. So VPS providers can have a big profit margin if they don’t do HA. One issue I’ve been told about was when one of their hosts just went down, wouldn’t power on, so they just mailed their customers and restarted their VMs on other hosts (so basically 20 minutes of work). Another time, one of their NASes went down. VMs obviously crashed. What did they do? Just mailed their customers, took the HDDs out of it, put them in another box, started everything back up and they were up to the races. At most 2h of work, if even that. And people can’t complained, they agreed to this.

VPSes depend a lot on snapshots, backups and resilient hardware. They could use cheaper hardware and do HA, but that wouldn’t be as economically feasible, unless they charge a lot more for features like HA and better SLA. So the work the level 1 NOC people do at a VPS provider is monitoring hosts, connectivity, switching hardware around (like failed disks), physically installing new racks and servers and respond to customers. Unless they have other issues, the biggest work they face is monitoring and balancing VMs on hosts. They do actually take their time to look to see which VMs use more resources and balance their hosts accordingly, like 2-10 more resource hogging VMs alongside 20-30-40 (however many can fit) lower demanding VMs on 1 host. The level 2 people are rarely needed (they’re mostly on-call), they just configure new hosts and if the customer is paying for additional support and the level 1 guys can’t solve the issue in a timely manner (say, 1 hour), they grab the steering wheel and solve the issues themselves.

So, conclusion time: aside from the layer 2+3 design choices, you also need a monitoring system (Zabbix / Prometheus), a ticketing system (OTRS), a page that customers can use to access their VMs (I never allowed people to access Proxmox’s management interface, not sure what it’s capable of when used in a VPS scenario, OpenNebula is more catered towards IaaS and VPS stuff - the VPS providers I talked about above were using Proxmox and on their VMs cPanel, I can guess Cockpit can be an alternative, I have no idea how to run a VPS) and some automation with a payment system that will stop or pause VMs if customers don’t pay (you could do it manually at the beginning) and maybe mail them 4 days before the due date (OpenNebula has some “funds / credits” system built-in, never took advantage of that, because my data center was for internal use only).

There may be more stuff that I don’t remember that I probably should mention, but I’ll leave some time to digest this long-ass comment for now.