I really liked Wendell’s comments about a Lego build with hints of black magic. I’ve been watching Level1 for a little while now and have honestly used several good ideas from you. I know this is way over the top, but I had a few requirements:

No spinning rust

I have 3 gaming rigs, and all of them have iSCSI hard drives (Steam libraries)

So many devices! 5 TVs, 6 cell phones, 2 PlayStations, 2 Xboxes, 4 tablets, 3 gaming PCs, a network printer, a phone line (fax mostly)… All of these connect through a Comcast modem that is currently running at 1.2Gb/s download and 40Mb/s upload. The modem is connected via 2.5Gb/s to an 8-port multigig 10Gb unmanaged switch (supports 10G, 2.5G, 1G) and a couple of other unmanaged switches and stuff… Anyway, my point is that the amount of data these devices produce is out of control, so I built a NAS.

TrueNAS Scale server build:

AMD Ryzen 7 PRO 4750G Processor

ASRock X470D4U2-2T motherboard (dual 10G NIC, IPMI, PCIe bifurcation)

128GB (4x32) ECC UDIMM DDR4-2666 PC4-21300 Memory

14 - Micron 1100 2TB (2048GB) 2.5" SATA SSD, MTFDDAK2T0TBN (2 x 7-SSD raidz2 vdevs, 17.21 TB total)

2 - Solidigm Gen4 2TB NVMe drives (mirrored pool in a PCIe slot bifurcated x4/x4, 2TB total pool)

2 - Intel 120GB SSDs, mirrored (operating system)

M.2 slot 1 (Gen3 x2, 16Gb/s) - ASM1166 6Gbps Ph516 6-port expansion interface card with Smart Indicator, Gen3 x2

M.2 slot 2 (Gen2 x4, 20Gb/s) - ASM1166 6Gbps Ph516 6-port expansion interface card with Smart Indicator, Gen3 x2

6 motherboard SATA III ports

Total SATA ports: 18

3 - Icy Dock 6-bay hot-swappable SSD drive caddies, 18 hot-swap bays total

Thermaltake 600 Watt power supply

Rack mount chassis
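
For anyone wondering how 14 x 2TB ends up as 17.21 TB, here is a rough sketch of the raidz2 arithmetic for the two 7-SSD vdevs listed above. It assumes each drive exposes exactly 2048 GB (decimal) and that the 17.21 figure is in TiB; both are my assumptions, and the function name is just for illustration. It ignores ZFS padding, metadata, and reserved space, so it only gives an upper bound.

```python
# Rough usable-capacity estimate for a pool of identical raidz vdevs.
# Ignores ZFS padding, metadata, and reserved space, so a real pool reports less.

TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte, as many OS tools report


def raidz_data_bytes(vdevs: int, width: int, parity: int, disk_bytes: int) -> int:
    """Bytes left for data after subtracting parity disks from every vdev."""
    if parity >= width:
        raise ValueError("parity must be smaller than vdev width")
    return vdevs * (width - parity) * disk_bytes


# Figures from the post: 2 vdevs, 7 SSDs each, raidz2 (2 parity disks per vdev).
# Assumption: each SSD exposes exactly 2048 GB (decimal).
disk_bytes = 2048 * 10**9
ideal = raidz_data_bytes(vdevs=2, width=7, parity=2, disk_bytes=disk_bytes)

reported_tib = 17.21  # figure from the post, assumed to be TiB

print(f"ideal data capacity: {ideal / TB:.2f} TB = {ideal / TIB:.2f} TiB")
print(f"reported / ideal:    {reported_tib / (ideal / TIB):.1%}")
```

The gap between the ideal figure and what the pool reports is expected; the sketch does not try to break that overhead down.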

Test data for this server: idle load about 40 watts, max load about 110 watts, idle temp 32°C, max temp 75°C.

This file server and my lab server both have dual 10G NICs connected to the network. The 3 gaming rigs also have 10G connections to the network.

As I am just a basic user, all of my network equipment is unmanaged and everything is RJ45 Cat6 (however, there are 12 wall drops that are dual Cat5e, the longest being 60 feet). Plans to upgrade the wiring are in the works…

There are 2 NVMe 2TB drives in a mirror for VM use, but no VMs are configured at this time.

I did test this configuration with an LSI 12Gb/s 16i expansion card. Yes, the LSI card performed better than the M.2 cards, though not by much, but the power consumption difference is HUGE! With the LSI card, idle averaged 60 watts and max load was 160 watts. The overall temperature in the server case was also significantly higher.
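
To put the wattage difference in perspective, here is a quick sketch that turns the measured numbers (40 W / 110 W with the M.2 cards, 60 W / 160 W with the LSI card) into yearly energy for a box that runs 24/7. The electricity price is a made-up placeholder, not my actual rate, so swap in your own.

```python
# Yearly energy difference between the two controller setups, assuming 24/7 uptime.

HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float) -> float:
    """Energy used in one year by a constant load of `watts`."""
    return watts * HOURS_PER_YEAR / 1000


# Wattages from the post (approximate wall readings).
m2_idle, m2_max = 40, 110     # ASM1166 M.2 cards
lsi_idle, lsi_max = 60, 160   # LSI 16i card

PRICE_PER_KWH = 0.15  # hypothetical price, replace with your own rate

idle_delta_kwh = annual_kwh(lsi_idle - m2_idle)
max_delta_kwh = annual_kwh(lsi_max - m2_max)

print(f"extra energy at idle:     {idle_delta_kwh:.1f} kWh/year "
      f"(~{idle_delta_kwh * PRICE_PER_KWH:.2f} per year at the assumed price)")
print(f"extra energy at max load: {max_delta_kwh:.1f} kWh/year "
      f"(~{max_delta_kwh * PRICE_PER_KWH:.2f} per year at the assumed price)")
print(f"idle draw increase:       {(lsi_idle - m2_idle) / m2_idle:.0%}")
```

A NAS like this sits at idle most of the time, so the idle row is the one that matters most here.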

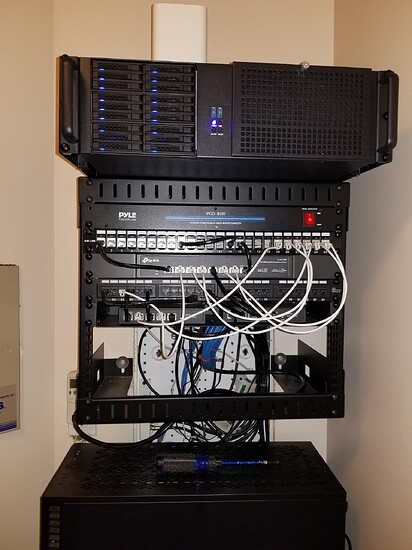

I have been testing this build for a couple of months and finally took it off the test bench and put it in a server case and mounted it.

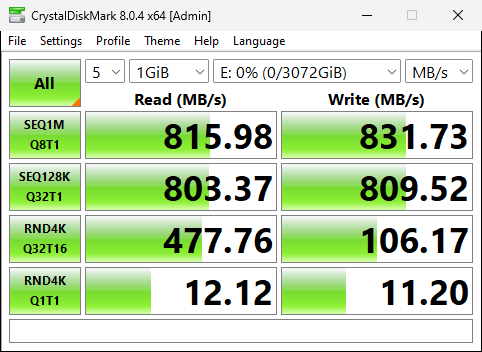

Is this a pretty decent score for this system? The CrystalMark score is from a gaming rig with a 3TB iSCSI hard drive.
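
As a yardstick for judging the sequential numbers, here is a rough sketch of the theoretical ceilings along the path from a gaming rig to the SATA pool. It assumes a single 10GbE port per client, a standard 1500-byte MTU, and no TCP options, and it only counts line encoding and frame headers, not iSCSI or ZFS overhead. The function names are made up for this example.

```python
# Theoretical throughput ceilings (MB/s, decimal) for each hop, sequential I/O only.


def pcie_mb_s(gt_per_s: float, lanes: int, encoding: float) -> float:
    """PCIe link rate after line encoding; packet overhead is not included."""
    return gt_per_s * 1e9 * lanes * encoding / 8 / 1e6


def ethernet_tcp_mb_s(link_gbps: float, mtu: int = 1500) -> float:
    """TCP payload rate for full-size frames over IPv4 with no TCP options."""
    payload = mtu - 20 - 20          # minus IPv4 and TCP headers
    on_wire = mtu + 14 + 4 + 8 + 12  # Ethernet header, FCS, preamble, inter-frame gap
    return link_gbps * 1e9 / 8 / 1e6 * payload / on_wire


ceilings = {
    "10GbE, one port (TCP payload)": ethernet_tcp_mb_s(10),
    "M.2 slot 1, PCIe Gen3 x2": pcie_mb_s(8, 2, 128 / 130),   # 128b/130b encoding
    "M.2 slot 2, PCIe Gen2 x4": pcie_mb_s(5, 4, 8 / 10),      # 8b/10b encoding
    "6 SATA III ports behind one ASM1166": 6 * 600.0,         # 600 MB/s per port
}

for name, mb_s in sorted(ceilings.items(), key=lambda kv: kv[1]):
    print(f"{name:38s} {mb_s:7.0f} MB/s")

bottleneck = min(ceilings, key=ceilings.get)
print("lowest ceiling:", bottleneck)
```

Under these assumptions the single 10GbE link is the lowest ceiling, so a sequential result close to that figure would suggest the network, not the M.2 SATA cards, is what limits one client. Random 4K results depend mostly on latency and are not bounded in any useful way by this estimate.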