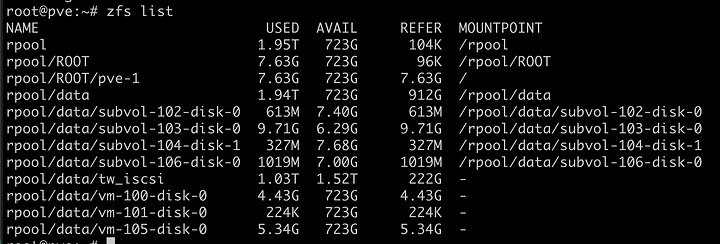

I am in the middle of a massive NAS consolidation project for my home lab, where I am bringing 4 or 5 NAS systems down to a single, monolithic system.

Since pretty much all of my storage is shared throughout the house anyway, my plan is to carve out something like a 10 TB iSCSI target that I can install Steam games on (and then enable compression and deduplication to reduce the total disk space that’s used).

The host OS is going to be Proxmox 7.3-3.

I see that you can mount an iSCSI target that’s provided by another system, but I don’t see a direct way to CREATE an iSCSI target on the Proxmox host itself that you can “export” to the VMs that are hosted by that same system.

Is there a way of doing that?

The only other way that I would think of doing something like that would be to basically create however many virtual disks (as a qcow2 file) on the host storage itself, and then pass those along to a VM running TrueNAS, (so it would be iSCSI-on-ZFS(VM)-on-ZFS(host)) and then let TrueNAS deal with presenting an iSCSI target for other systems to use.
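To make that layering concrete, here is a rough sketch of the host-side half of the workaround. It only prints the `qm set` commands rather than running them. The VM ID (`100`), the storage name (`local`, assumed to be a file-based storage that supports qcow2), the disk count, and the per-disk size are all hypothetical placeholders, and `scsi0` is assumed to already hold the TrueNAS boot disk.

```shell
#!/bin/sh
# Dry-run sketch: print the commands that would add qcow2 data disks to a
# TrueNAS VM. All values below are assumptions, not taken from a real setup.
VMID="${VMID:-100}"                     # hypothetical VM ID of the TrueNAS guest
STORAGE="${STORAGE:-local}"             # hypothetical file-based Proxmox storage
DISK_COUNT="${DISK_COUNT:-4}"           # hypothetical number of virtual disks
DISK_SIZE_GIB="${DISK_SIZE_GIB:-2560}"  # 4 x 2560 GiB is roughly 10 TiB raw

# Print one `qm set` command per data disk, starting at scsi1
# (scsi0 is assumed to be the boot disk).
plan_disks() {
    i=1
    while [ "$i" -le "$DISK_COUNT" ]; do
        echo "qm set $VMID --scsi$i $STORAGE:$DISK_SIZE_GIB,format=qcow2"
        i=$((i + 1))
    done
}

plan_disks
```

Inside the VM, TrueNAS would then build its own pool on those virtual disks and present the iSCSI target from there, which is the iSCSI-on-ZFS(VM)-on-ZFS(host) stack described above.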

One of the biggest downsides that I can see with setting it up this way is that the VM is an extra layer on top of everything, rather than the iSCSI target being able to interact directly with the block storage on the ZFS pool.

(If that’s a terrible way of setting that up, please let me know if there is a better way. Because that’s how I have my current TrueNAS server set up.)

So, I wasn’t sure if anybody has set something up like this before.

Thanks.