yeah i’m just talking about an iSCSI image, it’ll be invisible to the UI

Oh yeah. Yup. Gotcha. Now I get what you mean.

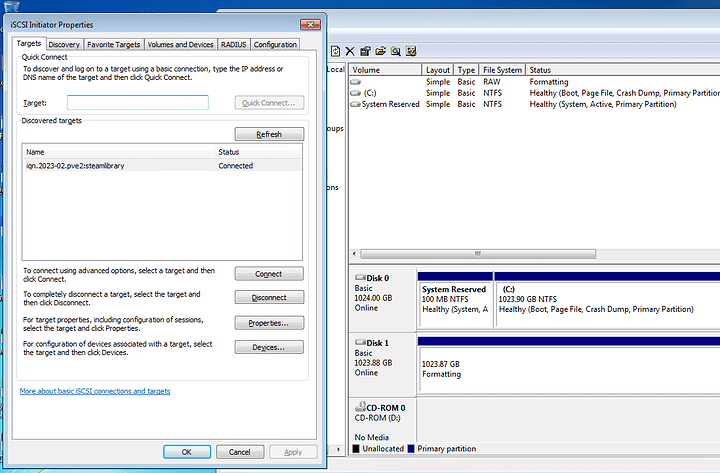

So this is what I get after following your instructions:

```
root@pve2:/etc/samba# tgtadm --lld iscsi --op show --mode target
Target 1: iqn.2023-02.pve2:steamlibrary
    System information:
        Driver: iscsi
        State: ready
    I_T nexus information:
    LUN information:
        LUN: 0
            Type: controller
            SCSI ID: IET 00010000
            SCSI SN: beaf10
            Size: 0 MB, Block size: 1
            Online: Yes
            Removable media: No
            Prevent removal: No
            Readonly: No
            SWP: No
            Thin-provisioning: No
            Backing store type: null
            Backing store path: None
            Backing store flags:
        LUN: 1
            Type: disk
            SCSI ID: IET 00010001
            SCSI SN: beaf11
            Size: 1099512 MB, Block size: 512
            Online: Yes
            Removable media: No
            Prevent removal: No
            Readonly: No
            SWP: No
            Thin-provisioning: No
            Backing store type: rdwr
            Backing store path: /dev/export/steamlibrary
            Backing store flags:
    Account information:
    ACL information:
        ALL
```

It looks like it worked.

Thank you!!!
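To check the same thing without reading the whole listing, here is a small sketch that pulls out each LUN's type and backing store path. It assumes the output layout shown above, and `lun_summary` is a made-up name for this example. A `disk` LUN whose path is your volume (rather than `None`) is the sign the export worked; the LUN 0 `controller` entry with no backing store appears to be one tgt adds to every target on its own.

```shell
#!/bin/sh
# lun_summary: read `tgtadm --lld iscsi --op show --mode target` output on
# stdin and print one line per LUN: "<lun number> <type> <backing store path>".
lun_summary() {
    awk -F': ' '
        # Drop leading indentation so each line starts with its field name
        # (changing $0 makes awk re-split the fields on ": ").
        { sub(/^[ \t]+/, "") }
        /^LUN: /                { lun = $2 }
        /^Type: /               { type = $2 }
        /^Backing store path: / { print lun, type, $2 }
    '
}
```

Usage: `tgtadm --lld iscsi --op show --mode target | lun_summary`. With the listing above it should print one `controller` line with `None` and one `disk` line ending in `/dev/export/steamlibrary`.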

Nice!!!

Happy to have helped!

I don’t know if you have your own personal blog or something, but if you do, this would be AWESOME information to share with the net.

The instructions were clear and concise.

And it worked! (which is even better!!!)

Hey, thanks for the compliment man, appreciate it

nah, not the type, not hubristic enough to think people want to hear my life story, but I could do a wiki on here or something

I just like answering questions if I can

I think you can still store all kinds of data, even with datasets/pools you create in the Proxmox GUI.

As far as I know, adding a ZFS dataset in the GUI only lets Proxmox interact with it, for example to store VM disk images on it. I also like to add my data storage so I can see the remaining space and have a better overview.

You can also choose how images are stored. If you add a ZFS dataset directly, Proxmox will create ZFS volumes for VMs; if you add a dataset as a directory, VM disks get stored as raw or QCOW2 image files.

You could do both.
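A sketch of what "both" could look like from the command line with `pvesm`, assuming a dataset `tank/vmdata` mounted at `/tank/vmdata` and the storage IDs `vmdata-zfs`/`vmdata-dir` (all hypothetical names). `run` is a small helper that only prints the commands when `DRY_RUN=1`, so they can be reviewed before anything is changed.

```shell
#!/bin/sh
# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# add_storages DATASET MOUNTPOINT
# Registers one ZFS dataset with Proxmox both ways. The storage IDs
# vmdata-zfs and vmdata-dir are hypothetical placeholders.
add_storages() {
    dataset=$1 mountpoint=$2
    # zfspool type: Proxmox creates zvols for VM disks (datasets for
    # containers); only disk images and container volumes are allowed.
    run pvesm add zfspool vmdata-zfs --pool "$dataset" --content images,rootdir
    # dir type on the dataset's mountpoint: VM disks become raw/qcow2 files,
    # and ISOs, templates, backups and snippets can be stored as well.
    run pvesm add dir vmdata-dir --path "$mountpoint" \
        --content iso,vztmpl,backup,snippets,images,rootdir
}
```

The narrow content list of the `zfspool` type is consistent with the limitation described later in the thread (only disk images and containers on GUI-added ZFS storage); the `dir` entry is what opens up the other content types.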

I actually took your instructions and condensed them into my OneNote for myself, so that I have them if/when I need to redeploy. (Right now I’m just using one of my towers to test the various software and make sure everything works before I buy the server.) I think they could help other people who want to deploy something like this in the future.

It condenses a lot of other sources of the same info down to 5, maybe 7 steps. And the fact that IT WORKS is even more of a bonus, because outdated instructions sometimes don’t work when the system you’re running them on is newer or a little different from the author’s environment. But in our case, it sounds like you just did the same thing in Proxmox, so yay!

(sidebar: the service is now called tgt rather than tgtd.)

Not in my experience from my testing.

As far as I can tell, I was able to store disk images and containers, and that was it. Everything else (VZDump backups, snippets, ISO images, etc.) couldn’t be stored on a ZFS pool created via the GUI.

Don’t really know or understand why there’s a limitation, but apparently there is one.

shrug

That’s why I ended up creating the ZFS pool via the command line instead: the mount point is exactly the same, but because I added it in as a directory, I can store the other content types as well, beyond just disk images and containers.

Not a big deal.

lmaooooo that was the goal

I can’t tell you how many times I’ve tried to follow instructions from various blogs etc. on the internet, and because I’m not using that exact environment, some of it doesn’t work.

(There are a LOT of posts/internet “white space” talking about, for example, how to deploy Samba. With the most recent one (where I was trying to set up an SMB share so that my Windows VM can mount it from the host), I got an “access denied” dialog box in Windows when I tried to connect to it. So that was annoying. Luckily, I think I’ve got it working now, but this is the kind of thing I mean: you execute their instructions and at the end of it, it doesn’t even work. It might have worked for them when they tried it, but when I try it fresh, it doesn’t. And I’m not a sysadmin or a programmer/developer, so it’s challenging to figure out WHY following those instructions didn’t work when it otherwise should have.)

[/rant]

yeah that’s completely fair

it’s hard to give context to instructions

Stupid question:

In your step here:

```
tgt-admin --dump | grep -v default-driver > /etc/tgt/conf.d/my-targets.conf
```

Is that so that the iSCSI target will persist through reboots/shutdowns?

(i.e. the iSCSI target will come back online after a reboot and/or a shutdown?)

Thank you.

yep

Thank you.

So, to make sure that I understand that – how does that work?

Is that similar to an rc.d script, where it basically tells the system to run that set of commands to bring the iSCSI target back online? (i.e. the system doesn’t do that inherently or natively via the tgt system service, and you have to tell it, semi-explicitly, to bring the iSCSI target you just created (plus any other targets) back online?)

I’m trying to learn more about how that command works and what it is actually doing.

Thank you.

tgtd has no idea which targets to initialize when it starts, so on startup the following things happen:

- tgtd starts with a blank configuration

- tgtd opens the configuration file at `/etc/tgt/targets.conf`. The `targets.conf` file is usually blank apart from the following statement: `include /etc/tgt/conf.d/*.conf`. `include` is a statement that tells it to load any file ending in `.conf` in the `/etc/tgt/conf.d` folder as configuration for the targets to load.

it’s worth noting that folders ending in “.d” (`<folder name>.d`) are a Linux convention for “drop-in” directories. in tgt’s case you have “drop-in” conf files. Drop-in configuration is a far better way to configure Linux apps because it lets you add and remove elements of the app configuration without changing (and therefore risking damage to) the root configuration file.

- tgt reads this configuration, and sets up what it was told to do
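To see the drop-in idea in action, this sketch lists the files an `include /etc/tgt/conf.d/*.conf` line would match, using plain shell globbing. It only mimics the glob, not how tgt itself parses the include, and `list_dropins` is a hypothetical name.

```shell
#!/bin/sh
# list_dropins [DIR]: print the files that a "DIR/*.conf" include would match.
# DIR defaults to /etc/tgt/conf.d; pass a scratch directory to experiment.
list_dropins() {
    dir=${1:-/etc/tgt/conf.d}
    for f in "$dir"/*.conf; do
        # With no matches the glob stays literal, so skip non-existent paths.
        [ -e "$f" ] || continue
        echo "$f"
    done
}
```

One practical consequence: renaming a file to something like `my-targets.conf.bak` means it no longer matches the glob, which is a simple way to set a target definition aside without deleting it.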

these files aren’t scripts that replay what you typed to set it up in the first place; they are just data that the software understands well enough to set itself up again the way you want it

the dump command tells it to write out the running configuration in a format it can read back when it’s restarted.

a nice analogy is the smb.conf file for Samba: Samba won’t know what to do unless you direct it via the config file

rc.d scripts, on the other hand, are shell scripts that can do arbitrary things

hope that makes sense mate
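The save step from earlier in the thread can be wrapped in a small function; `save_targets` is a hypothetical name. It does the same `grep -v default-driver` filtering, but writes to a temporary name first (one that doesn't end in `.conf`, so the include glob won't pick it up) and refuses to replace the file if the filtered dump comes out empty.

```shell
#!/bin/sh
# save_targets FILE: read `tgt-admin --dump` output on stdin, drop the
# default-driver line as the one-liner in this thread does, and install the
# result as FILE. The temp file "FILE.tmp.<pid>" is only moved into place on
# success, so an empty dump never overwrites a working config.
save_targets() {
    target_file=$1
    tmp_file="$target_file.tmp.$$"
    # grep -v exits non-zero when no lines survive, i.e. the dump was empty.
    if grep -v default-driver > "$tmp_file"; then
        mv "$tmp_file" "$target_file"
    else
        rm -f "$tmp_file"
        return 1
    fi
}
```

Usage: `tgt-admin --dump | save_targets /etc/tgt/conf.d/my-targets.conf`. To confirm it survives a restart, `systemctl restart tgt` (this will interrupt any connected initiators) and then `tgtadm --lld iscsi --op show --mode target` should list the target again.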

Gotcha.

Again, thank you for your detailed, yet concise explanation.

I really appreciate you taking your time to educate me in regards to this.

more than welcome mate

Hey there.

Quick question for you - so I deployed the iSCSI target based on the instructions provided here in Proxmox 7.4-3.

Do you ever notice that the iSCSI target is causing a ZFS pool to temporarily hang and/or SIGNIFICANTLY increase the system load averages?

(On my 64-thread dual Xeon E5-2697A v4 system (16 cores/32 threads per CPU), I am currently getting load averages of 127.77, 116.91, 101.26.)

Do you ever see or notice this unusually high load on the system with this iSCSI deployment?

Your help is greatly appreciated.

Thank you.
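For scale: Linux load averages count tasks that are runnable plus tasks in uninterruptible sleep (state `D`, usually waiting on disk I/O), so a pool that hangs can push the load up without the CPUs actually being busy. This sketch divides a load average by the thread count and lists any `D`-state tasks; `load_per_cpu` and `d_state_tasks` are hypothetical helper names, and `nproc`/`ps -o` assume GNU coreutils and procps.

```shell
#!/bin/sh
# load_per_cpu LOAD [CPUS]: load average divided by the number of CPU
# threads; CPUS defaults to the output of `nproc` (GNU coreutils).
load_per_cpu() {
    cpus=${2:-$(nproc)}
    awk -v l="$1" -v c="$cpus" 'BEGIN { printf "%.2f\n", l / c }'
}

# d_state_tasks: list tasks in uninterruptible sleep (state D). Linux counts
# these toward the load average even though they are not using a CPU.
d_state_tasks() {
    ps -eo state=,pid=,comm= | awk '$1 == "D"'
}
```

With the numbers above, `load_per_cpu 127.77 64` prints `2.00`, i.e. roughly two tasks per hardware thread either running or blocked. If `d_state_tasks` shows many ZFS or tgtd threads during a spike, that points at storage I/O rather than CPU.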

i don’t notice this behaviour, though i moved it to nfs.

small record sizes? compression?

Well…I have compression and dedup running on the ZFS backend, but I didn’t think/expect that it was going to create a problem on the iSCSI side of things.

(It didn’t, previously, when I had it deployed under TrueNAS Core 12.0-U8.1.)

So I don’t know if this is like a Debian issue, a Proxmox issue, or what.

(I’m using the iSCSI target for my Windows Steam clients, so that if I install the same game on multiple systems, the ZFS dedup and compression will mean that it will take less space on disk than it otherwise would’ve.)
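A sketch for the "small record sizes? compression?" question: it reads `zfs get -H -o property,value` output and flags dedup, compression, and block sizes under 16K. The 16K cut-off is an arbitrary illustration rather than a tuning rule, and `check_zfs_props` and the dataset name `tank/steamlibrary` are hypothetical. For a zvol, `volblocksize` is the relevant property (`recordsize` applies to filesystems).

```shell
#!/bin/sh
# check_zfs_props: read `zfs get -H -o property,value ...` output (tab-separated)
# on stdin and print a note for settings that add work to every I/O.
# The 16K threshold for "small" blocks is an arbitrary example value.
check_zfs_props() {
    awk -F'\t' '
        $1 == "dedup" && $2 != "off" {
            print "dedup=" $2 ": each write needs a dedup-table lookup; the table should fit in RAM"
        }
        $1 == "compression" && $2 != "off" {
            print "compression=" $2 ": adds CPU work per block (cheap for lz4, more for gzip/zstd)"
        }
        ($1 == "volblocksize" || $1 == "recordsize") && $2 ~ /^[0-9]+(\.[0-9]+)?K$/ && $2 + 0 < 16 {
            print $1 "=" $2 ": small blocks mean more blocks, and dedup-table entries, per GB"
        }
    '
}
```

Usage: `zfs get -H -o property,value compression,dedup,volblocksize tank/steamlibrary | check_zfs_props`. `zpool status -D tank` also prints a summary of the dedup table, which shows how large it has grown relative to RAM.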