Continuing the discussion from Configuring a headless Linux OS installation strictly for virtualizing then managing a Windows installation?:

To summarize the previous thread:

- I'm trying to pass through the GPU so that a Linux host manages the computer while the users only see Windows.

- Hardware is the following:

  - AMD Athlon X4 845 CPU
  - ASRock FM2A88M PRO3+ motherboard
  - 8GB of DDR3 Kingston RAM
  - 120GB SSD
  - Nvidia GT 710 GPU

- Config file for the VM on Proxmox:

bios: ovmf

bootdisk: virtio0

cores: 4

cpu: host

efidisk0: local-lvm:vm-100-disk-2,size=128K

hostpci0: 02:00,pcie=1,x-vga=on,romfile=GK208_BIOS_FILE.bin

machine: q35

memory: 7168

name: Test-Windows

net0: virtio=96:24:CF:B4:AA:A2,bridge=vmbr0

numa: 0

ostype: win10

scsihw: virtio-scsi-pci

smbios1: uuid=f675c872-c390-4668-9c48-423f5b4ff239

sockets: 1

usb0: host=6-1.2 # Mouse & Keyboard

usb1: host=2-4 # Other

usb2: host=3-1.2.3.4 # Physical

usb3: host=1-1.2.3.4 # USB Ports

virtio0: local-lvm:vm-100-disk-1,cache=writeback,size=90G
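To double-check the passthrough line, the config can be read mechanically. This is only a sketch: the function names parse_vm_config, parse_hostpci and passthrough_warnings are made up, the parser is a simplification rather than Proxmox's own, and treating text after "#" as a personal note (like the USB labels above) is an assumption. It splits hostpci0 into the PCI address and its options and flags two things the OVMF guides rely on: UEFI firmware (bios: ovmf), and pcie=1 only together with machine: q35, which the Proxmox docs list as a requirement for the PCIe option.

```python
# Sketch only: a simplified reader for Proxmox "key: value" VM config lines.
# Helper names are hypothetical; this is not Proxmox's own parser.


def parse_vm_config(text: str) -> dict[str, str]:
    """Map each 'key: value' line to its value.

    Text after '#' is treated as a personal annotation and dropped (assumption).
    """
    config: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if ":" not in line:
            continue  # blank or annotation-only line
        key, value = line.split(":", 1)  # first colon only; values contain colons
        config[key.strip()] = value.strip()
    return config


def parse_hostpci(value: str) -> tuple[str, dict[str, str]]:
    """Split '02:00,pcie=1,x-vga=on' into ('02:00', {'pcie': '1', 'x-vga': 'on'})."""
    address, *options = value.split(",")
    opts: dict[str, str] = {}
    for option in options:
        key, _, val = option.partition("=")
        opts[key] = val
    return address, opts


def passthrough_warnings(config: dict[str, str]) -> list[str]:
    """Flag a few settings the OVMF passthrough guides assume."""
    warnings = []
    if config.get("bios") != "ovmf":
        warnings.append("bios is not ovmf (UEFI guest firmware)")
    if "hostpci0" not in config:
        warnings.append("no hostpci0 entry")
        return warnings
    _address, opts = parse_hostpci(config["hostpci0"])
    # Proxmox documents pcie=1 as needing the q35 machine model.
    if opts.get("pcie") == "1" and config.get("machine") != "q35":
        warnings.append("pcie=1 needs machine: q35")
    return warnings


if __name__ == "__main__":
    sample = """bios: ovmf
hostpci0: 02:00,pcie=1,x-vga=on,romfile=GK208_BIOS_FILE.bin
machine: q35
usb0: host=6-1.2 # Mouse & Keyboard"""
    cfg = parse_vm_config(sample)
    print(parse_hostpci(cfg["hostpci0"]))
    print(passthrough_warnings(cfg) or "no warnings")
```

Fed the lines from the config above, it finds nothing to complain about, which suggests the problem lies somewhere other than these particular settings.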

- The BIOS .bin file parses correctly and is UEFI-capable. The ROM parsing instructions come from one of the links below:

root@pve-001:~/rom-parser# ./rom-parser /usr/share/kvm/GK208_BIOS_FILE.bin

Valid ROM signature found @600h, PCIR offset 190h

PCIR: type 0 (x86 PC-AT), vendor: 10de, device: 128b, class: 030000

PCIR: revision 0, vendor revision: 1

Valid ROM signature found @fc00h, PCIR offset 1ch

PCIR: type 3 (EFI), vendor: 10de, device: 128b, class: 030000

PCIR: revision 3, vendor revision: 0

EFI: Signature Valid, Subsystem: Boot, Machine: X64

Last image
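For anyone wondering what the rom-parser output is reporting, here is a rough Python equivalent. It is a sketch, not the rom-parser source, and scan_rom is a made-up name. It assumes the standard PCI expansion ROM layout: each image starts with 0x55 0xAA on a 512-byte boundary, the word at offset 0x18 points to a "PCIR" structure, and that structure holds the vendor/device IDs, the image length in 512-byte units (+0x10), the code type (+0x14, where 0 is x86 and 3 is EFI) and a "last image" flag (bit 7 of +0x15). For the dump above it should list an x86 image followed by an EFI image, the latter being the part OVMF can use.

```python
import struct
import sys

# Code-type byte values used in the PCI Data Structure ("PCIR").
CODE_TYPES = {0: "x86 PC-AT", 1: "Open Firmware", 2: "PA-RISC", 3: "EFI"}


def scan_rom(data: bytes) -> list:
    """Return one dict per option-ROM image found in a ROM dump."""
    images = []
    offset = 0
    while offset + 0x1A <= len(data):
        # Each image starts with the 0x55 0xAA signature on a 512-byte boundary.
        if data[offset:offset + 2] != b"\x55\xaa":
            offset += 512
            continue
        # The word at +0x18 of the image header points at the PCIR structure.
        (pcir_ptr,) = struct.unpack_from("<H", data, offset + 0x18)
        pcir = offset + pcir_ptr
        if pcir + 0x16 > len(data) or data[pcir:pcir + 4] != b"PCIR":
            offset += 512  # signature without a valid PCIR; keep probing
            continue
        vendor, device = struct.unpack_from("<HH", data, pcir + 0x04)
        (blocks,) = struct.unpack_from("<H", data, pcir + 0x10)  # 512-byte units
        code_type = data[pcir + 0x14]
        last = bool(data[pcir + 0x15] & 0x80)  # bit 7 of the indicator byte
        images.append({
            "offset": offset,
            "pcir_offset": pcir_ptr,
            "vendor": vendor,
            "device": device,
            "code_type": code_type,
            "type_name": CODE_TYPES.get(code_type, "unknown"),
            "blocks": blocks,
            "last": last,
        })
        if last or blocks == 0:
            break
        offset += blocks * 512  # the next image follows this one directly
    return images


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as rom_file:
        for img in scan_rom(rom_file.read()):
            print(f"@{img['offset']:x}h: PCIR offset {img['pcir_offset']:x}h, "
                  f"type {img['code_type']} ({img['type_name']}), "
                  f"vendor {img['vendor']:04x}, device {img['device']:04x}"
                  + (", last image" if img["last"] else ""))
```

Saved under a hypothetical name such as scan_rom.py, it would be run as python3 scan_rom.py /usr/share/kvm/GK208_BIOS_FILE.bin.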

- I’ve followed these guides, mixing and matching settings:

https://pve.proxmox.com/wiki/Pci_passthrough

https://wiki.archlinux.org/index.php/PCI_passthrough_via_OVMF

I realize this isn’t Fedora 26 or Ryzen, but it's useful info regardless:

I could’ve easily missed a step in one of them, given all the information I’m trying to combine here.

- I have the latest Nvidia drivers installed (version 388).

- IOMMU is working:

root@pve-001:~# dmesg | grep -e IOMMU

[ 0.615425] AMD-Vi: IOMMU performance counters supported

[ 0.617069] AMD-Vi: Found IOMMU at 0000:00:00.2 cap 0x40

[ 0.618547] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

root@pve-001:~# find /sys/kernel/iommu_groups/ -type l

/sys/kernel/iommu_groups/7/devices/0000:00:13.2

/sys/kernel/iommu_groups/7/devices/0000:00:13.0

/sys/kernel/iommu_groups/5/devices/0000:00:11.0

/sys/kernel/iommu_groups/3/devices/0000:00:09.0

/sys/kernel/iommu_groups/11/devices/0000:00:15.2

/sys/kernel/iommu_groups/11/devices/0000:00:15.0

/sys/kernel/iommu_groups/11/devices/0000:05:00.0

/sys/kernel/iommu_groups/1/devices/0000:00:03.0

/sys/kernel/iommu_groups/1/devices/0000:02:00.1

/sys/kernel/iommu_groups/1/devices/0000:00:03.1

/sys/kernel/iommu_groups/1/devices/0000:02:00.0

/sys/kernel/iommu_groups/8/devices/0000:00:14.2

/sys/kernel/iommu_groups/8/devices/0000:00:14.0

/sys/kernel/iommu_groups/8/devices/0000:00:14.3

/sys/kernel/iommu_groups/6/devices/0000:00:12.2

/sys/kernel/iommu_groups/6/devices/0000:00:12.0

/sys/kernel/iommu_groups/4/devices/0000:00:10.0

/sys/kernel/iommu_groups/12/devices/0000:00:18.4

/sys/kernel/iommu_groups/12/devices/0000:00:18.2

/sys/kernel/iommu_groups/12/devices/0000:00:18.0

/sys/kernel/iommu_groups/12/devices/0000:00:18.5

/sys/kernel/iommu_groups/12/devices/0000:00:18.3

/sys/kernel/iommu_groups/12/devices/0000:00:18.1

/sys/kernel/iommu_groups/2/devices/0000:00:08.0

/sys/kernel/iommu_groups/10/devices/0000:00:14.5

/sys/kernel/iommu_groups/0/devices/0000:00:02.2

/sys/kernel/iommu_groups/0/devices/0000:00:02.0

/sys/kernel/iommu_groups/0/devices/0000:01:00.0

/sys/kernel/iommu_groups/9/devices/0000:00:14.4
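The raw find output is easier to read when it's grouped by number. In the listing above, group 1 holds 0000:02:00.0 and 0000:02:00.1 alongside 0000:00:03.0 and 0000:00:03.1. The Arch wiki's rule of thumb is that everything in the GPU's group goes to the VM together, except PCI root ports and bridges, which stay with the host. A minimal sketch (groups_from_paths and group_of are illustrative names) that regroups the paths and reports which group an address lands in:

```python
import re
from collections import defaultdict
from pathlib import Path

# Matches ".../iommu_groups/<group>/devices/<domain:bus:dev.fn>".
PATH_RE = re.compile(
    r"/iommu_groups/(\d+)/devices/"
    r"([0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])$"
)


def groups_from_paths(paths):
    """Build {group number: sorted list of PCI addresses} from sysfs paths."""
    groups = defaultdict(list)
    for path in paths:
        match = PATH_RE.search(path.strip())
        if match:
            groups[int(match.group(1))].append(match.group(2))
    return {group: sorted(devs) for group, devs in sorted(groups.items())}


def group_of(groups, address):
    """Return the IOMMU group containing `address`, or None if it is not listed."""
    for group, devs in groups.items():
        if address in devs:
            return group
    return None


if __name__ == "__main__":
    root = Path("/sys/kernel/iommu_groups")
    groups = groups_from_paths(str(p) for p in root.glob("*/devices/*"))
    gpu = "0000:02:00.0"  # the GT 710's address in this thread
    group = group_of(groups, gpu)
    if group is None:
        print(f"{gpu} not found under {root}")
    else:
        print(f"{gpu} is in IOMMU group {group} with: {', '.join(groups[group])}")
```

Reading sysfs directly gives the same paths as the find command, just without a shell in between.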

- The vfio-pci driver is being used correctly:

root@pve-001:~# lspci -k

02:00.0 VGA compatible controller: NVIDIA Corporation GK208B [GeForce GT 710] (rev a1)

Subsystem: eVga.com. Corp. GK208 [GeForce GT 710B]

Kernel driver in use: vfio-pci

Kernel modules: nvidiafb, nouveau
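The lspci output above only shows function 02:00.0, but group 1 also contains 02:00.1 (on NVIDIA cards usually the HDMI audio controller), and hostpci0: 02:00 without a function number passes through every function of the card. A small sketch, with made-up helper names (drivers_in_use, not_on_vfio), that parses lspci -k output and lists any function of 02:00 not bound to vfio-pci:

```python
import re
import shutil
import subprocess
from typing import Optional

# Device header lines look like "02:00.0 VGA compatible controller: ...",
# optionally with a "0000:" domain prefix (as printed by lspci -D).
DEVICE_RE = re.compile(r"^(?:[0-9a-fA-F]{4}:)?([0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s")


def drivers_in_use(lspci_k: str) -> dict[str, Optional[str]]:
    """Map each bus:device.function to its 'Kernel driver in use', or None."""
    drivers: dict[str, Optional[str]] = {}
    current = None
    for line in lspci_k.splitlines():
        match = DEVICE_RE.match(line)
        if match:
            current = match.group(1)
            drivers[current] = None
        elif current and line.strip().startswith("Kernel driver in use:"):
            drivers[current] = line.split(":", 1)[1].strip()
    return drivers


def not_on_vfio(drivers: dict[str, Optional[str]], card: str = "02:00") -> list[str]:
    """List functions of `card` (e.g. 02:00.0, 02:00.1) not bound to vfio-pci."""
    return sorted(slot for slot, drv in drivers.items()
                  if slot.startswith(card + ".") and drv != "vfio-pci")


if __name__ == "__main__" and shutil.which("lspci"):
    output = subprocess.run(["lspci", "-k"], capture_output=True,
                            text=True, check=True).stdout
    drivers = drivers_in_use(output)
    for slot in sorted(s for s in drivers if s.startswith("02:00.")):
        print(slot, drivers[slot] or "(no driver bound)")
```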

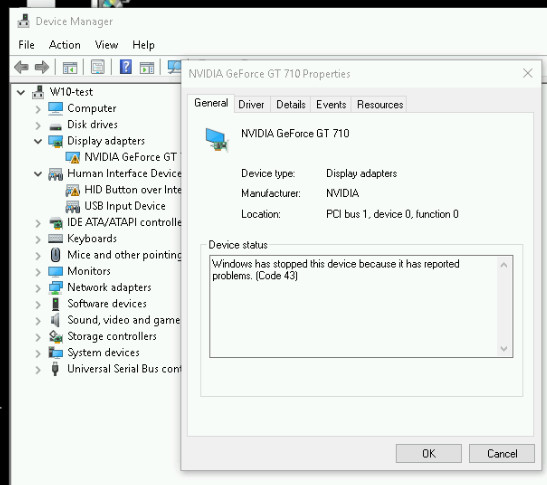

But after all that, I still get this:

Note how the basic “Microsoft Display Adapter” isn’t there.

Is it because I’m using VirtIO? All the guides seem to use SCSI without VirtIO. I’d like as much performance as possible, but if it just won't work with VirtIO, I can switch to SCSI.

Perhaps that will work. Will definitely try the drivers next.