[Note: This started out in Build a PC as a request for build help, but somewhere along the way it devolved into a blog about the journey.]

Alternate title: “It’s warmer and messier than Lain’s bedroom in here, and none of it is actually doing what I want it to do.”

I’m finding myself at a weird crossroads: if I could merge two machines, I’d have a good-enough daily driver, but due to form factors (and/or the lack of Thunderbolt for an eGPU dock), that isn’t possible. One is big, heavy, hot, and old with a side of fresh makeup (a big, recent-ish GPU and SSD); the other is a laptop that doesn’t have enough GPU to drive my monitor and screams the whole time I ask it to. The other aspect to all this is that the desktop is a Win10 box, and since it’s X99, it has no path to Win11. The clock is ticking to either repurpose it or get rid of it one way or another. The laptop is already on Win11, since it came that way.

I also recently had a GIGO incident with my backup scheme (Active Backup for Business on a Synology DS1821+): the bit-rotted file originally dated from 2017, the backup host only dates from 2021, so every copy is rotten. So it goes; not Syno’s fault. However, it prompted some additional maintenance there. While updating the Syno, I found that the gear I went out of my way to research and buy off their recommended hardware list is no longer on it, and now the machine sasses me about it. In practice, that means that while the device still has a year of warranty left, it has no support (unless I play games with removing devices before calling in, should I need to). Needless to say, not a fan.
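
Since the failure mode here was a file that rotted before the current backup host ever saw it, one cheap guard is a hash manifest: record a checksum for every file while it’s known-good, then re-check before backups age out. Below is a minimal stdlib-only Python sketch of that idea. The function names and the ‘same size and mtime but different hash means suspect rot’ heuristic are my own illustrative assumptions, not a feature of Active Backup for Business. Checksumming filesystems like ZFS or Btrfs do something similar at the block level, but only from the point the data lands on them.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large media files never sit fully in RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file's path (relative to root) to its hash, size, and mtime."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            stat = path.stat()
            manifest[path.relative_to(root).as_posix()] = {
                "sha256": sha256_of(path),
                "size": stat.st_size,
                "mtime_ns": stat.st_mtime_ns,
            }
    return manifest


def save_manifest(manifest: dict, dest: Path) -> None:
    """Write the manifest as JSON (keep it outside the folder being hashed)."""
    dest.write_text(json.dumps(manifest, indent=2, sort_keys=True))


def load_manifest(src: Path) -> dict:
    return json.loads(src.read_text())


def find_suspect_rot(old: dict, new: dict) -> list:
    """Return files whose content hash changed while size and mtime did not.

    A normal edit bumps mtime; a silent content change with identical
    metadata is the classic bitrot signature (a heuristic, not proof).
    """
    suspects = []
    for name, before in old.items():
        after = new.get(name)
        if after is None:
            continue  # deleted or moved; not treated as rot here
        same_meta = (before["size"], before["mtime_ns"]) == (after["size"], after["mtime_ns"])
        if same_meta and before["sha256"] != after["sha256"]:
            suspects.append(name)
    return suspects
```

Usage would be saving a baseline once with `save_manifest(build_manifest(root), manifest_path)`, then periodically comparing `load_manifest(manifest_path)` against a fresh `build_manifest(root)`; anything flagged could be restored from an older backup version while that version still exists.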

But I’m sitting here looking at this stack of laptops, the desktop, and the spare parts left over from upgrading them, and I can’t help wondering if there isn’t a better way…

Goals

- Unhook from dependence on Synology’s random whims about what hardware they’re willing to put up with (’cause, again, I bought stuff off their list, and they’ve changed their mind even within the device’s warranty period, never mind the longer-term software support window). My actual data size, inclusive of backups and the snapshots thereof, is currently about 5TB. I don’t have a good measurement of the growth rate, since most photo/video work stopped in 2020 and those were the drivers of growth; the old logs have since fallen off. Going just off folder sizes, maybe 10-15GB/yr.

- (Ideally?*) Split up home server services from backup storage, other than backups of the compose files and whatnot. Right now they’re cohabitating on the same hardware. These are things like a media server and its content, Pi-hole, and a LANCache. Some of this doesn’t need to be backed up, though (easy example: LANCache’s cache files). The * here is that it’s yet another box to spec/build/power, so maybe this isn’t ideal?

- In the process, I’d love it if I could make the boxes smaller and/or easier to carry (cue the obligatory ‘Kallax-sized’ meme here, but those are roughly the dimensions I’d like to stay within if I can). That works out to 13 x 13 x 13 in / 330 x 330 x 330 mm.

- I’d also like to give Linux a real shot at being my primary OS. I’ve used Ubuntu before and dabbled with a few others, but I’ve never thrown ‘real’ hardware at it to give it a realistic chance at being my daily driver. I’ll probably be using Pop!_OS this time around. My main game (FFXIV) and all the rest of the apps I use are either cross-platform (Steam/GOG with or without Proton et al., DaVinci Resolve, LibreOffice, Mozilla stuff, etc.) or things I can live without / find alternatives for. Worst case, I’d still have the Windows laptop.

- Gaming-wise, I like max pretty settings. The big GPU in the desktop was intended for Cyberpunk, since I have a 3440x1440@60Hz ultrawide monitor (I bought the card with 4K rather than 1440p gaming in mind, to ensure headroom for ray tracing and whatnot; not that I find ray tracing essential after The Experience That Should’ve Sold It To Me). I also have very minimal experience with monitors north of 60Hz, but am 120-144Hz curious thanks to the few times I’ve used my laptop’s 1080p@120Hz screen as a primary; it’s just too small for day-to-day use. However, on my laptop, using FFXIV’s ‘laptop max pretty’ default settings, I’m seeing frame dips below 60fps on my ultrawide during particle-heavy boss encounters, and a big graphics refresh is coming to that game in the next expansion (probs 8 months away) that will probably make that worse. I also get memory artifacting when I use ReShade to take screenshots on the ultrawide, due to the laptop’s lack of VRAM. The desktop is ‘fine’, albeit noticeably slower to load, and it occasionally suffers the usual big-MMO-city stuttering. I haven’t pulled out PerfMon to find out why, since it’s a non-critical zone.

- Editing-wise: I mainly use GIMP and DaVinci Resolve at the moment. However, it’s not for dollarydoos, just hobby things, so if it takes a little longer to bash its brains out while I’m sleeping anyway, so be it.
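
For the ‘bought for 4K’ reasoning above, here’s the rough pixel arithmetic as a Python sketch. Raw pixels per second is only a crude proxy for GPU load (it ignores settings, particle effects, VRAM, and CPU limits), so treat the numbers as back-of-envelope rather than a performance prediction.

```python
# Resolutions mentioned in the post, as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "3440x1440 ultrawide": (3440, 1440),
    "4K": (3840, 2160),
}


def pixels(name: str) -> int:
    """Pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_rate(name: str, hz: int) -> int:
    """Pixels per second if the GPU holds a steady frame rate equal to hz."""
    return pixels(name) * hz


def frame_budget_ms(hz: float) -> float:
    """Time available to render one frame at a given frame rate."""
    return 1000 / hz


if __name__ == "__main__":
    reference = pixel_rate("4K", 60)
    for name in RESOLUTIONS:
        for hz in (60, 120, 144):
            rate = pixel_rate(name, hz)
            print(
                f"{name:>20} @ {hz:>3}Hz: {rate / 1e6:6.1f} Mpx/s, "
                f"{rate / reference:.2f}x 4K60, {frame_budget_ms(hz):.2f} ms/frame"
            )
```

By this crude measure, 3440x1440 is about 60% of 4K’s pixel count, so a card sized for 4K60 has roughly the pixel throughput for the ultrawide at ~100Hz, while 144Hz on the ultrawide asks for more pixels per second than 4K60 does.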

Kits to bash from (hardware summary)

- Desktop: Intel i7-5820K, ASUS Sabertooth X99 (PCIe with a 28-lane CPU runs x16/x8/x4, no bifurcation, and the x4 is shared with the M.2 slot; 10 SATA ports), 4x4GB RAM, 512GB Samsung 950 Pro M.2, 4TB PCIe 3.0 x8 add-in card (WD AN1500), EVGA RTX 3080, 4x 4TB WD Red (pre-SMRgate EFRX) HDDs, Silverstone Fortress FT02 (4 empty 5.25" bays; the 5th bay is a combo slim optical and 4x 2.5" non-hot-swap bay adapter with a BD-ROM and no populated 2.5" bays)

- Laptop: Ryzen 5 5600H, 2x32GB RAM, 2.5TB of SSD, RTX 3060. Originally came with a single 8GB RAM stick, which is currently unused.

- Backup box: DS1821+, 32GB ECC RAM, currently 4x4TB IronWolf Pros in RAID 10 (primary volume for Docker and backups), 2x 1TB IronWolf SSDs for cache / metadata pinning. The CPU hasn’t gone north of 40% utilization in the last year’s worth of logs I have, even with the extra services I’m running in Docker; it usually just sits idle.

- Other misc hardware: 4x4TB IronWolf Pros sitting unused in an Atom C2000 victim DS415+; 4x14TB Exos X14s doing Chia things for the moment to justify having them spun up (they used to live in the DS415+ and are now in the 1821; they’re refurbs, so it was a decent enough excuse to see how quickly they’d die. I’ve swapped them in and out of the primary RAID array once or twice to beat them up that way, too. So far so good, but I still don’t trust them enough to expand the array to that size, since every other drive I have is 4TB or smaller); and a couple of ‘spare’ SSDs (512GB, 2TB) that are currently the boot drives in old laptops (the 512GB is in a first-gen Intel Core box, the 2TB in a Sandy Bridge Dell XFR laptop; these are rarely if ever used anymore since being superseded by the Ryzen laptop, so waste not, want not?). There’s also an old Chenming/Chieftec Dragon case (a normal ATX thing, but with 6 internal 3.5" bays and a few 5.25" bays) with some very old and dead hardware in it, but hey, a case is a case.

- Peripherals of note: the current monitor is 3440x1440@60Hz; the home network is currently only gigabit wired and 802.11ac (but the only big internal data movement right now is game reinstalls from the LANCache).
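
To put the ~5TB figure against the drives on hand, here’s a quick Python sketch of usable capacity per layout and years of runway at the estimated growth rate. Assumptions: decimal TB, no filesystem/ZFS overhead, an 80% fill ceiling (a common rule of thumb, not a hard limit), and 15GB/yr growth (the top of the 10-15GB/yr estimate). The ‘raid5’/‘raid6’ kinds stand in for RAIDZ1/RAIDZ2 capacity-wise only.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    name: str
    drive_tb: float  # decimal TB, as printed on the drive label
    drives: int
    kind: str        # "mirror", "raid10", "raid5" (~RAIDZ1), "raid6" (~RAIDZ2)

    def usable_tb(self) -> float:
        """Usable capacity before filesystem/ZFS overhead."""
        if self.kind == "mirror":
            return self.drive_tb  # every drive holds a full copy
        if self.kind == "raid10":
            return self.drive_tb * self.drives / 2  # striped pairs of mirrors
        if self.kind == "raid5":
            return self.drive_tb * (self.drives - 1)  # one drive's worth of parity
        if self.kind == "raid6":
            return self.drive_tb * (self.drives - 2)  # two drives' worth of parity
        raise ValueError(f"unknown layout kind: {self.kind}")


def years_of_runway(usable_tb: float, current_tb: float,
                    growth_gb_per_year: float, max_fill: float = 0.8) -> float:
    """Years until data reaches max_fill of usable capacity at linear growth."""
    headroom_tb = usable_tb * max_fill - current_tb
    if headroom_tb <= 0:
        return 0.0
    return headroom_tb * 1000 / growth_gb_per_year


CANDIDATES = [
    Layout("current DS1821+: 4x4TB RAID 10", 4, 4, "raid10"),
    Layout("4x4TB RAIDZ1-style", 4, 4, "raid5"),
    Layout("4x4TB RAIDZ2-style", 4, 4, "raid6"),
    Layout("2x14TB mirror (one reServer)", 14, 2, "mirror"),
]

if __name__ == "__main__":
    CURRENT_TB = 5.0         # from the post: ~5TB incl. backups and snapshots
    GROWTH_GB_PER_YEAR = 15  # top of the 10-15GB/yr estimate
    for layout in CANDIDATES:
        usable = layout.usable_tb()
        years = years_of_runway(usable, CURRENT_TB, GROWTH_GB_PER_YEAR)
        print(f"{layout.name:>32}: {usable:5.1f} TB usable, "
              f"{CURRENT_TB / usable:6.1%} full now, ~{years:.0f} years to 80%")
```

Under these assumptions, organic growth barely matters: the current 5TB already fills 62.5% of the 8TB RAID 10, so sizing comes down to current data plus snapshot/retention headroom (and any new backup clients) rather than yearly growth.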

Preferences

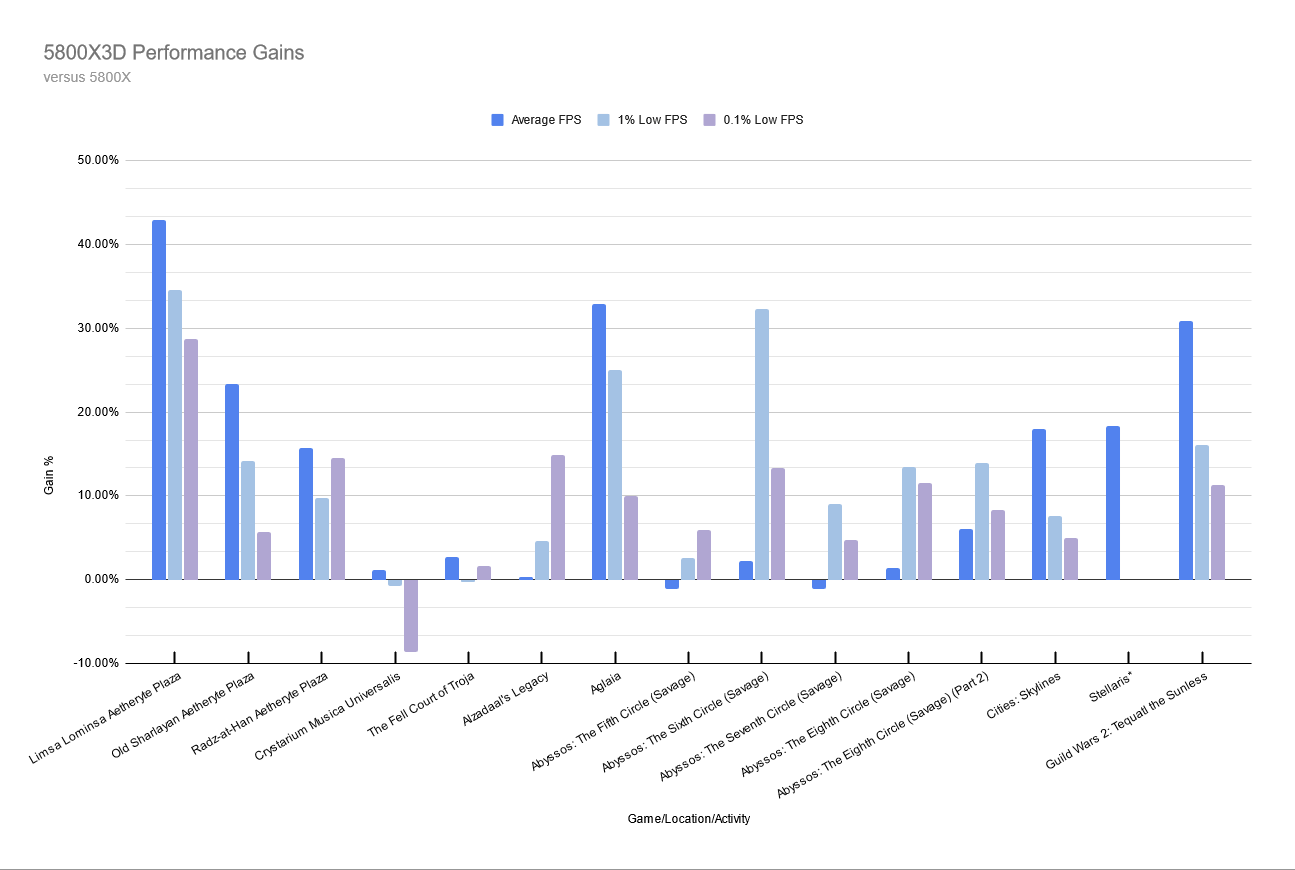

- I am vaaaaguely partial to an all-AMD build, mostly for the 3D V-Cache and maybe a smidge of excitement about SAM/ReBAR. This is probably a case of a little knowledge boxing me in on the extra cache size. On the other hand, the LGA 1700 CPUs seem to benchmark better in my main game and have a core count that ‘feels like an upgrade’ over the 6-core/12-thread boxes I have. Their boards also usually seem to have a better selection of peripheral options (and/or are less likely to be stuck with trash-tier Realtek NICs that don’t even work properly in Windows, never mind any other OS). But to be clear: I don’t superfan for team $color; it’s always been a point-in-time price/performance/value choice for me.

- I don’t overclock anymore (beyond ‘set XMP and go’), ’cause I’d rather it just worked so I can get on with what I actually want to do with the computer: running applications. I’m also hoping to knock down how much heat all of this puts out and how much power it uses (i.e. yes, I might be an Eco mode heretic, if the opportunity should present itself). But heck, even an i3-13100 is an uplift over a 5820K, with less than half the TDP to dissipate into the room.

- I prefer air cooling (fewer things to fail, less disastrous results when something does), and I’m probably the target market for those thermal sheets rather than thermal paste, because I never pull the cooler after the initial install to repaste unless there’s a severe / runaway temperature issue. Not saying it’s a virtue, just declaring a vice. At least I clean the dust filters somewhat more frequently.

- I’ve had an unscratched itch for a Mini-ITX build since the NCASE M1 and DAN A4 came out. I even bought a DAN A4 at one point and was ready to build in it around the time the Ryzen 5000s and RTX 30-series came out. But with GPUs getting thicker, I ended up selling it off instead. Missed opportunities…

- I do like hot swap in a server / appliance, but it’s not critical. Just a strong preference. Bonus points for toolless hot swap.
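
A rough Python sketch of the power/heat side of the ‘less than half the TDP’ point. The CPU figures are Intel’s ratings (140W TDP for the i7-5820K, 60W processor base power for the i3-13100), not measured wall draw; the hours per day and $0.15/kWh are hypothetical placeholders to swap for real numbers. Every watt drawn ends up as heat in the room, at about 3.412 BTU/h per watt.

```python
# Rated figures, not measured wall draw: Intel lists 140W TDP for the
# i7-5820K and 60W processor base power for the i3-13100.
CPU_RATED_W = {"i7-5820K": 140, "i3-13100": 60}

BTU_PER_HR_PER_WATT = 3.412  # 1 W of electrical draw ends up as ~3.412 BTU/h of heat


def annual_kwh(watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy used per year at a constant draw for a fixed number of hours a day."""
    return watts * hours_per_day * days / 1000


def annual_cost(watts: float, hours_per_day: float, price_per_kwh: float) -> float:
    """Yearly energy cost at a flat price per kWh."""
    return annual_kwh(watts, hours_per_day) * price_per_kwh


def heat_btu_per_hr(watts: float) -> float:
    """Heat dumped into the room while drawing `watts`."""
    return watts * BTU_PER_HR_PER_WATT


if __name__ == "__main__":
    HOURS_PER_DAY = 6     # hypothetical: hours/day at full load
    PRICE_PER_KWH = 0.15  # hypothetical electricity price in $/kWh
    for cpu, watts in CPU_RATED_W.items():
        print(f"{cpu:>9}: {annual_kwh(watts, HOURS_PER_DAY):6.1f} kWh/yr, "
              f"${annual_cost(watts, HOURS_PER_DAY, PRICE_PER_KWH):6.2f}/yr, "
              f"{heat_btu_per_hr(watts):5.0f} BTU/h at load")
    ratio = CPU_RATED_W["i3-13100"] / CPU_RATED_W["i7-5820K"]
    print(f"i3-13100 rated power is {ratio:.0%} of the 5820K's")
```

This only covers the CPU slice; the GPU, spinning drives, and idle draw will dominate a real wall measurement, so treat it as a sanity check on the ratio rather than a TCO figure.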

Potential options?

- Build a new desktop in the FT02, convert the old one into a TrueNAS box or similar, and shove it in the old Dragon case. This probably involves getting a new CPU cooler due to case height limits, a couple of 3x 3.5" to 2x 5.25" bay adapters, and an HBA for the X99 rig. A rough ballpark of the new desktop would be Part List - Intel Core i5-12600K, GeForce RTX 3080 10GB, Silverstone FT02B ATX Mid Tower - PCPartPicker. The pro is that both cases are pretty decent at cooling; the downside is that one of them is/remains big and heavy. The Dragon is the aluminum variant, so not as heavy as the steel ones were, but still big.

- Build something as a condensed version of the current desktop (>= 6 cores, 32GB RAM, room for 4 spinning rot boxes). This is probably a Node 304 build, like this: Part List - Intel Core i5-12600K, Fractal Design Node 304 Mini ITX Tower - PCPartPicker. The GPU there is a placeholder for price, because the 3080 I have won’t fit in a 2-and-a-bit-slot enclosure; I’m probably waiting for an RX 7700 to come out Soon™. An alternate take on this condensed idea would be scaling up to a Node 804 / microATX build. Either way, the current desktop would get converted over to TrueNAS duty (HBA, 3.5" to 5.25" drive bay adapters) and would still be a tank of a thing to move; more so because, fully kitted out, it’d be capable of holding 10 HDDs and six 2.5" SSDs plus the rest of it. Maybe a stretch goal of swapping in a v4 Xeon and loading it up with >=512GB RAM, but that’s just technolust talking.

- Just build another big desktop and repurpose the X99 build into a home server. Example desktop build: Part List - AMD Ryzen 7 7800X3D, Radeon RX 6700 XT, Fractal Design Torrent Compact ATX Mid Tower - PCPartPicker

- Grab a couple of Seeed reServers, repurpose those 14TB drives into mirrors, and yank the cache drives from the Synology and the RAM from the Ryzen laptop to stand them up, one to each. Set one up as the home server (and/or client device backup target?) and the other as the backup target for that server. Then get another 8GB stick of RAM for the laptop, and a large-ish 1080p@120ish or 1440p@120ish monitor that the laptop can actually drive with less protestation. Upside? Since we can’t get the ECC reServers anyway, and given how little CPU time my NAS actually uses, it’s a lot easier to just pick the least expensive one (which is on sale at the time of this post). It would also definitely tick the small, power-frugal, and big-storage-density (for my needs) boxes. The downside here, given the recent rot experience, is the lack of ECC, plus I’d be fully dependent on the refurb drives. It also still leaves me in line for another daily driver upgrade, though it would buy time / sanity (not all fan noises are annoying, but laptop fans certainly are for me) until the main hardware requirement changes.

- An alternate flavour of the reServer idea is a Mini or Mini X from iXsystems plus a DeskMeet, or a DeskMeet plus a strictly-storage-box Node 304 build, with the same general setup. I would consider a NUC or something like the MeLE Quieter3C for the home server side, given how little pressure I’ve put on the Ryzen in my Synology while it’s pulled double duty, but local storage capacity would be too small for the backup and/or media data sets at a reasonable price and/or would limit reuse of my existing kerfuffle of drives (or I’d have to put them in a USB enclosure, at which point it’s Yet Another Box and Yet More Cables, with a side of USB problems). Mostly the same downsides, though at least the storage layer would have ECC again. I also looked at a few of the Silverstone NAS cases (the 8-bay, the 5-bay, and/or the 8-bay 2.5" ones) but couldn’t find a motherboard that would fit, had ECC support, had enough ports or slots to run all the bays while still leaving room for future network speed upgrades, and wasn’t more expensive than just buying from iXsystems (the motherboards that do work start at ~400, before sorting out the CPU, PSU, and an HBA or network card depending on the board). But the benefit here is that I could go with TrueNAS CORE for storage and let it do what it’s great at, then have the other box run Proxmox or whatever for services.
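
For the two-box options, the initial seed of ~5TB from one box to the other (and any full restore) goes over the network, so here’s a Python sketch of transfer time. The 90% link efficiency and the 200MB/s sustained disk rate are hypothetical assumptions, not measurements of these drives; the point is the shape of the result rather than the exact hours.

```python
from typing import Optional


def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 0.9,
                   disk_mb_s: Optional[float] = None) -> float:
    """Hours to move data_tb (decimal TB) over a link of link_gbps.

    efficiency: assumed fraction of line rate left after protocol overhead.
    disk_mb_s: optional sustained disk rate; the slower of link and disk wins.
    """
    link_mb_s = link_gbps * 1000 / 8 * efficiency  # Gbit/s -> MB/s (decimal)
    rate_mb_s = link_mb_s if disk_mb_s is None else min(link_mb_s, disk_mb_s)
    seconds = data_tb * 1_000_000 / rate_mb_s      # 1 TB = 1,000,000 MB
    return seconds / 3600


if __name__ == "__main__":
    DATA_TB = 5.0    # ~5TB from the post
    DISK_MB_S = 200  # hypothetical sustained HDD mirror throughput
    for gbps in (1, 2.5, 10):
        print(f"{gbps:>4} GbE: {transfer_hours(DATA_TB, gbps):5.1f} h link-only, "
              f"{transfer_hours(DATA_TB, gbps, disk_mb_s=DISK_MB_S):5.1f} h disk-capped")
```

Under those assumptions, 5TB takes roughly 12 hours on gigabit, and at 2.5GbE or faster the spinning drives, not the link, become the limit.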

Other considerations and questions

- After all the teething issues I’ve dealt with on X99 (I’ve had the build since 2015), I’m somewhat leery of early adoption of another platform, memory, and/or storage standard. All these “will this or that memory capacity work?” discussions about AM5 remind me of the same problems I have with X99’s potential upgrade paths, because the memory controller on a 5820K is so bad. But if I follow that logic to its extreme, I should be grabbing a new AM4 setup, since DDR4 is cheap and I’ve never really been in the habit of upgrading CPUs. I just assume I’ll have to get a new motherboard anyway, like I always have; obvs I haven’t updated the desktop in 8 years beyond replacing GPUs a couple of times. The NAS got priority since I knew about the Atom bug, and I got lucky that the DS415+ waited to die until after the 1821 came online. IOW: given the starting point I’m at, is it sane to sit on the sidelines this gen, buy last gen’s good stuff, and just wait for DDR5 and the new motherboard platforms to get cheaper, hoping we stop finding new ways to set PCs on fire (whether that’s riser cables, power supplies, power cables on GPUs, or burning out CPUs with crap BIOS updates)?

- Separately, am I thinking about backup strategy and tiering entirely wrong? Am I duplicating data and/or devices unnecessarily by having backups hop clients->home server (as the clients’ backup target)->‘master’ backup server (as the backup target for that server), when I could instead just treat the home server as another client? Or should I just have one box for storage in a general sense and cohabitate backups and single copies of nonessential/replaceable data there? IDK, part of my brain says ‘keep compute separate from storage, but have two distinct sets of storage for backups’, but then there’s the part of my brain that knows HCI is a thing, too. For reference, the current setup is just clients->backup to NAS, and the NAS is hosting that as well as internal services right now, ’cause it’s always on and has (relatively) low power draw; it does have more bays than I actually need, though. Offsite backups are something I’m exploring on the side, so they’re not currently part of this discussion, but maybe that would be the easy answer (i.e. back up clients to the NAS, plus offsite as a separate copy)?

- Am I missing some weird gotchas about, say, which GPU vendor to go with on Linux? As far as I know, AMD is recommended for Linux? But I think Resolve and Plex require Intel or NVIDIA, and FFXIV generally seems to bench better on NVIDIA GPUs and Intel CPUs at the moment, and it doesn’t care about the extra cache in the X3D parts.
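
One way to sanity-check the tiering question is against the common 3-2-1 rule of thumb (3 copies, on 2 kinds of media, 1 offsite). Below is a small Python sketch that scores a few of the topologies described above; the device names and media labels are illustrative, and treating ‘cloud’ as a distinct media type is a simplifying assumption. It also can’t capture the GIGO lesson: copies of an already-rotten file are still rotten, so versioning and checksums matter independently of copy count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    device: str   # physical box holding this copy
    media: str    # e.g. "ssd", "hdd", "cloud"
    offsite: bool


def check_321(copies: list) -> dict:
    """Score a backup topology against the 3-2-1 rule of thumb.

    Copies are counted per distinct device, so two volumes on one box
    still count as one copy.
    """
    devices = {c.device for c in copies}
    media = {c.media for c in copies}
    offsite = {c.device for c in copies if c.offsite}
    return {
        "copies": len(devices),
        "media_types": len(media),
        "offsite": len(offsite),
        "passes": len(devices) >= 3 and len(media) >= 2 and len(offsite) >= 1,
    }


# Illustrative topologies based on the options in the post.
TOPOLOGIES = {
    "current: clients -> NAS": [
        Copy("laptop", "ssd", False),
        Copy("nas", "hdd", False),
    ],
    "chain: clients -> home server -> backup server": [
        Copy("laptop", "ssd", False),
        Copy("home-server", "hdd", False),
        Copy("backup-server", "hdd", False),
    ],
    "clients -> NAS + offsite": [
        Copy("laptop", "ssd", False),
        Copy("nas", "hdd", False),
        Copy("offsite", "cloud", True),
    ],
}

if __name__ == "__main__":
    for name, copies in TOPOLOGIES.items():
        print(f"{name:>48}: {check_321(copies)}")
```

Under this model, the current setup falls short on both copy count and offsite, the clients->home server->backup server chain falls short only on offsite, and clients->NAS plus offsite passes, which lines up with the ‘easy answer’ hunch.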

Sorry for the wall of text; I’ve been sitting on this post for a couple of months trying to organize my thoughts. Any input would be greatly appreciated, as I feel like I’m well and truly lost in the weeds right now (and/or struggling with rampant technolust / gear acquisition syndrome).

TLDR: My current two daily driver options either can’t meet the needs my setup imposes on them and scream about it, or are old, run hot, and are very thirsty for watts. I’m also trying to reconfigure the home server / backup target due to a poor experience with the current device’s vendor. Looking for input on options for a Linux gaming desktop (for 3440x1440@60Hz) plus a home server (backup target and media serving), with an eye towards TCO (power draw/heat output) and noise, since the machines and I have to share a small room. Bonus points if it can be kitbashed out of currently available machines/parts. Budget for now is ~$2k, but there’s wiggle room if there needs to be.