Oh, netboot. Yeah, that would be cool, and definitely a lot cheaper to implement.

It also solves, in an elegant way, the one problem I’ve had with the 1821+’s hardware (the lack of an iGPU). Not sure how I missed those videos about the netboot RPi setup you have for that, but that was a fun rabbit hole to dive down.

I don’t immediately recall a network boot option in the Minisforum BIOS, but I’ll have to look again. It was kind of a convoluted mess in there; even the auto-restart-after-power-loss option was in an odd place, with a not entirely intuitive label.

At a high level, though: the environment you’re talking about has a ZFS storage server, the ephemeral server (single-disk ZFS, sending backups to the storage host on an NFS-enabled dataset), and a box that can netboot via NFS and just pick up that dataset (or others) as needed? Or is it two boxes, with everything living on the storage host and the compute running entirely off the network (and/or the classic ‘just grab another one from the closet’, like corporate devices with roaming profiles)?

Warning: Madness, mental gymnastics, rational-lies, and being wrong on the internet to follow (x99 ramble)

On the gaming rig side of the house, I set about doing some (fairly casual) performance profiling to really nail down what it is I don’t like about the systems I have vs. the work I’m asking them to do. I hit some weirdness that, in retrospect, makes perfect sense. But I may as well document my foibles, too, so others don’t make the same mistakes.

I started off by just having Task Manager up on the second screen (aka the laptop’s screen) and playing through some games to check threading behavior. At first I was like, ah ha! This game and that game only use X number of cores/threads. Except for the ‘weird’ bit: every core beyond the ones in use was doing absolutely nothing at all. For some games that was 4, for others 8, and others would use everything I’ve got. The weirdest was my main game, FFXIV. On initial app start it’d hit all 12, but once it loaded into the login screen, and on through gameplay, it would only use 8 of the 12.

So I moved on and said heck it, let’s run 3DMark on each of the laptop’s power plans. That’s when I realized that, on the Quiet power plan, a power limit was what caused the cores to sit idle. So I went back and redid it on the High Performance plan, and sure enough: all cores, all the time, in nearly every game, save the older ones tuned for 4-core systems. Everything was playable, save Cyberpunk (usually ~75 fps, but with occasional extended dips to 45 in busy scenes). Downside? Noise and thermals, to no one’s surprise. Even the Quiet profile is loud for my taste; High Performance is way, way too much. But… overall, not bad for a 5600 and a laptop 3060 inside a ~155W combined power budget.

Desktop, on the other hand… I went trawling through my old 3DMark results, and its CPU is running ~10°C+ hotter than it was a year ago, even after taking the 5820K off its 4.4 GHz OC and back down to stock. What was interesting, though, is that at least in that test, its CPU performance when overclocked is dead level with the 5600’s. That’s weird. But it does mean I could probably shimmy along and wait another CPU generation if I have to, acknowledging how inefficient and hot it will be in the meantime. I’ve never had a noise complaint with the FT02, because I had the fan curves pretty dialed in and added some sound deadening to it, too.

Then the ’tube’s algo got drunk and showed me some things that dovetailed with what I’d tripped over on the CPU performance side, and the madness took hold…

Some videos talking about x99's current 'budget' status

$80 CPU KILLS ZEN2! Core i7 5960X MAXIMUM Gaming Performance - YouTube

Long videos short, the 8c/16t chips that drop into x99 boards are dirt cheap. They were also demonstrating some memory overclocks that both make intuitive sense and run counter to the general ‘moar MT/s’ advice people gave back when x99 was current, and for dual-channel systems more broadly. The benchmarks were flawed (random resolution changes, graphics settings likely not putting full load on the CPU, etc.), but they made me curious.

Then there was the guy in the top comment on the first video, talking specifically about how Haswell’s ring bus works, its bandwidth limits vs. the RAM’s (examples given: a 4.2 GHz ring bus on a 5950X or an Alder Lake 12100 can move about 135GB/s, while dual-channel DDR4-3200 is only good for about 51.2GB/s, and quad channel was put forth as about 85GB/s), and how that compares to Infinity Fabric. In particular, he suggested a test: set the ring bus clock on an Intel chip to what Infinity Fabric ran at on Zen 1, then Zen 2, etc., and see how that affects performance.
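The commenter’s numbers are easy to sanity-check with peak-bandwidth arithmetic. Below is a minimal Python sketch, assuming 8 bytes per DDR4 channel per transfer and (a loudly labeled assumption) 32 bytes per ring clock, the width commonly quoted for Intel’s ring bus. These are theoretical peaks under a simplified model, not measured throughput. Under those assumptions, ~135GB/s lines up with 4.2 GHz × 32 bytes, while the ~85GB/s quad-channel figure happens to match DDR4-2666; quad-channel 3200 would peak at 102.4GB/s, so the commenter may have had a different speed or a measured number in mind.

```python
"""Back-of-envelope peak bandwidth math (decimal GB/s, 1 GB = 1e9 bytes).

These are theoretical peaks; real, measured throughput is lower.
"""

DDR4_BYTES_PER_TRANSFER = 8  # one DDR4 channel is 64 bits wide
# Assumption: 32 bytes per ring clock, the width commonly quoted for Intel's ring bus.
RING_BYTES_PER_CLOCK = 32


def dram_peak_gbs(mt_per_s: float, channels: int) -> float:
    """Peak DRAM bandwidth = transfers/s x bytes per transfer x channel count."""
    return mt_per_s * 1e6 * DDR4_BYTES_PER_TRANSFER * channels / 1e9


def ring_peak_gbs(ring_ghz: float, bytes_per_clock: int = RING_BYTES_PER_CLOCK) -> float:
    """Peak ring throughput under a simple fixed-width-per-clock model.

    GHz x bytes per clock gives GB/s directly (1e9 clocks/s x bytes).
    """
    return ring_ghz * bytes_per_clock


if __name__ == "__main__":
    print(f"Dual-channel DDR4-3200: {dram_peak_gbs(3200, 2):6.1f} GB/s")
    print(f"Quad-channel DDR4-2666: {dram_peak_gbs(2666, 4):6.1f} GB/s")
    print(f"Quad-channel DDR4-3200: {dram_peak_gbs(3200, 4):6.1f} GB/s")
    print(f"Ring bus at 4.2 GHz:    {ring_peak_gbs(4.2):6.1f} GB/s")
```

Running it prints roughly 51.2, 85.3, 102.4 and 134.4 GB/s, in that order, which is the gap the commenter was pointing at: even quad channel leaves the ring with headroom, so a slower ring (or fabric) clock only starts to bite when it drops toward the memory’s peak.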

But it was a bit of a ‘duh’ moment, in the same way that MB/s per spindle vs. network speed was a ‘duh’ as soon as it was spelled out. The common advice was just ‘don’t touch the ring bus’ (or uncore, or whatever the BIOS called it), because it ‘didn’t do anything’. But with the GPU my box has now, I’m curious whether it does give more performance, since it’s a PCIe 4.0 x16 card in a 3.0 x16 slot (i.e., up to another ~32GB/s of traffic on that bus, roughly 16GB/s each way, after the link negotiates down). I’m likewise curious to test DDR4-2666 with tight timings vs. 3000, because the IMC on these chips really did not want to go above 3000 without a real silicon lottery win… at least with what (little) I knew back then. I mean, we were manually locking everything, forcing the High Performance power plan, and shutting off all of the C-states in the BIOS.
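Two more quick calculations sit behind that paragraph, again as a hedged Python sketch rather than anything authoritative. PCIe 3.0 and 4.0 both use 128b/130b encoding, at 8 and 16 GT/s per lane respectively, so a 3.0 x16 link tops out around 15.75GB/s per direction (~31.5GB/s both ways combined), which is presumably where the ~32GB/s figure comes from; packet and protocol overhead are ignored here. For the 2666-vs-3000 question, first-word CAS latency in nanoseconds is CL × 2000 / (MT/s). The CL values below are hypothetical examples, not timings from any particular kit.

```python
"""PCIe link bandwidth and DDR4 CAS latency, back-of-envelope."""

# (GT/s per lane, encoding efficiency); PCIe 3.0 and 4.0 both use 128b/130b.
PCIE_GENS = {
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
}


def pcie_gbs_per_direction(gen: str, lanes: int) -> float:
    """Usable bandwidth in one direction, GB/s (ignores packet/protocol overhead)."""
    gt_per_s, efficiency = PCIE_GENS[gen]
    # 1 bit per transfer per lane, 8 bits per byte
    return gt_per_s * efficiency * lanes / 8


def cas_latency_ns(cl: int, mt_per_s: float) -> float:
    """First-word CAS latency: CL cycles x clock period.

    DDR transfers twice per clock, so the clock is MT/s / 2 MHz and the
    period in ns is 2000 / MT/s.
    """
    return cl * 2000 / mt_per_s


if __name__ == "__main__":
    link = pcie_gbs_per_direction("3.0", 16)
    print(f"PCIe 3.0 x16: {link:.2f} GB/s per direction, {2 * link:.1f} GB/s combined")
    print(f"PCIe 4.0 x16: {pcie_gbs_per_direction('4.0', 16):.2f} GB/s per direction")
    # Hypothetical timings, purely for illustration.
    for mts, cl in [(2666, 12), (3000, 15)]:
        print(f"DDR4-{mts} CL{cl}: {cas_latency_ns(cl, mts):.2f} ns")
```

With those example timings, the lower-clocked kit has the shorter CAS latency (about 9.0 ns vs. 10.0 ns) but less peak bandwidth, which is exactly the trade-off worth benchmarking.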

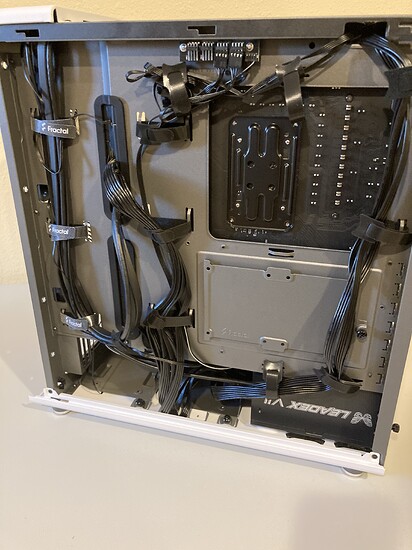

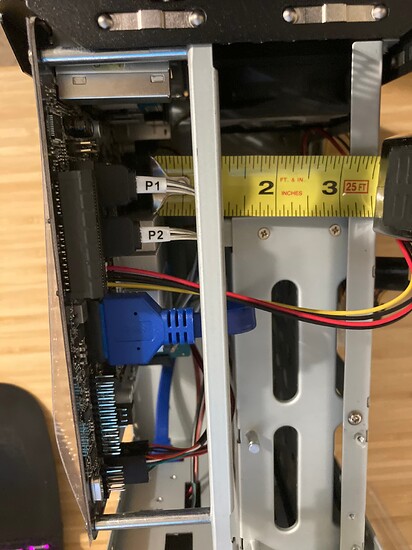

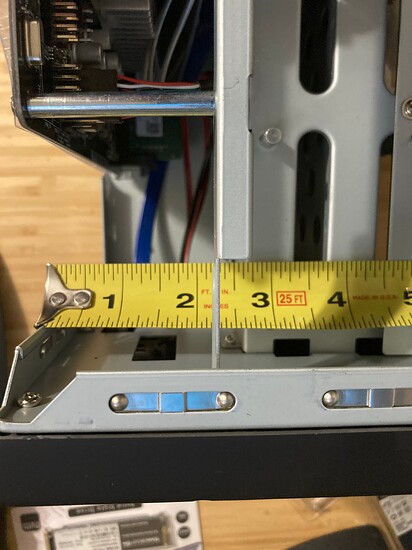

Anyway, long story short: I ended up ordering a Fractal North (so I can free up the FT02 for a storage build), a new PSU since the Seasonic in it is quite old at this point, an Arctic Liquid Freezer 280, 32GB of DDR4-4000 (well, I needed another 16GB anyway…), and one of those Thermal Grizzly KryoSheets I’ve been curious about. I’ve been quite wrong about many things since opening this thread, and if I’m going to be diddling about with old, hot hardware, it’s time to see whether all my FUD from the old “Koolance/Danger Den/Tygon tubing from Home Depot/heater core from the junkyard” water cooling days needs to die, too. No great loss, since x99 is so cheap now, and if I’m as wrong as I probably am, it’s good bones to start from when I updoot the platform later on. Plus, if it dies, I still have the laptop to fall back on.

I’ll know the madness has truly taken hold when I start poking around about enabling Resizable BAR on this board…